I don’t think there is any expectation of this being automatic. The shaders would themselves need to be designed to be seamless from the outset and that’s on the user.

@Willy_Wonka The color space thing sounds reasonable… I could just give you a ‘gamma’ node? That would let you do the conversion but could also be used for other stuff… I’m not sure about individual channels, it sounds like it should be possible but I would have to test it.

I’m not sure I follow how a “gamma” node would help exactly? Do you mean that I would divide the color by X during packing (post process) and then multiply by X in my shader in order to read it correctly? This would work in Blender, but I did a test in UE4 recently and Unreal Engine doesn’t allow PBR channels like specularity to go above 1 (or rather, increasing it above 1 doesn’t result in any visual change in the shader; it’s as if they are clamping it to the 0-1 range).

Regarding the other stuff…

Putting a material on a plane and baking it isn’t particularly difficult. How I would actually set up the interface to let you specify what to bake though… I suppose it would have to be a bake pass type, and the texture names would have to be appended to the file name.

Personally I think it would be nice if there were a tickbox on the current pass node that sets a “default” for whether shaders are baked into tileable textures or baked regularly, plus some way of determining on a per-material basis whether to override that default. Maybe you have some materials that would work as tileable textures, while other shaders include stuff like decals, which cannot quite be baked the same way.

Maybe something like:

- A dropdown on the current pass node “Bake type” with the options “Bake to UVs” (regular bake, like Bake Wrangler performs bakes currently) and “Bake each material into an individual set of tile-able textures”.

- A float slider deciding how large the bake plane should be in cm (with the object’s transform scale always remaining at one). This is important because when making shaders, you often use object or world coordinates to decide the size of your procedural textures; sometimes you might need a large area to avoid obvious tiling, and sometimes you might literally just need a cm or so (like for a barbed wire fence). If you have a shader that is perfectly tileable as is, this would also be pretty much everything you need to make sure the texture tiles as expected.

And then if a suffix such as _tile_50x50 or _UV is detected on the material name, it will override the settings on the pass node for that particular material. This way you could perform just one bake but still mix and match between materials that become tile-able textures and ones that bake to UVs. Useful if you have some large mesh you wanna bake but a few unique decals that need to be baked individually (or, if it’s a character, maybe the face needs more precise detail whilst the rest of the skin can be tile-able).

And then one last setting on the pass node, “Ignore material suffixes”, to prevent the pass node’s settings from being overridden. This would be useful if you’re reusing the same materials for several objects that you’re baking separately. Maybe you used the same metal for the floor in a corridor as for a gun: you might not want to tile the gun, since it’s so small and might not always be used in a scene with the same tileable texture. The floor, however, could cover a much larger area, making tiling a better solution.
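To make the override rules above a bit more concrete, here is a minimal Python sketch of how the suffix lookup could work. It is not how Bake Wrangler does anything today: `BakeSettings`, `resolve_bake_settings` and the exact suffix patterns are hypothetical names, assumed only from the `_tile_50x50` and `_UV` examples in this post.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical suffix patterns, modelled on the "_tile_50x50" and "_UV" examples.
_TILE_RE = re.compile(r"_tile_(\d+(?:\.\d+)?)x(\d+(?:\.\d+)?)$")
_UV_RE = re.compile(r"_UV$")


@dataclass
class BakeSettings:
    mode: str                                      # "UV" or "TILE"
    plane_cm: Optional[Tuple[float, float]] = None  # bake plane size in cm (TILE only)


def resolve_bake_settings(material_name, pass_default, ignore_suffixes=False):
    """Return the settings to use for one material.

    The pass node default wins when suffixes are ignored or absent;
    otherwise a recognised suffix on the material name overrides it.
    """
    if ignore_suffixes:
        return pass_default
    tile = _TILE_RE.search(material_name)
    if tile:
        return BakeSettings("TILE", (float(tile.group(1)), float(tile.group(2))))
    if _UV_RE.search(material_name):
        return BakeSettings("UV")
    return pass_default
```

With a pass default of “Bake to UVs”, a material called `metal_tile_50x50` would then be baked to a 50 x 50 cm plane, unless “Ignore material suffixes” is ticked, in which case the pass default is used for everything.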

I’ve thought about adding shader nodes for various things before. There are some advantages, but it also has the issue of then having to mess around with your shaders which is one of the things that is really annoying about bake in vanilla blender…

Yes, this is something I’m also concerned about with Piotr’s design. I would argue it’s a slightly different feature request than mine, since it doesn’t necessarily apply to tile-able textures. I think?

The problem of course is that this won’t result in a seamless texture. There are procedural ways to make it seamless. I don’t know any that create a particularly amazing result. I’m also pretty sure it would take a horribly long time in an uncompiled python script to apply them to any decently sized texture…

Now that I think about it, maybe it doesn’t actually need to be seamless: How to Tile a Texture Without Repetition - Blender Tutorial - YouTube. It might be better to just do some magic in the shader like Blender Guru demonstrates (as you can see, he kinda just ignores whether the textures are seamless and instead rotates them to avoid obvious repetition, and counteracts the now-visible seams by making them follow a pattern rather than straight lines).

Although I would bet there are some decent Python libraries out there for this kind of image manipulation. You could certainly use ImageMagick for it (although I’m not sure if ImageMagick is quite as fast as whatever post-process solution you’re using right now). But if it’s difficult to implement, it’s more of a nice-to-have than a must.
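For anyone who wants to see the rotate-per-tile idea outside of a shader graph, here is a rough Python sketch. It is a simplified stand-in and not what the tutorial does exactly: it assumes a plain square grid and 90-degree steps, and `cell_rotation` and `randomized_tile_uv` are made-up names for illustration.

```python
import math


def cell_rotation(ix, iy, seed=0):
    """Pick a deterministic pseudo-random rotation (0, 90, 180 or 270 degrees) for a tile."""
    # Simple integer hash of the cell coordinates; any stable hash would do.
    h = (ix * 73856093) ^ (iy * 19349663) ^ (seed * 83492791)
    return (h % 4) * 90


def randomized_tile_uv(u, v, seed=0):
    """Map a continuous (u, v) to a lookup inside one tile, rotated per cell."""
    ix, iy = math.floor(u), math.floor(v)   # which tile we are in
    fu, fv = u - ix, v - iy                 # position inside that tile, 0..1
    angle = cell_rotation(ix, iy, seed)
    # Rotate the local coordinate around the tile centre (0.5, 0.5).
    if angle == 90:
        return 1.0 - fv, fu
    if angle == 180:
        return 1.0 - fu, 1.0 - fv
    if angle == 270:
        return fv, 1.0 - fu
    return fu, fv
```

Neighbouring tiles end up sampling the same texture at different orientations, which hides the repetition but leaves visible seams at the cell borders. That is the part the tutorial then disguises in the shader.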

That is a shame that Blender does not provide more information for a mesh with UDIM UV tiles…

@Willy_Wonka While not strictly 100% accurate, applying a gamma of 2.2 to a linear map should make it close to sRGB, no?

It could be that @Piotr_Adamowicz suggestion works best though. Like the presence of the node in the material could indicate that it should be baked to a plane. Then you wouldn’t have to rely on naming conventions, etc… Then all you need is an option to ignore the node being there.

There are Python libs, but then I have to get users to install them alongside the add-on, which is less than ideal.

@amdj1 Yeah the UDIM support is very low. I could add a property to link the image to objects… I suppose that could also work.

It could be that @Piotr_Adamowicz suggestion works best though. Like the presence of the node in the material could indicate that it should be baked to a plane. Then you wouldn’t have to rely on naming conventions, etc… Then all you need is an option to ignore the node being there.

But then you would need to insert a bunch of those nodes.

@Willy_Wonka While not strictly 100% accurate, applying a gamma of 2.2 to a linear map should make it close to sRGB, no?

Never heard of that. Here are the formulas for converting sRGB back and forth: A close look at the sRGB formula (entropymine.com). Although, I tried implementing these in Unreal’s shader graph a while ago, and even though I got “sort of” right results, as I remember it the result still looked slightly off compared to using Unreal’s sRGB unpacker (I don’t know why that is, but it was still close enough to be usable).
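For reference, the standard piecewise sRGB transfer functions look roughly like this in Python. The constants are the usual published sRGB values; the function names are just mine, and this is a per-channel sketch for values in the 0-1 range, not a drop-in for any particular engine.

```python
def linear_to_srgb(c):
    """Encode one linear channel value in [0, 1] to sRGB."""
    if c <= 0.0031308:
        return 12.92 * c                      # linear segment near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055   # power segment


def srgb_to_linear(s):
    """Decode one sRGB channel value in [0, 1] back to linear."""
    if s <= 0.04045:
        return s / 12.92
    return ((s + 0.055) / 1.055) ** 2.4
```

Note that the exponent in the power segment is 2.4, not 2.2. Combined with the offset and the linear segment, the overall curve ends up close to a plain 2.2 gamma, which is why the two numbers keep getting mixed up.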

It could be that @Piotr_Adamowicz suggestion works best though. Like the presence of the node in the material could indicate that it should be baked to a plane. Then you wouldn’t have to rely on naming conventions, etc… Then all you need is an option to ignore the node being there.

Couldn’t we have both solutions? I would personally think it sounds quite cumbersome to insert a bunch of nodes into the shader graphs. And what if you change your mind and decide that you don’t want anything to get baked to a plane? Having a bunch of settings on the pass nodes that can be overridden sounds a lot easier to manage and less cumbersome, at least for my use case.

Although, I’m working on a design suggestion for how I think Blender’s bake overhaul should be (based on a design by Brecht Van Lommel (brecht), I quoted it here (blender.org)).

I’m guessing it would require a bit of work to implement if you want to have a go at it, but if you like I could link it here once I’ve posted it.

I think the problem with your maths is that it should be 1/2.2, not 2.4. Gamma should just be a POW function anyway… You need to raise your linear value either to the 2.2 power or to the 1/2.2 power. I don’t remember which way, but one of those should give you passable sRGB values. (At least, that’s what I’ve seen other people do, because while sRGB doesn’t map to that exactly, it averages out at that value)

Yup. x^(1/2.2) is what you want for linear->sRGB and x^2.2 for the reverse. While this is not strictly accurate, it’s good enough for black and white images.
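As a quick sanity check on the direction, here is the pure power-curve approximation as a tiny Python sketch. Under the usual convention, encoding linear values for display raises them to 1/2.2 (which brightens mid-tones), and decoding back to linear raises them to 2.2. The function names are placeholders of mine.

```python
GAMMA = 2.2


def gamma_encode(linear, gamma=GAMMA):
    """Approximate linear -> sRGB by raising to 1/gamma."""
    return linear ** (1.0 / gamma)


def gamma_decode(encoded, gamma=GAMMA):
    """Approximate sRGB -> linear by raising to gamma."""
    return encoded ** gamma
```

A linear mid-grey of 0.5 encodes to roughly 0.73 this way, versus roughly 0.735 with the exact piecewise sRGB formula, so the approximation is close but not identical.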

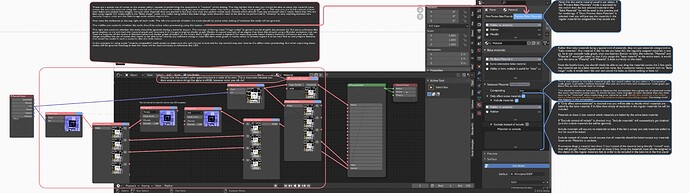

I’m not asking you to implement this exactly, as it looks rather cumbersome. But conceptually, what do you guys think of this as a baking system?

Just realized that I forgot to add a “bake job system”. Going to bed now but I will be updating the image with that tomorrow.

I also believe I wrote “material” and “material 2” or something like that somewhere, which was supposed to say metallic, rubber & scratched rubber.

Yeah, I don’t think I quite get it

Yes, it’s incomplete, but the idea is that you can bake from a node in the material graph like you suggested. Except that you can also make a material bake from other materials and pack it with post processing using those pink nodes.

And then, since it’s based on the shader graph, you would be able to preview the results immediately without having to set up a material separately (since your baking node graph is actually also a material).

It was Brecht Van Lommel who suggested baking with materials like this. Although he said he didn’t mean for it to have any nodes between the image baking node and the output node like I have.

I quoted something that he posted in D3203 Baking system overhaul: Move baking settings from render settings into BakePasses (blender.org) in the comments here: T68925 Baking workflow revamp (blender.org) (his original text is quite hard to find in the D3203 thread unless you use F3 search, so I suggest going to T68925 first).

Not sure if it’s actually a good design, but if it would work, I kinda dig being able to set up the material baking system at the same time as setting up the shader it will be read from. The same bake-image node could probably also be used to bake images directly from a regular shader graph.

I don’t have time to dig through that again, but I had read Brecht’s proposal a while ago and it sounds like NIH syndrome to be honest. At first glance it appears overthought and undergeneralized. While having some bake functionality in the Material nodes is a good idea, it shouldn’t be the primary way you bake, methinks. More importantly, it doesn’t look very extensible for whatever weird use cases we might need in the next 10 years (like the stuff UE is doing). Bake Wrangler got it right with a separate node tree. But I’ll hold off judgement until I see more. Brecht is a smart guy, so I’m hoping I’m just not seeing the full picture.

This will take up too much space in the node editor. Better to make a separate node texture editor.

The baked model can have several dozen parts. For each part being baked, several high-poly models can be baked, and each of these adds more nodes in the node editor. If the model contains ten parts, that is about 20 nodes in the node editor. If the model contains 30 parts, that is about 60 nodes. I’ve had more than 50 parts, and that’s more than 100 nodes. The node editor could not zoom out far enough to see all the nodes. It is better to reduce the current number of nodes, imho.

I don’t really understand how that system is supposed to work and I can’t really be bothered following the discussions around it. But I don’t think baking should be tied to the material system at all. The problem with these solutions is that baking is used in many different ways. What works for one workflow can be a nightmare for another. And if anyone wants to see how I think baking should work, they just need to download my add-on.

I would like to hear from people who are using UDIMs in blender currently. How are you using them and how would you want to bake them out?

@Piotr_Adamowicz I also want to discuss how we could make your lamps bake work with fewer nodes and strings… Preferably a single Mesh node and a single sorting node with one connection to each pass…

The sorting node is exactly what I was thinking. Something that connects each of the inputs on the left to each of the outputs on the right.

I’m not sure how a single Mesh node could possibly work though, since those are actually separate pieces being baked. To be honest, I like these to be separate because it’s nice and clear visually what bakes where. Less headscratching and thinking “what’s going on here” that way

If you want it, I can PM you the .blend file.

Yeah, a single Mesh node makes what’s happening a bit more opaque. But it might be nice to have the option…

I’m imagining it would work through name matching… I guess send me the file so I can see what would be required to make it work.
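Just to illustrate what name matching could mean here, a minimal Python sketch follows. The `_low` / `_high` suffix convention is purely an assumption on my part (nothing in this thread fixes a naming scheme), and `match_by_name` is a hypothetical helper, not part of the add-on.

```python
from collections import defaultdict


def match_by_name(object_names, low_suffix="_low", high_suffix="_high"):
    """Group high-poly object names under the low-poly name sharing their base name.

    Assumed convention: "lamp_low" is the bake target and "lamp_high",
    "lamp_high.001", ... are its sources. Anything else is ignored.
    """
    lows = {}
    highs = defaultdict(list)
    for name in object_names:
        if name.endswith(low_suffix):
            lows[name[: -len(low_suffix)]] = name
        elif high_suffix in name:
            base = name.split(high_suffix)[0]
            highs[base].append(name)
    return {low: sorted(highs.get(base, [])) for base, low in lows.items()}
```

Given a single list of all the lamp pieces, this returns one entry per low-poly target with its matching high-poly sources, which is roughly the information a single Mesh node plus a sorting node would need to route each piece to its pass.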

Out of curiosity: is baking tiling textures for materials becoming a reality in any form?

Probably? There are a couple of things I’m working on right now (splitting output files by object and automatic matching of objects by name plus reducing the spaghetti bowl between mesh and pass). And there is the open issue of UDIMs which I want to hear from people about.

Baking to a plane instead of an object shouldn’t be challenging, but I need to think about how I want to present it in the UI. Since it’s not really linked to an object in any way, I’m thinking maybe you just use the material as the input…