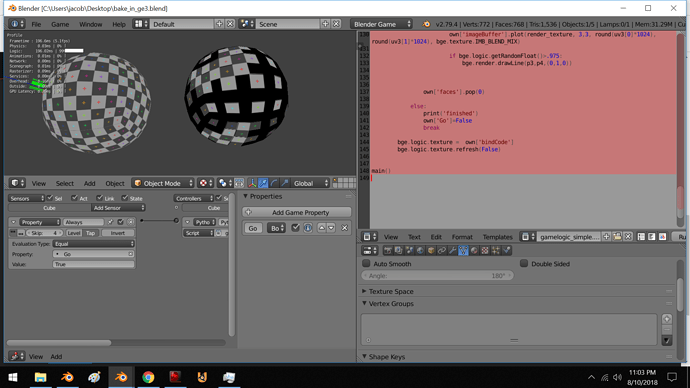

This script is set up to bake the geometry sitting over a quad into a texture, with each sample placed relative to the UVs of that polygon.

I will be using it to bake low-poly stand-ins of my planet after it is generated in-game.

Can you help me refine it?

import bge


def main():
    cont = bge.logic.getCurrentController()
    own = cont.owner
    always = cont.sensors['Always']

    if 'faces' not in own:
        # First run: set up the target texture and collect the faces to bake.
        # trying to get the texture loaded and replace the current texture with it
        TargetObj = own.scene.objects['Bake_Target']
        texture = bge.texture.Texture(TargetObj, 0, 0)
        own['bindCode'] = texture
        print(texture.bindId)

        imageBuffer = bge.texture.ImageBuff(1024, 1024, color=0, scale=False)
        texture.source = imageBuffer
        print(texture.source)
        own['imageBuffer'] = imageBuffer
        print(type(own['imageBuffer']))

        own['cam2'] = own.scene.objects['cam2']
        #bge.logic.texture = textureID
        #print(bge.logic.texture.source)

        faces = []
        for i in range(own.meshes[0].numPolygons):
            #placeholder
            face = own.meshes[0].getPolygon(i)
            v1 = own.meshes[0].getVertex(0, face.v1)
            v2 = own.meshes[0].getVertex(0, face.v2)
            v3 = own.meshes[0].getVertex(0, face.v3)

            width = 1024
            height = 1024

            #print(v1.UV)
            # Scale the UVs to pixel units.
            uv1 = v1.UV
            uv2 = v2.UV
            uv3 = v3.UV
            uv1[0] *= width
            uv1[1] *= height
            uv2[0] *= width
            uv2[1] *= height
            uv3[0] *= width
            uv3[1] *= height

            # Edge lengths in pixels give the number of samples along each axis.
            dist = round((uv1 - uv2).magnitude)
            dist2 = round((uv2 - uv3).magnitude)
            faces.append([face, [dist, dist2]])

        own['faces'] = faces
        print('stored faces')

    elif always.positive:
        Trans = own.worldTransform

        # Bake one face per logic tick.
        for i in range(1):
            if len(own['faces']) >= i + 1:
                face = own['faces'][i][0]
                steps_x, steps_y = own['faces'][i][1]
                cam = own['cam2']

                v1 = own.meshes[0].getVertex(0, face.v1)
                v2 = own.meshes[0].getVertex(0, face.v2)
                v3 = own.meshes[0].getVertex(0, face.v3)
                v4 = own.meshes[0].getVertex(0, face.v4)

                # Quad corners in world space.
                v1xyz = Trans * v1.XYZ
                v2xyz = Trans * v2.XYZ
                v3xyz = Trans * v3.XYZ
                v4xyz = Trans * v4.XYZ

                mid1 = v1xyz.lerp(v2xyz, .5)
                mid2 = v1xyz.lerp(v4xyz, .5)

                # Blend the four vertex normals into one face normal.
                N = ((v1.normal + v2.normal) / 2).normalized()
                N = ((N + v3.normal) / 2).normalized()
                N = ((N + v4.normal) / 2).normalized()
                mid1 += N * 2

                # Debug: outline the quad in red and draw its normal in blue.
                bge.render.drawLine(v1xyz, v2xyz, (1, 0, 0))
                bge.render.drawLine(v2xyz, v3xyz, (1, 0, 0))
                bge.render.drawLine(v3xyz, v4xyz, (1, 0, 0))
                bge.render.drawLine(v4xyz, v1xyz, (1, 0, 0))
                point = v1xyz.lerp(v3xyz, .5)
                point2 = point + (N * 2)
                bge.render.drawLine(point, point2, (0, 0, 1))

                for X in range(steps_x):
                    for Y in range(steps_y):
                        # Bilinear interpolation across the quad gives the sample point.
                        p1 = v1xyz.lerp(v2xyz, X / steps_x)
                        p2 = v4xyz.lerp(v3xyz, X / steps_x)
                        p3 = p1.lerp(p2, Y / steps_y)
                        p4 = p3 + (N * .25)

                        # Orient the camera from above the v1-v2 edge, then move it over the sample.
                        cam.worldPosition = v1xyz.lerp(v2xyz, .5) + (N * 2)
                        #print('aligning minor axis')
                        cam.alignAxisToVect(cam.getVectTo(mid2)[1], 0, 1)
                        cam.alignAxisToVect(cam.getVectTo(mid2)[1], 1, 1)
                        #print('aligning camera z')
                        cam.alignAxisToVect(N, 2, 1)
                        cam.worldPosition = p4

                        # Render a 3x3 capture from the camera (a new ImageRender per sample).
                        render_texture = bge.texture.ImageRender(own.scene, cam)
                        render_texture.capsize = (3, 3)
                        render_texture.refresh()

                        # The same interpolation in UV space gives the target pixel.
                        uv1 = v1.UV.lerp(v2.UV, X / steps_x)
                        uv2 = v4.UV.lerp(v3.UV, X / steps_x)
                        uv3 = uv1.lerp(uv2, Y / steps_y)
                        own['imageBuffer'].plot(render_texture, 3, 3, round(uv3[0] * 1024), round(uv3[1] * 1024), bge.texture.IMB_BLEND_MIX)

                        # Debug: draw a random subset of the sample offsets in green.
                        if bge.logic.getRandomFloat() > .975:
                            bge.render.drawLine(p3, p4, (0, 1, 0))

                own['faces'].pop(0)
            else:
                print('finished')
                own['Go'] = False
                break

        # Keep a reference to the texture on bge.logic and push the updated buffer.
        bge.logic.texture = own['bindCode']
        bge.logic.texture.refresh(False)


main()
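
To make the sampling pattern easier to test outside the engine, here is a minimal sketch of the same bilinear walk across a quad, using plain tuples instead of mathutils vectors. The helper names `lerp`, `edge_texels` and `quad_samples` are hypothetical (they are not part of bge), and it assumes the corners and UVs are given in v1, v2, v3, v4 order around the quad, as in the script.

```python
def lerp(a, b, t):
    """Linearly interpolate between two equal-length tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def edge_texels(uv_a, uv_b, size):
    """Number of texels an edge spans in a size x size texture."""
    du = (uv_a[0] - uv_b[0]) * size
    dv = (uv_a[1] - uv_b[1]) * size
    return round((du * du + dv * dv) ** 0.5)


def quad_samples(corners, uvs, size):
    """Yield (world_point, (px, py)) for each texel step across a quad.

    corners and uvs are ordered v1, v2, v3, v4 around the quad.
    """
    c1, c2, c3, c4 = corners
    t1, t2, t3, t4 = uvs
    steps_x = edge_texels(t1, t2, size)  # samples along the v1-v2 edge
    steps_y = edge_texels(t2, t3, size)  # samples along the v2-v3 edge
    for x in range(steps_x):
        s = x / steps_x
        for y in range(steps_y):
            t = y / steps_y
            # Interpolate along the two opposite edges, then between them.
            point = lerp(lerp(c1, c2, s), lerp(c4, c3, s), t)
            uv = lerp(lerp(t1, t2, s), lerp(t4, t3, s), t)
            yield point, (round(uv[0] * size), round(uv[1] * size))


if __name__ == "__main__":
    corners = [(0, 0, 0), (2, 0, 0), (2, 2, 0), (0, 2, 0)]
    uvs = [(0, 0), (0.5, 0), (0.5, 0.5), (0, 0.5)]
    for point, pixel in quad_samples(corners, uvs, 8):
        print(point, pixel)
```

Building the sample list once per face like this might also make it possible to spread one face over several logic ticks, but that is only a suggestion and has not been tried in the engine.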