Blender is not complex.

For decades, it has been possible to make simple 3D models with older releases of Blender.

Geometry Nodes are a way to simplify the creation of complex scenes.

They are a complex way to create simple 3D models. But if simple models are your goal, you can simply ignore them.

It is the user's demand that is complex.

Making something in 3 dimensions means defining what you want to see in 3 dimensions.

Making such a definition in text amounts to a long wall of text.

When a writer describes a building or an object in a novel, the description can run to several pages or be just a few words.

That depends on the writer's intentions, on what they absolutely want to communicate to the reader.

But almost always, enough blanks are left for readers to fill, letting them imagine what is described according to their own preferences.

An interpretation done by an AI means that the AI is filling the blanks. The result may differ a lot from what you expected if you are not the one who trained it.

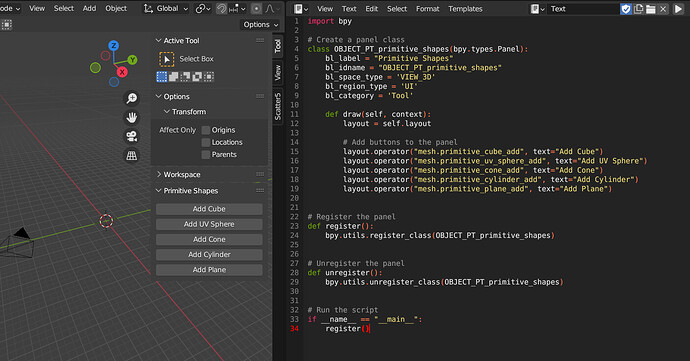

If you are fine with low expectations, you can work quickly with AI.

If you are not, you can spend hours refining your request.

The reason there is an interface with a 3D Viewport is to give instant feedback on every adjustment made to what is displayed on screen.

The tool is designed to start from the creation of simple 3D forms, and to immediately offer the ability to move, scale, rotate and combine them, or to add complexity to their surface, their mesh.

Since the introduction of Ngons, a face of this mesh can correspond to any polygonal shape.

You can make a face shaped like an L, a T or the silhouette of a Mickey Mouse head.

Is it simpler to cut the shape you want with the Knife tool, or to explain which mass-culture reference comes closest to what the shape evokes for you, at the risk of the AI misunderstanding you?

AI is not magic. To make relevant use of AI as nodes, you have to define precisely which task you want to assign to it.

And that task has to be more variable than what a node group or a versatile rig can handle, since those can already be stored as assets and reused immediately.

To train such an AI, you need thousands, even millions, of examples of expected results ready.

The language the AI understands will correspond to how those examples were tagged.

That is not a common language.

It is possible to imagine an AI picking 3D models from libraries, creating a GN node tree to combine them, setting up correct lighting, placing the camera, creating a rig and producing movements, all from sentences written by a user.

That would not change the fact that, if the user wants to tweak the resulting scene manually, they would have to understand what they are tweaking when they pull a gizmo in the 3D Viewport.

There is no “Make A Beauty Render” button in the UI.

In the same way, writing that in an AI prompt is no guarantee of getting one.