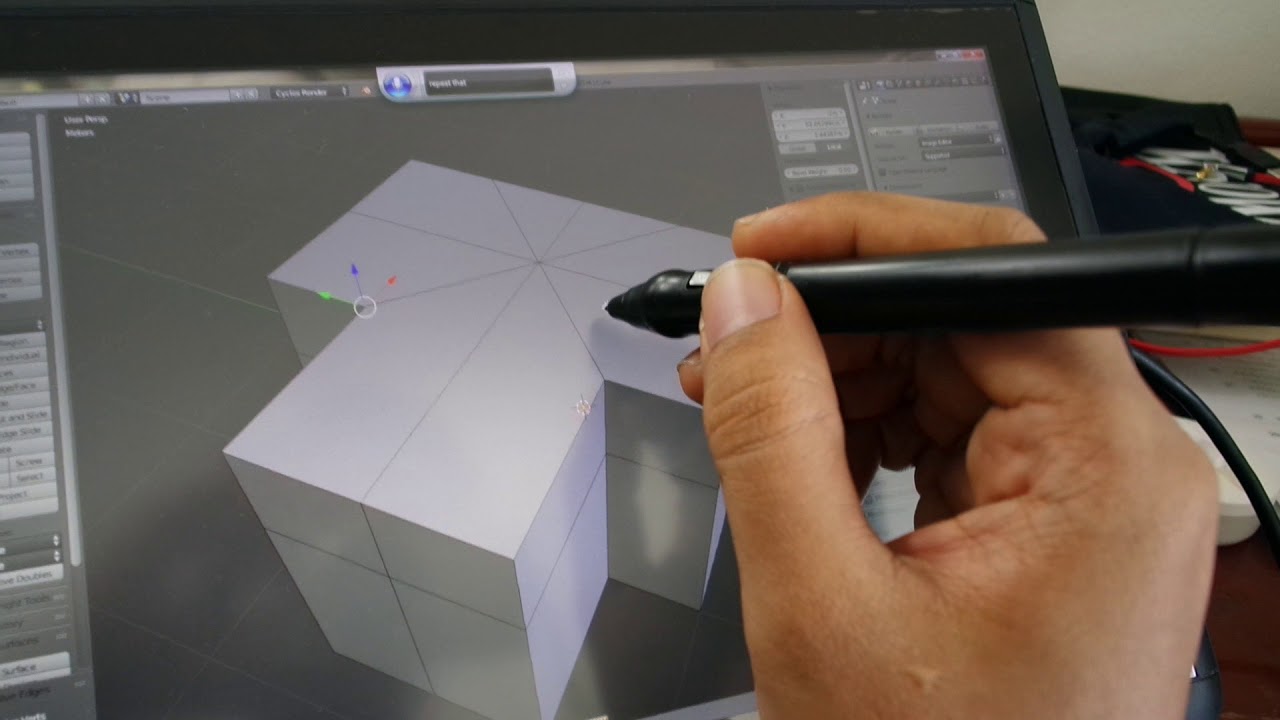

Well, I have been working in Blender by voice since 2016, because I am a pen display user with RSI, so my hand does not like holding a mouse or keyboard for long periods of time…

https://youtu.be/LcilO5CsfHM?t=20

The approach in the video above requires only Vocola 3.1 (freeware), Windows Speech Recognition, which is already included in Windows 7 or 10, and AutoHotKey to solve the Shift key problem…

Searching is so easy in Blender 2.9, since the Command Search has really improved and almost every operation/command is available through it. So I created my command search in Vocola code like this…

export (Collada=col|Alembic=ale|3D Studio=3ds|3DS|FBX|Wavefront=wav|Object=obj|OBJ) = BlendVCL.CommandSearch("export $1",0);

Now I can say “export Collada” or “export 3D Studio” or “export OBJ”, or any other type listed in the code. My BlendVCL plugin, written specially for Vocola, then does the search for me, and it takes less than 1 second to search and pick the right command for exporting my model.
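As a rough illustration of what that rule does before anything reaches Blender, here is a small Python sketch (this is not the BlendVCL source). It assumes that each `spoken=written` alternative substitutes the written part for $1 and that alternatives without `=` pass through unchanged. The name `search_text` is made up for this example, and the second argument (0) of CommandSearch is not modelled.

```python
# Spoken alternative -> text substituted for $1, copied from the Vocola rule above.
EXPORT_FORMATS = {
    "Collada": "col",
    "Alembic": "ale",
    "3D Studio": "3ds",
    "3DS": "3DS",
    "FBX": "FBX",
    "Wavefront": "wav",
    "Object": "obj",
    "OBJ": "OBJ",
}


def search_text(spoken: str) -> str:
    """Return the search string a spoken 'export <format>' command would produce.

    Hypothetical helper: it only mirrors the "export $1" template of the rule.
    """
    prefix, _, fmt = spoken.partition(" ")
    if prefix != "export" or fmt not in EXPORT_FORMATS:
        raise ValueError(f"not covered by the export rule: {spoken!r}")
    return f"export {EXPORT_FORMATS[fmt]}"
```

So saying “export 3D Studio” would search for "export 3ds", while “export FBX” searches for "export FBX" unchanged.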

Another sample of Vocola code…

select N Gon = doCommandPort("bpy.ops.mesh.select_face_by_sides(number=4, type='GREATER', extend=True)","","");

When I say “select N Gon”, it selects the N-gon faces in my mesh (faces with more than 4 sides). This command requires the Blender Command Port addon to be active, because my Vocola extension sends the Python code "bpy.ops.mesh.select_face_by_sides(number=4, type='GREATER', extend=True)" directly to Blender. You can find this addon on GitHub.
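For anyone curious what "sending Python code directly to Blender" can look like from the outside, here is a minimal Python sketch. It is not my Vocola extension and not the addon's documented protocol: it assumes the addon listens on a local TCP port and accepts plain UTF-8 Python source, and the host, port number and the name `send_python` are placeholders you would need to check against the addon's own settings.

```python
import socket

# Assumed values: check the addon's preferences for the real host and port.
HOST = "127.0.0.1"
PORT = 5000

# The same Python one-liner used in the "select N Gon" voice command above.
SELECT_NGONS = "bpy.ops.mesh.select_face_by_sides(number=4, type='GREATER', extend=True)"


def send_python(code: str, host: str = HOST, port: int = PORT, timeout: float = 2.0) -> int:
    """Send Python source as UTF-8 over TCP and return the number of bytes sent.

    Hypothetical helper: it assumes the receiver runs whatever text it gets.
    """
    payload = code.encode("utf-8")
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(payload)
    return len(payload)
```

With Blender running and the addon active, calling `send_python(SELECT_NGONS)` would then be the equivalent of the voice command, provided the assumptions above match your setup.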

Here is another sample video showing how I control the Blender UI internally by sending Python code to it. I can do anything from splitting the view and changing the size of the gizmo to showing per-object mesh wireframes or showing only a specific type of object in the view…

https://youtu.be/czlMDC_OEhQ?t=175

All the code and the extension are available on my GumRoad. The free set lets you use the Command Search and simple keystrokes, but no Python code. The extended version has ALL functionality, but I think you should try the free set first!

https://gumroad.com/l/BlenderVCL

an add-on would use an existing voice recognition library. i am not sure what the advantages over voicemacro would be though?
