I’m coming over to Blender from C4D, and re-learning workflows that I had there for compositing 3D objects into live footage (tracking, lighting, etc.).

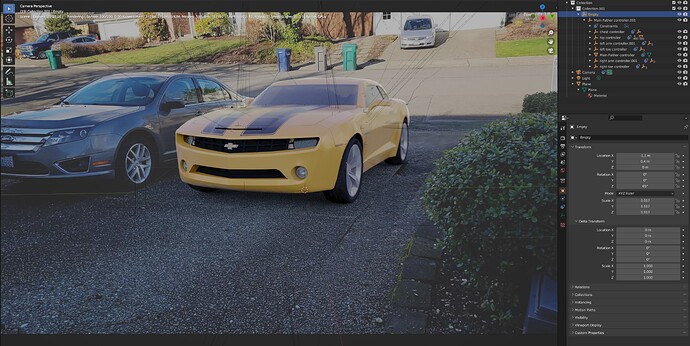

This is a render I did in C4D with Octane, lit only by an HDRI of the environment (captured with an Insta360 camera). All I had to adjust was the intensity of the HDRI environment to match the lighting. The yellow Bumblebee car is the model, and everything else is the live footage of my driveway.

I’m now trying to match this in Blender with Octane. I successfully tracked the footage in Blender, created a ground plane on the driveway, brought the model in, and used the same HDRI for lighting. However, the render is very blurry, particularly towards the rear of the car.

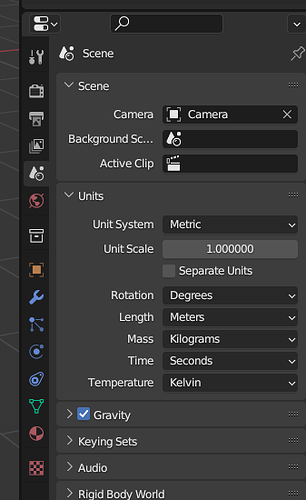

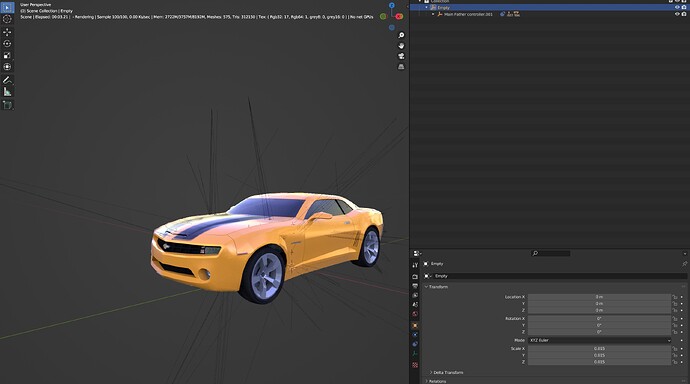

I’m thinking this is a scaling issue. When I brought the model in, I parented it to an empty (a null, in C4D terms) for easy positioning and scaling. I found the model was HUGE and had to scale it way down. In fact, as you can see in the screenshot, the scale is down to 0.017 of the original size.

I’m also pretty sure it’s a scaling issue because if I take the original blend file of just the model, and bring in the same HDRI (no tracked background footage…just HDRI for lighting), the render is not blurry.

In C4D, you can set a vector constraint between two tracked points, mark its length as “known”, and enter the actual length. For example, if I have two tracked points in my footage and I know they are 1.5 meters apart, I can create a vector constraint between them and tell C4D that the distance is 1.5 meters. From then on, any 3D model I bring in comes in at the correct size automatically.
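To spell out what I mean by a known-length constraint, here is a rough sketch of the arithmetic in plain Python. This is not Blender's or C4D's API, just the math I'd expect such a feature to do; the function name and the point coordinates are made up for illustration.

```python
import math


def scale_factor_from_known_distance(point_a, point_b, known_distance):
    """Return the uniform scale that makes the distance between two
    solved points equal to a real-world measurement."""
    measured = math.dist(point_a, point_b)  # distance in solved scene units
    if measured == 0:
        raise ValueError("tracked points coincide; cannot derive a scale")
    return known_distance / measured


# Hypothetical solved positions of two tracked points (arbitrary scene units).
tracker_a = (0.0, 0.0, 0.0)
tracker_b = (3.0, 4.0, 0.0)  # 5 units apart in the solve

# I measured 1.5 meters between those two spots on the real driveway.
factor = scale_factor_from_known_distance(tracker_a, tracker_b, 1.5)
print(f"scale the solved scene by {factor:.3f}")
```

The idea is that this factor gets applied to the solved camera and trackers, so the scene ends up in real units and the model can stay at its original size instead of being scaled down to 0.017.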

Is there a way to do the equivalent in Blender? I would think that would fix my scaling issue. Or perhaps there’s another way to fix this? I’ve tried searching the web for info on constraints in Blender but can’t find anything on this…I only find stuff about measuring distances.