Bao2: Thanks for the hint, those lines were indeed useless after my recent threading changes.

Does anyone know when Brecht’s OpenSubdiv demo will be presented at Siggraph? Is it today? This page doesn’t mention it.

Nevertheless, Brecht is already on it, and expects to have a first WIP demo of Cycles-OpenSubdiv at Siggraph next week! Ton

EDIT:

Entering blender in the search there:

http://s2013.siggraph.org/search/google/blender?query=blender&cx=004945459997085312052%3Arjh5jdlmsma&cof=FORID%3A11&sitesearch=

This is really great news - at least for PTex and render time!!!

Will the “OpenSubdiv” also provide faster screen refresh when modeling?

I saw some of the demos in Modo, and OpenSubdiv there adds some really nice modeling and texturing features, like removing the edge darkening you get in Blender when the subdiv level is low.

I believe at the moment it’s only integrated into Cycles… and there is a working test at Siggraph at the moment… I missed it at the Birds of a Feather, but I will definitely stop by their booth today and check it out… Anyone got any specific questions they want answered by Ton / Brecht?

Yes, I’d have a question for Ton: When will I get back all the former functionality of mouse swiping in Mac OS X (since he is also a Mac user)?

Of course I’m not really asking you to put the question to him… just saying.

paolo

Any news on SSS shader support for GPU?

You may be waiting a while. Only one renderer has pulled it off (Octane) and no one is quite sure how. Traditional SSS approaches and the GPU aren’t really made for one another.

I don’t doubt that SSS on the GPU would tie up a lot of resources so I was wondering if there wouldn’t be a benefit in adopting a fast fake SSS shader without traced scattering? A simpler shader that might be easier to port to the GPU and it would also benefit CPU rendering where only the impression of SSS is needed. Maybe this could be an interesting challenge for Thomas Dinges?
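For what it’s worth, one well-known cheap trick of that kind is “wrap” diffuse lighting, where the Lambert term is allowed to bleed past the terminator so the surface looks softer. This is not Cycles code and not something anyone here has proposed for Cycles, just a minimal Python sketch of the idea; the function name and the `wrap` parameter are made up for illustration.

```python
def wrap_diffuse(n_dot_l, wrap=0.5):
    """Wrapped Lambert term, a cheap stand-in for the look of SSS.

    n_dot_l: cosine of the angle between surface normal and light direction.
    wrap:    assumed to lie in [0, 1]; 0.0 is plain Lambert, larger values
             let light reach further around the object.
    """
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


# Plain Lambert is black at the terminator, the wrapped version is not.
print(wrap_diffuse(0.0, wrap=0.0))
print(wrap_diffuse(0.0, wrap=0.5))
```

Note that this simple form is not energy conserving and traces no scattering at all, so it only gives the impression of SSS.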

DingTo just committed a fix for the Sobol sampler.

http://lists.blender.org/pipermail/bf-blender-cvs/2013-July/057701.html

Is it possible that the problem addressed here was why the Sobol sampler did so badly on that diamond box scene (posted a month or so ago) compared to the Correlated Multi-Jitter sampler? If it does solve that issue, then it would indeed make the argument for the new sampler a bit less convincing.

Iray and Arion also have SSS on GPU. Btw Arion seems the most feature complete GPU wise…AFAIK

That is not a fix for the Sobol sampler. This commit fixes the override of the fx/fy variables when using CMJ sampling. I couldn’t see a quality difference, but this should give a slight speed-up when using CMJ.

Eugenio jr., both are hybrid render engines, and only parts of the render process are calculated on the GPU.

But nobody knows which parts.

Cheers, mib.

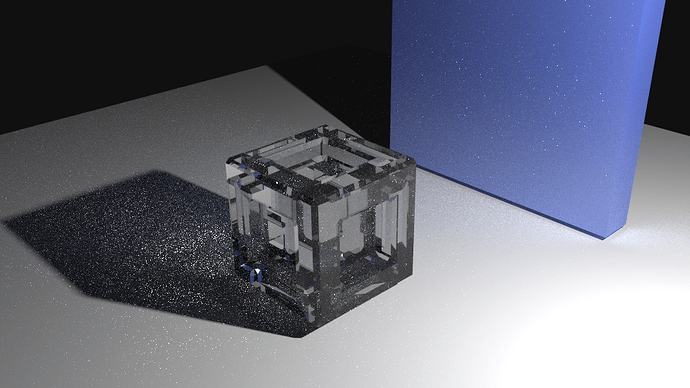

About the quality difference: I tested my old caustics test scene that I used to compare Sobol and CMJ, and the result with CMJ now seems to have a better distribution of caustic bounces than before, as seen in these images.

After the fix.

Before the fix

Notice the caustics behind the cube and the reflected caustic paths on the wall: the caustic samples behind the cube have somewhat more even coverage, and the samples on the wall are, on average, further apart.

There’s also a higher visibility of bright samples in the shadow behind the wall. To me, one of the more important things is that these fixes improve the sampling of difficult light paths (as far as I can tell, there’s not much room for improvement with the easy ones).

I tried to see the differences and I fell asleep

LOL@Bao2… xD

I really feel like this, these days.

I hope you feel the same, with my posts I mean.

LOL and LOL.

Open both images in two tabs and flip back and forth.

They are definitely different, but I cannot tell which one “is better”.

It’s not going to be a quantum leap of any sort, but looking myself I can see fewer ‘holes’ in caustic sample coverage in some areas and less abrupt changes in sample intensity in others.

The shadowed area in the back also contains more and brighter samples and a real close look shows a significant improvement in the reflective caustics on the floor near the base of the blue object.

I really don’t quite understand this:

How come y’all seem to presume that the caustic samples being more evenly spaced or more widespread or such is a sign of better sampling?

Let me spell this out: A caustic is basically (not trying to speak in raytracing terms here) a bright spot on a dull surface (diffuse shader), caused by light rays having been focussed on that specific spot, either due to the reflective properties of another, accordingly shaped, nearby surface (a concave mirror, basically), or due to the refractive properties of a nearby transparent object that is accordingly shaped (like a lens, basically) and has an IOR that allows for notable refraction.

Of course caustics can also be a combination thereof.

So I repeat my question: What leads you to the assumption that a more widespread or evenly spaced distribution of those fireflies would be a sign of more efficient sampling of an optical phenomenon which by its nature is all about light rays being focused in specific, small spots?

Aren’t ‘abrupt changes in sample intensity’, as Ace Dragon phrased it, exactly what is to be expected in the case of caustics?

Wouldn’t the only way to make any sense of those pictures, and to dare draw any conclusions about ‘better’ or ‘worse’ sample distribution, be to bring the scene(s) over to Luxrender and let those caustics actually converge there, so we see what the ‘right’ solution would actually look like?

(Always assuming we all agree on Lux to be precise and correct enough.)

greetings, Kologe
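That kind of comparison can be put into a number instead of judged by eye: take a converged reference and measure how far each noisy render is from it. A minimal sketch in Python, assuming the images are already loaded as flat lists of float pixel values of equal length; the `rmse` helper and the toy pixel data are made up for illustration and are not real renders.

```python
import math


def rmse(image, reference):
    """Root-mean-square error between two images given as flat lists of floats."""
    if not reference:
        raise ValueError("reference image is empty")
    if len(image) != len(reference):
        raise ValueError("images must have the same number of pixel values")
    total = sum((a - b) ** 2 for a, b in zip(image, reference))
    return math.sqrt(total / len(reference))


# Toy data only: a 'reference' with one bright caustic pixel and two noisy versions.
reference = [0.0, 0.0, 1.0, 0.0]
before_fix = [0.5, 0.0, 0.0, 0.0]
after_fix = [0.0, 0.0, 0.5, 0.0]

print("before:", rmse(before_fix, reference))
print("after: ", rmse(after_fix, reference))
```

The lower value is closer to the reference. Keep in mind that squared error is dominated by a few very bright pixels, so a handful of fireflies can swamp everything else in the score.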