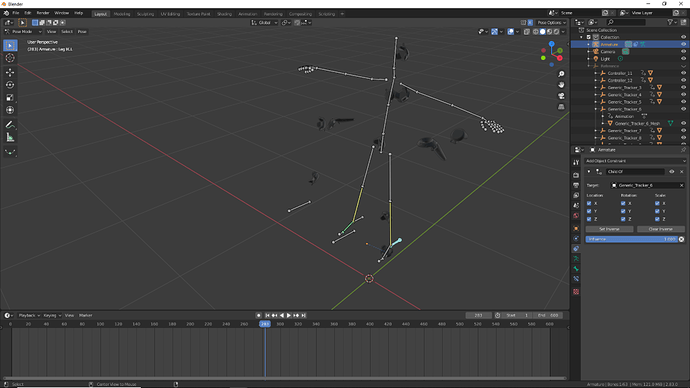

I’ve been trying to put together a working mocap solution using an HTC Vive with 9 trackers and the 2 controllers, recording with Brekel OpenVR Recorder and getting that data into Blender and onto a rig.

https://brekel.com/openvr-recorder/

The software records the data without issues, but it only records to FBX. I can also import the FBX into Blender (2.83, just FYI) without any issues. At that point, though, I can’t seem to get the imported FBX data to map to the bones of the rig (the rig is a very simple human bone rig with IK, not a Rigify or Auto-Rig type).

The FBX imports with no bones, just meshes and empties, with animation attached to each mesh/empty. They also all seem to be separate objects.
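In case it helps anyone reproduce this, here is a rough sketch of how the imported objects could be listed from Blender's Python console to confirm which ones carry their own animation. The helper name `animated_object_names` is just something made up for this post, and I'm assuming each mesh/empty stores its animation as its own action.

```python
def animated_object_names(objects):
    """Return (name, type) for every object that has its own action assigned."""
    found = []
    for obj in objects:
        anim = obj.animation_data  # None when the object has no animation
        if anim is not None and anim.action is not None:
            found.append((obj.name, obj.type))
    return found


try:
    import bpy  # only available when run inside Blender
except ImportError:
    bpy = None

if bpy is not None:
    # Print every animated object in the file, e.g. the imported trackers.
    for name, kind in animated_object_names(bpy.data.objects):
        print(kind, name)
```

The names this prints should be the ones a bone constraint would need to target.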

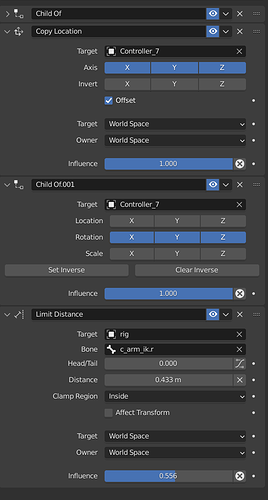

When I try to add a constraint so that a bone becomes a child of one of the empties, it doesn’t link the empty to the bone, but to the entire rig instead. Then as soon as you start the animation, the entire rig is thrown around like crazy!
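For reference, this is roughly what I think the bone-level version of that constraint would look like from a script, with the constraint added to the pose bone rather than to the rig object. It is only a sketch under assumptions: the bone and empty names in `BONE_TO_EMPTY` and the rig name "Armature" are placeholders, not what Brekel actually exports, and I've used Copy Transforms here instead of Child Of just to keep it short (Copy Transforms snaps the bone onto the empty, so any tracker offset would still need handling).

```python
# Placeholder mapping: bone name on the rig -> name of an imported empty.
BONE_TO_EMPTY = {
    "hips": "Tracker_Hips",
    "hand.L": "Controller_Left",
}


def constrain_bones_to_empties(armature, objects, bone_to_empty):
    """Add one Copy Transforms bone constraint per mapping entry.

    armature: the rig object (type 'ARMATURE')
    objects:  a name -> object lookup such as bpy.data.objects
    Returns the bone names skipped because the bone or the empty was missing.
    """
    skipped = []
    for bone_name, empty_name in bone_to_empty.items():
        pose_bone = armature.pose.bones.get(bone_name)
        target = objects.get(empty_name)
        if pose_bone is None or target is None:
            skipped.append(bone_name)
            continue
        # The constraint lives on the pose bone, not on the armature object.
        con = pose_bone.constraints.new('COPY_TRANSFORMS')
        con.name = "Mocap " + empty_name
        con.target = target
    return skipped


try:
    import bpy  # only available when run inside Blender
except ImportError:
    bpy = None

if bpy is not None:
    rig = bpy.data.objects.get("Armature")  # assumed rig name
    if rig is not None:
        print("skipped:", constrain_bones_to_empties(rig, bpy.data.objects, BONE_TO_EMPTY))
```

If a constraint lands on the whole rig, it was most likely added in the Object Constraints tab; this sketch goes through `pose.bones` so it ends up in the Bone Constraints list instead.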

I’ve tried joining (Ctrl+J) all the meshes with the rig, but that doesn’t change anything.

I’ve tried selecting a mesh/empty, then selecting the rig, going into Pose Mode, selecting the bone, pressing Ctrl+P and choosing the object as the parent, but then…nothing happens at all…the mesh/empty just stops being animated and the rig doesn’t move at all.

I’m really trying not to get super discouraged. I assume the process is somewhat simple…and I’m just missing something easy (I hope). There are videos of someone doing the same thing, unfortunately in Maya rather than Blender, and it seems easy…so I know there’s got to be a way to do it in Blender!

I’ve also reached out to the creator of Brekel, but he simply told me he doesn’t know Blender at all, and pointed me to the video (included in link above), that I’d already watched a few times.

I’ve done a TON of Googling and YouTube searching…and I can’t find anything that helps.

Any help would be greatly appreciated!
