I've tried several ways to build a budget motion capture (mocap) setup that works with Blender.

- Xbox Kinect-based motion capture

First, I bought two used Xbox Kinects, connected them to a PC, and recorded motion with Brekel Body. The results were not bad. However, tracking failed whenever part of the body was occluded, and Microsoft has discontinued the Kinect, which makes it a poor long-term option.

Brekel v3 Beta multiple Kinects for motion capture - YouTube

Kinect mocap test (Kinect v1 + Kinect v2 + Brekel Body v3)

- Camera-based motion capture

I tried video-based motion capture services such as DeepMotion and Plask. The results were strongly affected by the lighting in the room and by the color of the actor's clothing. I also tried TDPT, an iOS motion capture app that supports real-time motion streaming and export, and got fairly decent results. However, as with the Kinect, tracking broke down whenever part of the body was occluded.

Plask - Insanely Free AI Mocap Solution! [Tutorial / Review] - YouTube

Tracking technology study session: TDPT, an iOS app for full-body mocap with just a smartphone - YouTube

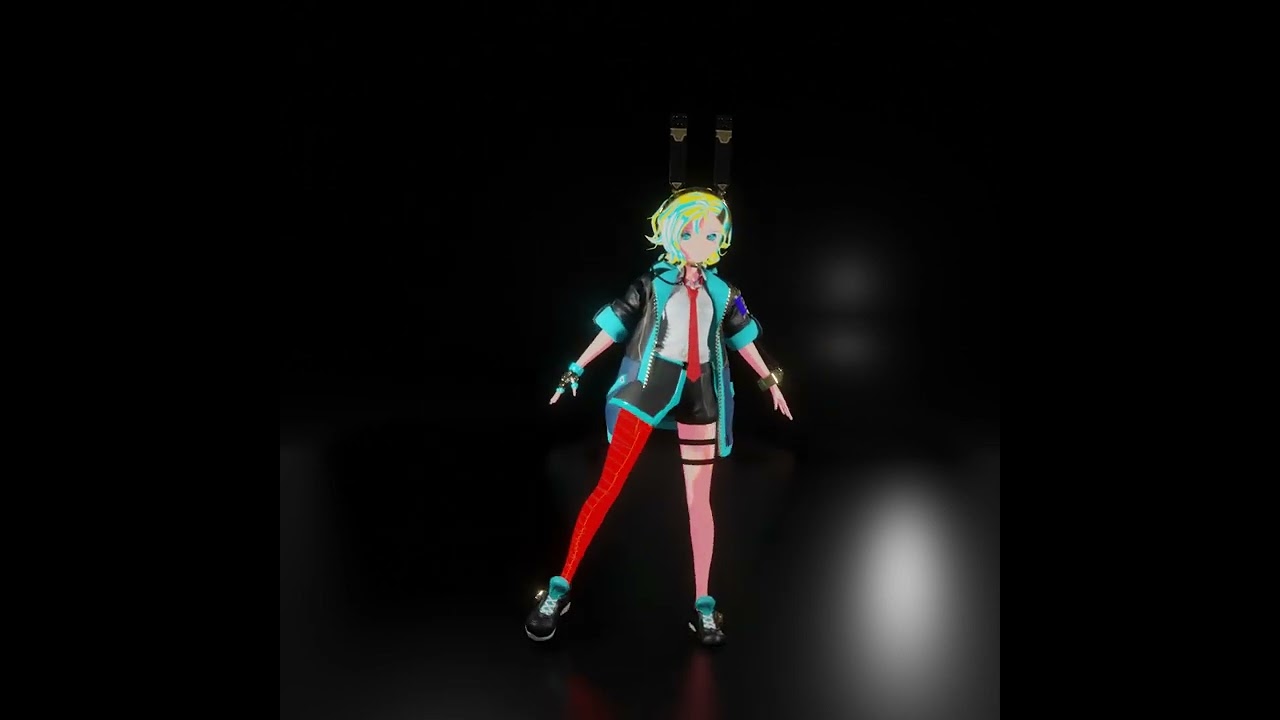

TDPT mocap test

- SteamVR-based motion capture

The ideal setup would be Lighthouse base stations plus Vive Trackers, but since that is expensive, I first built and tested DIY SlimeVR trackers, which work with SteamVR. SlimeVR is an open-source, IMU-based VR tracker, and the parts are very cheap (almost $100 in total).

I own a Quest 2, which I connect to the PC with Virtual Desktop. The SlimeVR trackers are worn on the chest, waist, hips, knees, ankles, and feet, while the head and both hands are tracked by the Quest 2 HMD and its left and right controllers. For recording I used Mocap Fusion, a SteamVR mocap tool, and the results were pretty good. Because SlimeVR is IMU-based, tracking was not affected when parts of the body were hidden behind obstacles, and joint rotations were tracked precisely.

However, because SlimeVR works in an FK (forward kinematics) fashion, calculating the positions of the rest of the body from the HMD position, the feet slip while moving. Entering body measurements as accurately as possible kept this to a minimum. Other IMU-based mocap suits (Rokoko, Xsens) seem to prevent slipping in software by checking whether the foot is in contact with the floor, and I think motion could be filtered in a similar way in Blender.
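I don't know the exact cleanup logic Rokoko or Xsens use, so the following is only a minimal sketch of the general foot-locking idea in plain Python. It assumes you have already extracted one foot's world-space position per frame, in metres with Z up (Blender's convention). The function name `lock_feet` and both thresholds are hypothetical choices, not values taken from any of these tools. A frame counts as "planted" when the foot is near the floor and barely moving horizontally; while planted, the foot's X/Y is pinned to where that contact began.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical thresholds: tune them for your scene scale (metres here) and frame rate.
HEIGHT_THRESHOLD = 0.05  # foot counts as "near the floor" below 5 cm
SPEED_THRESHOLD = 0.02   # max horizontal travel per frame to still count as planted


def lock_feet(positions: List[Vec3],
              height_threshold: float = HEIGHT_THRESHOLD,
              speed_threshold: float = SPEED_THRESHOLD) -> List[Vec3]:
    """Remove horizontal foot sliding while the foot is planted.

    positions: per-frame world-space (x, y, z) of one foot, with Z up as in Blender.
    Returns a new list; planted frames keep their height but reuse the
    (x, y) where the current contact started.
    """
    result: List[Vec3] = []
    anchor: Optional[Tuple[float, float]] = None  # locked (x, y) during a contact
    prev: Optional[Vec3] = None
    for x, y, z in positions:
        # Horizontal speed measured on the raw (unfiltered) trajectory.
        speed = 0.0 if prev is None else ((x - prev[0]) ** 2 + (y - prev[1]) ** 2) ** 0.5
        planted = z <= height_threshold and speed <= speed_threshold
        if planted:
            if anchor is None:
                anchor = (x, y)  # first planted frame: remember where the foot landed
            result.append((anchor[0], anchor[1], z))
        else:
            anchor = None  # foot lifted or moving fast: release the lock
            result.append((x, y, z))
        prev = (x, y, z)
    return result


# Usage: a planted foot that FK drift slides 1 cm per frame, then lifts off.
trajectory = [(0.00, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.0, 0.0), (0.10, 0.0, 0.30)]
print(lock_feet(trajectory))
```

With the sample trajectory, the three floor frames are pinned to x = 0.0 and the airborne frame passes through unchanged. The hard switch between locked and free frames can cause a visible pop, so a practical filter would probably blend over a few frames, and in Blender the corrected positions would more likely drive a foot IK target than be written back onto the FK bones.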

Arm tracking is another problem: the elbow joints do not rotate smoothly, because the arms are solved with IK (inverse kinematics) from the positions of the left and right controllers. SlimeVR doesn't support elbow trackers yet, but they are reportedly on the development roadmap. SlimeVR's latest update also adds the ability to record motion as BVH by itself, although arm motion is not included yet because it is still at an experimental stage.
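I don't know exactly which IK solver Mocap Fusion or SlimeVR uses for the arms, but a basic two-bone IK calculation shows the limitation. Given only the shoulder and hand (controller) positions, the law of cosines fixes how far the elbow bends, but not which way it points: the elbow can swing anywhere on a circle around the shoulder-to-hand line, so a solver has to guess that "swivel" from a pole target or heuristics. That guess is a plausible source of the unsmooth elbow rotation, and it is exactly what an elbow tracker would measure directly. The sketch below only computes the bend angle; the function name `elbow_angle` and the arm lengths are hypothetical.

```python
import math


def elbow_angle(upper_len: float, fore_len: float, shoulder_to_hand: float) -> float:
    """Interior elbow angle in degrees for a two-bone arm (180 = fully straight).

    Law of cosines: d^2 = a^2 + b^2 - 2ab*cos(theta), solved for theta.
    The distance is clamped to the reachable range, so a controller held
    slightly too far away yields a straight arm instead of a math error.
    """
    d = min(max(shoulder_to_hand, abs(upper_len - fore_len)), upper_len + fore_len)
    cos_theta = (upper_len ** 2 + fore_len ** 2 - d ** 2) / (2 * upper_len * fore_len)
    cos_theta = min(1.0, max(-1.0, cos_theta))  # guard against floating-point overshoot
    return math.degrees(math.acos(cos_theta))


# Hypothetical arm: 0.30 m upper arm, 0.25 m forearm.
for distance in (0.55, 0.45, 0.30, 0.70):
    print(f"shoulder-hand {distance:.2f} m -> elbow {elbow_angle(0.30, 0.25, distance):.1f} deg")
```

Note what the function cannot return: the elbow's direction. Every elbow position on that circle gives the same angle, which is why controller-only arm IK needs extra information, whether a pole hint or a dedicated elbow tracker.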

Encouraged by the results of the SlimeVR and SteamVR mocap test, I'm now considering buying Vive Trackers, but I'm hesitating because they are relatively expensive.

If anyone has any other inexpensive mocap methods or ideas for improvement, please let me know!

SlimeVR tracker full set

SlimeVR my ULTIMATE review! - YouTube

Mocap Fusion (Trailer) - YouTube

Rokoko Studio BETA - Essential Cleanup Filters - YouTube

SlimeVR mocap test (using Mocap Fusion)

SlimeVR mocap test (using the SlimeVR BVH recording tool, without arm motion)

SlimeVR in-game test (VRChat)