In my first post, the formula I used was not correct, and the fault was mine. Both setups are ‘perfect’, meaning the math is correct; however, the first one does not produce the result Cycles expects to receive when you plug a texture into the Normal Map node.

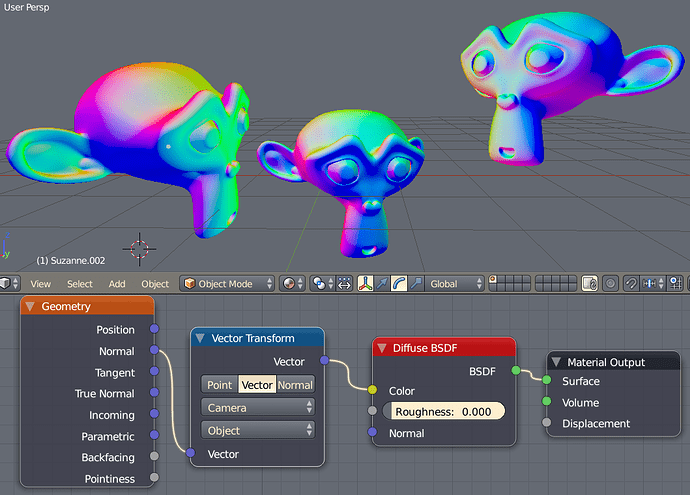

There are two ways of making tangent-space normal maps. Some engines use the formula I posted first, but Cycles uses the second one (which was not really what I expected).

Normally, especially in games, normal textures are stored as unsigned 8 bits per channel. But the original normal vector has a range of -1 to 1 in each component. The trick is to remap the range from [-1,1] to the unsigned [0,255] range of the 8 bits (or, in normalized color components, to turn [-1,1] into [0,1]).
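As a rough illustration of that remapping, here is a minimal plain-Python sketch (not a Blender node setup; the function names `encode_component` and `decode_component` are hypothetical). It maps one component from [-1,1] to [0,1] and then quantizes it to an 8-bit value, plus the inverse:

```python
def encode_component(v: float) -> int:
    """Map one normal component from [-1, 1] to an unsigned 8-bit value."""
    unit = v * 0.5 + 0.5                 # [-1, 1] -> [0, 1]
    unit = min(max(unit, 0.0), 1.0)      # clamp in case of small float overshoot
    return int(unit * 255 + 0.5)         # [0, 1] -> [0, 255], rounded to nearest

def decode_component(c: int) -> float:
    """Invert the mapping: [0, 255] -> [0, 1] -> [-1, 1]."""
    return (c / 255) * 2.0 - 1.0

for v in (-1.0, 0.0, 1.0):
    print(v, encode_component(v))
# -1.0 0
# 0.0 128
# 1.0 255
```

Note that 0.0 lands on 128, which decodes to about 0.0039 rather than exactly zero: with 256 codes there is no code sitting exactly in the middle of the range.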

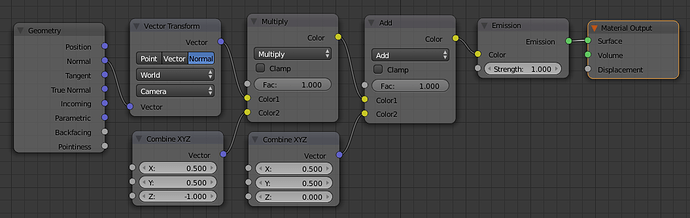

In this case, we multiply the [-1,1] range by 0.5, which scales it to [-0.5,0.5], and then add 0.5 to end up with the [0,1] range we need. Because the Z’ component is always positive (it points away from the surface), most game engines store that component unchanged, which gives that axis more distinct values (all 256 of them). Note that the Vector Transform node uses a negative Z in camera space, where -1 points toward the camera and 1 points in the view direction, so we multiply it by -1 to invert it.
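The game-style encoding described above can be sketched like this. It is a minimal plain-Python sketch under two assumptions: the input is a unit-length camera-space normal, and camera-space Z is -1 toward the camera, as stated above. The name `encode_game_style` is hypothetical:

```python
def encode_game_style(cam_normal):
    """Encode a unit camera-space normal (x, y, z) as RGB in [0, 1].

    Assumes camera-space Z is -1 toward the camera, so Z is negated to get
    a positive Z' for surfaces facing the viewer.
    """
    x, y, z = cam_normal
    r = x * 0.5 + 0.5   # [-1, 1] -> [0, 1]
    g = y * 0.5 + 0.5   # [-1, 1] -> [0, 1]
    b = -z              # Z' is already in [0, 1] after flipping; stored unchanged
    return (r, g, b)

# A flat surface facing the camera gives the familiar light-blue normal color.
print(encode_game_style((0.0, 0.0, -1.0)))  # (0.5, 0.5, 1.0)
```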

Cycles and most render engines simplify the code and treat all components the same way, so the operation can be summarized as CameraNormal*[0.5,0.5,-0.5]+[0.5,0.5,0.5]. In this case, however, half of the blue channel's range goes unused: values 0-127 correspond to negative Z’ values, which don’t occur for these normal vectors, so effectively one bit of precision is lost. That's the formula from my second post.
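To make the precision cost concrete, the sketch below applies CameraNormal*[0.5,0.5,-0.5]+[0.5,0.5,0.5], sweeps Z’ over [0,1], and counts how many distinct 8-bit blue codes each scheme can reach. It is plain Python, not Blender code. `encode_cycles_style` and `to_byte` are hypothetical helpers, and the game-style blue channel is modeled simply as Z’ stored unchanged:

```python
def encode_cycles_style(cam_normal):
    """CameraNormal * (0.5, 0.5, -0.5) + (0.5, 0.5, 0.5): same bias on every axis."""
    scale = (0.5, 0.5, -0.5)
    return tuple(c * s + 0.5 for c, s in zip(cam_normal, scale))

def to_byte(u):
    """Quantize a [0, 1] value to an 8-bit code, rounding to nearest."""
    return int(min(max(u, 0.0), 1.0) * 255 + 0.5)

# Sweep Z' (the flipped camera-space Z) over its valid range [0, 1].
steps = 10001
zs = [i / (steps - 1) for i in range(steps)]

# Cycles-style: blue = 0.5 * Z' + 0.5, so it only ever covers [0.5, 1].
cycles_blue = {to_byte(encode_cycles_style((0.0, 0.0, -z))[2]) for z in zs}
# Game-style: blue = Z' stored unchanged, covering the full [0, 1].
game_blue = {to_byte(z) for z in zs}

print(len(cycles_blue), len(game_blue))  # 128 256
```

Only codes 128-255 are reachable in the Cycles-style blue channel, i.e. half of the 256 codes, which is the same as losing one bit.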

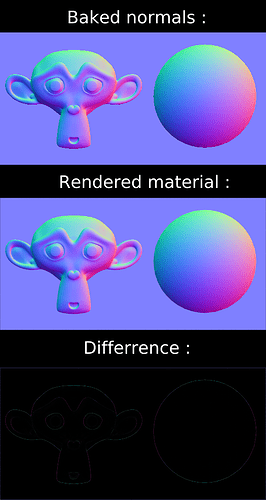

If you now compare the result of my last setup against the bake, you’ll only find differences in the aliased parts of the baked result. Cycles’ baking takes just one sample per pixel when baking normals, which produces aliasing. When rendering from the camera, you get an antialiased result (at least if you render with more than 1 sample).