Hey all, thanks for going to such lengths to help out with this, it’s very much appreciated!

Unfortunately, none of them would work for me (too cumbersome). They do provide interesting insight into different approaches to the problem, though, so although I won’t be using them, I’m pleased they were pointed out and demonstrated.

And I have some good news (ecstatic news if I’m honest about it)!

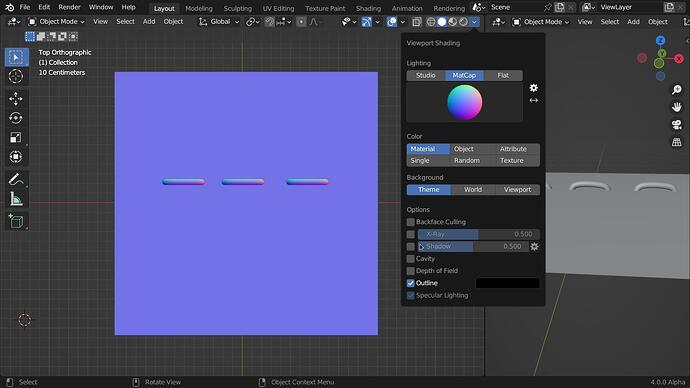

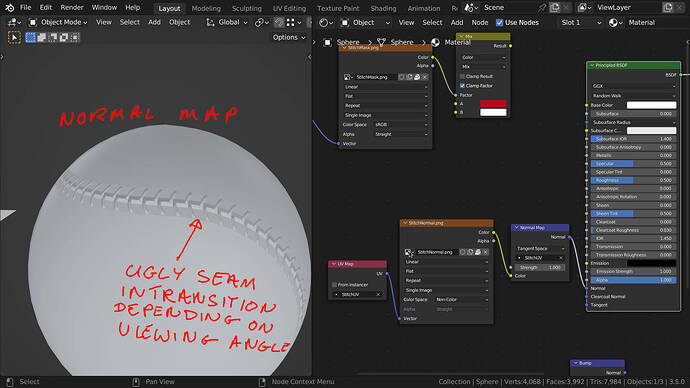

I just found out something that I think the Blender community in general isn’t aware of (I certainly wasn’t), and it’s quite a biggie: it’s a technique that effectively lets you paint Normal maps live in Blender on even the lowest-resolution objects, while generating a Normal map so detailed that it’s limited only by the resolution of the Normal map image itself, not by the resolution of the mesh!

It got me wondering: since we can paint Bump maps in Blender and see the result live in the viewport, why on earth can’t we do the same with Normal maps?

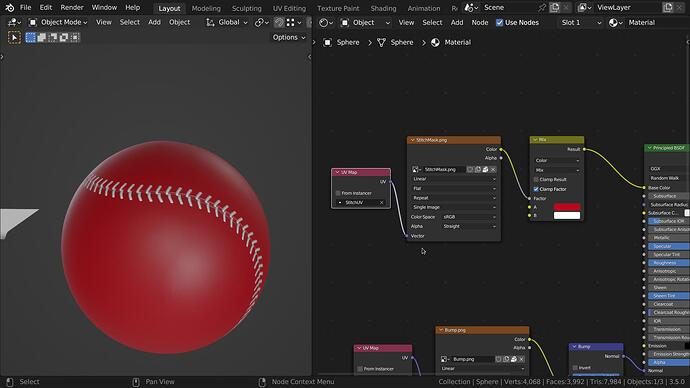

Well, guess what: it turns out Blender can internally convert Bump information into Normal information, and it’s actually really easy, as demonstrated in the attached video. You’ll notice that in the video he uses a procedural bump, but no doubt the same process works with a painted map too!
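For anyone curious what a Bump-to-Normal conversion boils down to, here’s a rough sketch in plain Python. To be clear, this is not Blender’s internal code. It’s just the generic idea: estimate the slope of the height (bump) map at each pixel with finite differences, build a tangent-space normal from those slopes, and pack it into RGB. The function names and the `strength` parameter are made up for illustration, and it assumes the row index runs along +Y (you may need to flip the green channel depending on whether your target expects OpenGL-style or DirectX-style normal maps). Inside Blender itself, the equivalent is normally done by feeding the height into a Bump node and baking with Cycles’ Normal bake type.

```python
import math


def _slope(a, b, dist):
    """Finite-difference slope between two samples dist pixels apart (0 if no neighbours)."""
    return (b - a) / dist if dist else 0.0


def height_to_normal(height, strength=1.0):
    """Convert a 2D grid of heights (list of rows of floats) into unit normals.

    Hypothetical helper: central differences inside the grid, one-sided
    differences at the borders (neighbour indices are clamped).
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Clamp neighbour indices so border pixels still get a slope estimate.
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dx = _slope(height[y][x0], height[y][x1], x1 - x0) * strength
            dy = _slope(height[y0][x], height[y1][x], y1 - y0) * strength
            # The surface normal of z = h(x, y) points along (-dh/dx, -dh/dy, 1).
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


def encode_rgb(normal):
    """Map each component of a unit normal from [-1, 1] to an 8-bit value in [0, 255]."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)


if __name__ == "__main__":
    # A tiny ramp that rises to the right: its normals tilt towards -X.
    bump = [[0.0, 0.5, 1.0],
            [0.0, 0.5, 1.0]]
    for row in height_to_normal(bump):
        print([encode_rgb(n) for n in row])
```

Note that nothing here depends on mesh density: the detail comes entirely from the resolution of the height grid, which is exactly why the technique works on a low-poly mesh.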

This is big (I certainly think so) because it lets you create texture-map-resolution Normal maps directly in Blender, completely independent of the resolution of the mesh! With this at your disposal, there is nothing stopping any Blender user from painting live using Bump and then converting it internally to a high-resolution Normal map.

This is the sort of thing I’ve always been led to believe I would need ZBrush to achieve. Not so! I just tried it and it works perfectly. It literally lets you paint insanely detailed Normal maps directly in Blender, all onto a low-poly mesh, and all it needs is a Bump-to-Normal conversion once you’ve painted (or, as shown here, procedurally generated) your Bump map!

Out of all the Blender tips videos I’ve ever seen, this one has to take the top slot for being a game-changer. I’d bet my life there are literally thousands of people out there buying ZBrush because they have no idea this is even possible!

I was so excited by it that I almost posted yesterday before trying it. But it seemed too good to be true, so I thought I’d better try it first, and wow, it actually works! You really can paint super-detailed Normal maps in Blender, thanks to Blender’s ability to internally ‘Bake’ a Normal map directly from Bump information. No high-resolution mesh is needed, so it’s all handled smooth as butter by your computer, with no need for it to break into a sweat!