I’ll add what I can to the already excellent answers you got.

Your question is, in part, imprecise, because many of the textures you download from websites like Polyhaven, ambientCG and the like are also procedurally generated, and then baked into an image format.

So, a better way to ask this is: should I texture everything using shaders, or should I use image textures (that I create myself or download from somewhere)? Which one gives better, more realistic results?

And the answer to this is: it depends, and mostly you’ll find yourself doing both.

Shaders, which people use in Blender (or really any other engine) to make procedural textures, are essentially small programs that are evaluated independently for each pixel (or shading point). What you do with them is twist and manipulate coordinates to generate a series of maps that you can use to build your material. The main advantage of a map created this way is that it does not tile like an image texture would. You can also do some things, particularly animations and dynamic effects, that would not be easy or efficient with image textures.
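To make the "code that manipulates coordinates" idea concrete, here is a minimal sketch in plain Python (not Blender's API): a procedural texture is just a function from a coordinate to a value, evaluated separately for every point. The hash-based value noise below is my own illustrative choice; Blender's Noise Texture node uses a Perlin-style algorithm instead. Because each value is computed from the coordinate itself rather than looked up in a fixed grid of pixels, there is nothing that repeats at a small, fixed period.

```python
import math


def hash2(ix: int, iy: int, seed: int = 0) -> float:
    """Deterministic pseudo-random value in [0, 1) for an integer lattice point."""
    h = (ix * 374761393 + iy * 668265263 + seed * 144665) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 2**32


def smoothstep(t: float) -> float:
    """Smooth 0..1 easing curve used for interpolation."""
    return t * t * (3.0 - 2.0 * t)


def value_noise(x: float, y: float, seed: int = 0) -> float:
    """2D value noise: a pure function of the coordinate."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy
    # Random values at the four surrounding lattice corners
    v00 = hash2(ix, iy, seed)
    v10 = hash2(ix + 1, iy, seed)
    v01 = hash2(ix, iy + 1, seed)
    v11 = hash2(ix + 1, iy + 1, seed)
    # Smoothly interpolate between the corners
    sx, sy = smoothstep(fx), smoothstep(fy)
    top = v00 + (v10 - v00) * sx
    bottom = v01 + (v11 - v01) * sx
    return top + (bottom - top) * sy


def shade(u: float, v: float, scale: float = 8.0) -> float:
    """A toy 'shader': scales the UV coordinates and returns one value per point."""
    return value_noise(u * scale, v * scale)
```

A renderer would call `shade(u, v)` once per shading point, and none of those calls knows anything about its neighbours.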

Shaders also have many disadvantages when it comes to creating materials. Here are the ones that come to mind right now:

- shaders, as I wrote above, operate on a per-pixel basis. This means that some things that are incredibly easy to do when you’re working on an image are plainly impossible with shaders, or require a number of hacks and tricks, while they would be trivial in an image-based workflow. One banal example: blur. Since a shader operates on a single pixel, and not on the whole texture, it is not aware of what surrounds that pixel. Thus, a real blur is impossible.

- shaders are computed on the fly at render time, so they generally perform worse than a baked image.

- shaders are not portable. You have to redo them for each engine. The logic is always the same in the end, but you can’t just move a Blender material to, say, Unreal without effort or baking. (MaterialX might change this.)
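The blur limitation can be sketched in plain Python (my own illustration, with images stored as nested lists): a shader-style operation maps each pixel on its own, while a blur has to read the pixel's neighbourhood, which only an image-based workflow gives you directly. The wrap-around indexing is an assumption I added so that a tileable image stays tileable after blurring.

```python
from typing import Callable, List

Image = List[List[float]]


def per_pixel(img: Image, f: Callable[[float], float]) -> Image:
    """Shader-style operation: each output pixel depends only on the same input pixel."""
    return [[f(p) for p in row] for row in img]


def box_blur(img: Image, radius: int = 1) -> Image:
    """Image-style operation: each output pixel is the average of its neighbourhood.

    Indices wrap around, so a tileable image stays tileable.
    """
    h, w = len(img), len(img[0])
    size = (2 * radius + 1) ** 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # This is the step a per-pixel shader cannot do: read other pixels
            total = sum(
                img[(y + dy) % h][(x + dx) % w]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            )
            row.append(total / size)
        out.append(row)
    return out
```

For example, blurring a 5×5 image that is black except for a single centre pixel of value 9 spreads it into a 3×3 block of 1s, something `per_pixel` can never produce because it never looks at the neighbours.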

Now, let’s talk about image textures. Many of the ones you can find on the websites you mentioned are also generated procedurally, but in a different manner: specifically, with the most common and best texturing software there is, Substance Designer. There are also other applications for producing textures (Quixel Mixer, Material Maker, Substance Painter, etc.). Substance Designer is procedural, but it bakes its final result into an image. Inside it you mostly manipulate the whole canvas using nodes, which makes all kinds of effects easy. You can also keep a material made there procedural by exposing any of its values as parameters, and then export it as a Substance archive (.sbsar), to be used procedurally in most major engines (Blender included, through the dedicated add-on).

The advantage of this is clear: you can create very sophisticated and detailed materials quickly and iteratively, manipulating whole textures as opposed to single pixels. The results, being baked, can be used anywhere, and they will tile correctly.
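As a rough sketch of the "whole canvas" idea (plain Python with hypothetical node names, not Substance Designer's actual API or file format): each node below takes a complete image and returns a complete image. `auto_levels` needs the global minimum and maximum of the canvas, which a per-pixel shader has no access to. The function arguments stand in for exposed parameters, and `bake` freezes them into 8-bit pixel values, which is roughly the difference between shipping a procedural archive and shipping a baked image.

```python
import math
from typing import List

Image = List[List[float]]


def stripes(size: int, count: int) -> Image:
    """Horizontal sine stripes; an integer count makes the pattern wrap seamlessly."""
    return [
        [math.sin(2 * math.pi * count * y / size) for _x in range(size)]
        for y in range(size)
    ]


def auto_levels(img: Image) -> Image:
    """Remap to [0, 1] using the global min and max: needs the whole canvas."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    span = (hi - lo) or 1.0
    return [[(p - lo) / span for p in row] for row in img]


def bake(img: Image) -> List[List[int]]:
    """'Bake' to 8-bit: the parameter values are frozen into pixels."""
    return [[round(p * 255) for p in row] for row in img]


def tiny_graph(size: int = 16, stripe_count: int = 4, gamma: float = 1.0) -> List[List[int]]:
    """Hypothetical graph; stripe_count and gamma play the role of exposed parameters."""
    height = auto_levels(stripes(size, stripe_count))
    height = [[p ** gamma for p in row] for row in height]
    return bake(height)
```

Calling `tiny_graph(stripe_count=2)` and `tiny_graph(stripe_count=4)` gives two different baked images from the same graph; once baked, only the pixels remain and the parameters can no longer be changed.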

The disadvantage is that, since the material is baked, it necessarily tiles, and this repetition has to be accounted for when creating the material. A common workflow is to apply the material in Substance Painter, which provides good tools and techniques to conceal it. But, of course, a lot of the heavy lifting will be done by the shader you “feed” these images to…

which brings us to the final part of my answer: in reality, you cannot give up shaders, even if you don’t use them to generate the various maps, because those image textures will still be fed to a shader! Setting that shader up properly is fundamental: for hiding the tiling, for adding and mixing extra noise and materials, and, most importantly, for emulating the physical properties of the material.
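Here is a minimal sketch of two of those shader-side jobs, in plain Python rather than Blender nodes (all names are my own, hypothetical). First, each repeat of the baked map gets its own random offset, a naive form of tile randomization that, as written, leaves hard seams at the cell borders (real setups usually blend overlapping samples to hide them). Second, a large-scale variation is mixed in, the way you would combine the map with a Noise Texture through a Mix node; the sine-based function is only a stand-in for that noise.

```python
import math
from typing import List

Image = List[List[float]]


def hash01(ix: int, iy: int, seed: int) -> float:
    """Deterministic pseudo-random value in [0, 1) for an integer cell."""
    h = (ix * 73856093 ^ iy * 19349663 ^ seed * 83492791) & 0xFFFFFFFF
    h = (h * 2654435761) & 0xFFFFFFFF
    return h / 2**32


def sample_wrap(img: Image, u: float, v: float) -> float:
    """Nearest-neighbour lookup with repeat wrapping, like a tiling image texture."""
    h, w = len(img), len(img[0])
    return img[math.floor(v * h) % h][math.floor(u * w) % w]


def sample_per_tile_offset(img: Image, u: float, v: float, seed: int = 1) -> float:
    """Shift each repeat of the texture by its own random offset (seams not handled)."""
    cu, cv = math.floor(u), math.floor(v)
    return sample_wrap(img, u + hash01(cu, cv, seed), v + hash01(cu, cv, seed + 1))


def large_scale_variation(u: float, v: float) -> float:
    """Stand-in for a low-frequency Noise Texture; returns values in [0, 1]."""
    return 0.5 + 0.25 * math.sin(0.37 * u + 0.3) + 0.25 * math.sin(0.53 * v + 1.1)


def roughness_at(baked: Image, u: float, v: float, strength: float = 0.3) -> float:
    """Randomize the tiles, then mix in the variation (a linear mix, like a Mix node)."""
    base = sample_per_tile_offset(baked, u, v)
    mixed = base + (large_scale_variation(u, v) - base) * strength
    return min(1.0, max(0.0, mixed))
```

With `strength=0.0` you get the randomized tiles alone; raising it lets the large-scale variation break up the repetition further, at the cost of moving away from the values in the baked map.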

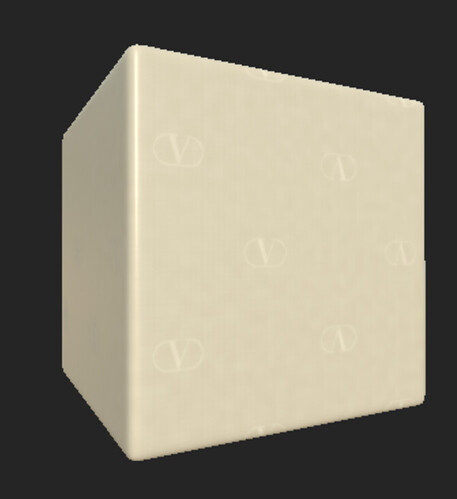

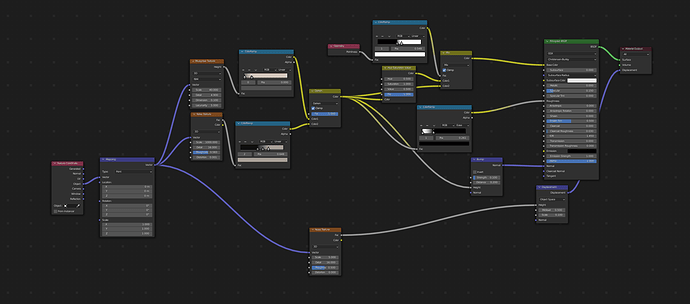

Let me give you an example: recently for a customer I worked on a silk material. In the end, to make things short, the project was changed, so I can share here some things. So, first I created the cloth on Substance Designer. This is the material I have ended up creating using Substance Designer (screenshot from Substance):

Not realistic, right? The things is, all the textures I needed are generated correctly, but it’s not enough. I need a proper shader to simulate with absolute realism the cloth. Let me show you a test render I’ve made with Blender. It uses those exact same textures, but they are now being fed to a properly set up shader:

The render was done as a very quick demo for my own tests, but, as you can see, now realism has been achieved. By using a properly set up shader, I was able to imitate the properties of the cloth in a precise way.

So, what is the conclusion? Well, if you want to achieve “realism”, you have to use both. Actually, you have no choice: you will use shaders either way. But, depending on the use case, I do think that image textures allow for a level of detail (and, if you generate them yourself, a degree of control) that would not be easily achievable by generating those very same maps with shaders. Blender is moving in this direction too, after years of neglecting texturing: from what I have heard, Texture Nodes should become a thing one day.