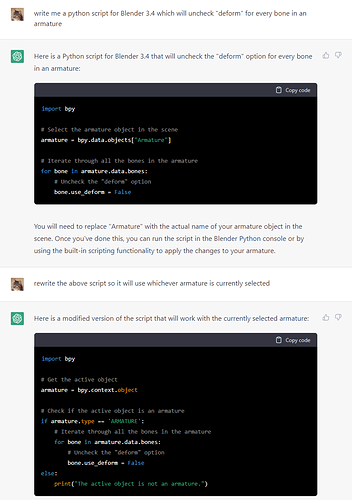

Honestly, it does a lot better than I’d expect. I’ve now had it write three functional scripts: one for random location, one for random color, and the last one is the script discussed in this thread.

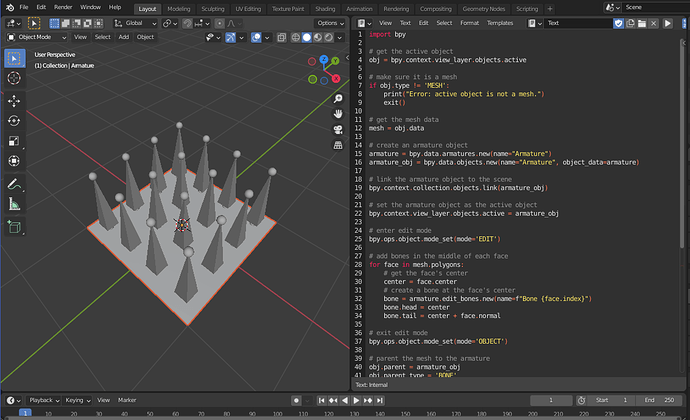

I gave it the prompt “Write a python script for Blender that adds bones in the middle of each face on a plane.”

The first script it gave me didn’t work.

    import bpy

    # get the active object
    obj = bpy.context.view_layer.objects.active

    # make sure it is a mesh
    if obj.type != 'MESH':
        print("Error: active object is not a mesh.")
        exit()

    # get the mesh data
    mesh = obj.data

    # add bones in the middle of each face
    for face in mesh.polygons:
        # get the face's center
        center = face.center

        # create a bone at the face's center
        bone = obj.pose.bones.new(name=f"Bone {face.index}")
        bone.head = center
        bone.tail = center + face.normal

Then, after just three revisions, it gave me a nearly fully functional script.

    import bpy

    # get the active object
    obj = bpy.context.view_layer.objects.active

    # make sure it is a mesh
    if obj.type != 'MESH':
        print("Error: active object is not a mesh.")
        exit()

    # get the mesh data
    mesh = obj.data

    # create an armature object
    armature = bpy.data.armatures.new(name="Armature")
    armature_obj = bpy.data.objects.new(name="Armature", object_data=armature)

    # link the armature object to the scene
    bpy.context.collection.objects.link(armature_obj)

    # set the armature object as the active object
    bpy.context.view_layer.objects.active = armature_obj

    # enter edit mode
    bpy.ops.object.mode_set(mode='EDIT')

    # add bones in the middle of each face
    for face in mesh.polygons:
        # get the face's center
        center = face.center

        # create a bone at the face's center
        bone = armature.edit_bones.new(name=f"Bone {face.index}")
        bone.head = center
        bone.tail = center + face.normal

    # exit edit mode
    bpy.ops.object.mode_set(mode='OBJECT')

    # parent the mesh to the armature
    obj.parent = armature_obj
    obj.parent_type = 'BONE'
    obj.parent_bone = "Bone 0"

    # apply automatic weights to the mesh
    bpy.ops.object.parent_set(type='ARMATURE_AUTO')

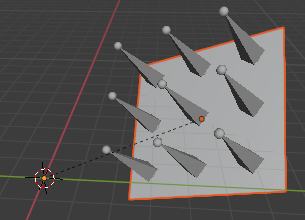

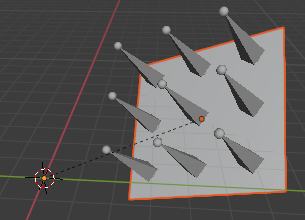

This script of course works for 3D objects as well.

The only issue was that it didn’t account for the transforms on the object itself (rotation, location, and scale). But after just two more revisions it fixed the code.

    import bpy

    # get the active object
    obj = bpy.context.view_layer.objects.active

    # make sure it is a mesh
    if obj.type != 'MESH':
        print("Error: active object is not a mesh.")
        exit()

    # get the mesh data
    mesh = obj.data

    # create an armature object
    armature = bpy.data.armatures.new(name="Armature")
    armature_obj = bpy.data.objects.new(name="Armature", object_data=armature)

    # link the armature object to the scene
    bpy.context.collection.objects.link(armature_obj)

    # set the armature object as the active object
    bpy.context.view_layer.objects.active = armature_obj

    # enter edit mode
    bpy.ops.object.mode_set(mode='EDIT')

    # add bones in the middle of each face
    for face in mesh.polygons:
        # get the face's center in world space
        center = obj.matrix_world @ face.center

        # create a bone at the face's center
        bone = armature.edit_bones.new(name=f"Bone {face.index}")
        bone.head = center
        # rotate/scale the normal with the 3x3 part only (no translation)
        bone.tail = center + obj.matrix_world.to_3x3() @ face.normal

    # exit edit mode
    bpy.ops.object.mode_set(mode='OBJECT')

    # parent the mesh to the armature
    obj.parent = armature_obj
    obj.parent_type = 'BONE'
    obj.parent_bone = "Bone 0"

    # apply automatic weights to the mesh
    bpy.ops.object.parent_set(type='ARMATURE_AUTO')
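
For anyone wondering what the `obj.matrix_world @ face.center` change actually does, here is a rough sketch in plain Python (no `bpy`, so it runs outside Blender). The helper names `transform_point` and `transform_direction` and the example matrix are made up for illustration; they only mimic what the 4x4 multiply and the `to_3x3()` multiply do in the script above. The idea is that a position needs the full matrix, translation included, while a direction like the normal should only be rotated and scaled.

```python
def transform_point(matrix, point):
    """Apply a 4x4 matrix to a 3D point (w = 1), so translation is included."""
    x, y, z = point
    return tuple(
        row[0] * x + row[1] * y + row[2] * z + row[3]
        for row in matrix[:3]
    )


def transform_direction(matrix, direction):
    """Apply only the upper-left 3x3 part, so translation is ignored."""
    x, y, z = direction
    return tuple(
        row[0] * x + row[1] * y + row[2] * z
        for row in matrix[:3]
    )


# Hypothetical object transform: uniform scale of 2, moved to (1, 2, 3)
matrix_world = [
    [2.0, 0.0, 0.0, 1.0],
    [0.0, 2.0, 0.0, 2.0],
    [0.0, 0.0, 2.0, 3.0],
    [0.0, 0.0, 0.0, 1.0],
]

local_center = (1.0, 0.0, 0.0)  # face center in the mesh's local space
local_normal = (0.0, 0.0, 1.0)  # face normal in the mesh's local space

# Bone head: the face center moved into world space
head = transform_point(matrix_world, local_center)

# Bone tail: head plus the rotated/scaled normal
offset = transform_direction(matrix_world, local_normal)
tail = tuple(h + o for h, o in zip(head, offset))

print(head, tail)
```

One caveat: with non-uniform scale, multiplying a normal by the 3x3 part does not keep it perpendicular to the face, so the bones may lean a bit on stretched objects. The sketch ignores that case.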

Not gonna lie… it’s already outsmarted me…

Also, for anyone curious, here are the other two scripts I mentioned.

Random Location

Prompt: “Write a python script for Blender that places all selected objects in a random location.”

Revisions: 0 (worked on the first attempt)

    import bpy
    import random

    # Set the range for the random locations (x, y, z)
    min_range = -10
    max_range = 10

    # Get the list of selected objects
    selected_objects = bpy.context.selected_objects

    # Iterate over the list of selected objects
    for obj in selected_objects:
        # Set the object's location to a random value within the specified range
        obj.location = (random.uniform(min_range, max_range), random.uniform(min_range, max_range), random.uniform(min_range, max_range))

    # Update the scene
    bpy.context.view_layer.update()
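
If you want the same "random" layout every time you run it, one option is to seed the generator. This is just a sketch of that idea in plain Python, not something ChatGPT produced; `random_location` and the seed value are names I made up for illustration.

```python
import random


def random_location(rng, min_range=-10.0, max_range=10.0):
    """Return a random (x, y, z) tuple with each axis inside the given range."""
    return tuple(rng.uniform(min_range, max_range) for _ in range(3))


# A seeded generator gives the same sequence of locations on every run
rng = random.Random(42)
locations = [random_location(rng) for _ in range(3)]

for loc in locations:
    print(loc)
```

Inside Blender you would assign the result in the loop instead of printing it, e.g. `obj.location = random_location(rng)`.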

Random Color

Original Prompt: “Write a python script for Blender that gives all selected objects a material with a random color.”

Revisions: 4

    import bpy
    import random

    # Get the list of selected objects
    selected_objects = bpy.context.selected_objects

    # Iterate over the list of selected objects
    for obj in selected_objects:
        # Remove any existing materials from the object
        obj.data.materials.clear()

        # Create a new material for the object
        mat = bpy.data.materials.new(name="RandomColorMaterial")

        # Set the material's color to a random value with an alpha value of 1
        mat.diffuse_color = (random.uniform(0, 1), random.uniform(0, 1), random.uniform(0, 1), 1)

        # Assign the material to the object
        obj.data.materials.append(mat)

    # Update the scene
    bpy.context.view_layer.update()