This is possibly a slightly unusual question, and apologies if I am posting it in the wrong place; I was a little unclear on where best to put this particular topic.

I am a (hobbyist) developer for a non-commercial, open source computer game (Simutrans-Experimental, a fork of Simutrans). Part of that development work involves creating graphics for the game. The graphics engine is rather old (late 1990s), and has no 3D rendering of any kind: instead, the graphics are sprites. Being a new user, I am not permitted to post any links, but a web search for “Simutrans” should make clear what the graphics look like. (They might well be so old as to be trendy now for being “retro”, but I digress.)

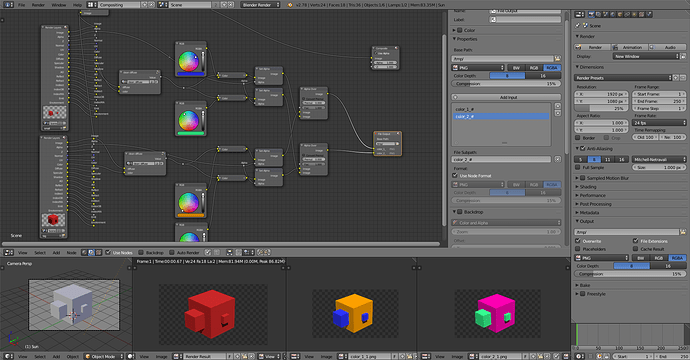

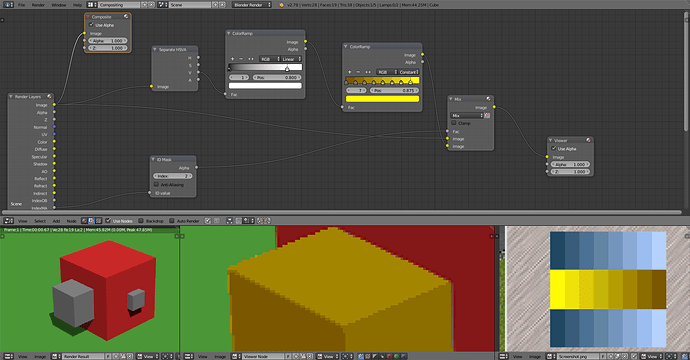

There are different “paksets” for Simutrans (i.e. different sets of graphics and data for the game), and the graphics for each of these paksets are produced using different workflows. The pakset on which I work uses Blender as an integral part of its workflow: using a standardised lighting and camera setup, the basic Blender rendering engine and a script which rotates the camera a specific number of times (4 for buildings, 8 for vehicles), the correct 128x128 pixel images can be generated automatically from the Blender scene. Because the graphics are very low-fi, the Blender models are extremely simple and crude, but this has the advantage of allowing a very small number of people to produce a good number of them in a relatively short time. Speed of production and automation of workflow are therefore very important. A recent change to the Simutrans code, allowing alpha blending using an alpha transparency channel in a .png file, has made this process even quicker than it used to be: no post-processing of the graphics is required at all once they are rendered from Blender.
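To give a rough idea of what the rotation script does, here is a minimal sketch in plain Python rather than the Blender API. It is not the actual script: the function name `camera_positions` and the `radius` and `height` values are made up for illustration. It only shows the idea of placing the camera at evenly spaced angles around the model (4 views for buildings, 8 for vehicles).

```python
import math


def camera_positions(n_views, radius=10.0, height=8.0):
    """Return n_views (x, y, z) camera positions evenly spaced around the z axis.

    radius and height are arbitrary illustrative values, not the pakset's
    real camera setup.
    """
    positions = []
    for k in range(n_views):
        angle = 2 * math.pi * k / n_views  # equal angular steps around the model
        positions.append((radius * math.cos(angle),
                          radius * math.sin(angle),
                          height))
    return positions


building_views = camera_positions(4)  # one render per 90 degrees
vehicle_views = camera_positions(8)   # one render per 45 degrees
```

In the real workflow each of these positions would correspond to one rendered 128x128 image.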

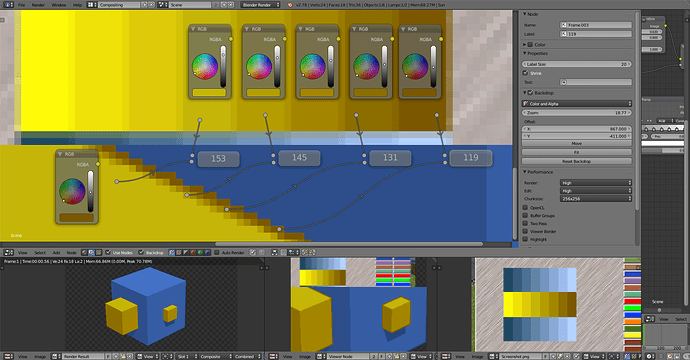

That is all by way of background. The real issue is this: Simutrans has a feature, similar to that found in other transport games of the era, in which players can choose a pair of colours as their transport company’s corporate colours (a primary and a secondary colour), and those colours will automatically be applied to objects in the world using a colour substitution system: any pixel with one of about 12 specific values (if I recall correctly, six shades of a specific hue of cyan and six shades of a specific hue of yellow) will be replaced by the equivalent shade of the corresponding player colour.
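As I understand it, the substitution amounts to an exact-match lookup on pixel values. The following is only a sketch of that idea in plain Python: the key colours in `PRIMARY_KEYS` and `SECONDARY_KEYS` are placeholders, not the real Simutrans values, and `make_shades` is a hypothetical way of deriving six shades from a player's chosen colour.

```python
# Placeholder key colours: NOT the real Simutrans values, just six shades each.
PRIMARY_KEYS = [(0, 40 + 40 * i, 40 + 40 * i) for i in range(6)]    # cyan-ish
SECONDARY_KEYS = [(40 + 40 * i, 40 + 40 * i, 0) for i in range(6)]  # yellow-ish


def make_shades(base, steps=6):
    """Build `steps` shades of an RGB colour, darkest first (hypothetical ramp)."""
    return [tuple(c * (i + 1) // steps for c in base) for i in range(steps)]


def substitute(pixels, primary, secondary):
    """Replace exact key-colour matches; leave every other pixel untouched."""
    table = dict(zip(PRIMARY_KEYS, make_shades(primary)))
    table.update(zip(SECONDARY_KEYS, make_shades(secondary)))
    return [table.get(p, p) for p in pixels]


# A key pixel is replaced; a pixel one unit off the key value is not.
result = substitute([PRIMARY_KEYS[5], (0, 239, 240)], (255, 0, 0), (0, 255, 0))
```

The important property is that only pixels matching a key value exactly are touched, which is why the rendered output would have to land precisely on those values.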

However, this system was designed for hand-drawn graphics, not graphics produced by Blender. The pakset on which I work has only implemented this system in a very limited way (and, importantly, not at all for vehicles where it might be most useful) because making any use of it requires extremely labour-intensive pixel editing of the .png files after they have been rendered. Because of the very large number of vehicles, it is impractical to use the player colour system for vehicles in this particular pakset.

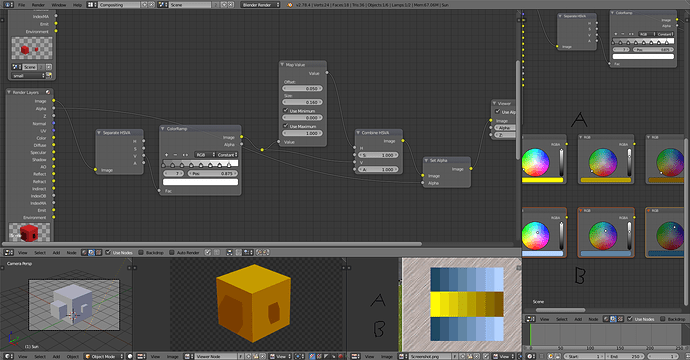

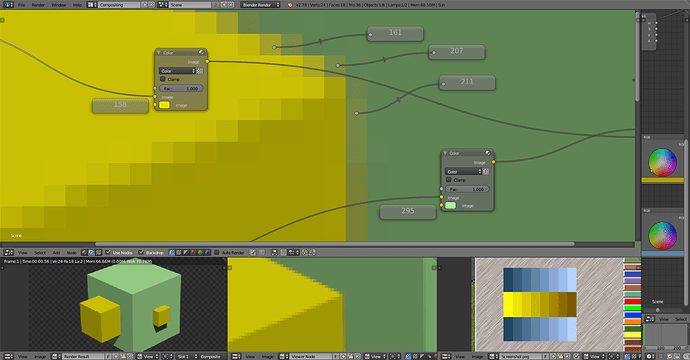

I was wondering whether there is any possible way of automating this process in Blender either with nodes or scripting, by having a special material used for the primary and secondary player colours which would ensure that the output colours are always in the range of the standard pixel values.

The real difficulty that I anticipate with this (and it is a difficulty which might well, I appreciate, not be surmountable, but I thought that it was worth asking the experts in case I am wrong about that) is that the graphics all make full use of anti-aliasing. Obviously, it would be no good if the special player colours were to blend with neighbouring colours at the edges: the blended pixels would no longer match any of the standard values, so the game would not substitute them, leaving an unnatural fringe of the original cyan or yellow between the substituted player colour and the surrounding, non-substituted colours. Can anyone think of a way of circumventing this without disabling anti-aliasing (which would reduce the quality of the graphics so much as to be unacceptable)?
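To illustrate the problem with numbers, here is a small sketch in plain Python. The key shades are again placeholders rather than the real Simutrans values, and `blend` is a simple linear mix that I am assuming is a fair approximation of what an anti-aliased edge pixel ends up as; Blender's actual filtering may well differ.

```python
# Placeholder key shades (NOT the real Simutrans values).
KEY_SHADES = {(0, 40 + 40 * i, 40 + 40 * i) for i in range(6)}


def blend(fg, bg, coverage):
    """Linear mix of two RGB colours, roughly what an anti-aliased edge pixel is."""
    return tuple(round(coverage * f + (1 - coverage) * b) for f, b in zip(fg, bg))


interior = blend((0, 240, 240), (200, 30, 30), 1.0)  # fully covered pixel
edge = blend((0, 240, 240), (200, 30, 30), 0.5)      # half-covered edge pixel

print(interior, interior in KEY_SHADES)
print(edge, edge in KEY_SHADES)
```

The fully covered pixel is still a key shade and would be substituted, whereas the half-covered edge pixel is not, and so would keep its cyan tint in the game.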

I should be grateful for anyone’s views on the point.