Hey there geniuses!

Advice welcomed though professional expertise needed…

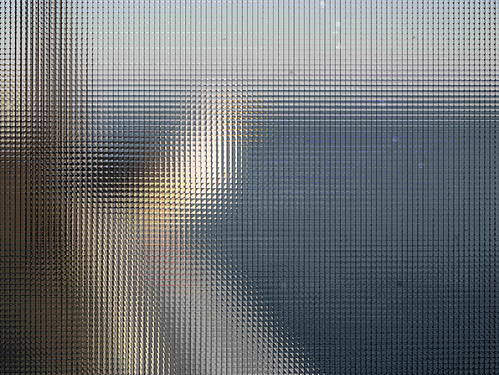

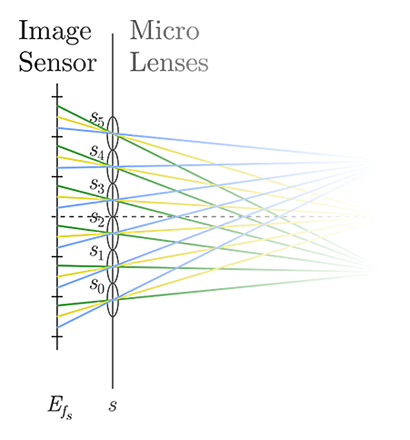

I’ve been tasked with building a piece of software that processes “plenoptic/light-field camera” data. Put simply, in real life this means we’d add a “microlens array” on top of our camera’s sensor to take unique pictures. A microlens array is a clear piece of glass with little bumps on it (like the eyes of a housefly), and there are a number of different ways we can arrange these bumps.

What I want to do is create a Blender file that has a virtual camera with a microlens array attached to it. With a few clicks, you should be able to swap out a range of different microlens configurations and environments to photograph.
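To give a rough idea of what I mean by "different configurations", here is a minimal sketch in plain Python (no Blender API involved) that computes lenslet centre positions for two common arrangements. The function name `lenslet_centers`, its parameters, and the choice of square vs. hexagonal layouts are my own illustrative assumptions, not a spec; the real arrays may differ.

```python
import math


def lenslet_centers(rows, cols, pitch, layout="square"):
    """Return (x, y) lenslet centres, shifted so their centroid is the origin.

    Hypothetical helper for illustration only:
    - pitch is the centre-to-centre spacing within a row
    - layout is "square" (aligned rows) or "hex" (offset rows)
    """
    if layout not in ("square", "hex"):
        raise ValueError("layout must be 'square' or 'hex'")

    # Hexagonal packing squeezes rows closer together by sqrt(3)/2.
    row_step = pitch if layout == "square" else pitch * math.sqrt(3) / 2

    centers = []
    for r in range(rows):
        # In the hex layout every other row is shifted by half a pitch.
        x_shift = pitch / 2 if (layout == "hex" and r % 2 == 1) else 0.0
        for c in range(cols):
            centers.append((c * pitch + x_shift, r * row_step))

    # Re-centre the grid so it sits symmetrically over the sensor.
    cx = sum(x for x, _ in centers) / len(centers)
    cy = sum(y for _, y in centers) / len(centers)
    return [(x - cx, y - cy) for x, y in centers]
```

In a Blender script, each returned position could then be used to place one lenslet object in front of the sensor, so swapping the `layout` argument would swap the array.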

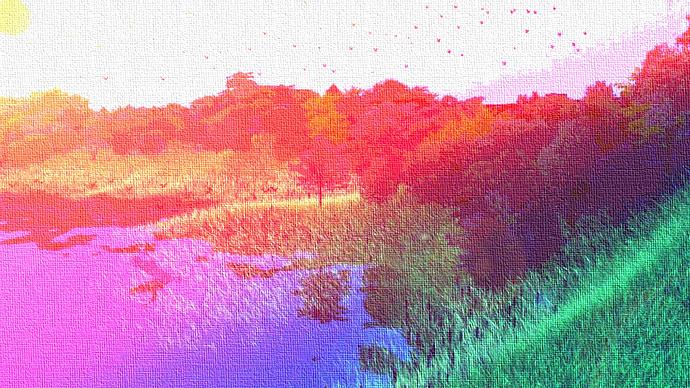

The photos taken by the virtual camera must be perfectly photorealistic and mimic a real light-field dataset closely enough to be interpreted by my software. All resources here.

Who can best help:

Someone who understands how light and cameras work in Cycles inside and out. We can’t afford to get a dataset that is only kinda close; it needs to be indistinguishable from one captured by a real plenoptic camera. I’ve thought to use Blender because I’ve built large photographic datasets using it in the past with good results.

If you think you can help:

Send me a message and we can arrange a deal. We will start with a one-hour consultation to discuss the problem and your possible approaches. From there we’ll hopefully finalise a solution for mass-acquisition of data within a week.

Please reach out with any questions.

Tom