Has anyone tried it so far?

Parallax mapping is performed at shader level, so it relies entirely on the GPU.

I thought (and daedalus confirmed it) that the GPU was completely unused by Range/UPBGE/BGE. I've never seen any demo or announcement promoting the use of any graphics card whatsoever since BGE 1.0.

MattFrnndz is right! Shaders put their load on the GPU. The CPU prepares the data sent to the shaders, but the cost of a shader's complexity is paid by the GPU. The material panel and the shader node editor generate GLSL code, which is compiled by the graphics driver and then executed by the GPU, not by the CPU.

I remember that in Blender 2.79 only Cycles could use the GPU, so Blender Internal ran only on the CPU (in both Multitexture and GLSL mode). Since Range is based on 2.79, I really wonder why this would be any different.

And if it is different, is there any proof or showcase of the performance difference? And even if there is a difference, how do we activate the GPU, and how do we know whether our GPU is compatible with Range?

There's too much mystery around this. Wouldn't it be cool to simply see a BGE game running with and without the GPU activated? Because I feel there's no real difference.

Wait, wait, there's nothing mystical about it, and it's all very clear and verifiable!

Offline rendering engines use the CPU: Blender Render (in 2.79b and earlier) and Cycles.

(Cycles also has the option to use the GPU instead of the CPU.)

The BGE/UPBGE real-time rendering engine (and therefore also the 3D viewport) depends on the GPU.

If you have a computer with two graphics cards, you can easily verify what I'm telling you.

All you need is a notebook with an Intel processor and a dedicated GPU (like the one I usually work on).

Most Intel processors have an integrated graphics card (iGPU), not a particularly powerful one, and the OS lets you choose whether to launch an application (Blender, for example) on the iGPU or on the dedicated GPU (Nvidia/AMD).

When I write shaders in GLSL or prototype them with the shader node editor, I usually test them on both cards to see how the shader runs even on the weak one (the Intel GPU).

With complex shaders, or many 2D filters, the fps changes depending on which GPU is used, and this alone shows that real-time rendering is a GPU task, not a CPU task.

Every computer has a video card, at least the one integrated into the processor.

But an iGPU (integrated video card) is still a GPU!

If you hear that old versions of Blender do not require a video card... well, that's a poorly worded phrase. It should be understood this way:

Older versions of Blender (2.79b and earlier) used OpenGL up to version 2.1.

These OpenGL versions are now quite old, and integrated video cards have supported them for many years. In other words, Blender 2.79b/UPBGE 0.2.5 can run on a PC that does not have a dedicated video card.

But in that case the real-time graphics are handled by the integrated GPU, not by the CPU.

GLSL code does not run on the CPU!
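If you want to check whether a card meets that OpenGL 2.1 floor, you only need the major and minor numbers at the start of the reported OpenGL version string. Here's a minimal sketch, assuming the string follows the usual "major.minor[.release] vendor-info" layout; the helper names and the sample strings are hypothetical, made up for illustration.

```python
import re

# Minimum OpenGL version mentioned above for Blender <= 2.79b / UPBGE 0.2.5.
MIN_GL = (2, 1)


def parse_gl_version(version_string):
    """Hypothetical helper: extract (major, minor) from e.g. '4.6.0 NVIDIA 456.71'."""
    match = re.match(r"\s*(\d+)\.(\d+)", version_string)
    if match is None:
        raise ValueError(f"unrecognized OpenGL version string: {version_string!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version_string, minimum=MIN_GL):
    # Tuple comparison orders versions correctly: (4, 6) > (2, 1) and (2, 0) < (2, 1).
    return parse_gl_version(version_string) >= minimum


if __name__ == "__main__":
    # Made-up sample strings, only to show the comparison.
    for reported in ["4.6.0 NVIDIA 456.71", "2.1 Mesa 10.0", "1.4.0"]:
        print(reported, "->", meets_minimum(reported))
```

Comparing tuples instead of the raw strings avoids the classic trap where "10.0" sorts before "2.1" as text.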

Well, I would be happy to see someone post a BGE game benchmark with their GTX 1050 on vs. off. I fear there's not much difference, but I would be very happy to be wrong on this.

No problem, buddy, then get ready to be surprised! Here are two quick screenshots at almost 1080p resolution (almost, because there are bars at the top):

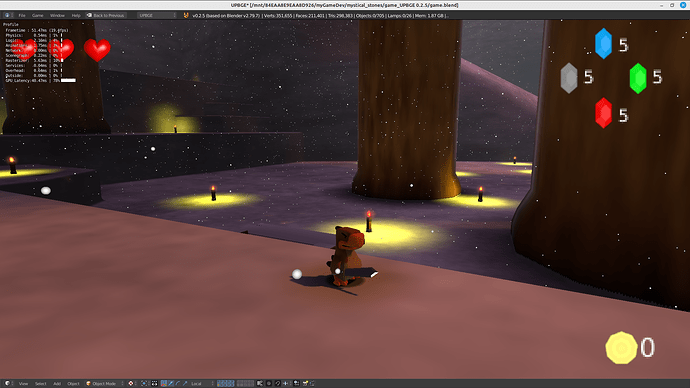

This is the prototype of my game, where all the shaders are built with the node editor (apart from the one for the snow particles), and there are no 2D filters!

The first image was taken with UPBGE 0.2.5 running on my notebook's iGPU: around 20 fps.

The second image was taken, again with UPBGE 0.2.5, running on my notebook's GTX 1050 Ti: 111 fps.

I would say that's quite a difference!

As you can see, the iGPU struggles a lot, especially at fairly high resolutions.
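To put those two numbers in perspective, fps can be converted into time spent per frame. This is a minimal sketch using only the figures reported above (the iGPU one is approximate); it's a single scene on a single notebook, not a benchmark suite.

```python
def frame_time_ms(fps):
    """Average time spent rendering one frame, in milliseconds."""
    return 1000.0 / fps


igpu_fps = 20   # reported above for the iGPU (approximate)
dgpu_fps = 111  # reported above for the GTX 1050 Ti

print(f"iGPU: {frame_time_ms(igpu_fps):.1f} ms per frame")
print(f"GTX 1050 Ti: {frame_time_ms(dgpu_fps):.1f} ms per frame")
print(f"speedup: {dgpu_fps / igpu_fps:.2f}x")
```

Frame time is often the more useful view: a 60 fps target leaves about 16.7 ms per frame, which the GTX 1050 Ti fits comfortably in this scene and the iGPU does not.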

PS: Don't pay attention to the scene; it's a temporary one used to test a few things (as you can guess from all those randomly placed lamps).

I hope I convinced you buddy!

Nice. So there's nothing to activate to make UPBGE/Range use the GPU? And another question: how can I know whether a Ryzen 7 5800G (APU) will accelerate things in the Range engine?

You don't have to activate anything in UPBGE! If your PC has only one GPU, you don't need to do anything: when you launch UPBGE, your GPU is used. If your PC has two GPUs, integrated and dedicated, you can choose which one UPBGE runs on.

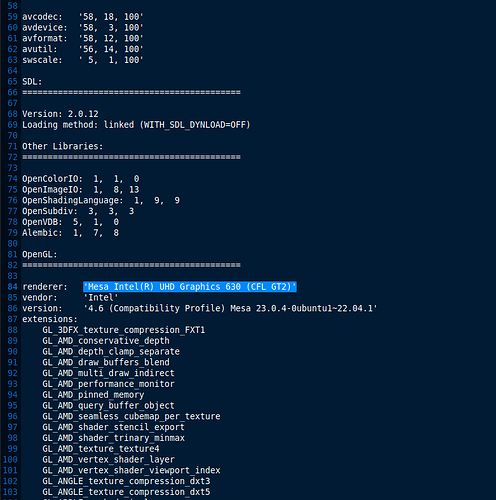

To check which video card UPBGE is running on, launch it, save the system info (Help > Save System Info) and look at the renderer line:

That is my iGPU.

If I instead launch UPBGE on the dedicated GPU and save the system info, I get this:

That is the GTX 1050 Ti in my notebook.

And as you can read below, both the dGPU and the iGPU support OpenGL up to the latest version available, 4.6.
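If you'd rather not read through the whole saved file by eye, here's a minimal Python sketch that pulls the renderer, vendor and version values out of it. It assumes the file has a section heading starting with "OpenGL" followed by lines like `renderer: 'GeForce GTX 1050 Ti/PCIe/SSE2'`; that layout is an assumption and may differ between Blender/UPBGE builds, and the sample text below is made up for illustration.

```python
import re

# Assumed layout: an "OpenGL" section heading, then lines such as
#   renderer:	'GeForce GTX 1050 Ti/PCIe/SSE2'
# Adjust the pattern if your build writes the file differently.
GL_LINE = re.compile(r"^(renderer|vendor|version):\s*(.+?)\s*$")


def read_gl_info(text):
    """Return the renderer/vendor/version values found in the OpenGL section."""
    info = {}
    in_gl_section = False
    for line in text.splitlines():
        if line.strip().startswith("OpenGL"):
            # Lines before this heading (e.g. Blender's own "version:") are skipped.
            in_gl_section = True
            continue
        if not in_gl_section:
            continue
        match = GL_LINE.match(line.strip())
        if match and match.group(1) not in info:
            # Values may be written with surrounding quotes, so drop them.
            info[match.group(1)] = match.group(2).strip("'\"")
    return info


# Made-up sample of what a saved system-info file might contain.
SAMPLE = """Blender:
version: 2.79 (sub 0), branch: master
OpenGL:
renderer:\t'GeForce GTX 1050 Ti/PCIe/SSE2'
vendor:\t\t'NVIDIA Corporation'
version:\t'4.6.0 NVIDIA'
"""

if __name__ == "__main__":
    for key, value in read_gl_info(SAMPLE).items():
        print(f"{key}: {value}")
```

To use it on a real file, read the saved text with `open(path, encoding="utf-8").read()` and pass it to `read_gl_info`. If the renderer names your dedicated card, UPBGE is running on it.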