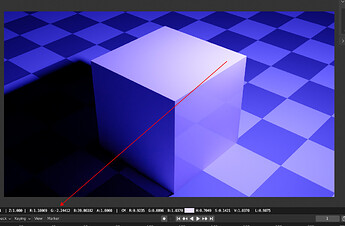

About denoising: when does the image get denoised in Blender, before or after tonemapping? And if you think outside the box, in Resolve for example, you can place a denoise node anywhere you want in the node chain (before or after tonemapping).

Not sure about open domain, but the virtual light in front of the camera somehow makes sense.

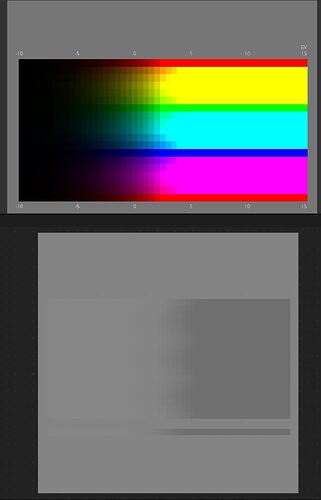

I think that, besides the selected gamut and white point, the most important value in this whole tonemapping topic is the neutral gamma grey of the display device, with the EV0 grey in the tonemapping as the anchor point.

If the midpoint doesn't fit, everything goes wrong, because the midtones around this neutral grey (skin tones, grass, plants, etc.) are the most important.
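To make the anchor idea concrete, here is a minimal sketch. It assumes a scene-linear middle grey of 0.18 at EV0, a plain gamma 2.2 display, and a generic Reinhard-style curve. None of this is Blender's actual view transform; the function names are made up for illustration.

```python
MIDDLE_GREY = 0.18  # assumed scene-linear middle grey at EV0


def expose(value, ev):
    """Shift a scene-linear value by `ev` stops."""
    return value * 2.0 ** ev


def anchored_curve(x, anchor_in=MIDDLE_GREY, anchor_out=MIDDLE_GREY):
    """Reinhard-style curve x / (x + k), with k chosen so that
    anchor_in maps exactly to anchor_out (the grey anchor point)."""
    k = anchor_in * (1.0 - anchor_out) / anchor_out
    return x / (x + k)


def gamma_encode(linear, gamma=2.2):
    """Encode display-linear light for an assumed pure-gamma display."""
    return max(linear, 0.0) ** (1.0 / gamma)


if __name__ == "__main__":
    # Walk a few stops around EV0 and show where they land on the display.
    for ev in (-2, -1, 0, 1, 2):
        scene = expose(MIDDLE_GREY, ev)
        display_linear = anchored_curve(scene)
        print(ev, round(display_linear, 4), round(gamma_encode(display_linear), 4))
```

At EV0 the curve returns the anchor value unchanged, and the stops above and below are compressed around it. If `anchor_out` is moved, all the midtones move with it, which is why the midpoint matters so much.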

This is a good example for the retina tonemapping paper I posted on devtalk. I made some tests with some formulas from this paper in compositing with the original HDRI. I think this works very well.

But instead of denoising, it does a cone-based light compression or lifting, based on light strength and cone-based Gaussian sharpness.

As a side topic: monitor or TV calibration. I have read about this on the net, and all display brands have the same problem with HDR footage: how the footage is mastered versus the max nits the device can display.

Even if you can display, say, 1000 nits, you may only want 600 nits because the footage is mastered for that level. Or if you have an OLED that can only display, say, 500 nits but the footage is mastered at 600, you get the idea.

And the 100 nits reference for SDR looks way too low to me.
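A rough sketch of that mismatch, using the numbers above. The function name and the simple linear knee are assumptions for illustration only; real TVs use their own (usually undocumented) roll-off curves.

```python
def fit_to_display(nits, mastering_peak, display_peak, knee=0.75):
    """Map a mastered luminance (in nits) onto a display's range.

    Hypothetical roll-off: values below the knee pass through unchanged,
    values above it are squeezed linearly so that the mastering peak
    lands exactly on the display peak.
    """
    if mastering_peak <= display_peak:
        # The display can show everything the grade contains: no change.
        return min(nits, display_peak)
    knee_start = knee * display_peak
    if nits <= knee_start:
        return nits
    t = (min(nits, mastering_peak) - knee_start) / (mastering_peak - knee_start)
    return knee_start + t * (display_peak - knee_start)


if __name__ == "__main__":
    # 600-nit master on a 500-nit OLED versus a 1000-nit panel.
    for value in (100, 400, 600):
        print(value, fit_to_display(value, 600, 500), fit_to_display(value, 600, 1000))
```

On the 1000-nit panel nothing changes, so the extra headroom is simply unused. On the 500-nit panel only the highlights above the knee get compressed, and the midtones stay where they were mastered.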

And to come back to the topic: as I said, the neutral gamma grey of the display device seems to me the key point here, as the grey anchor point for tonemapping.

What about Dolby Vision? The Perceptual Quantizer (PQ) seems to do a good job?
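For reference, the PQ curve itself (SMPTE ST 2084, the transfer function Dolby Vision is built on) is easy to try out. This sketch only covers the transfer function with the published constants. It does not model Dolby Vision's dynamic metadata or any display mapping, and the function names are my own.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK = 10000.0  # nits represented by a signal value of 1.0


def pq_encode(nits):
    """Inverse EOTF: absolute luminance in nits -> PQ signal in [0, 1]."""
    y = max(nits, 0.0) / PQ_PEAK
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_decode(signal):
    """EOTF: PQ signal in [0, 1] -> absolute luminance in nits."""
    e = max(signal, 0.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return PQ_PEAK * y


if __name__ == "__main__":
    for nits in (100, 500, 600, 1000, 10000):
        print(nits, round(pq_encode(nits), 4))
```

Note how 100 nits already sits at roughly half of the signal range, so most of the code values are spent on the dark and mid range where the eye is most sensitive.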