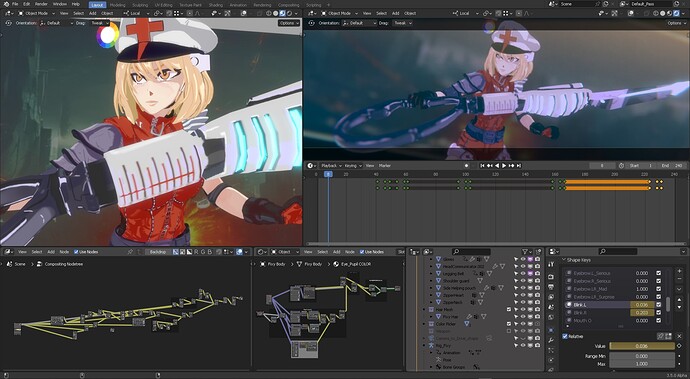

Hello, I wanted to share the progress I'm making with the Realtime Compositor in Blender 3.5, aiming for a retro anime look and feel with my original character “Fixy”.

For the past 3 weeks I’ve been working with the Realtime Compositor in Blender, trying to achieve a stylized anime look for “Fixy” (OC). Here’s a capture taken directly from the Blender 3.5 Realtime Compositor.

I work with an Nvidia Titan X (GTX 1080 generation) card. I imagine people with RTX-series cards will get even faster and better results.

Highlights:

-

Alpha doing “light wrap” over the pre-rendered background in an image sequence (otherwise, you can’t light wrap in realtime animation (not until we get Render Layers, or Shader to AOV in realtime)

-

RGB channels can be split, blurred and grained individually. They work well in this proof of concept for fringing edges. To make it better: Posterize and Sharpen must be added in the compositor tree

-

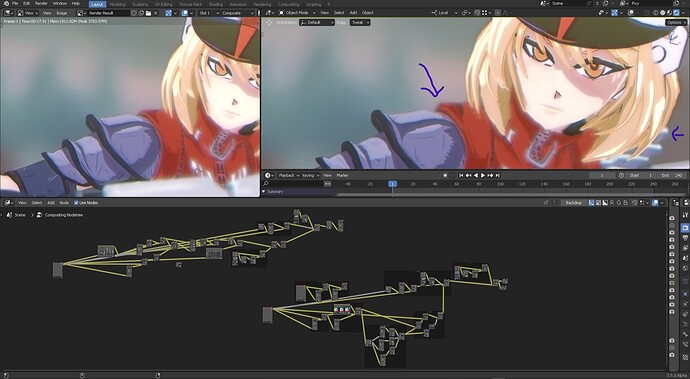

Screen space effects affects how you “grain” and how you “blur”. It looks fantastic at a certain zoom level in the camera viewport, but when rendered, the blur or highlights can be lost. Calculate based on final render.

-

Render layers are an absolutely must, to separate lights and shadows in the realtime compositor. I believe that would be the definitive step to creating high quality stylized anime in Blender 3.5+
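To make the channel-split and screen-space points a bit more concrete, here is a minimal Python sketch of the idea outside Blender. It is not the node setup used here: it only shows shifting the red and blue channels in opposite directions to fringe the edges (no blur or grain), with the shift given as a fraction of the image width so it scales with the final render resolution instead of the viewport zoom. The function names and the 0.002 fraction are hypothetical, and images are plain lists of rows of (r, g, b) tuples.

```python
# Hypothetical sketch: per-channel edge fringing with a resolution-independent offset.
# Images are plain Python lists: a list of rows, each row a list of (r, g, b) tuples.


def fringe_offset_px(fraction, render_width):
    """Convert an offset given as a fraction of the image width into whole pixels.

    Defining the offset relative to the final render width (rather than in
    viewport pixels) keeps the fringe the same relative size at any resolution.
    """
    return max(0, round(fraction * render_width))


def shift_row(values, dx):
    """Shift one row of channel values right by dx pixels (left if dx < 0),
    repeating the border value where the shift runs off the edge."""
    n = len(values)
    return [values[min(max(x - dx, 0), n - 1)] for x in range(n)]


def channel_fringe(rgb_rows, dx):
    """Split the image into R, G and B, move R right and B left by dx pixels,
    leave G in place, and recombine into (r, g, b) tuples."""
    out = []
    for row in rgb_rows:
        r = shift_row([p[0] for p in row], dx)
        g = [p[1] for p in row]
        b = shift_row([p[2] for p in row], -dx)
        out.append(list(zip(r, g, b)))
    return out


# Same relative setting at two render widths.
dx_full = fringe_offset_px(0.002, 1920)  # 4 px at 1920 wide
dx_half = fringe_offset_px(0.002, 960)   # 2 px at 960 wide

# A single white pixel splits into blue (left), green (centre) and red (right).
row = [(0, 0, 0), (0, 0, 0), (1, 1, 1), (0, 0, 0), (0, 0, 0)]
print(channel_fringe([row], 1))
# [[(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 0, 0), (0, 0, 0)]]
```

Because the offset is stored relative to the width, halving the render resolution halves the pixel shift, so the fringe keeps the same look in the final render as in a scaled preview.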

Blender 3.5 Realtime compositor:

After Effects compositing and effects:

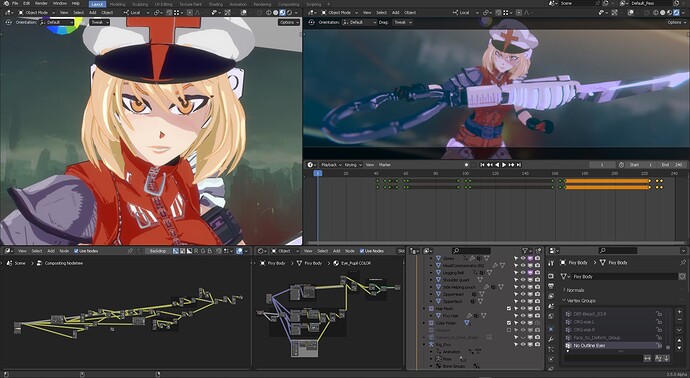

This is how it looks in the Blender 3.5 viewport with the Realtime Compositor set to Camera (top-left editor):

This is a short camera test (not aiming for a full animation; it was a test of the light wrap mentioned above):

The compositing tree: notice how the alpha edges are mixed with the background, a necessary step when doing a light wrap for characters.
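The light wrap step can be sketched the same way. This is a common generic formulation, not a copy of the node tree above: blur the alpha, take the inner edge of the matte as alpha * (1 - blurred alpha), multiply that edge by a blurred copy of the background, and add it on top of the regular alpha-over composite. It is a minimal single-channel (grayscale) Python sketch assuming straight alpha; the box blur stands in for a Blur node, and `light_wrap`, `radius` and `strength` are hypothetical names.

```python
# Hypothetical single-channel (grayscale) light wrap sketch.
# fg, alpha and bg are images stored as lists of rows of floats in 0..1.


def box_blur(img, radius):
    """Average each pixel with its neighbours in a (2*radius+1) square window,
    shrinking the window at the image borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def light_wrap(fg, alpha, bg, radius=2, strength=1.0):
    """Alpha-over fg on bg, then add blurred background light along the
    inner edge of the foreground matte."""
    blurred_alpha = box_blur(alpha, radius)
    blurred_bg = box_blur(bg, radius)
    out = []
    for y in range(len(fg)):
        row = []
        for x in range(len(fg[0])):
            a = alpha[y][x]
            # Regular alpha-over composite (straight, not premultiplied, alpha).
            comp = fg[y][x] * a + bg[y][x] * (1.0 - a)
            # Inner edge mask: high inside the matte but close to its border.
            edge = a * (1.0 - blurred_alpha[y][x])
            # Background light that "wraps" onto the character's edge.
            wrap = blurred_bg[y][x] * edge * strength
            row.append(min(1.0, comp + wrap))
        out.append(row)
    return out


# A black character (alpha = 1 in the middle) over a white background.
fg = [[0.0] * 9]
alpha = [[0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0]]
bg = [[1.0] * 9]
result = light_wrap(fg, alpha, bg, radius=1)
print([round(v, 3) for v in result[0]])
# [1.0, 1.0, 0.333, 0.0, 0.0, 0.0, 0.333, 1.0, 1.0]
```

Pixels deep inside the character get no wrap, because their blurred alpha stays at 1.0; only the pixels along the matte edge pick up light from the background.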

You may also notice two different compositing trees: I experimented with different filters for the anime look.

As a fellow compositor used to say when I asked “Why so many nodes?”: “Nodes are free.”

I have also been exploring Stable Diffusion with Blender, using Blender to provide depth information generated from the 3D scene. So far, I’ve gotten these results with Stable Diffusion 2.1 and a custom-trained 1.4 CKPT model.
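On the depth side, a Z pass usually has to be remapped into a 0..1 image before it can serve as a depth map. Below is a minimal sketch of a simple linear remap with near = white and far = black, assuming the target model expects that convention (MiDaS-style depth maps are bright in the foreground). It is not true inverse depth, and `depth_to_map` and `far_clip` are hypothetical names; clipping at `far_clip` keeps the very large values an empty background may produce from squashing the rest of the range.

```python
# Hypothetical linear remap of Z-pass distances to a 0..1 depth map
# (near = 1.0 / white, far = 0.0 / black).


def depth_to_map(z_values, far_clip):
    """Clip distances at far_clip, then map the nearest value to 1.0 and far_clip to 0.0."""
    # Clipping stops huge "empty background" depths from squashing the useful range.
    clipped = [min(z, far_clip) for z in z_values]
    near = min(clipped)
    span = far_clip - near
    if span <= 0:
        # Everything sits at the same distance: treat it all as near.
        return [1.0 for _ in clipped]
    return [(far_clip - z) / span for z in clipped]


print(depth_to_map([2.0, 6.0, 10.0, 1e10], far_clip=10.0))  # [1.0, 0.5, 0.0, 0.0]
```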

I want to share how cool it is to use AI on your OWN artwork. It’s a world-changing perspective.

Let me know if you want to see more experiments with this model.

Thanks!