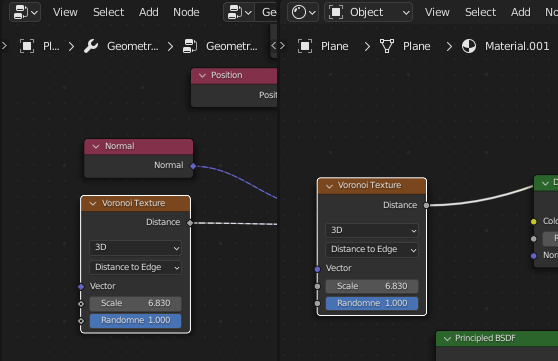

Neat, good to know. I’m sometimes a bit paranoid about capturing attributes. I don’t always find it obvious when I can trust them to do what I want them to…

I’m not really sure where to start with a step-by-step, so I’ll limit myself to answering your concrete questions. I hope I can manage at least that.

The 0.25

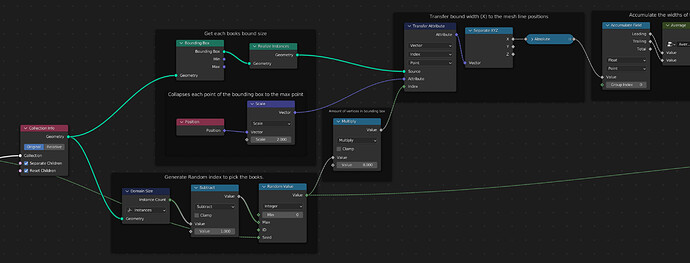

The calculation of the bounding box size assumes that the origin of each book object does not lie outside the object’s bounds.

With that in mind, we know that the absolute coordinates of two diagonally opposed vertices add up to the object’s bounding box size. The bounding box mesh whose vertex positions we’re accumulating has 8 vertices, i.e. 4 pairs of diagonally opposed vertices. So the result of the accumulate node is 4 times the size of the actual bounding box, and multiplying by 0.25 brings it back to the real size.
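If it helps to see the 0.25 outside of the node tree, here is a small sketch in plain Python (not Blender’s API). The box values and the helper names `box_corners` and `accumulated_abs_sum` are made up for illustration. It sums the absolute corner coordinates per axis, once for a box with the origin inside the bounds and once with the origin outside:

```python
from itertools import product

def box_corners(bb_min, bb_max):
    """Return the 8 corner points of an axis-aligned box."""
    return list(product(*zip(bb_min, bb_max)))

def accumulated_abs_sum(points):
    """Sum the absolute coordinates of all points, per axis."""
    return tuple(sum(abs(p[axis]) for p in points) for axis in range(3))

# Hypothetical book whose origin (0, 0, 0) lies inside its bounds.
bb_min, bb_max = (-0.5, -0.25, 0.0), (1.5, 0.75, 2.0)
total = accumulated_abs_sum(box_corners(bb_min, bb_max))
size = tuple(0.25 * t for t in total)
print(size)  # (2.0, 1.0, 2.0), the real dimensions

# Same idea, but the origin lies outside the bounds on x (box spans x = 1..3).
off_total = accumulated_abs_sum(box_corners((1.0, 0.0, 0.0), (3.0, 1.0, 1.0)))
off_size = tuple(0.25 * t for t in off_total)
print(off_size)  # (4.0, 1.0, 1.0), x is wrong, the real x size is 2.0
```

The second case is exactly why the trick depends on the origin not being outside the bounds.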

Multiplying the random value by 8

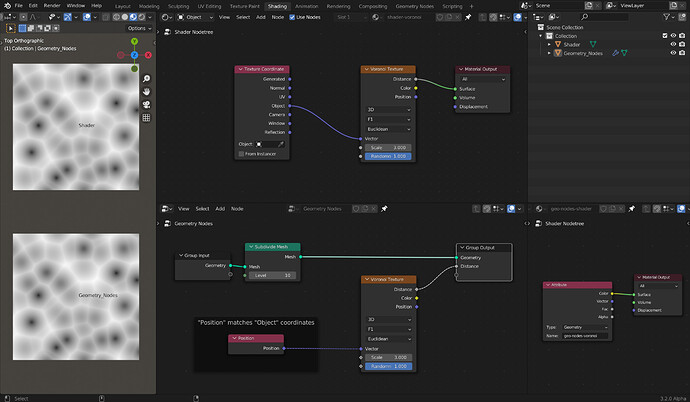

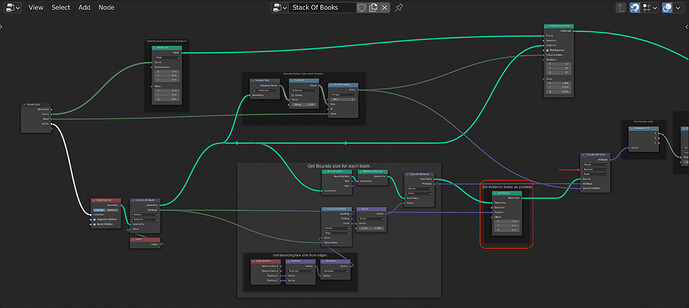

Our goal now is to get the bounding box size from the realized instances back to the actual book instances. The realized instances are one large mesh, and there is no domain that neatly translates between the mesh and the instances.

What we do know is that each point in the mesh has the size of the bounding box it belonged to stored on it (that’s what the Capture Attribute node at the end of the “Get each books bound size” frame does), and we can retrieve it with the Transfer Attribute node. Fortunately, we know that each bounding box mesh had exactly 8 vertices. So index 0 refers to the first point of what used to be the first instance’s (instance index 0) bounding box, index 8 refers to the first point of what used to be the second instance’s (instance index 1) bounding box, and so on.

This only works out assuming the Realize Instances node doesn’t shuffle things around, which it doesn’t appear to do.
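As a rough sketch of that index arithmetic in plain Python (not Blender’s API): the `sizes` list and the helper `first_vertex_index` are hypothetical, and the whole thing assumes the realized mesh keeps the instance order.

```python
VERTS_PER_BOX = 8  # every bounding box mesh has exactly 8 vertices

def first_vertex_index(instance_index):
    """Index of the first realized point that came from the given instance."""
    return instance_index * VERTS_PER_BOX

# Hypothetical captured bounding box sizes, one per book instance.
sizes = [(2.0, 1.0, 2.0), (1.0, 0.5, 3.0), (4.0, 1.0, 1.0)]

# After realizing, every point carries the size of the box it came from.
# Assumption: the points stay in instance order.
point_sizes = [size for size in sizes for _ in range(VERTS_PER_BOX)]

# Looking up point 0, 8, 16, ... recovers one size per instance.
recovered = [point_sizes[first_vertex_index(i)] for i in range(len(sizes))]
print(recovered == sizes)  # True
```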

As you can see, the whole setup is quite brittle, and looking back at it there are a few things I would probably do differently now:

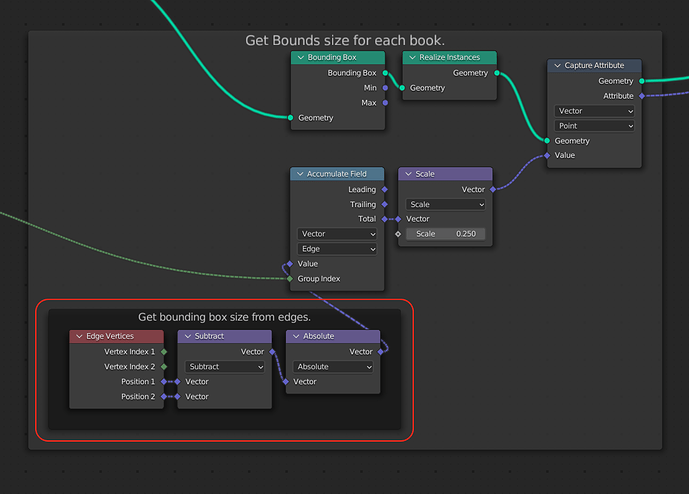

Bounding Box Calculation

Rather than hoping that the mesh’s origin is within the bounds, we can get the bounding box dimensions directly from its edges:

With this you get each edge as a vector. Because the edges come from the bounding box mesh, they are nicely aligned with the x, y or z axis and we can just add them up with the accumulate node. We need to use the absolute value, since some edges could be oriented to point in the negative direction.

This again gives us 4 times the actual bounding box size, since the box mesh has 12 edges, with 4 parallel to each of the axes.
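Here is the same idea as a plain Python sketch (not Blender’s API; `box_edges` and the box values are made up for illustration). The box is deliberately placed so the origin is outside of it, and every other edge is flipped to mimic edges pointing in the negative direction:

```python
from itertools import product

def box_edges(bb_min, bb_max):
    """Return the 12 edge vectors of an axis-aligned box."""
    corners = list(product(*zip(bb_min, bb_max)))
    edges = []
    for i, a in enumerate(corners):
        for b in corners[i + 1:]:
            # Two corners share an edge when they differ along exactly one axis.
            if sum(x != y for x, y in zip(a, b)) == 1:
                edges.append(tuple(y - x for x, y in zip(a, b)))
    return edges

# Hypothetical box that does not contain the origin at all.
edges = box_edges((1.0, 2.0, 3.0), (3.0, 2.5, 7.0))

# Flip every other edge so some of them point in the negative direction.
edges = [e if i % 2 == 0 else tuple(-c for c in e) for i, e in enumerate(edges)]

# Accumulate the absolute edge vectors per axis, then divide by 4.
total = tuple(sum(abs(e[axis]) for e in edges) for axis in range(3))
size = tuple(0.25 * t for t in total)
print(len(edges), size)  # 12 (2.0, 0.5, 4.0)
```

Since only differences between corners are used, the position of the origin drops out completely.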

Getting rid of multiplying by 8

The assumption that the Realize Instances node always keeps the order intact might not hold true at some point. Or sometimes you might have to do a few more operations that change the indices.

To make things more stable, you can use the Transfer Attribute node in “Nearest” mode to transfer attributes based on attributes of your choosing. E.g. once the points in the mesh with realized instances have the attribute with the bounding box size, their physical location is not important to us anymore. Therefore we could simply set each point’s position to the index and then use the random value as “Source Position” to directly get the attribute from the right points.

It feels a bit hacky because you have to set the position of the points to something that doesn’t really make sense as a position, but it works.
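A minimal sketch of that lookup in plain Python (not Blender’s API). I’m assuming here that “the index” is the instance index each point was captured with before realizing; `nearest_attribute`, the `points` layout and the sizes are hypothetical. The points are shuffled on purpose to show that the order no longer matters:

```python
import random

VERTS_PER_BOX = 8

def nearest_attribute(points, source_position):
    """Return the size stored on the point closest to source_position."""
    def dist_sq(point):
        return sum((a - b) ** 2 for a, b in zip(point["position"], source_position))
    return min(points, key=dist_sq)["size"]

# Hypothetical captured bounding box sizes, one per book instance.
sizes = [(2.0, 1.0, 2.0), (1.0, 0.5, 3.0), (4.0, 1.0, 1.0)]

# Each realized point gets its instance index written into its position
# (assumption: that index was captured before realizing).
points = [
    {"position": (float(i), 0.0, 0.0), "size": size}
    for i, size in enumerate(sizes)
    for _ in range(VERTS_PER_BOX)
]
random.Random(0).shuffle(points)  # point order is irrelevant for the lookup

picked = 1  # stands in for the random integer value
print(nearest_attribute(points, (float(picked), 0.0, 0.0)))  # (1.0, 0.5, 3.0)
```

All 8 points of one box sit at the same fake position and carry the same size, so it doesn’t matter which of them the nearest lookup ends up hitting.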

@thinsoldier: It’s exactly how @Strangerman explained