I actually just discovered this area of the website, and it looks like it’ll help quench my thirst for documenting my recent endeavours! I’ve recently been dabbling in photogrammetry and NeRFs, and I find the topic fascinating.

Since I started learning Blender I’ve always loved pushing the limits of procedural workflows: shader nodes, animation nodes, geometry nodes, code-generated art, etc. Nowadays I’m leaning towards what seems like the opposite workflow: capturing reality to produce CG art, instead of trying to imitate reality with CG tools.

It also aligns quite well with my job, where (among other things) I reconstruct buildings from laser scanner data.

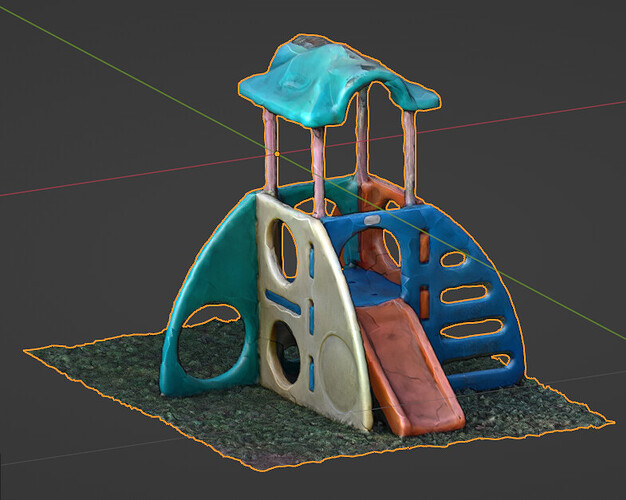

So my goal right now is to build an extensive library of things I stumble upon in my random wanderings, and of course share what I can with the World Wide Web.

Enough talk, let’s see what we can get out of a regular Android phone camera and a bit of processing power.

I’ve tried a few different apps, and the one that really stuck with me is poly.cam. I’m still getting the hang of it, but it has already produced quite satisfying results!

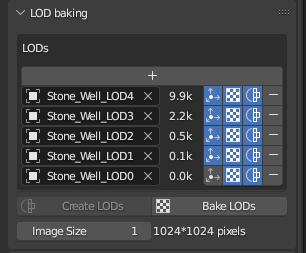

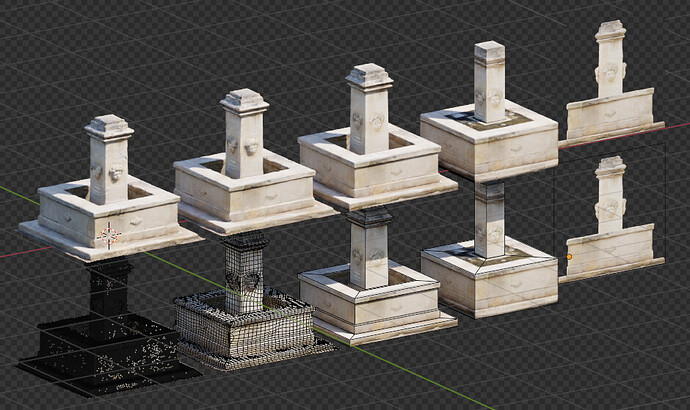

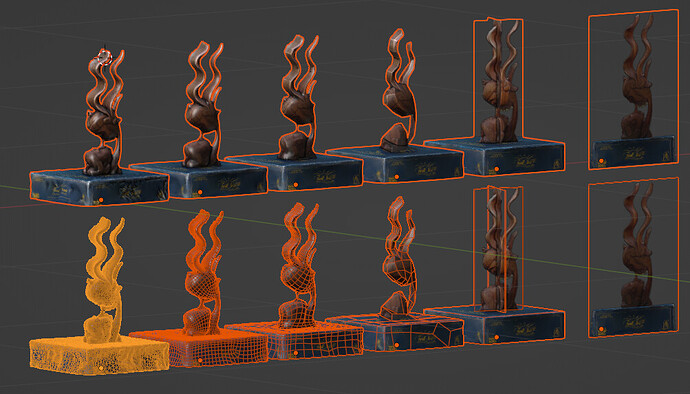

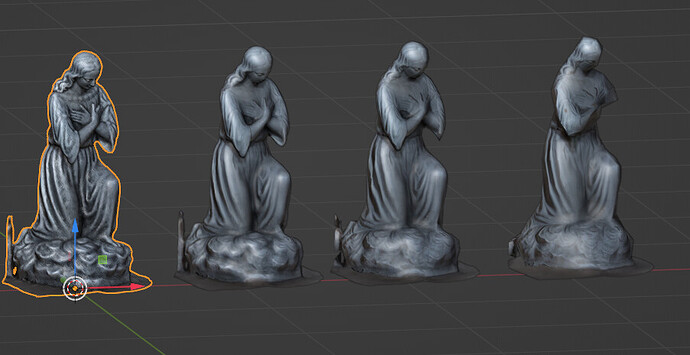

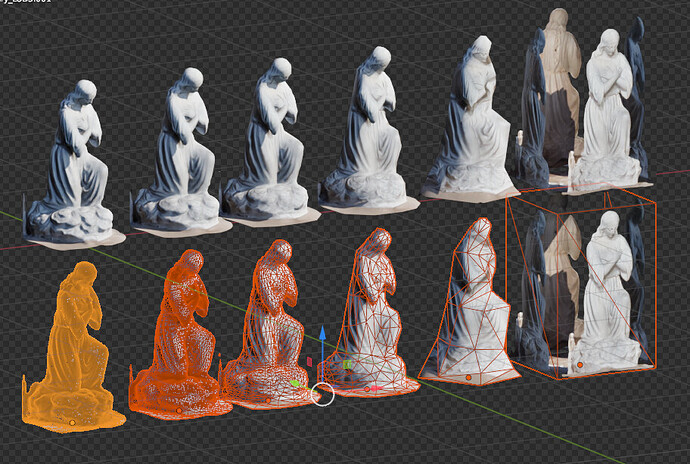

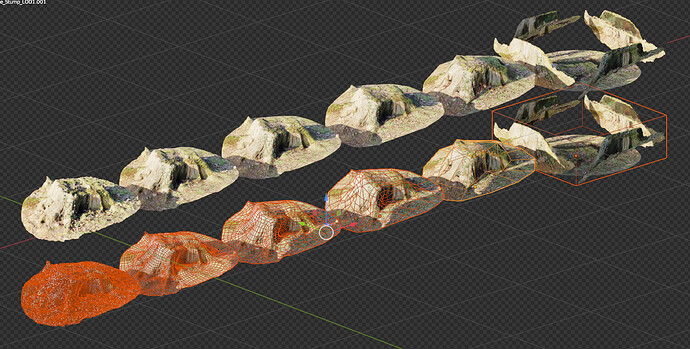

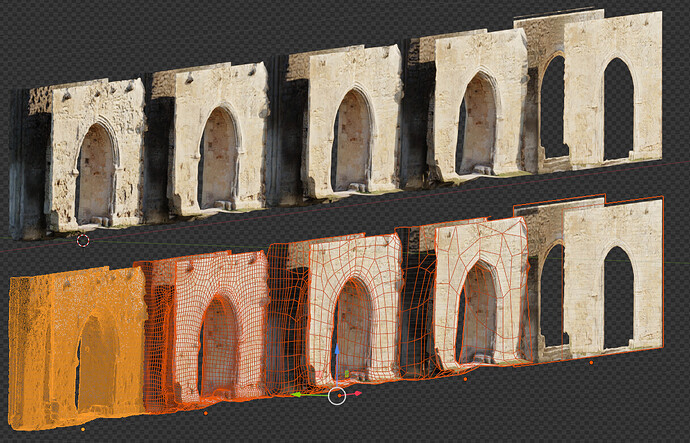

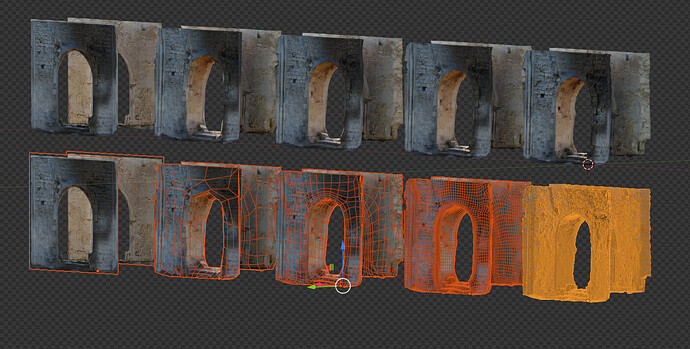

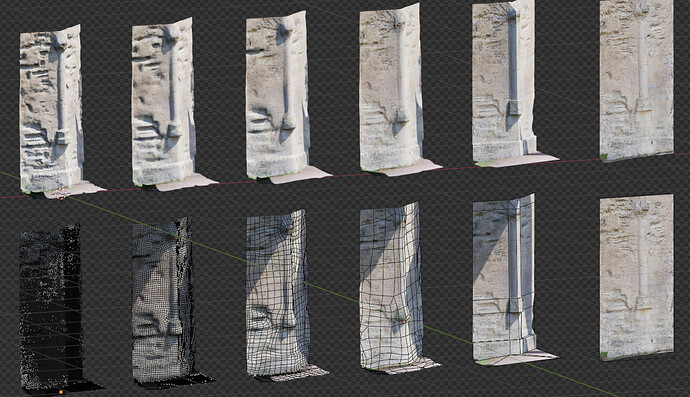

Then I set out to build a little script to remesh and bake my models into different LODs, to see how far this can be pushed. The idea is to beat modelling by hand, or even procedurally, in both time and quality, provided the thing I want to model exists in real life.
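The script itself isn’t shown here, but the planning step of an LOD chain is easy to sketch. This is a minimal sketch in plain Python, not the script described above: `plan_lods`, `LodLevel`, the 25% face ratio per level and the halving of the texture side are all hypothetical choices for illustration. The resulting face counts could be handed to a remesher, or turned into the `ratio` of a Decimate modifier.

```python
from dataclasses import dataclass


@dataclass
class LodLevel:
    level: int
    target_faces: int
    texture_size: int  # side of a square texture, in pixels


def plan_lods(base_faces: int, base_texture: int = 4096, levels: int = 4,
              face_ratio: float = 0.25, min_faces: int = 100,
              min_texture: int = 256) -> list[LodLevel]:
    """Hypothetical LOD planner: each level keeps `face_ratio` of the previous
    face count and halves the texture side, clamped to the given minimums."""
    plan = []
    faces, tex = base_faces, base_texture
    for i in range(levels):
        plan.append(LodLevel(i, faces, tex))
        faces = max(min_faces, int(faces * face_ratio))
        tex = max(min_texture, tex // 2)
    return plan


if __name__ == "__main__":
    for lod in plan_lods(100_000):
        print(f"LOD{lod.level}: {lod.target_faces} faces, {lod.texture_size}px")
```

Halving the texture side divides the texel count by four, so pairing it with a 4x face reduction keeps the number of texels per face roughly constant from one LOD to the next.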

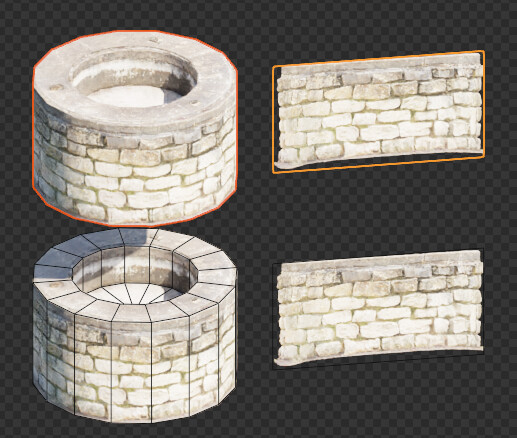

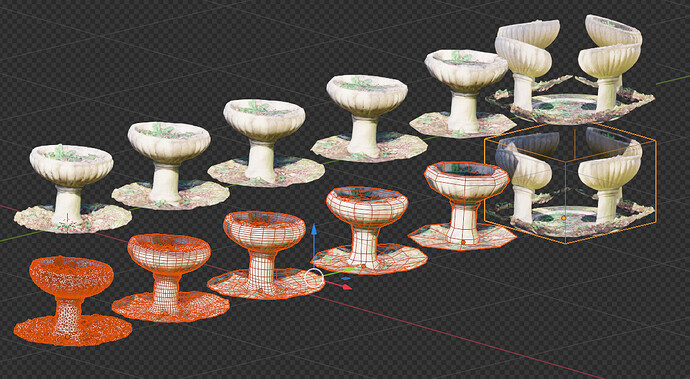

Here’s my first crash test: a somewhat old stone well sitting casually in the middle of a street I walked along during a recent holiday (32 Rue du Peulx - Google Maps). Oh, and by the way, I’m using the trial version of Quad Remesher to remesh the base object into something more quad-y and less triangle-y. It works quite well, and I’ll surely invest in it when the trial ends.

The textures on the back are a bit stretched, because the wall behind the well didn’t let me take good head-on photos of that side.

Here are the models over on Sketchfab, up for grabs:

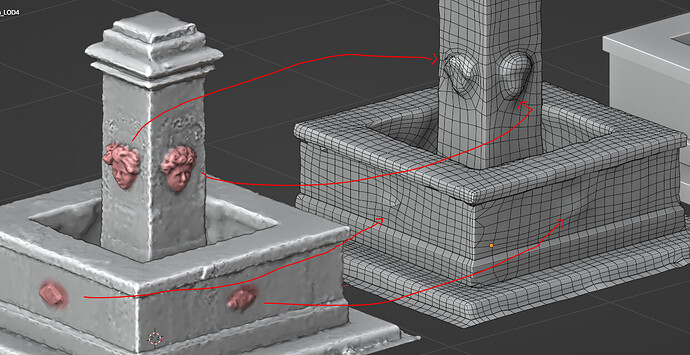

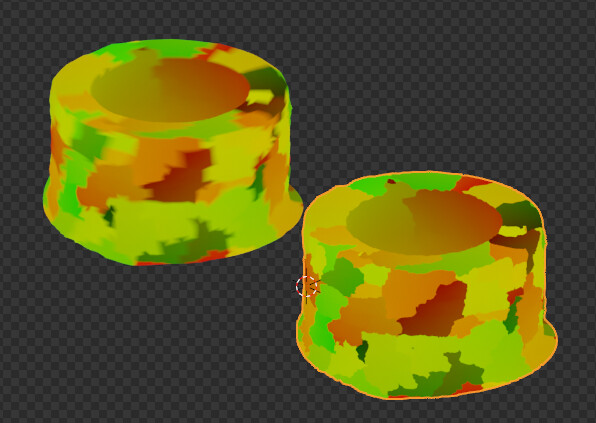

Note that at first, instead of baking the data, I tried to project the UV map onto the low-poly models using a Geometry Nodes (GN) tree.

It does work, up to a point.
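The projection idea boils down to transferring an attribute from the dense scan onto the low-poly mesh. Below is a simplified stand-in in plain Python, not the node tree itself: it assumes one UV per vertex and uses a brute-force nearest-vertex lookup, whereas a Geometry Nodes setup would more likely sample the nearest surface point and interpolate. `transfer_uvs_nearest` is a hypothetical name.

```python
import math
from typing import Sequence

Vec2 = tuple[float, float]
Vec3 = tuple[float, float, float]


def transfer_uvs_nearest(high_pos: Sequence[Vec3], high_uv: Sequence[Vec2],
                         low_pos: Sequence[Vec3]) -> list[Vec2]:
    """Give each low-poly vertex the UV of the closest high-poly vertex.

    Brute force, O(n * m); a KD-tree would be the obvious speed-up on real scans.
    """
    if len(high_pos) != len(high_uv):
        raise ValueError("high_pos and high_uv must have the same length")
    result = []
    for p in low_pos:
        # Index of the high-poly vertex closest to p in 3D space.
        nearest = min(range(len(high_pos)), key=lambda i: math.dist(p, high_pos[i]))
        result.append(high_uv[nearest])
    return result
```

In Blender, UVs actually live on face corners rather than vertices, and a vertex sitting on a seam has several UVs. Collapsing that to one UV per sample point is exactly where the trouble starts.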

UVs on photogrammetrised objects are notoriously bad and full of seams. When a low-poly quad spans one of those seams, its corners pick up UVs from unrelated parts of the texture, so the UVs interpolated across that quad jump from one region of the texture to another, hence the ugly seams.
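To make the seam problem concrete: if two corners of a face sample UVs from different islands, linear interpolation between them lands somewhere in between, in texture space that belongs to neither island. The sketch below uses hypothetical helpers and assumes each sampled UV comes tagged with the id of the island it was taken from; it shows the interpolation and a simple check that flags faces spanning more than one island.

```python
Vec2 = tuple[float, float]


def lerp_uv(a: Vec2, b: Vec2, t: float) -> Vec2:
    """Linear interpolation between two UVs, as happens across a face."""
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def faces_crossing_islands(faces: list[tuple[int, ...]],
                           corner_island: list[int]) -> list[int]:
    """Return indices of faces whose vertices sampled UVs from more than one island.

    `corner_island[v]` is the (assumed) island id of the UV given to vertex v.
    """
    return [i for i, face in enumerate(faces)
            if len({corner_island[v] for v in face}) > 1]


# Corner A sits on an island near (0.1, 0.1), corner B on another near (0.9, 0.8):
# halfway across the face, the lookup lands around (0.5, 0.45), unrelated texture.
mid = lerp_uv((0.1, 0.1), (0.9, 0.8), 0.5)
print(faces_crossing_islands([(0, 1, 2), (2, 3, 4)], [0, 0, 0, 1, 1]))  # prints [1]
```

Faces flagged this way are the ones that would need special handling (splitting them along the seam, or falling back to a bake) for the single-material approach to hold up.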

I’m sure there is a way to circumvent it, and I’m working on it. It would let me use a single material, and I wouldn’t need to bake new maps for each object, which would be a huge time saver.

To be continued