Hmm… no text, and no further info?

Nobody needs AI to use a 360° panorama image in place of an actual scene…

…and there is no hint that the 360s are made with AI…

Even if they were… how would these scenes contain any “special” assets unless you add them separately, like the character? For example, any “interaction” with the computer screens in the background, or even walking through the airlocks… So it’s more a restriction to static “fake” scenes: the viewer can’t “follow” the characters, they have to “jump” between rooms?

So what’s so “innovative” here? If there were some technology to blend from one “room” (as a 360) to another, this could be interesting… Someone could also use the 360 projection to re-create some basic geometry and use texture projection to get some movement with better parallax scrolling… but once this is taken into detail, traditional scene modeling would be easier to handle…
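For anyone wondering what “texture projection” from a 360 boils down to, here is a rough sketch of the core lookup: mapping a 3D direction to a position on an equirectangular image. The function name and the axis convention (Z up, +X at the image centre) are my own assumptions for illustration; Blender and other tools may use a different orientation.

```python
import math


def direction_to_equirect_uv(x, y, z):
    """Map a 3D direction to (u, v) in [0, 1] on an equirectangular image.

    Assumed convention (illustrative only): Z is up, +X points at the
    horizontal centre of the image, v = 0 is straight down, v = 1 is
    straight up.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")

    # Longitude: angle around the vertical axis, in (-pi, pi].
    lon = math.atan2(y, x)
    # Latitude: angle above the horizon, clamped against rounding error.
    lat = math.asin(max(-1.0, min(1.0, z / length)))

    u = (0.5 + lon / (2.0 * math.pi)) % 1.0
    v = 0.5 + lat / math.pi
    return u, v


def uv_to_pixel(u, v, width, height):
    """Convert (u, v) to integer pixel coordinates, with row 0 at the top."""
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return col, row
```

Projecting onto rough room geometry would mean running this lookup for the direction from the panorama's capture point to each surface point, which is what gives parallax once the camera moves away from that point.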


Seems like a pretty good use case for AI.

With SD it should be possible to create variations of the rooms and make small but consistent changes such as changing a door from open to closed or something.

Other detail can just be modelled in Blender as real props.

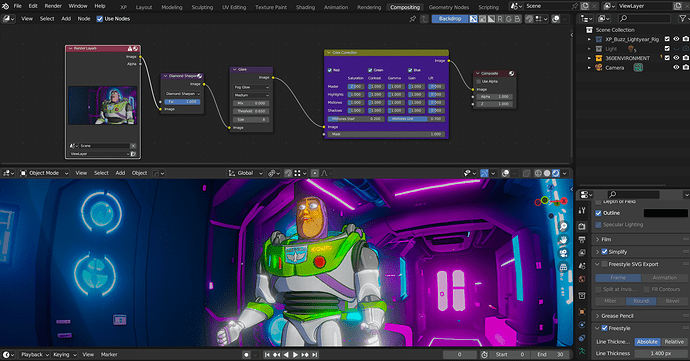

You need to install these FREE toon matcaps (not mine, BTW) to get this look in Blender with the Workbench engine, along with the viewport compositor for bloom and color adjustment.
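If you would rather script the Workbench side than click through the render settings, something like the sketch below should be close. It only switches the engine and the matcap shading options; the viewport compositor setup is not covered. The function name and the `matcap_name` value are placeholders of mine, and the matcap has to be installed in Preferences first.

```python
try:
    import bpy  # only available when run inside Blender
except ImportError:
    bpy = None


def apply_workbench_toon(scene, matcap_name=None):
    """Switch a scene to Workbench with matcap lighting and outlines.

    `matcap_name` is a placeholder: pass the name of a matcap you have
    already installed, or leave it as None to keep the current one.
    """
    scene.render.engine = "BLENDER_WORKBENCH"

    shading = scene.display.shading
    shading.light = "MATCAP"            # matcap instead of studio lighting
    shading.color_type = "TEXTURE"      # show the objects' image textures
    shading.show_object_outline = True  # Workbench's basic global outline

    if matcap_name is not None:
        shading.studio_light = matcap_name
    return shading


if bpy is not None:
    apply_workbench_toon(bpy.context.scene)
```

Run it from Blender's Scripting tab. Outside Blender the `bpy` import fails and the script does nothing.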

The 360-degree background images were AI generated on this site last year, BEFORE they went subscription. I hoarded over 150 of them.

> so it’s more a restriction of a static “fake” scenes… the viewer can’t “follow” the characters…

The obvious use case here is sequential still NPR art and animation with limited camera movement. As the matcap effect is global, any actual scene prop geometry will still have the “toon” look.

I got the idea for Blender last year, when I used a similar technique to make a 50-minute animated film last summer (over 74 days) with iClone’s global “toon” render engine.

All the voices are AI generated as well.

Yes, you have to get “creative” with camera angles etc., but with some compositing and actual prop geometry mixed in, you can get a very fast production pipeline going for NPR stills and animations.

Here is a short clip from the film.

Here is the basic viewport compositing node setup to add (much needed) realtime “pop” to the global matcap shaders, but you may of course add any other filters to taste.

Some other quick tips:

Simple map based textures (without normal maps) work best.

Technically you do not even need the principled BSDF node

Also avoid any “realistic” hair types with alpha transparencies, as they do not work with the Workbench engine and will just render as blocky hair card geometry.

You may also optionally add Freestyle strokes to the render, depending on your scene objects, but the Workbench engine has its own basic global outline around every mesh.

There is no motion blur, but that is not really “a thing” with low frame rate toon styles anyway. Depth of field does work if you need it.

The AI aspect is not central to this matcap/Workbench technique. Here I used the 360-degree AI skyboxes only as far-background filler skies; everything else is actual geometry, so camera animation is more viable.

YMMV