The modifier menu was made like that in preparation for a lot of geometry-nodes-driven modifiers to be added in the coming releases and beyond. It’s good to future-proof it now so people can get used to it.

I don’t believe lack of parity is a “suck” in the first place. They’re two different render engines, each with its own strengths and weaknesses. Artists should use the one that works best for what is most important to them.

Certainly there are things in each that aren’t in the other (light groups, “bevel” edge shading, for example), but there are other solutions out there that solve this problem.

You misunderstood me. The Blender Foundation’s goal is to achieve parity between these two engines (hence we don’t get exclusive Eevee nodes), and I think that’s the wrong mindset.

Every engine should be as powerful as it can be, without taking the other engine into account.

Where did they say that?

Also, we do have Eevee-exclusive nodes: Shader to RGB?

The things people say with such confidence.

Grease Pencil 3.0 will support geometry nodes, and there you can do a conversion to mesh. As a mesh, it can mix in better with the rest of the scene for lighting and shadows. I think that’s the plan?

This is the approach that I think will work for most people.

Grease Pencil 3 is essentially a big refactor. We’re rewriting everything and focusing on feature parity with GPv2 without adding tons of new features (because that would blow up the scope of the project). The integration with geometry nodes was mostly done by the geometry nodes team.

That’s the only one, an old one, for one style of NPR rendering only, and only because the community was so vocal about it …

Pretty sure it was communicated that nodes have to work and behave similarly across these two engines, like, all the time. The same goes for the realtime compositor and the offline compositor.

If you are on the dev team, can you confirm or deny that Grease Pencil 3.0 will use scene geometry and shaders, or will it still use the old system? I need to plan ahead a bit for a bigger project, deciding which software to use for which part of the pipeline, and knowing this would answer a lot of my questions. I hope I can do as much as possible in Blender, but if not, I can at least concentrate on developing the Blender parts I’ll really use and not stay in the experimentation phase forever.

can you confirm or deny that greasepencil 3.0 will use scene geometry and shaders

I’m not sure what you mean by this. If you’re asking whether grease pencil will be automatically converted to a mesh, then the answer is no.

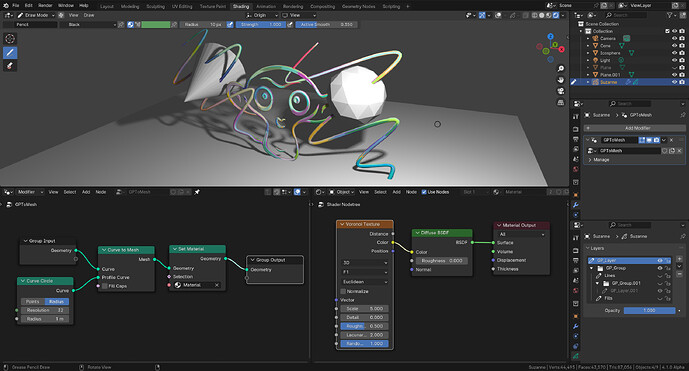

But it seems like what you want can be easily achieved with geometry nodes. Here is an example:

I’m using geometry nodes to convert the grease pencil strokes to a mesh and assign a mesh material to it. Then you can also use mesh materials as usual.

You can even pass attributes like the stroke length, etc. to the shader if needed.

But he is right.

Having to pass through a submenu or type letters is slower than the old way.

Now, I think that two distinct buttons, one to add old modifiers through the old menu and one for the new geometry nodes, would have resulted in a quicker workflow.

But in the end, old modifiers should be converted into geometry nodes, and at that point we would still end up with a single menu. The solution is probably to have a custom menu for favorite modifiers.

He is also right about custom sub-panels. We don’t have an operator to open them all at once. Our custom set-up is better organized but requires more clicks.

Users can still ignore subpanels, or avoid closing them by default, in their custom set-ups.

But for non-customizable stuff, like the Principled shader, having subpanels closed is not the fastest default.

I don’t mean for interaction with modelling tools and so on; it should still use the GP toolset of course, just that it will be rendered as normal geometry. I was waiting for that. I’m 100% sure that the first announcement for GP 3.0 said that it’ll use standard geometry and therefore be fully integrated into the actual renderer.

I would like to use Grease Pencil in the truest sense, as a 2D animation tool. So fills and colors and their use in a 2D animation context are of big importance, not just strokes.

The problem is that right now it won’t respect transparent materials which are in front of it, and the filters which come with Grease Pencil also act strangely when Grease Pencil objects are obscured by other geometry.

If GP sits better in the scene and is not composited on top, I could use Blender for 90% of everything. If not, there’s little reason to use Blender for 2D animation at all, and I could just switch to other software which is better at drawing and 2D animation, since I’d have to do all the work there again anyway (setting up image planes and camera animation, and decorating the scene with dust and other half-transparent objects).

I imagined we finally had a holy grail software which can seamlessly combine all the different elements and play back all that in realtime too.

You are right in that the geometry used by Grease Pencil 3 is standardized. It uses CurvesGeometry just like the new hair curves. But this doesn’t mean that it is integrated into other renderers and that was never the plan.

Again, maybe you’re misunderstanding the screenshot I sent. It’s showing a grease pencil object with all of its drawing and animation capabilities (I drew the strokes you can see using the gpv3 draw tool), but rendered as a mesh using mesh materials (which could of course have transparency).

Yeah, I’m only asking about the rendering part of GP 3.0; otherwise the workflow features are good enough for me already:

here is a screenshot …

As you can see, around the particles and around the foreground buildings the background is shining through. That’s one of the graphical artifacts I talked about earlier, because the depth sorting and things like that aren’t 100% correct.

This scene cannot be done in Eevee with GP 2.0 because of the tacked-on compositing of the Grease Pencil object. I have to either render 3 view layers and put them together in a compositor, or set up the background and foreground layers and the camera animation completely in another program and just do the 2D animation in Blender or another program. Or I do the animation in another program and then import the PNG sequence into Blender.

I imagined I would be able to combine NPR-rendered objects, cool shaders, Grease Pencil objects and textured planes. But GP 2.0 doesn’t let me do this… and this is a rather simple scene; I haven’t added any half-transparent foreground wind effects and stuff like that yet.

Which isn’t necessarily a terrible thing. It’s not as if full-blown studios doing NPR don’t use compositing.

Full-blown studios also have a person or team who’s responsible just for file and folder management; I don’t have one, though. I also don’t have servers where I can save terabytes of intermediate image sequences.

Why do you think so many smaller studios go on adventures with Unreal Engine and similar? Because they, as a small team, also have a hard time coping with big pipelines, and shrinking the pipeline is the biggest relief.

If everything worked, I could reduce the full pipeline to just Eevee + the realtime compositor and maybe a last simple pass in After Effects/Nuke. Eevee really is that good!

If I don’t do NPR/2D, I can easily match or exceed the graphics quality of several TV shows on Cartoon Network with just Eevee and a bit of post-processing. But since I want to do 2D, Grease Pencil is the last missing piece of the puzzle.

Maybe this conversation should be continued elsewhere, as I feel like we’re getting too far off-topic.

Using GPv3 in this case, you could use a geometry nodes setup to turn the smoke grease pencil into a mesh and use some toon shading setup to get the same effect.

That would mean that the smoke correctly occludes the background so the buildings are not lit from behind. It would also cast shadows, etc. No compositing required. And you can still continue doing your normal frame-by-frame animation since the effect is procedural.

I doubt it has that much to do with generating ink lines on cel shading. And it’s not as if taking everything from Blender to Unreal is a quick one-click walk in the park, so clearly they’ve got some needs that I personally don’t…

Honest opinion here - in several ways, I think the compositor in Blender freaking sucks.

(The full compositor, not the RT version, which doesn’t even have the most basic thing needed in compositing: support for multiple layers.)

It’s utterly slow. Working with retargeting multiple alpha sources is … bizarrely overcomplicated. Playback really isn’t a thing.

I know some people like it, and they do great work with it. It’s free - that’s the one good thing I can say about it. It’s ok for a quick comp check to get a feel for what one might wish to do, but if anyone actually has AE or Nuke in their pipeline and instead is using Blender for the bulk of their NPR post work - sorry, I think that’s bordering on insanity.

Exporting an ink pass (GP, Pencil, whatever) as a view layer in Blender is quite easy, and comping it is also not a big deal. So it feels like this wish goes into … I don’t know, a place where the issue isn’t that much of a problem in the first place.

No, it’s not bordering on insanity. Anime and 2D don’t need the heaviest post-production; a few blurs, 2D movements and sometimes glows are about all that’s needed.

What drives me insane is exporting and importing image sequences, and the time that takes away from drawing and being creative.

Inside Eevee I can fine-tune everything in a few seconds to a few minutes and check it in one go (the same is true if things are rendered in Unreal). If I go through After Effects, that can easily grow to hours, and if the project gets too big I might start mixing up the image sequences on top of that. The biggest advantage of such a realtime renderer is the easy evaluation → colors, timing, composition.

Thanks for the suggestions. I have developed several workarounds, of course. I’ll probably go the route of just exporting the GP objects as images, refining them in Clip Studio, and then importing them back as an animated image plane.