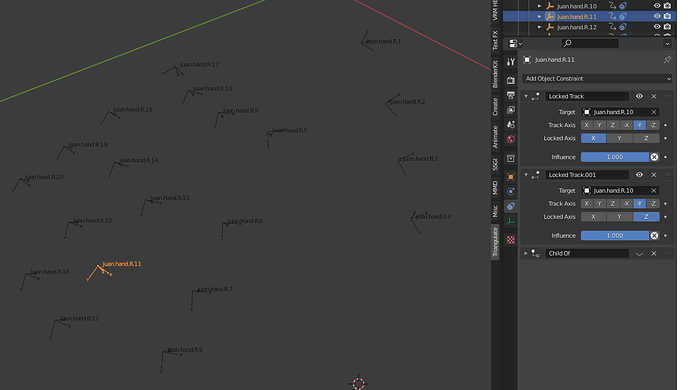

Recently I made a real-time motion capture addon for Blender, but I have been unable to transfer the data onto an actual armature (also in real time, using constraints). The addon outputs a series of empties located at the joints of the hands, but it does not give any rotation or “scale” information (the scale of the whole thing varies with the distance of the subject and other factors). For the past few weeks I have tried the different “tracking” constraints to get the relative rotations of the empties, but each of them seemed to break in a different scenario (I can’t really list what I tried, as I spent several hours searching the web on each one, with no good results). Right now each empty has the following constraints:

This makes them point to the bone behind them, which gives the results I want (I then copy these to the bones with “Child Of” constraints). However, it only works while the hand is facing the camera directly, because rotating the whole hand does not change the rotation of the bones. To counter this, I added an empty that represents the hand rotation, plus a “Child Of” constraint (with only rotation enabled) after the “Locked Track” ones, but this creates a sort of “double” rotation on the bones (tilting the hand already makes the empties rotate forwards, and the hand rotation is then applied on top of that).
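To make the “double” rotation concrete, here is a minimal sketch in plain Python (no `bpy`) of the math involved. It is not the addon's code: the function names and the choice of wrist, index base and pinky base as reference joints are assumptions made purely for illustration. The idea is that a direction measured between two empties in world space already contains the hand rotation, so it has to be expressed in the hand's own axes before the hand rotation is applied again. Normalizing the directions also removes the unknown scale.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    # Dividing by the length discards scale, so subject distance no longer matters.
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)

def hand_basis(wrist, index_base, pinky_base):
    """Orthonormal hand axes (x, y, z) built from three joint positions.

    Which joints to use is an assumption; any three non-collinear
    palm joints would do.
    """
    y = normalize(sub(index_base, wrist))            # along the palm
    z = normalize(cross(y, sub(pinky_base, wrist)))  # palm normal
    x = cross(y, z)                                  # completes the frame
    return x, y, z

def to_hand_space(direction, basis):
    """Express a world-space direction in hand axes.

    This is multiplication by the transpose (inverse) of the hand
    rotation, which removes the hand's contribution from the direction.
    """
    return tuple(dot(direction, axis) for axis in basis)

def local_finger_direction(wrist, index_base, pinky_base, seg_start, seg_end):
    """Direction of one finger segment relative to the hand."""
    basis = hand_basis(wrist, index_base, pinky_base)
    return to_hand_space(normalize(sub(seg_end, seg_start)), basis)
```

With the direction expressed this way, rotating, moving or uniformly scaling the whole hand leaves the result unchanged, so the hand rotation can be applied once at the root without being counted a second time in the fingers.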

What should I do? Any help is appreciated.