@Eary_Chow @kram10321 Hope you don’t mind me tagging you, but I didn’t wanna ask in the filmic v2 thread, as I think this will be more easily found in its own thread and a lot of answers tend to get lost there. I have a few questions. If I’ve used the wrong terminology anywhere, please correct me.

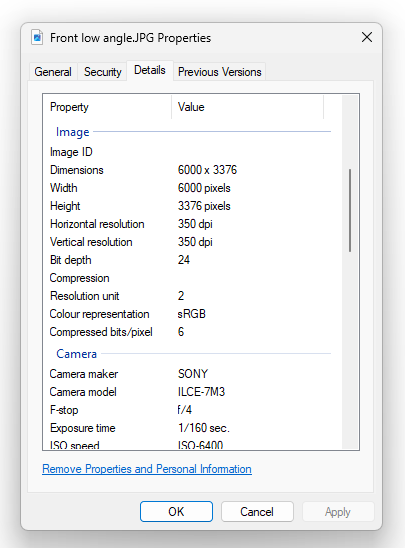

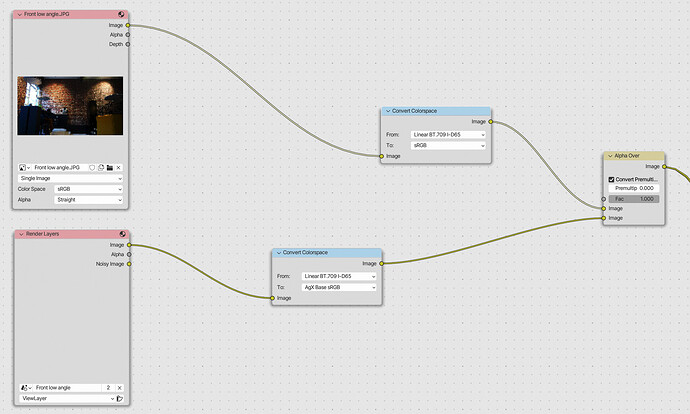

I’ve got a 3D scene in Blender and I’ve got a simple photo in JPG that’s in sRGB according to Windows file properties:

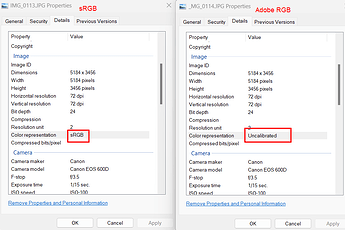

- Is that the correct way to find out the color space of a photo? Is there a better way?

- Is that the ICC profile?

- Is there information missing here and if so, what exactly?

- Can sRGB mean multiple things?
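To make the ICC question concrete, here is a minimal sketch (standard-library Python only) of one way to check whether a JPG actually carries an embedded ICC profile, independent of what the file properties dialog reports. It assumes the usual JPEG layout, where the profile sits in one or more APP2 segments tagged `ICC_PROFILE`. The function name `extract_icc_profile` is my own, and it doesn’t handle every edge case (padding bytes between segments, for instance).

```python
import struct
from typing import Dict, Optional

ICC_MARKER = b"ICC_PROFILE\x00"  # identifier at the start of an ICC APP2 payload


def extract_icc_profile(jpeg_bytes: bytes) -> Optional[bytes]:
    """Return the embedded ICC profile bytes of a JPEG, or None if there is none."""
    if jpeg_bytes[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")

    chunks: Dict[int, bytes] = {}
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            break  # lost sync with the segment structure; stop rather than guess
        marker = jpeg_bytes[pos + 1]
        if marker == 0xDA:
            break  # start of scan: compressed image data follows, metadata is over
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        payload = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xE2 and payload.startswith(ICC_MARKER):
            sequence_number = payload[len(ICC_MARKER)]
            # Skip the sequence number and the chunk count (one byte each).
            chunks[sequence_number] = payload[len(ICC_MARKER) + 2:]
        pos += 2 + length

    if not chunks:
        return None
    # Large profiles are split over several segments; join them in order.
    return b"".join(chunks[i] for i in sorted(chunks))
```

Usage would be something like `extract_icc_profile(open("photo.jpg", "rb").read())`, with `photo.jpg` as a placeholder path. If a profile comes back, bytes 16 to 20 of it hold the data colour space signature (e.g. `b"RGB "`). If it returns `None`, the file has no embedded profile and any “sRGB” label would have to come from somewhere else, such as an EXIF tag.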

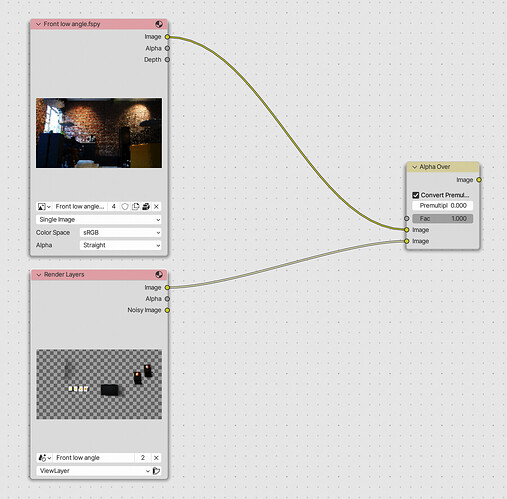

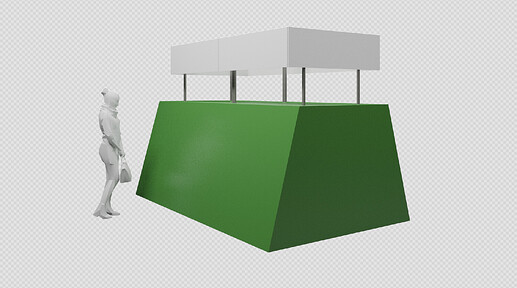

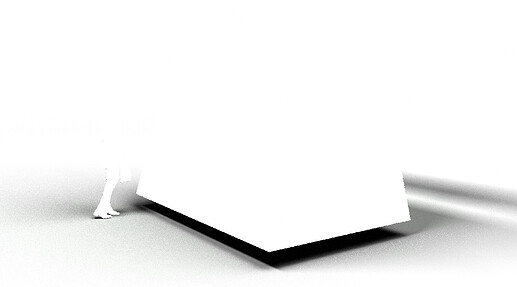

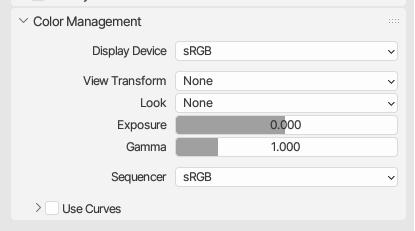

Now I’d like to use this photo as the backdrop to my render. If I understand correctly, we start by setting the color management to None (and the display device to match my display’s gamma)?

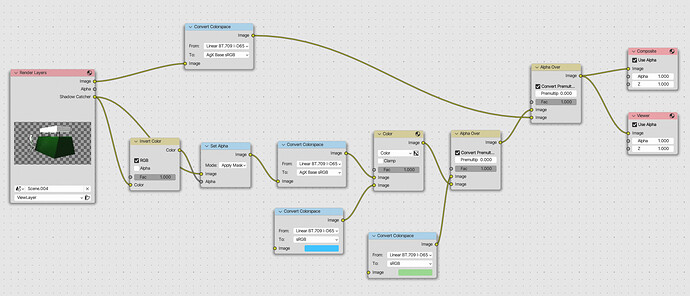

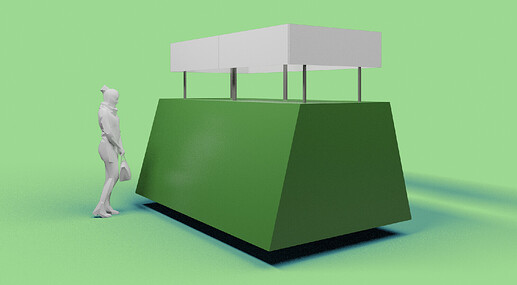

Next, we use the Alpha Over node to place my render with transparent background over the photo.

The documentation says the render’s output has premultiplied alpha (like OpenEXR), so we have to tick the “Convert Premultiplied (to Straight Alpha)” box: https://docs.blender.org/manual/en/3.6/glossary/index.html#term-Alpha-Channel
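To spell out what I mean by the two alpha conventions, here is a small sketch of the standard “over” operation for both. The function names are my own, and I’m not claiming this is what the node does internally or which branch the checkbox selects; it only shows why picking the wrong convention matters.

```python
from typing import Tuple

RGBA = Tuple[float, float, float, float]


def over_premultiplied(fg: RGBA, bg: RGBA) -> RGBA:
    """Composite fg over bg when fg RGB is already multiplied by its alpha."""
    r, g, b, a = fg
    rgb = tuple(f + (1.0 - a) * k for f, k in zip((r, g, b), bg[:3]))
    return rgb + (a + (1.0 - a) * bg[3],)


def over_straight(fg: RGBA, bg: RGBA) -> RGBA:
    """Composite fg over bg when fg RGB is stored unmultiplied (straight alpha)."""
    r, g, b, a = fg
    # Multiply by alpha first, then reuse the premultiplied formula.
    return over_premultiplied((r * a, g * a, b * a, a), bg)


black = (0.0, 0.0, 0.0, 1.0)
# A 50% covered white pixel, written in each convention:
print(over_premultiplied((0.5, 0.5, 0.5, 0.5), black))  # (0.5, 0.5, 0.5, 1.0)
print(over_straight((1.0, 1.0, 1.0, 0.5), black))       # (0.5, 0.5, 0.5, 1.0)
# Premultiplied data treated as straight gets multiplied twice and comes out darker:
print(over_straight((0.5, 0.5, 0.5, 0.5), black))       # (0.25, 0.25, 0.25, 1.0)
```

So if the setting doesn’t match the data, I’d expect semi-transparent edges to come out too dark (or too bright, in the opposite case).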

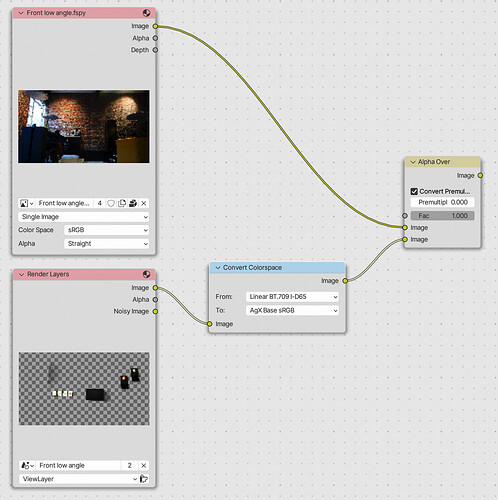

Alright, so now we have to “manage the colours”. From following the Filmic v2 thread for the longest time, I’ve understood that the render output is in Linear BT.709 with a D65 white point, and that we have to get from there to my (and most people’s) display gamma, which is sRGB (1), by going through the AgX transform. So we do this:

- Is the render output’s color space (or gamma?) something hardcoded in Cycles, or does the OCIO config decide that?

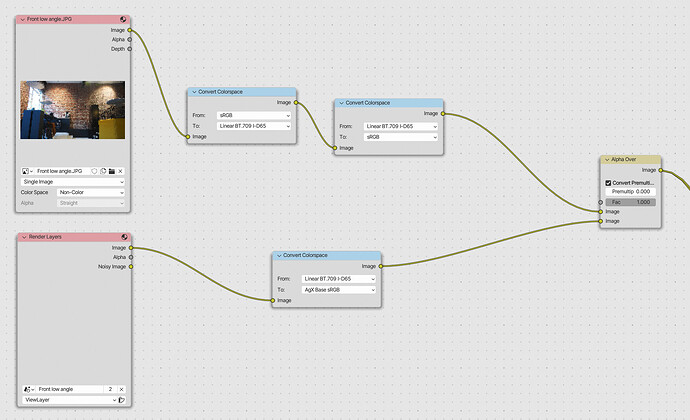

Next, I would think we’d work analogously with the photo. I’d add a colorspace converter from sRGB to AgX Base sRGB like this:

This results in very dark colours, so:

- Why is this wrong and what would be correct?
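For reference while reasoning about the darkening, here is a sketch of the piecewise sRGB transfer functions as I understand them (the function names are mine). It shows that an encoded value of 0.5 becomes roughly 0.214 once decoded to linear, so any step that decodes the photo without a matching re-encode would look noticeably darker. Whether that is what my node chain actually does is an assumption on my part, not something I’ve verified.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Encode a linear-light value in [0, 1] with the sRGB transfer function."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1.0 / 2.4) - 0.055


encoded = 0.5
linear = srgb_to_linear(encoded)
print(round(linear, 3))                  # 0.214
print(round(linear_to_srgb(linear), 3))  # 0.5
```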

The solution is likely obvious to many, but I’d really like to gain a true understanding for this mess so I can help others that are in the same position.

(1) I’ve seen the P3 thread (“Mac Users: Let's Solve The Color Problem (Gamma Shift On Mac)”), which complicates things because Blender doesn’t have a function to apply an ICC profile to image exports.