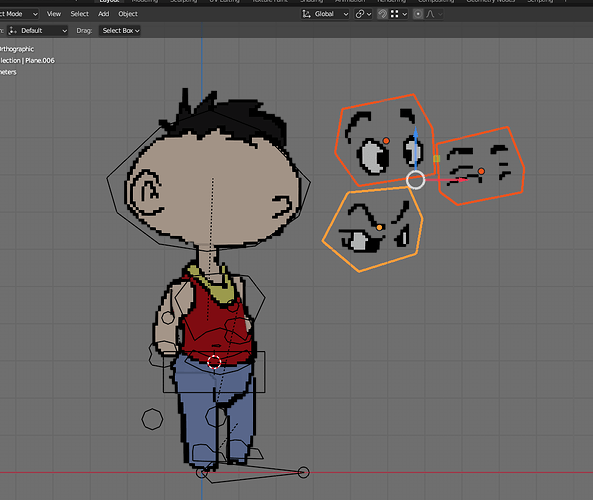

I’m creating an animated 2D mesh that I’d like to export to a game engine. I’d like to have some way to switch between several different expressions. For example, in my test character here I have three different meshes with different eyes on them which I’d like to overlay on my model depending on the expression I’d like to show. Is there a way to do this?

What’s your plan for getting the character into the game? Render to sprite sheet?

No, I wanted to export the thing as a rigged and animated mesh, similar to how you do it with 3D models. I was thinking of something along the lines of how Cult of the Lamb does their characters, or Night in the Woods.

You can create a switcher with geometry nodes, but I don’t know how you’d export that to a game engine.

Why use different meshes at all? You could use one plane with three different textures and switch between those by moving or changing the UV map. This is a super common technique for 2D, and it works in Blender and in game engines as well.
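To make the UV-switching idea concrete, here is a minimal sketch of the arithmetic, assuming all expressions are packed into one atlas image as a regular grid. The function names and the grid layout are illustrative assumptions, not any Blender or engine API. It also assumes a bottom-left UV origin as in Blender; some formats and engines flip V.

```python
def atlas_cell_offset(index, columns, rows):
    """Return the (u, v) of the bottom-left corner of one atlas cell.

    Assumption: cells are numbered left to right, top to bottom,
    and the UV origin is at the bottom-left of the image.
    """
    if not 0 <= index < columns * rows:
        raise ValueError("index is outside the atlas")
    col = index % columns
    row = index // columns
    u = col / columns
    v = 1.0 - (row + 1) / rows
    return (u, v)


def cell_uvs(index, columns, rows):
    """UVs for a quad covering one cell, ordered BL, BR, TR, TL."""
    u, v = atlas_cell_offset(index, columns, rows)
    w, h = 1.0 / columns, 1.0 / rows
    return [(u, v), (u + w, v), (u + w, v + h), (u, v + h)]
```

Switching expression then means changing one integer: with three eye textures side by side, `cell_uvs(1, 3, 1)` gives the UVs for the middle one.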

I agree with you that UV animation would be really helpful. But I have no idea how I would set that up or export it. In Blender, how would you make the UVs on a mesh change in response to a keyframe set in the Action editor? Also, how would you export this to a game engine? I checked the glTF website and they still do not support UV animations (there is a proposal to add this, but they’ve been working on it for at least two years at this point).

If there is a way to do UV animations in Blender and export them, I’d really like to know because it would solve a lot of other problems I have too.

I don’t know what those are or how they do things.

If your plan is to export a skeletally animated mesh (a mesh with an armature), then you could parent those expressions to a bone and, within a single frame, move them behind the character or scale them to 0 to hide them. I suppose you could do something similar with shape keys (aka blendshapes, aka morphs).

Almost anything else has to be implemented in the game engine; Blender has nothing to do with it. That includes changing the UV map: UV manipulations don’t get exported, so you implement them in your game engine.
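Since the engine isn't known, here is an engine-neutral sketch in Python of what "implement it in the game engine" could look like: a stepped lookup that picks the active expression for the current animation time. The track format and the function name are hypothetical; a real engine would have its own animation-event or track system for this.

```python
from bisect import bisect_right


def active_expression(track, time):
    """Return the expression that is active at `time`.

    `track` is a list of (time, name) keys sorted by time (an assumed
    format). The lookup is stepped: a key holds until the next one.
    """
    times = [t for t, _ in track]
    i = bisect_right(times, time) - 1
    # Before the first key, fall back to the first expression.
    return track[max(i, 0)][1]


track = [(0.0, "neutral"), (1.5, "happy"), (3.0, "angry")]
print(active_expression(track, 2.0))
```

The returned name would then select the UV offset or the visible mesh on the engine side.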

I came across this video which seems to show how to do this with mouth movements. But it has no narration, is low quality and is for an older version of Blender. Does anyone have any idea what’s going on here? How is this person able to get the moving bone to update the texture?

He is probably using a Python driver to change the UVs of a single image containing all the mouth shapes, according to the bone’s position.

The image on the plane and the bone are used as a controller, placed out of frame.
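The video's actual setup is unknown, so this is only a guess at the kind of mapping such a driver would compute: the controller bone's X location is snapped to a shape index, and the index becomes a U offset into a horizontal strip of mouth shapes. The function name, the `step` spacing, and the strip layout are all assumptions. In Blender the result would typically feed something like a texture-mapping offset, which is not shown here.

```python
def mouth_u_offset(bone_x, step, count):
    """Map a controller bone's X location to a U offset.

    Assumptions: the mouth shapes sit side by side in one image,
    `count` of them in total, and the animator moves the bone in
    multiples of `step`. Rounding makes the snap tolerant of small
    floating-point errors in the bone location.
    """
    index = round(bone_x / step)
    index = min(max(index, 0), count - 1)  # clamp to the valid shapes
    return index / count
```

For example, with four shapes and a spacing of 0.25, a bone at X = 0.5 selects the third shape, which starts at U = 0.5.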

You could also move your mouth shapes out of frame, or behind the character, using constant interpolation.

In fact, how you do it depends mostly on the capabilities of the game engine you use.

There are probably existing tutorials on this, or a chapter in the game engine’s documentation.

I found a tutorial that covers the technique in a bit more depth. Not something I can export directly, but maybe I can get a similar effect by playing with shape keys.

I could also use the technique someone else mentioned of scaling a bone to zero, but that would require one bone for each expression, all located in the same place, which could be hard to work with once there are more than just a few of them.
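One way to keep many expression bones manageable might be to generate their scale keys from a single list of switches instead of keying each bone by hand. The sketch below only builds the key data; the function name and data layout are hypothetical, and writing the keys onto actual bones (with constant interpolation, so nothing shrinks gradually) would still need Blender's own scripting API, which is not shown.

```python
def scale_tracks(expressions, switches):
    """Build one scale track per expression bone.

    `expressions` is a list of bone names; `switches` is a list of
    (frame, name) pairs saying which expression becomes visible at
    which frame. At every switch each bone gets a key: 1.0 for the
    visible expression, 0.0 for all others.
    """
    known = set(expressions)
    tracks = {name: [] for name in expressions}
    for frame, shown in switches:
        if shown not in known:
            raise ValueError(f"unknown expression: {shown}")
        for name in expressions:
            tracks[name].append((frame, 1.0 if name == shown else 0.0))
    return tracks


tracks = scale_tracks(
    ["neutral", "happy", "angry"],
    [(1, "neutral"), (10, "happy"), (24, "angry")],
)
```

Here `tracks["happy"]` holds the keys for the "happy" bone: hidden at frame 1, visible at frame 10, hidden again at frame 24.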

The trouble with all of these solutions is that there is no real way to export them. Whether it is through writing a custom shader or implementing a driver, the exporters Blender comes with are not sophisticated enough to pick them up.

Part of the problem is with the way Blender’s Actions are designed. Each action corresponds to one object, such as a mesh or an armature. This means that you cannot animate multiple objects within a single action track, such as having a single action that both moves a rigged mesh and updates a shader. The only way around this is to set up drivers so that a bone’s coordinate can be routed into a shader parameter, which would work for a project that stays entirely within Blender but which none of the exporters is able to pick up on.

Like I said above, UV animation would solve a lot of the problems I’m having. But the Action editor prevents me from animating both a mesh and a shader in the same track (without using drivers), and the exporters don’t handle shaders very well anyways. I don’t see any kind of pipeline that allows me to export UV animation with Blender.

You would have the same problems with other software.

If their USD support is not complete, some data cannot be exported.

If the animation is done through drivers, each application has its own way of handling that; there is no exporter for it, whatever software is used to animate.

I already said it. The info you are looking for does not depend on Blender. It depends on the game engine you use.

Is it possible to animate that in the game engine?

Is it possible to create drivers in the game engine, so that a bone or object controls the properties you want?

What workaround do members of the game engine’s community use?

What import format are they using?

Nobody here can answer that without knowing which game engine is used.

If you had asked the same question on the game engine’s forum, you would probably have had a more pertinent answer sooner.