Embree on GPU is still experimental!

It has already improved since it first showed up a few weeks ago, and it will likely keep improving, so please keep that in mind.

The same should also be said for AMD: in a lot of cases their performance change was flat out negative (hence the 7% average that was shown a while back), and that will likely be fixed as time goes on.

CUDA, and by extension OptiX, have had a very long time to mature and (if I recall correctly) got away with not supporting some things initially (like volumes, or AO/Bevel). The same can’t be said for HIP/oneAPI.

I have done some testing with the A770 LE 16GB (and a 3700X), rendering from the command line. Here are the results with Embree on GPU off vs. on:

barbershop_interior: 182.47s, 196.307s, 203.713s, 3509.37M -> 154.55s, 156.257s, 163.413s, 3230.63M

bmw27_gpu: 19.1767s, 20.4567s, 21.8967s, 1458.15M -> 15.6667s, 15.7467s, 17.1933s, 1447.06M

classroom: 42.8133s, 43.1333s, 44.13s, 2122.68M -> 34.0433s, 34.24s, 35.22s, 2116.7M

cosmos_laundromat: 99.34s, 131.537s, 154.113s, 8566.36M -> 74.63s, 87.45s, -1s, 6201.84M

flat-archiviz: 94.4233s, 96.2767s, 127.443s, 3905.09M -> 76.1167s, 76.8467s, 108.017s, 3874.1M

junk_shop: 6.29333s, 11.73s, 16.89s, 6116.52M -> 5.45667s, 6.82667s, 12.0633s, 5789.44M

monster_under_the_bed_sss_demo_by_metin_seven: 68.0667s, 68.7267s, 70.7933s, 2051.31M -> 57.3267s, 57.5s, 59.5733s, 2029.29M

one_lotsa_hair: 10.4467s, 24.4333s, 42.6267s, 4193.68M -> 24.5333s, 27.51s, 45.68s, 2943.94M

Reading the numbers, in order: time from when “Sample 0/…” is shown to the final sample; time from when the first “Updating Geometry BVH” is shown to the final sample; total time (including CPU/other-bound things like calculating modifiers, reading the blend file, etc.); and peak memory. Values before the arrow are with Embree on GPU off, values after it are with it on.
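To make the off vs. on comparison easier to read, here is a minimal sketch that parses one result line in the format used above and prints the relative change for each of the four values. The helper names `parse_result` and `percent_change` are made up for illustration; they are not part of Blender or of the attached results file.

```python
def parse_result(line):
    """Split 'scene: t1, t2, t3, mem -> t1, t2, t3, mem' into (scene, off, on)."""
    scene, rest = line.split(":", 1)
    off_part, on_part = rest.split("->")

    def fields(part):
        # Drop the trailing unit: 's' on the three times, 'M' on peak memory.
        return [float(value.strip().rstrip("sM")) for value in part.split(",")]

    return scene.strip(), fields(off_part), fields(on_part)


def percent_change(off, on):
    """Relative change with Embree on GPU enabled; negative means lower (better)."""
    return (on - off) / off * 100.0


line = "classroom: 42.8133s, 43.1333s, 44.13s, 2122.68M -> 34.0433s, 34.24s, 35.22s, 2116.7M"
scene, off, on = parse_result(line)
labels = ["sample time", "BVH-to-end time", "total time", "peak memory"]
for label, a, b in zip(labels, off, on):
    print(f"{scene} {label}: {percent_change(a, b):+.1f}%")
```

For classroom, this reports the sample time dropping by roughly 20% with Embree on GPU enabled. A value of -1 (the cosmos_laundromat total time) marks a missing measurement, so a percentage computed from it is meaningless and should be skipped.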

If you want me to test something more, I can.

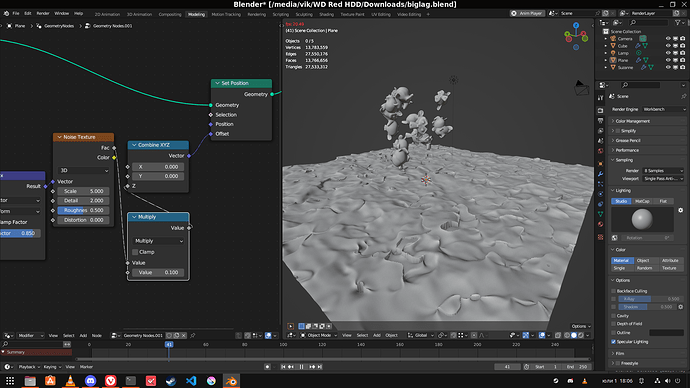

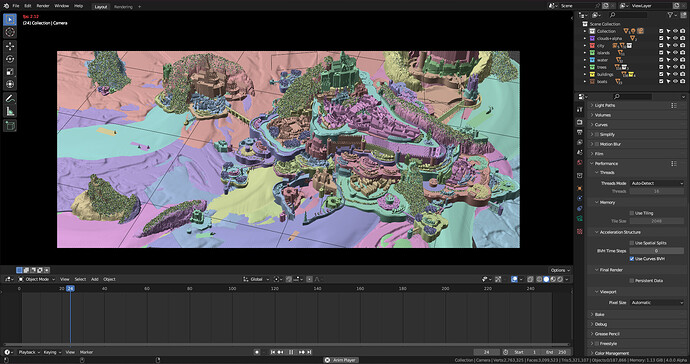

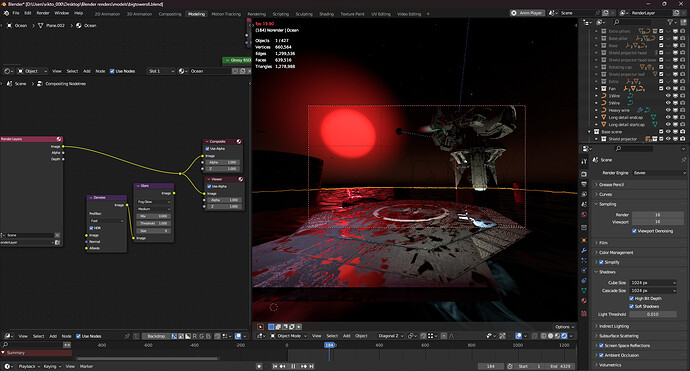

Here are some render results, collated into a single picture so you may inspect them for artifacts (though I didn’t see any):

Pic

The above is a lower resolution picture for preview. I don’t know why the big one didn’t work when I posted my reply, probably because of its size, so here’s a direct link: https://i.imgur.com/kbxDOKa.jpg

Methodology / details / extras

Driver used was 4369, testing on Windows with the 3.6 beta build 5531d610f9a7. The GPU is the A770 LE 16GB, the CPU is the Ryzen 3700X, and there’s 48GB of non-XMP’d RAM @ 2400MHz. No overclocking on anything else either. Cycles’ render device was set to the GPU only, without the CPU, as adding the CPU lowers performance for any GPU anyway, so that’s not an Intel issue. Rendering was done from the command line with factory-startup, which starts faster because it doesn’t load a ton of unnecessary addons. Each file was rendered once as a warm-up and that result was discarded; the reported times are averages of the next 3 renders (memory is the max, though it shouldn’t vary).
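For anyone wanting to repeat something similar, here is a rough sketch of the warm-up-then-average procedure in Python. It is not the script used for these results. The frame number, the `blender` executable name, and the helper names are assumptions for illustration, and it only measures wall-clock total time, not the per-phase times listed earlier. How Embree on GPU gets toggled is not covered here.

```python
import subprocess
import time
from statistics import mean


def blender_command(blend_file, blender="blender", device="ONEAPI"):
    # -b: run without the UI; --factory-startup: ignore user preferences and addons;
    # -f 1: render frame 1 (assumed). Arguments after "--" are passed on to Cycles.
    return [blender, "-b", "--factory-startup", blend_file,
            "-f", "1", "--", "--cycles-device", device]


def timed_run(cmd):
    """Run one render and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
    return time.perf_counter() - start


def summarize(runs):
    """runs: list of (seconds, peak_mem_mb); the first entry is the warm-up.

    Returns the mean time and the max memory of the runs after the warm-up.
    Excluding the warm-up from the memory max is an assumption.
    """
    measured = runs[1:]
    return mean(t for t, _ in measured), max(m for _, m in measured)


def benchmark(blend_file, repeats=3):
    """Render once as a warm-up, then return the mean time of `repeats` renders."""
    cmd = blender_command(blend_file)
    timed_run(cmd)  # warm-up render, result discarded
    return mean(timed_run(cmd) for _ in range(repeats))
```

Calling `benchmark("classroom.blend")` would need Blender on the PATH and the scene file in the working directory; `summarize` can be tried on its own with any list of `(seconds, memory)` pairs.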

Here are the full results, including every single benchmark:

full_out.txt (7.7 KB)

Barcelona did not work in Cycles, even with CPU, so it’s not an Intel issue. All textures were white. Worked in Eevee though… Oh well. That’s why it’s not in the results. Also it complained about not having a water bump texture when packing (water still had bump). Man…

Cosmos laundromat caused a bunch of bone-related errors when loading (Intel unrelated). It rendered fine without Embree on GPU, but with it enabled Blender crashes after rendering finishes (Intel related). This is why there’s a viewport picture instead and a -1 total time. Also, the eyes are not a black artifact, just a wireframe at a low resolution.

Also also, rendering cosmos laundromat in Blender causes my PC to freeze for a bit midway through, getting a spike in 3D GPU utilization and compute dropping, then resuming as normal. No running out of RAM or VRAM, so this is likely a related bug.

Reading the full results, barbershop crashed similarly on its warmup render, but did fine otherwise… I don’t know if it’s the exact same case though, since I overwrite the log Blender gives on each render, and at a glance it doesn’t seem reproducible given the other renders worked fine.

Embree on GPU is still somewhat experimental. When it was introduced a few weeks ago, Junk Shop actually became 50% slower to render with Embree on GPU, and my nightmare hair test became 2x slower. As you can see, this has clearly changed already, and performance will most likely keep improving more and more.

Embree on GPU did not work on Linux a few days ago, but Intel JUST released a new update to their compute drivers there, so that (or a Blender update) may have fixed it.

Denoising, when present (Italian flat, junk shop), was turned off.

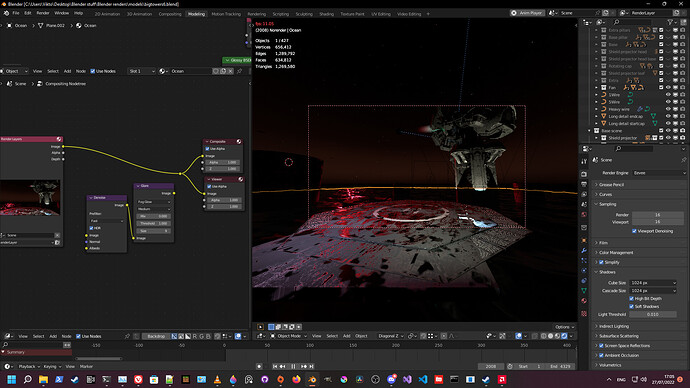

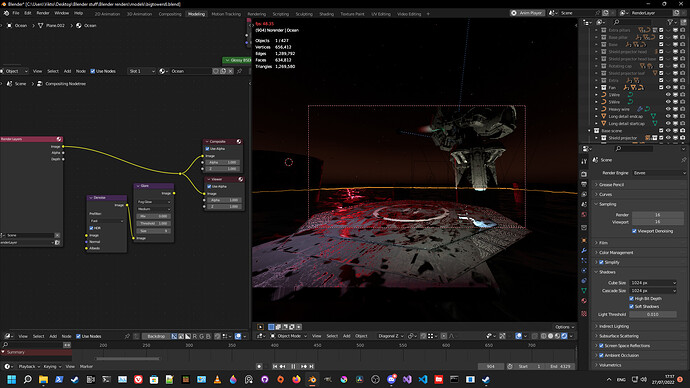

The bonus render (one_lotsa_hair) is a model I found online :^) and did a bunch of tweaks to. Hence, I’m not super comfortable sharing it. The hair was very painfully done using the old particle hair system. Hence, it looks slightly odd in some places. One day I’ll update to the new hair. The character is One, but is not a oneAPI mascot; rather, from a niche, not super good PS3 game, directed by the same guy who directed Automata. If you actually care about this though, please emulate it (not pirate it) instead of playing it on a PS3; you will have a much better time at 60FPS.

Included because I like benchmarking it, and because the hair’s rendering results are interesting.