Sweet!

Does anyone have experience with how Arc handles other popular graphics apps, such as Unreal Engine, Stable Diffusion XL, etc.? Does it “just work” like Nvidia cards do, or should you expect hassle if you want to use it for a wide range of tasks?

If I buy a GPU for hundreds of dollars, I don’t want it to excel at just one or a few tasks.

I do not have any experience with Arc in particular, but given how dominant Nvidia is, you can probably expect it to always be a step behind.

Also, it is worth keeping in mind that even if the software vendor or Intel itself offers support for these Arc GPUs, there aren’t that many of them around. Even looking online, I can only see a couple of them for sale. Naturally, a small user base means less real-world testing and fewer chances for bugs to be discovered and quickly resolved.

I run both Nvidia and AMD cards, and every time software like DaVinci or UE is updated, there is a high probability of running into a bug of some sort on the red (AMD) card. And there are probably several times more AMD cards out there than Arc cards.

True, maybe “excel” was too strong a word, but if Arc at least *works*, that would be good enough for me personally. I don’t need top performance or anything like that.

Anything much faster than running on CPU would be good enough xD

I just don’t want to buy pretty expensive hardware and later find out “nope, you can’t use it at all in this workflow”.

That is what I mean by being a step behind. Everything is developed on Nvidia hardware first and only then adapted to work on cards from other vendors. Sometimes that is a short wait, sometimes a long one. Also, bugs may render the hardware unusable.

For example, UE5. When it went from version 5.0 to 5.1, ray tracing was completely broken on AMD hardware, producing a bright, flickering effect. You would think such an important feature would work on day one, especially when it was working fine in 5.0. Epic Games is not a cash-strapped company, and neither is AMD anymore. You would think features were extensively tested before release, but something, somewhere went wrong, rendering the software unusable on anything other than Nvidia.

As for raw performance, I doubt Intel can catch up to Nvidia anytime soon. It would take them several generations.

If you want something that just works, Nvidia is probably the way to go. Intel’s hardware is still on its first generation, and while they are making progress on the software side, I would at least wait for gen two before making an investment.

OK, so if you’re going to buy Arc, you would first have to research whether it works in your current workflows, and even then, future versions of various software or a change in your workflow could jeopardize that. This is what makes Nvidia convenient: if I buy an Nvidia card, I know it will always work (and if not, it will be bug-fixed fast as hell).

I still want to switch from Nvidia because I think their cards are overpriced cash grabs nowadays. (My current Nvidia card is way overpriced, but at least it works for everything).

In any case, ty for your inputs.

I recently bought two 16GB A770s for my Linux machine. My reasons for going with Intel this time:

Pros:

- way better performance/$ ratio than AMD cards

- open source drivers, unlike NVIDIA

- faster driver development and shipment than AMD

- supports all necessary features in Blender (hardware RT, multi GPU, light tree)

- sane GPU size (two-slot)

- sane power draw

- decent VRAM size for the price

- memory pooling is listed on the roadmap (I don’t expect much, but it’s nice to see this as an option)

- don’t need to worry about Path Guiding support

Cons:

- GPU is not self-repair friendly

- temps and cooling (need to figure out fan speed control)

- can’t see any improvement in Workbench speed between the Radeon VII and the A770 (the only serious issue)

- Steam UI lags (most likely related to unstable Linux distro that I’m running)

I haven’t tested Stable Diffusion XL yet, but it’s on my list.

I don’t use UE so I cannot help here.

I haven’t found any other issues in day-to-day use, but I don’t use any heavy DCC apps other than Blender.

Right now my first priority is to help with this: https://projects.blender.org/blender/blender/issues/110504#issue

@RightClaw if you want everything to work perfectly, I’d stay away. But if you are willing to be a QC guy, go ahead. Arc prices are low for a reason.

Regardless of the pros you’ve listed, you currently have probably the least compatible GPU series and operating system combo out there.

Expect headaches trying to run and troubleshoot bugs, glitches, crashes and compatibility issues in all sorts of software, not for months but for years to come.

You may have saved some money upfront, but unless you value your time at $0/hour, you are probably going to lose a lot more in the long term.

I tested all my active Blender projects right after the purchase. Apart from the lack of any viewport performance boost over my previous GPU and the one bug I reported, everything else works fine.

I think you’re exaggerating a little. Intel has historically had much better Linux support than even AMD, and they are actively working on the Mesa drivers.

And if I hit a total wall, I can easily switch to any AMD GPU, since they use Mesa and ROCm is in the Debian repos.

That’s a great approach when making a purchase: you figure out what you gain, what you lose and what’s important to your workflow.

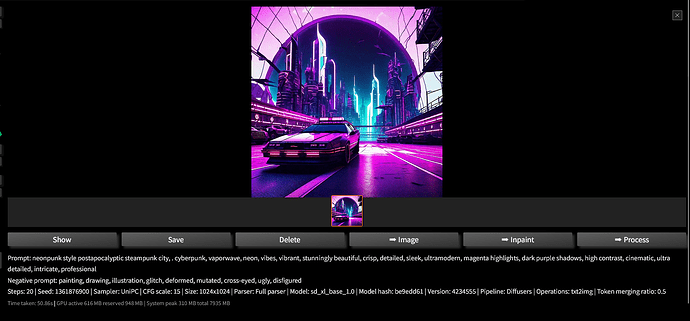

Thank you for the comprehensive write-up! It would be interesting to hear how well Stable Diffusion XL works on the 16GB A770 when you have time to try it.

Depending on what scene/data you are testing, it could be as much related to the CPU as to the GPU, given that some things can still be limited to a single core.

I don’t have any numbers on this unfortunately.

I have a couple of heavy scenes that are limited by how Workbench handles lots of instances or heavy geometry (over ~30M polys).

The same scenes have viewport lag on both GPUs (RVII and A770). And this is only in Workbench, not in Cycles.

And no, CPU is not a bottleneck. I monitor all system resources all the time, and there is no CPU load when navigating in the problematic scenes in Workbench.

Are you looking at every CPU core while doing that? The CPU as a whole may report 14% usage, but that figure is averaged over, say, all 8 cores, when in fact a single main thread is maxing out one core. That is the bottleneck, so the GPU makes far less difference.

I did some testing when I upgraded from a 1070 Ti to a 3080 Ti, and in some cases the new GPU made no difference.
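To spot the single-thread bottleneck described above, you need per-core figures rather than the aggregate. Below is a minimal Python sketch for Linux, assuming the standard `/proc/stat` layout (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice). It samples the counters twice and prints each core’s busy percentage. The function names are hypothetical; htop and conky already show the same information.

```python
import time
from pathlib import Path


def parse_proc_stat(text):
    """Map each per-core line ('cpu0', 'cpu1', ...) to (idle_ticks, total_ticks)."""
    counters = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip the aggregate 'cpu' line and non-CPU lines such as 'intr' or 'ctxt'.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        values = [int(v) for v in fields[1:]]
        idle = values[3] + (values[4] if len(values) > 4 else 0)  # idle + iowait
        total = sum(values[:8])  # guest/guest_nice are already counted in user/nice
        counters[fields[0]] = (idle, total)
    return counters


def per_core_usage(before, after):
    """Busy percentage of each core between two snapshots."""
    usage = {}
    for cpu, (idle0, total0) in before.items():
        if cpu not in after:  # core went offline between samples
            continue
        idle1, total1 = after[cpu]
        elapsed = total1 - total0
        usage[cpu] = 0.0 if elapsed == 0 else 100.0 * (1 - (idle1 - idle0) / elapsed)
    return usage


def sample(interval=1.0):
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    stat = Path("/proc/stat")
    before = parse_proc_stat(stat.read_text())
    time.sleep(interval)
    after = parse_proc_stat(stat.read_text())
    return per_core_usage(before, after)


if __name__ == "__main__" and Path("/proc/stat").exists():
    usage = sample()
    for cpu in sorted(usage, key=lambda name: int(name[3:])):
        print(f"{cpu}: {usage[cpu]:5.1f}%")
    # The average hides a saturated core: one core at 100% on 8 cores is only 12.5%.
    print(f"average: {sum(usage.values()) / len(usage):5.1f}%")
```

Run it while navigating the problematic scene: one core pinned near 100% while the average stays low points to a single-threaded limit rather than the GPU.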

Both conky and htop report every thread separately.

It’s buggy, but it can work, and things are improving. If you want to get an Arc GPU and use SDXL specifically right now, expect possible headaches. IPEX (Intel Extension for PyTorch) was very recently updated to 2.0 and gained native Windows support. Not that you should use it natively: it’s probably still better to use WSL2 or native Linux for the time being.

Here’s someone’s example image:

You may ask specifically about SDXL now, then get annoyed when you realize a specific ControlNet annotator, Tortoise or something else doesn’t work. And, regardless of Arc, many people hard-coded magic strings like ‘cuda’ when developing their AI apps, and those magic strings aren’t going to remove themselves.
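The problem with hard-coded ‘cuda’ strings is easier to see in code. Below is a minimal Python sketch of device selection that does not assume CUDA. It assumes a PyTorch build where `torch.xpu` exists (recent PyTorch releases, or older ones after `intel_extension_for_pytorch` has been imported). The helper names are hypothetical, and this is not taken from any particular SDXL frontend.

```python
import importlib.util


def first_available(candidates, fallback="cpu"):
    """Return the name of the first backend whose availability check passes."""
    for name, is_available in candidates:
        if is_available():
            return name
    return fallback


def pick_device():
    """Choose 'cuda', 'xpu' or 'cpu' instead of hard-coding 'cuda'."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed at all
    import torch

    try:
        # Assumption: older Arc setups only expose torch.xpu after IPEX is imported.
        import intel_extension_for_pytorch  # noqa: F401
    except ImportError:
        pass

    candidates = [("cuda", torch.cuda.is_available)]
    xpu = getattr(torch, "xpu", None)  # absent on older PyTorch without IPEX
    if xpu is not None:
        candidates.append(("xpu", xpu.is_available))
    return first_available(candidates)


if __name__ == "__main__":
    device = pick_device()
    print(f"using device: {device}")
    # Models and tensors would then be moved with .to(device), not .to("cuda").
```

On ROCm builds of PyTorch, AMD GPUs are also exposed through `torch.cuda`, which is part of why the ‘cuda’ string is so common; the trouble starts when code never checks for anything else.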