What are your thoughts on this? Do you think Blender is, or will become, a great tool for real-time, audio-synced VJ loop animations? Can it be used instead of TouchDesigner, MadMapper, Resolume, etc.?

I am not sure Blender is any good for working with real-time input. Its own game engine was removed (in 2.80). You’ll have more luck with Unity or Unreal (though Blender can be used for assets).

Thanks for the comment, it means a lot!

Well, I’m already experimenting and there is some hope when using EEVEE, only my graphics card is too old (GTX 750 Ti), and right now I cannot tell whether the low FPS of some animations is due to my GPU or to Blender’s optimization… The purpose of EEVEE is real-time rendering, right? I also read that there is a plan to migrate Blender’s rendering from OpenGL to Vulkan soon, which they say would improve performance even more. I wonder whether, once that happens and I have a better GPU, it will solve my low FPS problem…

Shout-out to @example.sk: I am using his add-on to feed in audio and sync it to my animations (the add-on is called Audvis, give it a try).

Some of my experiments so far, more to come soon: https://vimeo.com/user157076695

Thanks again for the comment, I’d be happy to hear even more!

Looks like you understand this question better than I do, if you already have working demos. I didn’t know you could hide the UI like that.

And Eevee usually isn’t “realtime” in practice. Even though it is a fast renderer by itself, the bottleneck here is the Blender editor, which is nowhere near as optimized as a game engine. Most animation-related modifiers, such as Armature and Subdivision Surface, do reduce FPS when used on medium- to high-poly meshes. I assume particle systems aren’t the most performant either. Even the way Blender represents 3D meshes is slower and less optimized than in a game, since it has to support the mesh editing tools. The GTX 750 is low-end by modern standards, yes, but you won’t necessarily get more FPS just by replacing the graphics card. When you play back animations of any kind, the main stress is on the CPU. You’ll need to optimize your effects as well to get closer to realtime.
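To put “closer to realtime” in numbers: for smooth playback at a target frame rate, everything Blender evaluates and draws for one frame has to fit in 1000 / fps milliseconds. A minimal Python sketch of that arithmetic; the 24/30/60 fps targets and the 20 ms per-frame cost are example values only, not measurements from this thread.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a target playback rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def keeps_up(measured_frame_ms: float, target_fps: float) -> bool:
    """True if a measured per-frame cost fits inside the target budget."""
    return measured_frame_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    for fps in (24, 30, 60):
        print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
    # Example: a scene costing 20 ms per frame holds 30 fps but not 60.
    print(keeps_up(20.0, 30), keeps_up(20.0, 60))
```

At 60 fps the budget is about 16.67 ms, so a scene that needs 20 ms per frame will visibly drop frames no matter which part of the hardware is busy.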

Exactly!

I’m getting low FPS especially with Subdivision Surface.

And you’re right: if I upgrade, I will upgrade the whole rig. I mentioned the GPU because when I play the animation its usage goes from 0 to 100%, and that’s why I assumed the GPU would be the “most important” hardware here.

If you’re right that a game engine will perform much better, it’s definitely something I should try! I want to do it in Blender just because I can use the same software for so many different things…

Have you turned on GPU Subdivision in your preferences?

Hi man, thanks! First time hearing about that. I just checked, and sadly it is already enabled.

Even with that GPU, animations can run smoothly, but as @DeckardX08 mentioned, they have to be optimized and simple. That’s why I wonder whether better hardware would boost my performance and possibilities enough that I can trust there won’t be low FPS during a live show. For example, an animation similar to this one: https://vimeo.com/711812020 (It’s something I made with Blender, but it’s rendered; played back in the viewport it’s not smooth at all.)

I guess the particles are the slowest part here. Try replacing them with something like a plane with an animated Displace modifier to “crumple” it, and some cylinder instances placed using Geometry Nodes. Geometry Nodes can be slow, but with fewer nodes they might be faster than particles, IDK.

Oh man thanks for the suggestion.

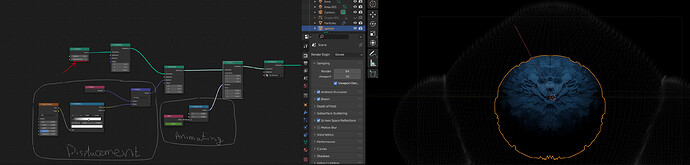

While making this animation, I noticed that the subdivision of the sphere was what caused the low FPS the most.
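Why subdividing the sphere hurts so much: each Catmull-Clark level (the default mode of Blender’s Subdivision Surface modifier) turns every quad into 4 quads, and on the first level every triangle into 3 quads, so the face count grows roughly 4× per level. A minimal Python sketch of that count, ignoring n-gons; the 448-quad / 64-triangle layout (what a 32-segment, 16-ring UV sphere has) is an example, not the mesh from the screenshot.

```python
def subdivided_face_count(quads: int, tris: int, levels: int) -> int:
    """Face count after `levels` of Catmull-Clark subdivision (n-gons ignored).

    Level 1 splits each quad into 4 quads and each triangle into 3 quads;
    every later level splits each (now all-quad) face into 4.
    """
    if levels < 0:
        raise ValueError("levels must be >= 0")
    if levels == 0:
        return quads + tris
    return (4 * quads + 3 * tris) * 4 ** (levels - 1)


if __name__ == "__main__":
    # Example layout: 448 quads + 64 triangles (32-segment, 16-ring UV sphere).
    for level in range(5):
        print(level, subdivided_face_count(448, 64, level))
```

Going from level 2 to level 4 multiplies the face count by 16, which is why dropping a level or two and faking the detail (e.g. with a normal map) can pay off so much.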

The particles around the sphere are instances created with Instance on Points and animated with the Translate Instances node.

Maybe instead of subdividing the mesh I should use the Displacement shader node to achieve the same or a similar effect on the sphere, what do you think?

So it’s already Geometry Nodes, sorry then. Unfortunately, Eevee doesn’t support shader-based displacement yet, if you were planning to use that. Maybe you can generate a normal map matching your displacement to get away with fewer subdivisions?

Okay, this is a good direction I can take, thank you!

Absolutely not practical and never will be. Square peg in a round hole. You’ll never get an appropriate level of latency from Blender as it’s not designed for such a purpose.

While I’ve not tried it personally, Tooll3 seems incredibly impressive and will be my first stop if I need to build anything to accompany, and respond to, a live performance.

Thanks for the comment man,

I know it’s not the ultimate tool for VJing, and you’re right that Tooll3 looks promising. There are also TouchDesigner and Resolume, and I should probably try one of them. But since I’ve had some success with simple, optimized animations even on old hardware, I hope there is some chance that Blender becomes, if not the best, at least some kind of VJ tool…

Also, reading Blender’s roadmap, can we say the developers are looking in a similar direction?

Some quotes from the Blender 3.x roadmap:

Real-time compositing system is also planned, bringing compositing nodes into the 3D viewport. The new Eevee will be designed to efficiently output render passes and layers for interactive compositing, and other renderers will be able to plug into this system too.

Blender needs a formal design concept for this; enabling all tools and options for Blender to always work, whether playing in real-time or still. Design challenges are related to animation playback, dependencies, caching and optimization. Ideally the real-time viewport could be used for prototyping and designing real-time environments or experiences.

Ongoing improvements of architecture and code will continue, aiming at better modularity and performances

As promised at the 2019 Blender Conference, compositing is getting attention from developers again. The main goal is to keep things stable and solve performance bottlenecks. New is support for a stable coordinate system using a canvas principle.

I’d be happy to hear further opinions, thanks man!

I am a video DJ and I do use Blender, but not in real time. I create the video files, render them together with the audio as MP4s, and perform with those MP4 files. Yes, it’s static, but it’s very reliable and works well with Serato & MixEmergency, which is what I use.

Yes, Blender is awesome and great to make loop animations especially using geo nodes, but I gave up on using it for VJing. I started learning TouchDesigner and it’s promising for real time animations.

I still believe Blender could be good for that in the near future, especially once they switch to Vulkan.

Thanks for the answer, man, I’d be happy to see some of your work!

This isn’t a visualization I use for VJing, but it’s something I’ve been working on. It’s a composite of Blender renders and other bits, with a script that generates the whole thing from any file with audio. There’s a detailed breakdown in the YouTube description. Since I’m awful at hard-surface texturing, I’ll be posting the .blend file here to see if anyone is interested in improving the textures/materials or lighting. Most of my VJ visuals were created with a grab bag of Blender-rendered parts and other tools, like command-line tools that give me a frame-by-frame output of the audio visualization.
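For the frame-by-frame audio output mentioned above, here is a minimal sketch of one way to get it with only Python’s standard library (`wave`, `array`): read a 16-bit PCM WAV, mix it down to mono, and compute one RMS loudness value per video frame. This is not the poster’s tool; the script name, file name, fps argument, and CSV-style output are assumptions for illustration.

```python
import array
import math
import sys
import wave


def read_mono_16bit(path: str) -> tuple[list[float], int]:
    """Read a 16-bit PCM WAV file; return (mono samples in [-1, 1), sample rate)."""
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = wf.getnchannels()
        rate = wf.getframerate()
        raw = array.array("h", wf.readframes(wf.getnframes()))
    if sys.byteorder == "big":
        raw.byteswap()  # WAV sample data is little-endian
    # Average the interleaved channels into one sample per audio frame.
    mono = [
        sum(raw[i:i + channels]) / (channels * 32768.0)
        for i in range(0, len(raw), channels)
    ]
    return mono, rate


def rms_per_video_frame(samples: list[float], sample_rate: int, fps: float) -> list[float]:
    """Return one RMS loudness value per video frame at `fps`."""
    values = []
    video_frame = 0
    while True:
        start = round(video_frame * sample_rate / fps)
        if start >= len(samples):
            break
        end = min(round((video_frame + 1) * sample_rate / fps), len(samples))
        end = max(end, start + 1)  # always use at least one sample
        chunk = samples[start:end]
        values.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
        video_frame += 1
    return values


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python frame_rms.py track.wav 30  (prints "frame,rms")
    samples, rate = read_mono_16bit(sys.argv[1])
    for i, v in enumerate(rms_per_video_frame(samples, rate, float(sys.argv[2]))):
        print(f"{i},{v:.4f}")
```

The resulting list has one value per frame, so it can be keyframed or read by a driver without Blender having to analyze the audio during playback.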

Also keep an eye on Tooll3, which aims to be an open-source TouchDesigner replacement. I haven’t tried it yet, but the demos are very impressive. Windows only for now.

still-scene/t3 (github.com): “Tooll 3 is an open source software to create realtime motion graphics.”

On a side note, I recommend checking out the “sequencer volume” script by @BD3D. It reads the VSE volume at the current frame and outputs it to a Geometry Nodes node. It’s really useful for audio visualization, and doesn’t require baking or keyframes.
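Once a per-frame volume value reaches Geometry Nodes, it usually has to be rescaled into a useful range (say, a displacement strength or instance scale), which is what Blender’s Map Range node does. The plain-Python sketch below mimics that node’s Linear mode with clamping, and adds a simple attack/release smoother so visuals don’t flicker on every transient. The smoother is an added assumption, not part of the script mentioned above, and all coefficients and ranges are arbitrary example values.

```python
def map_range(value, from_min, from_max, to_min, to_max, clamp=True):
    """Linear remap in the spirit of the Map Range node's Linear mode."""
    if from_max == from_min:
        return to_min  # avoid division by zero (a choice made for this sketch)
    t = (value - from_min) / (from_max - from_min)
    if clamp:
        t = min(max(t, 0.0), 1.0)
    return to_min + t * (to_max - to_min)


def follow_envelope(values, attack=0.6, release=0.1):
    """One-pole smoother: rises quickly (attack), falls slowly (release)."""
    out = []
    level = 0.0
    for v in values:
        coeff = attack if v > level else release
        level += coeff * (v - level)
        out.append(level)
    return out


if __name__ == "__main__":
    volumes = [0.0, 0.25, 0.05, 0.3, 0.0]  # example per-frame volumes
    smoothed = follow_envelope(volumes)
    print([round(map_range(v, 0.0, 0.3, 0.0, 1.5), 3) for v in smoothed])
```

A fast attack with a slow release keeps beats punchy while letting the motion settle gradually, which tends to read better on stage than the raw per-frame volume.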

Oh, and this one! It syncs Audacity with the Blender VSE, so you can actually edit audio and still have all of Blender’s flexibility.

![Reel To Reel Audio Visualizer [V1]](https://blenderartists.org/uploads/default/original/4X/b/8/b/b8ba972d05bcb4ebe9fc30d80cd698b839ca556a.jpeg)