Common misconception.

We are literally talking about three uniquely coloured lights that can project at any intensity. Think of it as a note played at a different volume or a particular colour of paint at a different density.

If you think about a simple green tree, the greenish light and the reddish light are projecting, with a little bluish light in the mix to desaturate it slightly. Of course, the light intensity is highest for green, then a splash of red, and a hint of blue.

Perhaps in some random colour space the display-referred output RGB intensities would be something like 0.1 0.9 0.02. So what happens if we keep increasing the intensity? At some point the green emission hits its limit, yet all three channels would have to keep increasing in the same ratio to hold the chromaticity, or loosely the “colour” of the light, the same. Since the green channel can’t represent a more intense green emission, only the red and blue climb, with the reddish light climbing rapidly up to match the green. This leads to the dreaded trees and foliage turning yellow. The same happens with light and dark skin tones turning yellow, or skies turning cyan.
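To make the arithmetic concrete, here is a minimal Python sketch. It assumes linear display-referred values with a per-channel ceiling of 1.0 and a naive per-channel clip, which is my stand-in for "no gamut mapping". The names `expose_and_clip` and `ratios` are made up for illustration.

```python
def expose_and_clip(rgb, stops):
    """Scale linear RGB by 2**stops, then clip each channel at 1.0."""
    gain = 2.0 ** stops
    return tuple(min(c * gain, 1.0) for c in rgb)


def ratios(rgb):
    """Express each channel relative to the largest one (rounded)."""
    peak = max(rgb)
    return tuple(round(c / peak, 3) for c in rgb)


# The foliage triplet from the paragraph above.
foliage = (0.1, 0.9, 0.02)

for stops in range(5):
    out = expose_and_clip(foliage, stops)
    # Once green is stuck at 1.0, the red-to-green ratio keeps rising
    # until red also clips and the mix reads as yellow.
    print(stops, out, ratios(out))
```

By four stops both red and green sit at the ceiling, so the original ratio of roughly 1:9 has become 1:1, which is the yellow skew.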

Here is a sample photo that Mike Pan found in a Twitter link as an example. It’s excellent because it demonstrates the ghastly yellow issue on skin extremely well.

Gamut mapping along intensity is somewhat of a new thing though, and folks don’t have it very visible on the radar. Gamut mapping along saturation has been engaged with for a long time via the print industry. Heck, even ICC profiles have gamut mapping baked right in via their rendering intents!

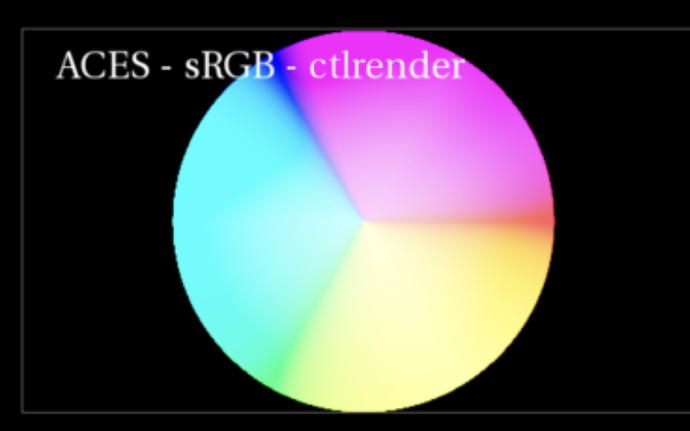

ACES suffers in just the same way as sRGB for this reason, and you end up with the same ghastly skew.

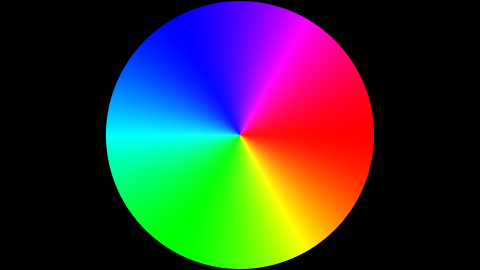

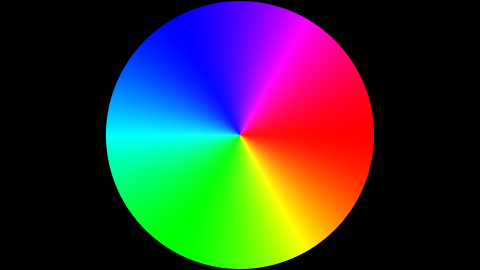

You can do this sort of test on your own. Start with a generic colour wheel mix such as the following:

Increase exposure. Presto, nasty skew! See all the mixtures between the pure primaries and how they lose the originally intended colour and skew to the pure primary mixtures of magenta, yellow, and cyan?
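If you would rather see numbers than pixels, here is a rough sketch of the same test using Python's standard `colorsys` module. It assumes a fully saturated wheel at half intensity, a naive per-channel clip at 1.0, and HSV hue as a loose proxy for the "colour" of the mix. The helper name `hue_after_exposure` is made up for illustration.

```python
import colorsys


def hue_after_exposure(hue_deg, stops, value=0.5):
    """Return the HSV hue (degrees) after scaling by 2**stops and clipping."""
    r, g, b = colorsys.hsv_to_rgb(hue_deg / 360.0, 1.0, value)
    gain = 2.0 ** stops
    clipped = [min(c * gain, 1.0) for c in (r, g, b)]
    h, _, _ = colorsys.rgb_to_hsv(*clipped)
    return round(h * 360.0) % 360


# Walk around the wheel in 30 degree steps and push exposure up two stops.
for hue in range(0, 360, 30):
    print(hue, "->", hue_after_exposure(hue, 2))
```

Under these assumptions the pure primaries (0, 120, 240) stay put, while the in-between mixtures land on 60, 180, or 300, that is yellow, cyan, or magenta.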

Once you realize that most scenes contain elements whose exposure extends beyond the gamut of intensities, it becomes clear that this sort of mangling is happening in every image you make.

Filmic has a gamut mapping, albeit a very crude one. It too can be broken, but it isn’t nearly as broken as not having any gamut mapping at all.