Hi!

After getting hyped by this great tutorial https://www.youtube.com/watch?v=jT9LWq279OI and finding out about the new “Ray Portal BSDF” shader, I just had to create a lens simulator!

Coming from a Houdini workflow, I decided to simulate the lens virtually in a custom OSL shader. This gives me full control over everything without having to leave my cozy coding environment.

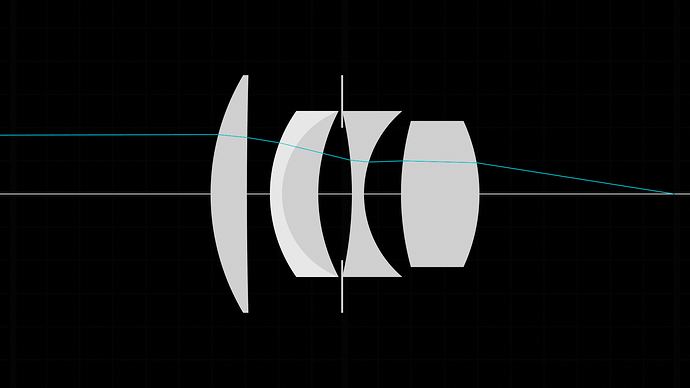

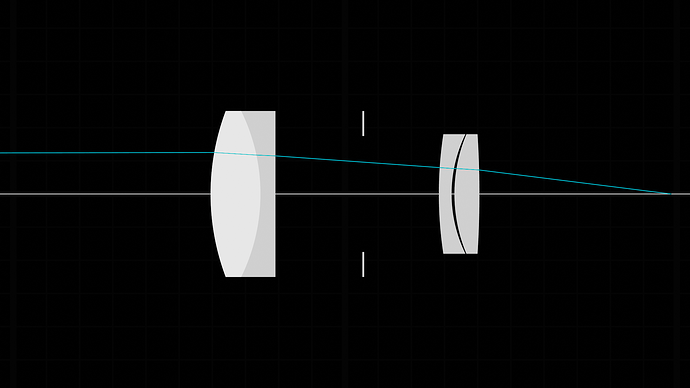

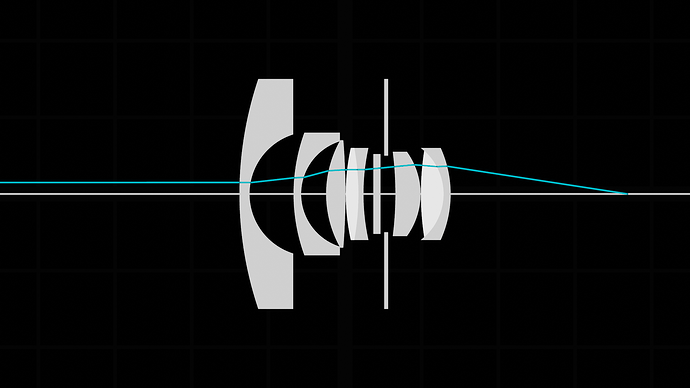

Lenses are implemented from real-world lens patents: to implement a new lens, I simply enter the lens data from the corresponding patent. I need to make a few manual adjustments per lens, since the aperture position and size, plus the lens cutoff height, are not given in the patent.
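Patent tables typically list, for each surface, a radius of curvature, the axial distance to the next surface and the refractive index of the glass that follows. Below is a minimal sketch of how such a table could be stored, plus a paraxial sanity check of the effective focal length using 2×2 ray transfer matrices. The `Surface` fields, the sign convention (radius positive when the centre of curvature lies behind the surface, light travelling left to right) and the hand-set `semi_diameter` are assumptions for illustration, not the author's OSL code.

```python
import math
from dataclasses import dataclass


@dataclass
class Surface:
    radius: float      # R in mm; math.inf for a flat surface
    thickness: float   # axial distance to the next surface in mm
    n: float           # refractive index (n_d) of the medium after this surface
    semi_diameter: float = math.inf  # hypothetical field: cutoff height, set by hand


def matmul(p, q):
    """Multiply two 2x2 matrices stored as nested tuples."""
    return ((p[0][0] * q[0][0] + p[0][1] * q[1][0], p[0][0] * q[0][1] + p[0][1] * q[1][1]),
            (p[1][0] * q[0][0] + p[1][1] * q[1][0], p[1][0] * q[0][1] + p[1][1] * q[1][1]))


def refraction(n1, n2, radius):
    """Paraxial refraction at a spherical surface, acting on (height, angle)."""
    return ((1.0, 0.0), ((n1 - n2) / (n2 * radius), n1 / n2))


def translation(d):
    """Free propagation over an axial distance d."""
    return ((1.0, d), (0.0, 1.0))


def system_matrix(surfaces, n_object=1.0):
    """Ray transfer matrix from the first vertex to just after the last surface."""
    m = ((1.0, 0.0), (0.0, 1.0))
    n1 = n_object
    for i, s in enumerate(surfaces):
        m = matmul(refraction(n1, s.n, s.radius), m)
        if i < len(surfaces) - 1:  # the last thickness (back focus) is left out
            m = matmul(translation(s.thickness), m)
        n1 = s.n
    return m


def effective_focal_length(surfaces):
    # With air on both sides, EFL = -1 / C, where C is the lower-left element.
    return -1.0 / system_matrix(surfaces)[1][0]
```

As a check, a plano-convex element with R = 51.68 mm and n_d = 1.5168, treated as infinitely thin, comes out at f = 100 mm, matching the lensmaker's equation f = R / (n − 1).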

So far I have calculated chromatic aberration in a non-realistic way, simply shifting the R, G and B rays by an arbitrary amount that I think looks good. A random color channel (R, G or B) is simulated per sample; this introduces more noise in the image, since more samples are required for the result to average out to white light. It would be cool to simulate the wavelengths in a realistic way, but that is a little beyond my math knowledge at the moment.
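One possible step toward wavelength-based dispersion: lens patents usually give each glass as a refractive index n_d and an Abbe number ν_d, and a two-term Cauchy fit n(λ) = A + B/λ² can be derived from just those two numbers. This is a common approximation, not an exact glass model (Sellmeier coefficients from a glass catalog would be more accurate). In a shader, each sample could draw a wavelength, refract with n(λ), and weight the result by that wavelength's color; the wavelength-to-RGB conversion (for example via CIE color-matching functions) is not shown here.

```python
# Fraunhofer line wavelengths in micrometres.
LAMBDA_D = 0.5875618  # helium d line
LAMBDA_F = 0.4861327  # hydrogen F line
LAMBDA_C = 0.6562725  # hydrogen C line


def cauchy_from_abbe(n_d, v_d):
    """Fit n(lambda) = A + B / lambda^2 to a glass given by n_d and Abbe number V_d."""
    # V_d = (n_d - 1) / (n_F - n_C), so the F-C index spread follows from the patent data.
    delta_fc = (n_d - 1.0) / v_d
    b = delta_fc / (1.0 / LAMBDA_F**2 - 1.0 / LAMBDA_C**2)
    a = n_d - b / LAMBDA_D**2

    def n(wavelength_um):
        """Refractive index at a wavelength given in micrometres."""
        return a + b / wavelength_um**2

    return n


# Example: a BK7-like crown glass (n_d = 1.5168, V_d = 64.17).
n_bk7 = cauchy_from_abbe(1.5168, 64.17)
```

Blue light then sees a higher index than red, so it is bent more strongly at every surface, which is what produces lateral and longitudinal color without any hand-tuned RGB offsets.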

Since the optimal sensor position for a given focus distance varies heavily between lenses, focal lengths and other lens properties, I decided to take a more mathematical approach instead of guessing or building a lookup table. I first ray trace a single ray from the desired focus distance to the camera; from where that ray hits the Y axis after tracing through the lens, I get the optimal sensor position for focusing the image at the desired focus distance. I then proceed with the normal lens simulation based on this new sensor position. This slows down the render by about 8.6%, but it is totally worth it. Setting the sensor position manually is also possible.
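The same focusing idea can be approximated paraxially: start a ray at the on-axis object point at the focus distance, push it through the lens's ray transfer matrix, and solve for where its height returns to zero behind the last surface. This sketch assumes the lens has already been reduced to a 2×2 matrix acting on (height, angle), measured from the first to the last vertex. The author's shader traces a real (non-paraxial) ray instead, so the result may differ somewhat for lenses with strong spherical aberration.

```python
import math


def sensor_distance(system, object_distance):
    """Paraxial image distance behind the last surface for an on-axis object.

    system: 2x2 ray transfer matrix ((A, B), (C, D)) of the whole lens, acting on
    (height, angle). object_distance: distance in front of the first surface,
    or math.inf for focus at infinity.
    """
    (a, b), (c, d) = system
    if math.isinf(object_distance):
        y, u = 1.0, 0.0  # ray parallel to the axis
    else:
        # Ray leaving the axial object point with slope 1, propagated to the first vertex.
        # Any slope gives the same answer, because paraxial optics is linear.
        y, u = object_distance, 1.0
    y_out = a * y + b * u
    u_out = c * y + d * u
    if u_out == 0.0:
        raise ValueError("afocal system: the ray never crosses the axis again")
    # Solve y_out + t * u_out = 0 for the distance t behind the last surface.
    return -y_out / u_out
```

For an ideal thin lens with f = 50 mm, focusing at 1000 mm puts the sensor 1000/19 ≈ 52.63 mm behind the lens, the same as the thin-lens equation 1/s' = 1/f − 1/s.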

I also added a lens schematic mode where I can debug and view the lens shape, including the ray used to calculate the optimal sensor position.

Rendering performance does not seem to drop significantly when rendering through the lens. I think the real performance killer is rendering DOF in general, not necessarily the lens itself, but I need to run some benchmarks to confirm this. Also, instead of changing the size of the simulated lens aperture, we can simply change Blender's F-Stop. This has more or less the same effect but greatly improves render speed, since we're not trying to sample light through a small hole when we want to reduce the bokeh size.
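A rough way to see why a small simulated aperture is slow: if camera rays are sampled uniformly over the fully open pupil and any ray blocked by the simulated stop is wasted, only (N_open / N)² of the samples survive. The sketch below assumes exactly that simplified model (uniform disk sampling, no vignetting, no pupil magnification); the function names are illustrative only.

```python
import math
import random


def aperture_diameter(focal_length_mm, f_number):
    """Entrance pupil diameter implied by an f-number: D = f / N."""
    return focal_length_mm / f_number


def pass_rate(f_wide, f_stopped):
    """Analytic fraction of wide-open samples that pass a stop closed to f_stopped."""
    return (f_wide / f_stopped) ** 2


def pass_rate_monte_carlo(f_wide, f_stopped, n_samples=200_000, seed=1):
    """Estimate the same fraction by sampling points uniformly on the open pupil."""
    rng = random.Random(seed)
    r_open, r_stop = 1.0 / f_wide, 1.0 / f_stopped  # radii up to a common factor
    hits = 0
    for _ in range(n_samples):
        # sqrt of a uniform variate gives a uniform distribution over the disk area
        r = r_open * math.sqrt(rng.random())
        if r <= r_stop:
            hits += 1
    return hits / n_samples
```

Under this model, stopping the simulated aperture of an f/1.4 lens down to f/8 keeps only about 3% of the samples, whereas lowering the camera's own F-Stop shrinks the sampling disk itself, so no samples are thrown away.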

A great side effect of using the “Ray Portal BSDF” shader is that most AOV passes ignore it, since the light ray is simply redirected.

Here are some results:

Early focus test:

Canon 85mm f/1.5

patent: DE1387593U (old German lens)

Kodak Petzval 1950

Minolta Fisheye 1978

Canon 53mm f/1.4

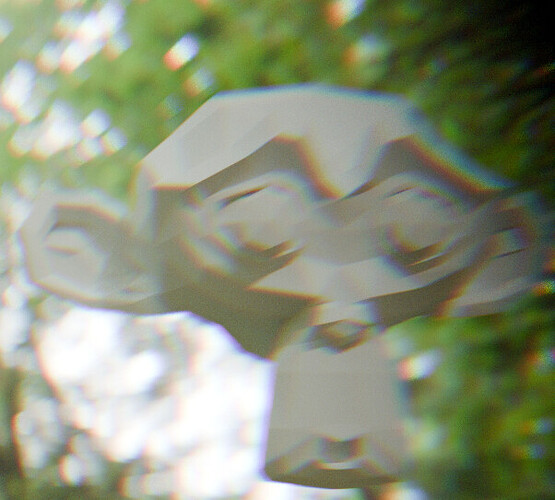

Outside view of the lens. I added a toggle to flip the image for convenience, since the original image formed by the lens is upside down.