This is a topic I have seen come up multiple times, but I thought I would revisit it, given that most of what I have found are old questions that I’m sure have since been forgotten.

Long story short, in Cycles, clear coat doesn't look right. Can someone explain, with some detail on the physics Blender specifically uses, how the Principled BSDF handles clear coat? And if you already know any mathematical workarounds for a few issues, I would love to hear them: namely, why the value of the underlying layer does not change at all when clearcoat is set to 1, and why the reflections generally look like they are missing.

Let me add to that. I have been using various engines for close to 12 years now, and I am certainly not new to Blender or Cycles. I know what I am asking for is not a "typical" feature of most render engines, at least as far as I have noticed. In my experience this almost always needs to be faked. But a few engines, like Cycles and Arnold, give you an extreme amount of control through math nodes and different mix drivers (we'll call them drivers because I am not sure if there is a technical word for it; if there is, I would love to know). So I figure that if there is a more accurate way to fake these issues than eyeballing a Layer Weight facing value and a color value change, it would be possible in one of these engines. Given the size of the Blender community, I figure I'll start there.

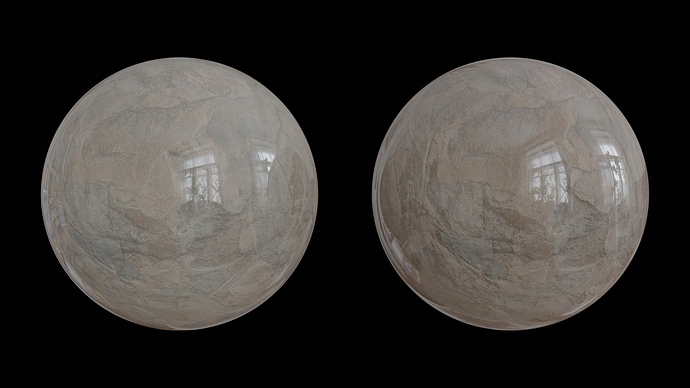

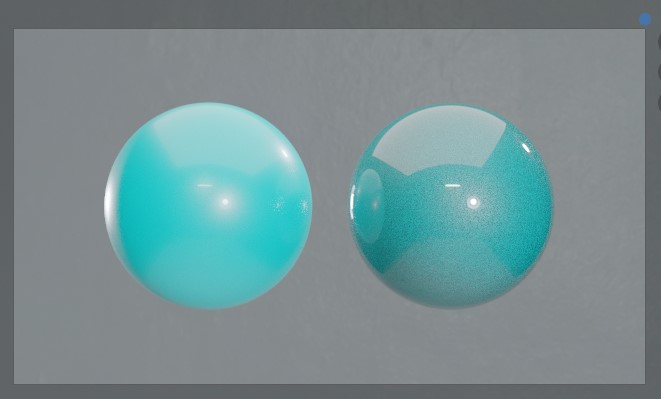

Here is a test comparing a physically modeled clear coat and a shader using “clearcoat” set to 1.

On the left, the clearcoat in the Principled BSDF shader barely reflects anything and generally feels very flat and dead. On the right is the physically modeled clear coat. I cannot speak to its real-world accuracy, but in my opinion it looks much closer to a real clear coat. Reflections straight on are much stronger but still respect the Fresnel effect, and the shadows are darker and much richer in color. And unlike what a Layer Weight node would give you, the rich dark shadows show up only where there is actual light falloff, not just where the surface is not directly facing the camera.
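To be concrete about what I mean by the Fresnel effect, here is a minimal sketch using Schlick's approximation. The coat IOR of 1.5 is my own assumption for a typical clear lacquer, and I have not confirmed that this is the formula or the value Cycles uses internally. The function name is just for illustration.

```python
import math


def schlick_reflectance(cos_theta: float, ior: float = 1.5) -> float:
    """Schlick's approximation of Fresnel reflectance for a dielectric in air.

    cos_theta is the cosine of the angle between the view direction and the
    surface normal (1.0 = looking straight on, 0.0 = grazing).
    The default ior of 1.5 is an assumed value, not taken from Cycles.
    """
    # Reflectance at normal incidence, derived from the index of refraction.
    f0 = ((ior - 1.0) / (ior + 1.0)) ** 2
    # Reflectance rises toward 1.0 as the view angle approaches grazing.
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


# Print the reflectance at a few view angles, from straight on to near grazing.
for angle_deg in (0, 45, 75, 89):
    cos_theta = math.cos(math.radians(angle_deg))
    print(f"{angle_deg:2d} deg: {schlick_reflectance(cos_theta):.3f}")
```

With an IOR of 1.5 this gives about 4% reflectance straight on and climbs toward 100% at grazing angles, which is the kind of falloff I would expect to be able to compare against both renders.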

Like I said, I would really appreciate it if someone who understands the real-world math and how Blender handles this could explain a little bit about what Blender is doing, and whether there is a way to simulate this with less guesswork. I am a big fan of using nodes to sort of hack the system a bit, so I am eager to understand this better.

I wanna mess with stuff and find out how impractical it is later, and then have the tool when I need it. Not like it's costing me anything extra financially… already paid for Maya lol
