What about when you just want the fastest configuration that can still work on air cooling and does not require a 1 kilowatt power supply? Can that still be a possibility (since things like fluids and animations require ridiculous levels of computing power to not take days) or do we need to set limits because “Gaia demands it”?

That’s why I say 10 years.

Not sure if someone already posted this; even in the past day there have been a lot of posts. But someone is benchmarking the M1 Max against a G15 with a 3070 in Blender.

It’s beating or matching it in most tasks, even with the Mac running stuff in the background, which he shows. He admits he doesn’t know Blender well, but he gets the point across.

Having owned an M1, I’m not surprised that the Max is beating the 3070 in playback, even though it’s pushing a true 4K worth of screen pixels versus a 1080p PC screen.

Blender doesn’t even have GPU rendering enabled yet! And does he know there is an ARM version?

Haha! After a couple of years I still forget: they are called Control, Option and Command, and each also has a symbol character (⌃, ⌥, ⌘) that I have sometimes seen pasted.

Long stretch incoming:

But yes, if I can chime in with my experience: I was a 90% Windows user (Boot Camp) and 10% macOS (mostly email and media consumption, because it’s just nicer in my experience) until about 2 years ago. Thanks to working from home, I slipped under the radar of my employer, who required Windows; they didn’t have a clue I was on a Mac until they realized I was making iOS builds without hoops and tricks.

It took me about a month to be fully comfortable with the keyboard, shortcuts, etc. CMD+C and CMD+V are second nature now, at least until I connect to someone’s Windows machine over Remote Desktop (RDP works 100% on Mac), and then I’m Ctrl+C/V’ing for a few seconds afterwards.

My biggest pet peeve is window management. Overall it’s good and smooth, but there’s something off about which window ends up in front of or behind a pile of windows… To cope, I have learned to embrace the trackpad: I use a mouse with my right hand and keep a trackpad to the left of my keyboard for all the gestures (a laptop has one built in already). It improves navigation: two-finger scrolling is ultra smooth (compared to a mouse scroll wheel), a three-finger sideways swipe moves between “desktops” (basically screens with already opened apps grouped in them), a three-finger swipe up shows all apps grouped (this is called Mission Control), and a three-finger swipe down puts all windows of the currently focused app next to each other.

If you make the jump, I hope it goes as smoothly as possible.

In case anybody is curious why I was on both Windows and Mac before: for many years I tried many laptops, which tended to last about 3 years at most, would get into a weird state after a random Windows update (like WiFi no longer working), had appalling warranties, etc… With Macs, I have a 2011 model that I gave to a sister of mine and that is still going, a mid-2014 15” MacBook Pro still going strong, a 2013 iMac (it works, but I don’t know what to do with it now), and my current main machine, a 2020 iMac, which I bought once I was convinced that I really wanted to go full Mac and bite the bullet (I open Parallels maybe once a month when needed).

The few times things have gone awry it’s never questioned, ‘here’s your replacement, new computer, new pair, new remote, new anything’.

And to be honest, it’s ultimately nice. Everything really (mostly) works: AirDrop, Bluetooth headphones, camera, microphone. Take a screenshot on the Mac and, if an iPad Pro is close by, the image appears there ready for annotation with the Pencil, etc… many conveniences. Spotlight is a big one for me.

I’m a fanboy by now, so take this with a grain of salt too.

Just saw this, the article at hand: https://www.anandtech.com/show/17024/apple-m1-max-performance-review/5

In none of the CPU benchmarks does the Intel beat either the M1’s 8+2 CPU or, for that matter, the top-line AMDs. Not only that, in some benchmarks the M1 Max scores close to three times the score of an Intel 11980HK (65W) and almost double that of a desktop 11900K; it is also just shy of an AMD Ryzen 5950X… I already forget what the TDP of the M1 was. 30W, was it?

EDIT to add a quote: “The M1 Max even manages to outperform the 16-core 5950X – a chip whose package power is at 142W, with rest of system even quite above that. It’s an absolutely absurd comparison and a situation we haven’t seen the likes of.”

Thing is, I used to do this before, thinking that I was saving money while dearly sinking time somewhere else… You can choose your webcam, and your microphone, and your WiFi card/dongle and Bluetooth card/dongle. Then you start hunting for the right drivers after Windows installs a default one, then hope they don’t take each other’s channel or create any conflict, then battle over why the nice new pair of headphones doesn’t want to connect over Bluetooth.

Then you watch the machine halt at a blue screen because the crucial memory is not the best one for the motherboard/CPU combination… and then the best CPU for that memory needs a different CPU socket.

I’m done with this. I used to enjoy it, used to think it was fun… now I just want to connect my single power cable and have all of that working from minute zero.

Not gonna deny though that choice is always good.

How many PC users seriously modify their PC?

I think mainly PC gamers and, well, us CG folks do that.

But are we a big part of the global PC user base?

In the last one he is using CPU only; if he used OptiX, the PC would smoke the Mac. The one that couldn’t be loaded, though, that’s interesting. I think it ran out of VRAM?

True, I’m just impressed by how well it’s holding up in the Eevee tests. I figured something was up with the Cycles render at the end. Maybe he should upgrade that card to a 64GB VRAM version.

Quite a nice appetizer… you can hear the fans go ‘WHIIIGFFFGGF’ on the G15 laptop, even with the audio noise cancellation he says he applied.

Interesting that benchmarks in general don’t really take into account the setup and the sort of preflight that occurs before rendering. The M1 does some ‘kernel thing’ (to be honest I don’t know what this is myself; I remember some talk about ‘shader compilation’ happening, is that related?) a whole lot faster and in general starts the rendering process considerably sooner. To join the crowd: I’m curious what the ARM and Metal development going on behind the scenes is going to bring, and when.

There are a lot of reviewers doing Blender benchmark tests without understanding what they’re doing, which I think is muddying the waters. (Although I’m glad Blender is at least in the conversation!)

The Cycles render test in the posted video is running on the CPU on the Mac versus the GPU on the Windows laptop. It’s not that the Mac is compiling kernels faster; that is a GPU rendering thing. So it’s a bit of an apples-to-oranges test until we get Metal support in Cycles.

I also see this happening with the BMW tests, where reviewers state that GPU rendering is slower than CPU, not realizing that running the GPU version of the benchmark on a currently unsupported GPU will just run it on the CPU, but with GPU-optimized tile sizes, hence the time differences.
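To make the CPU fallback point concrete, here is a minimal Python sketch of how one could reason about where a Cycles render actually runs. The helper name `effective_cycles_device` is hypothetical, and the rule is a deliberate simplification: it assumes that a GPU request with no GPU backend selected means a CPU render, and it ignores whether individual devices are enabled. The two inputs correspond to Blender's `scene.cycles.device` setting and the Cycles add-on preference `compute_device_type`.

```python
def effective_cycles_device(scene_device: str, compute_device_type: str) -> str:
    """Guess where Cycles will render (simplified, hypothetical helper).

    scene_device: value of scene.cycles.device, "CPU" or "GPU".
    compute_device_type: value of the Cycles add-on preference,
        "NONE" when no GPU backend is selected.
    """
    # Assumption: a GPU request without a configured backend falls back to the CPU.
    if scene_device == "GPU" and compute_device_type != "NONE":
        return "GPU"
    return "CPU"


# Inside Blender's Python console the inputs could be read like this:
#   import bpy
#   prefs = bpy.context.preferences.addons["cycles"].preferences
#   print(effective_cycles_device(bpy.context.scene.cycles.device,
#                                 prefs.compute_device_type))
```

Checking this before timing a "GPU" benchmark would show whether the numbers are really CPU numbers in disguise.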

I’m as eager as everyone else to see how these machines perform, but some of this stuff highlights how much of the early coverage is people rushing out content for YouTube views. I mean, beyond a certain point it’s also kind of silly how every reviewer notifies you that yes, they got the same Geekbench score that is already being reported by everyone else.

Actually, in Eevee the M1 Mac does get the shader compilation done faster, noticeably faster than my Mac Pro running Windows.

No, I mean the 3070 ran out of VRAM. The M1 Pro can use almost double the VRAM, so it’s probably hard to run out of VRAM on Macs, even with 16 GB?

Hi, I got mail from Nvidia about a keynote on November 9, to announce the new GTX 4000 series. The Intel i9 12 series is faster than the M1 Max on Geekbench…

It seems we are in for interesting times once users can start testing Blender with Metal on CPU+GPU, to compare the real power of the M1 against Intel/Nvidia CPU + RTX GPU mobile systems.

Cheers, mib

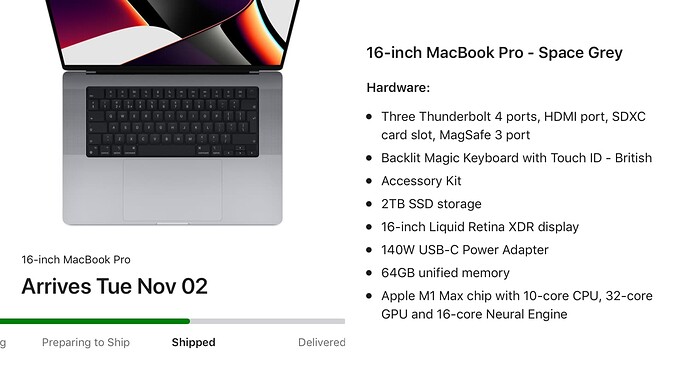

Arriving next Tues.

Happy to run some tests; let me know what you would like to see.

Very excited to have a new Mac. My last (and current) Mac is a 2012 MBP, so the difference with the M1 will be dramatic.

Edit: If you have any test files for specific cases, feel free to send them over, will add them to the list I am drawing up. I’ve set up a folder in Dropbox for you to upload stuff.

https://www.dropbox.com/request/jv8c7KutLrD7Ozvs3AL8

Could you look into viewport performance with a heavily instanced scene?

I just got off a forest render and the viewport performance is quite painful. I can provide the test file.

Of course. Feel free to send over the test file.

Lucky you! I ordered nearly the same thing, but mine will arrive around 16-23 Nov.

I had almost forgotten about the notch shit, and suddenly:

https://twitter.com/SnazzyQ/status/1453143798251339778

Apple and developers/designers have an urgent job. I hope it will not look like this:

This is a useless video and it really annoys me.

- He’s comparing only CPUs, yet the title says Max vs 3070.

- The playback in the second and third tests was better on the G15.

- He renders different frames to compare.

While there is something to be said about kernel loading times, that’s about it. I expect a lot from the Max, but there’s simply no point in doing comparisons like that until we get a Metal build. This is why we’re called fanboys or sheep. Let’s stay objective.

I am jealous, maaan! The only thing I would like to know is how hot it gets when rendering with Cycles. Thanks!

Are you using Apple Motion?