I cannot reproduce it.

Can you tell me the exact steps to recreate the problem? What are the shapekeys of the shirt object? Does it happen only for the shirt? What version of Blender are you using? What happens if you delete the template model before the fitting?

I manually painted the weights.

Thank you.

Thanks volfin. I have not tried it yet. I am still struggling with that “other” software that Manuel wrote years ago, for no particular reason other than that I am just more familiar with it.

Yeah, I tried that. I’m not sure how uncanny it looks, though.

The Manuel Bastioni Lab to Rigify conversion has already been done; the add-on specifically says it is made for the Manuel Bastioni Lab add-on.

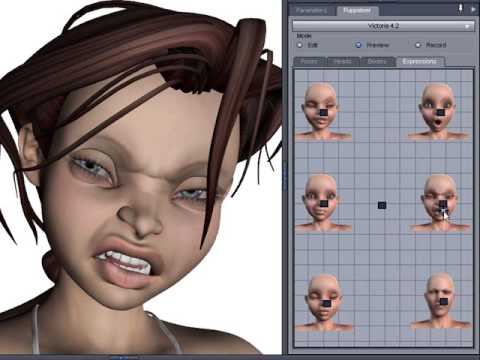

Of course it doesn’t work with the face expressions, so it has a button to simply erase them. Anyway, it would be useful to have some bone control for the mouth, to make automatic voice-to-speech animation work.

Focusing on more realism is always rewarding, but it can easily become too obsessive :D. I think that focusing on better character animation controls is less eye-catching but necessary, because Blender is good at general object animation and control, but for character control it is still far behind other software such as DAZ. For example, this control add-on.

UPDATE: I posted an article about this, with more pros and cons: http://www.manuelbastioni.com/wip_170002_rigging_vs_shapekeys.php

I’m thinking about it, but it’s not easy to decide what’s the best method.

SHAPEKEYS ONLY

PROS:

- Shapekeys can reproduce fine details like the main wrinkles and muscle behaviour. Shapekeys can also create the extreme expressions of anime and toons.

- A few shapekeys (fewer than 50) can be combined to create most existing expressions and visemes.

- Shapekeys are easy to export
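To make the "few shapekeys can be combined" point concrete, here is a minimal sketch of how relative shapekeys are usually mixed: each key contributes its offset from the basis, scaled by its slider value. The vertex data and key names (`mouth_smile`, `jaw_open`) are hypothetical placeholders, not the lab's actual shapekeys.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

def blend_shapekeys(basis: List[Vec3],
                    keys: Dict[str, List[Vec3]],
                    weights: Dict[str, float]) -> List[Vec3]:
    """Relative shapekey mix: result = basis + sum(w_i * (key_i - basis))."""
    result = []
    for i, base in enumerate(basis):
        x, y, z = base
        for name, w in weights.items():
            kx, ky, kz = keys[name][i]
            # Each key adds only its *offset* from the basis, scaled by its weight.
            x += w * (kx - base[0])
            y += w * (ky - base[1])
            z += w * (kz - base[2])
        result.append((x, y, z))
    return result

# Hypothetical two-vertex "mesh" with two keys that both move vertex 0.
basis = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
keys = {
    "mouth_smile": [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    "jaw_open":    [(0.0, 0.0, -1.0), (1.0, 0.0, 0.0)],
}
mixed = blend_shapekeys(basis, keys, {"mouth_smile": 0.5, "jaw_open": 1.0})
```

Because the offsets simply add up, a small library of keys can be mixed into many expressions, which is also why the result is purely linear in the slider values.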

CONS:

- Shapekeys require a lot of modelling work

- Shapekeys need an algorithm to fit them from the base character to the specific character

- Shapekeys are linear, so the bending of some elements (for example the tongue) is often wrong when applied with values < 1.0
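A small 2D sketch of why linear shapekeys bend badly at partial values, assuming a tongue-tip vertex at distance 1 from a pivot and a full shapekey that bends it by 90°. At 0.5 the shapekey moves the vertex along the straight chord, so the tip gets closer to the pivot; a bone rotated by 45° keeps the length. This is a geometric illustration only, not how the lab computes anything.

```python
import math

def lerp_point(a, b, t):
    """What a shapekey does: straight-line interpolation between rest and target."""
    return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))

def rotate_point(p, angle):
    """What a bone does: rotation around the pivot (here the origin)."""
    c, s = math.cos(angle), math.sin(angle)
    return (p[0] * c - p[1] * s, p[0] * s + p[1] * c)

rest = (1.0, 0.0)                        # tip at distance 1 from the pivot
bent = rotate_point(rest, math.pi / 2)   # full shapekey target: tip bent 90 degrees

half_shapekey = lerp_point(rest, bent, 0.5)
half_bone = rotate_point(rest, math.pi / 4)

print(math.hypot(*half_shapekey))  # about 0.707: the tip shrinks toward the pivot
print(math.hypot(*half_bone))      # about 1.0: rotation preserves the length
```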

FACE-BONES (without corrective shapekeys)

PROS:

- Doesn’t require dedicated modelling

- Rotations don’t need to be scaled, so an expression based on rotated bones should fit a specific character better than shapekeys do

- Expressions can be exported and mixed as poses

CONS:

- A realistic face expression requires a high number of bones.

- To create a user-friendly realtime interface, a high number of bones requires a very complex driver-based system

- A very complex driver-based system is practically not exportable out of Blender

- Coding a very complex driver-based system is prone to problems that would take too long to describe here.

FACE-BONES (with corrective shapekeys)

It can reach the same level of realism as a pure shapekey system, but it has the sum of all the cons of shapekeys and bones, plus some new cons caused by the complexity of the implementation.

CONSIDERATIONS

Shapekeys are easier to code and maintain, and easier to port to external engines, but they require a lot of modelling work and sometimes the results are not perfect.

Probably a system based on facial bones is better, but to avoid the cons listed above, the lab should provide only the rigging, without the controllers. The user can then choose their preferred method to animate the bones (for example, linking them to a motion capture system).

The development of a hybrid system is excluded due to the large number of cons.

If I decide to switch to facial bones, the current utility based on shapekeys will not be supported in future versions.

The lab is a tool to parametrically create characters ready to be animated.

It’s not an animation tool.

The goal is to provide an excellent character with professional topology and with a solid and standard rigging, plus some specific utilities for easy proxy fitting and animation retargeting.

So I will stay focused on the very specialised field of character creation, while the improvement of general character control is a matter for the Blender Foundation. It would not be a good strategy to spend a lot of time implementing in Python something that sooner or later will be perfectly developed by the official developers, with C-based optimizations.

Manuel, have you considered using bendy bones on the face rig, like this easy rigging method https://vimeo.com/190801903? With a tension map, it could do wonders!

The bendy bones are already used for the basic muscle system, but I have not yet received clear feedback about the quality of models exported to external engines.

To better evaluate their use in the lab, it would be useful to know their behaviour in the most common external game engines (I can’t test them):

- Are bendy bones supported in external game engines?

- Are bendy bones exported from Blender to external game engines?

- If exported, are they converted into single big bones, many little bones, or something else?

- If they are converted into many little bones, are the weights automatically split?

This feedback would be very useful.

Tension maps are amazing. Does anyone have information about the possibility of their inclusion in official Blender?

I don’t believe bendy bones are supported by most (or any) external engines. Nor are they supported by the FBX exporter.

The only workaround I can think of to support external engines is somehow converting the bendy bones to regular bones or morph targets / blendshapes with a bone driver based on angle and/or twist.
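The morph-target workaround can be sketched as a small function that turns the angle between a bone's rest direction and its posed direction into a blendshape weight, so an engine without bendy bones only needs a regular bone plus one corrective shape. The start and full-on angles are hypothetical tuning parameters; this is an illustration of the idea, not an exporter feature.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in radians between two bone direction vectors (atan2 form, numerically robust)."""
    cross = (a[1] * b[2] - a[2] * b[1],
             a[2] * b[0] - a[0] * b[2],
             a[0] * b[1] - a[1] * b[0])
    dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
    return math.atan2(math.sqrt(sum(c * c for c in cross)), dot)

def corrective_weight(angle: float, start: float, full: float) -> float:
    """Linear ramp: 0 below `start`, 1 at or beyond `full`, clamped to [0, 1]."""
    if full == start:
        raise ValueError("start and full angles must differ")
    t = (angle - start) / (full - start)
    return min(1.0, max(0.0, t))

# Hypothetical bone bent from pointing along +Y to pointing along +Z (90 degrees).
bend = angle_between((0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
weight = corrective_weight(bend, start=0.0, full=math.pi / 2)
```

In an engine the same ramp would be evaluated per frame from the exported bone rotation; a twist-based variant would measure rotation around the bone axis instead.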

Perhaps the bendy bones are creating confusion in exported characters. I’m evaluating whether to continue supporting them in 1.7.x. I’m waiting for more feedback.

Are the drivers correctly exported and handled in external engines? I don’t think so.

They aren’t supported in Unreal. Where can we vote for FACE-BONES (with corrective shapekeys)?

I think the best solution is facial bones without controllers. It’s not viable to keep remodelling the characters all the time. There are lots of character creators out there, but Manuel Bastioni Lab stands out due to the realism of its characters. Character creation and animation is always going to be very complex and time-consuming. Part of what motivates me is knowing that the tools I’m using will allow me to achieve the best possible results.

I was planning to introduce my project when it’s out of pre-alpha, but since you’re considering switching to bones, my project is kinda in danger :')

My framework, https://surafusoft.eu/facsvatar/, first extracts the facial expressions of the user in FACS format. Then, using a Python module, I convert these FACS values to Shape Keys matching your characters. This data is then forwarded to Unity 3D, where I imported your MB character. Unity 3D then uses these values to animate the face of the character.
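As an illustration of the FACS-to-Shape-Key step, here is a minimal sketch assuming a hand-written table in which each Action Unit drives one or more shapekeys with a gain. The AU numbers and their names are standard FACS; the shapekey names and gains are hypothetical placeholders, and this is not FACSvatar's actual code.

```python
from typing import Dict

# Hypothetical table: FACS Action Unit -> {shapekey name: gain}.
AU_TO_SHAPEKEYS: Dict[str, Dict[str, float]] = {
    "AU01": {"brow_inner_up": 1.0},                         # Inner Brow Raiser
    "AU12": {"mouth_smile_L": 1.0, "mouth_smile_R": 1.0},   # Lip Corner Puller
    "AU26": {"jaw_open": 0.6},                              # Jaw Drop
}

def facs_to_shapekeys(au_intensities: Dict[str, float]) -> Dict[str, float]:
    """Convert AU intensities (0..1) into shapekey weights, summing overlapping contributions."""
    weights: Dict[str, float] = {}
    for au, intensity in au_intensities.items():
        for key, gain in AU_TO_SHAPEKEYS.get(au, {}).items():  # unknown AUs are ignored
            weights[key] = weights.get(key, 0.0) + gain * intensity
    # Clamp to the usual 0..1 shapekey slider range.
    return {k: min(1.0, max(0.0, v)) for k, v in weights.items()}

frame = facs_to_shapekeys({"AU12": 0.5, "AU26": 1.0})
```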

A demo video can be seen on the linked website.

I would vote for Shape Keys for two reasons. The first one, from a game perspective, is that if animations are exported as poses, they might conflict with poses created by other developers. E.g. a character walks (which is not fixed) and you want to animate the facial expressions independently.

Secondly, I think what makes this add-on stand out from the crowd is the quality of the characters. If the quality of facial expressions goes down, my project, which I want to use for Human-Agent Interaction, would decrease in quality. Quality is also important for psychological research, where the quality of avatar expressions used as stimuli has to be as high as possible.

I think your characters have a lot of use cases outside Blender, so it would be sad to see them restricted to one program.

A final option that could yield some interesting results is a mixed system in which most of the mixing happens on the back end.

What I mean is:

To reduce the complexity of the conversion between different characters, you could potentially take the route of Disney’s physics-based facial animation structure by modeling and rigging facial muscles on the back-end, and later bake muscle actions into shapekeys.

I don’t know; that first part might be even harder to pull off than pure shapekeys.

The part that I’m pretty sure would be beneficial is this:

In the end, the only parts of the face left controlled directly by bones would be the tongue, major jaw movements, and maybe the eyelids and eyeballs, as these often produce errors in a pure shapekey setup.

I vote for shapekeys. I agree that in a game environment it would be nice to keep facial animation and movement animation separate.

This can easily be avoided by exporting the face animation separately from the body animation.

Also, I’m evaluating the feasibility of a utility to bake the expressions as shapekeys.
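Conceptually, baking an expression as a shapekey means evaluating the skinned mesh for one bone pose and storing the resulting vertex positions as a new key. The sketch below does that in 2D with linear blend skinning, assuming each bone is described by a hypothetical `(pivot, angle)` pair and that vertex weights are normalized per vertex. It is not Blender's API and not the lab's planned utility.

```python
import math
from typing import Dict, List, Tuple

Vec2 = Tuple[float, float]

def rotate_about(p: Vec2, pivot: Vec2, angle: float) -> Vec2:
    """Rotate point p around pivot by angle (radians)."""
    dx, dy = p[0] - pivot[0], p[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + dx * c - dy * s, pivot[1] + dx * s + dy * c)

def bake_pose_to_shapekey(rest: List[Vec2],
                          weights: List[Dict[str, float]],
                          pose: Dict[str, Tuple[Vec2, float]]) -> List[Vec2]:
    """Linear blend skinning: each vertex is the weighted average of its bones' transforms.

    The returned positions are what a baked shapekey would store for this pose.
    """
    baked: List[Vec2] = []
    for v, vw in zip(rest, weights):
        total = sum(vw.values())
        if total == 0.0:
            baked.append(v)  # unweighted vertices stay at rest
            continue
        x = y = 0.0
        for bone, w in vw.items():
            pivot, angle = pose[bone]
            px, py = rotate_about(v, pivot, angle)
            x += (w / total) * px  # normalize weights so they sum to 1
            y += (w / total) * py
        baked.append((x, y))
    return baked

# Hypothetical "jaw" bone rotated 90 degrees; vertex 1 has no weights.
key = bake_pose_to_shapekey([(1.0, 0.0), (2.0, 0.0)],
                            [{"jaw": 1.0}, {}],
                            {"jaw": ((0.0, 0.0), math.pi / 2)})
```

Once baked, the pose plays back as an ordinary shapekey, so it exports like any other morph target even if the bones and drivers that created it do not.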

Don’t worry about quality. My intention is always to increase the quality of models and animation, never decrease. Using the rigging instead of shapekeys should increase the quality of expressions, in particular for the characters that are very different from the base one and in general for all movements that require rotations.

Anyway, high-quality face expressions require a high number of bones. Since Blender drivers (and perhaps Blender constraints) are not exportable to external engines, what can decrease is the user experience: to create a new expression, the user has to manually pose a lot of bones.

I’m even tempted to create two alternative systems, one motion-capture oriented with a lot of bones (more than 100) and one simplified for manual posing. However, I have some doubts about the former. I quote one of my replies from another discussion: “Each motion capture system uses a customized set of feature points, which can vary greatly in number (from tens to hundreds) and placement. So it’s almost impossible to design a face armature that is “standard” for motion capture systems.

As far as I know, the only way to design something “oriented” to face motion capture is using a high number of bones, but I have no idea about the amount of work required to link existing bones to a motion capture system: I’m afraid that in most cases users will prefer to delete the existing face rigging and replace it directly with their custom scheme. This would be the worst case, since the face rigging would be useless for people with motion capture tools, while people without this technology would have to manually pose a very high number of bones.

It would be interesting to have more feedback about this, in particular from people who regularly use motion capture for their projects.” I added the bold here.

Perhaps the solution is the utility to bake the expressions as shapekeys.

Nice idea, but it is not feasible with the current resources…

In the FB discussion, most users are asking for a rigging-based system, because they feel it is more flexible and usable.

I think it appears more user-friendly because it’s easy to visually grab a bone and create any type of expression, even anatomically wrong ones: it’s a powerful expression editor. With shapekeys, on the contrary, the user is limited to the existing library, and to add new elements they must sculpt the model manually.

It’s hard to decide, and even the direction of technology for the future of facial animation is not clear. Certainly for scanned characters the shapekeys will win, but for fully synthetic characters parametric animation still seems valid. I think an expression system based on pure rigging is more consistent, for two reasons:

- The expression can be entirely described in one format (for example BVH) and exported everywhere

- The expression is handled completely by a single system. With shapekeys, on the contrary, it would be necessary to use rigging for some things (eyes, tongue and jaw) and shapekeys for others.

Thank you for your extensive reply. It seems my worries were mostly due to my inexperience as an animator (I’m coming from a programming background). If baking expressions as Shape Keys is possible, it seems that the final result for people just exporting your characters won’t change notably, and it would be great if users got more freedom in creating their own expressions.

Good to hear this.

Would it work if, instead of directly mapping every motion capture system to face animation, you used some intermediate data format? For example, you could use FACS (Facial Action Coding System), which is a universal standard for describing facial expressions. Then people using motion capture only have to make their motion capture compatible with this intermediate representation. You would only have to implement the conversion from FACS to your facial bones once.

Also, if you ever change the number of facial bones (or use a fantasy character with a different number of bones), only this conversion from FACS to the bones has to change, without having to worry that every motion capture conversion you’ve painfully added one by one suddenly has to be redone.
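The proposed intermediate layer can be sketched as two independent functions composed per frame: a capture adapter (raw tracker data to FACS) and a character mapping (FACS to rig controls). Everything here is hypothetical: the tracker field names, the bone channel names and the degree values are placeholders chosen only to show the structure.

```python
from typing import Callable, Dict

FacsFrame = Dict[str, float]  # FACS Action Unit -> intensity in [0, 1]
RigFrame = Dict[str, float]   # character control (bone channel or shapekey) -> value

def tracker_a_adapter(raw: Dict[str, float]) -> FacsFrame:
    # Hypothetical capture system reporting a "smile" and a "mouth_open" score.
    return {"AU12": raw.get("smile", 0.0), "AU26": raw.get("mouth_open", 0.0)}

def human_mapping(facs: FacsFrame) -> RigFrame:
    # Hypothetical human rig: AU12 rotates the lip-corner bones, AU26 the jaw (degrees).
    return {
        "lip_corner_L.rot_z": 15.0 * facs.get("AU12", 0.0),
        "lip_corner_R.rot_z": -15.0 * facs.get("AU12", 0.0),
        "jaw.rot_x": 20.0 * facs.get("AU26", 0.0),
    }

def creature_mapping(facs: FacsFrame) -> RigFrame:
    # Hypothetical fantasy rig with different bones: only the jaw and a snout.
    return {"jaw.rot_x": 35.0 * facs.get("AU26", 0.0),
            "snout.rot_x": 10.0 * facs.get("AU12", 0.0)}

def retarget(adapter: Callable[[Dict[str, float]], FacsFrame],
             mapping: Callable[[FacsFrame], RigFrame],
             raw: Dict[str, float]) -> RigFrame:
    """Capture data -> FACS -> character controls."""
    return mapping(adapter(raw))

raw_frame = {"smile": 0.5, "mouth_open": 1.0}
human_pose = retarget(tracker_a_adapter, human_mapping, raw_frame)
creature_pose = retarget(tracker_a_adapter, creature_mapping, raw_frame)
```

Swapping the character only swaps the mapping; the adapter is reused unchanged. With N capture systems and M characters, this means N adapters plus M mappings instead of N × M direct converters.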

I have to code it in order to be sure, but yes, it should be feasible.

When people decide to use a motion capture system for the face, they want maximum realism in the shortest time. Maximum realism = capture even micro-expressions and uncommon muscle movements, even “defects” of the actor. Shortest time = just link the raw data to the armature and see how it works, in realtime.

There are two approaches:

1. They scan an actor and create hundreds of expressions directly from the real face. Then they mix these shapekeys in realtime using motion capture.

2. They identify a lot of feature points (even hundreds!) on the face and transfer their motion to the corresponding bones, with a 1:1 ratio.
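Approach (2) can be sketched as moving each bone by the displacement of its matching marker relative to the actor's neutral frame. This assumes markers and bones share the same (hypothetical) names, uses a single `scale` factor to compensate for differences between the actor's and the character's face size, and ignores head motion and marker noise, which real pipelines have to remove first.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

def transfer_markers(marker_rest: Dict[str, Vec3],
                     marker_frame: Dict[str, Vec3],
                     bone_rest: Dict[str, Vec3],
                     scale: float = 1.0) -> Dict[str, Vec3]:
    """1:1 transfer: bone position = bone rest + scale * (marker now - marker at neutral)."""
    posed: Dict[str, Vec3] = {}
    for name, rest in bone_rest.items():
        m0, m1 = marker_rest[name], marker_frame[name]
        posed[name] = tuple(b + scale * (c - a) for b, a, c in zip(rest, m0, m1))
    return posed

# Hypothetical single marker/bone pair.
pose = transfer_markers({"lip_corner_L": (0.0, 0.0, 0.0)},
                        {"lip_corner_L": (0.25, -0.5, 0.0)},
                        {"lip_corner_L": (1.0, 2.0, 3.0)},
                        scale=2.0)
```

With hundreds of markers this loop is unchanged, which is what makes the 1:1 approach attractive: no filtering through a limited set of intermediate parameters.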

An interesting effect of (1) is that after someone acquires a full database with thousands of expressions of an actor, in the future it will be possible to create entire movies with realistic and believable actors using only virtual clones… it will be difficult to see the difference. A deceased actor will be able to play the main role in new movies.

Anyway, back to the topic: when people use motion capture, they usually want to capture everything. If they are forced to filter the data using a limited set of discrete elements, they will lose the realism of the animation. This technique can be advantageous only in the case of very simple capture systems limited to a few points; but if someone has fantastic data with 200-300 points per expression, why lose these details?

Also the creation of the intermediate layer should be done with the motion capture software and I have many doubts about the fact that the main manufacturers will comply with this.

I think your experience with FACS data is that of FACS raters. They usually only rate, in a binary way, whether a certain AU (Action Unit) is present in the face or not, or, somewhat better, on a 5-point scale (but then often not for all AUs). However, technically, if you model all AUs and accept, say, a continuous value between 0 and 1, you should be able to create any facial expression by combining AUs, including micro-expressions, because FACS describes the face in terms of muscle contraction/relaxation and there is only a limited set of muscle groups in the face.

E.g. micro-expression: AU09, Nose Wrinkler (muscular basis: Levator Labii Superioris Alaeque Nasi).

https://web.cs.wpi.edu/~matt/courses/cs563/talks/face_anim/ekman.html

Maybe, if FACS doesn’t describe the muscles in enough detail (since it’s about muscle group movements that are visible in the face, and not single muscles), there is another representation of the muscles in the human face?

Using an intermediate representation would make it easier to use the same motion capture data with anatomically different characters (anime / fantasy).

Some maybe useful lecture slides I found online: http://www.cs.cmu.edu/~yaser/Lecture-8-Face%20Representation.pdf

It should be possible to convert the output data to an intermediate layer by capturing the data the software outputs, but I can imagine the technical challenges of this, so I agree that this might not be ideal.