What do you mean by “background mode”?

Yes. I realize that a separation between chest and stomach is particularly problematic from an anatomical perspective.

I have a revised proposal for loops separating interchangeable areas (head, upper body, lower body, arm, leg).

Head (without neck):

Loop above thyroid, posterior approximately cervical vertebra C2.

Waist (splitting upper and lower torso):

Loop bisecting the exact center of the umbilicus, posterior approximately lumbar vertebra L3.

Arm (without shoulder):

Loop around arm about upper third of biceps.

Leg (without glute):

Loop around leg about upper third of biceps femoris.

If the loops could be compatible across all the variants (male, female, obese, emaciated, muscular) except for a few vertices missing or added, then it would still be highly useful, provided that the loops are oriented and located similarly across all the variants. The loops could of course also contain poles, provided those particular poles exist in all the topologies.

Again I am not expecting this to be possible. But it would be really cool if it was. :eyebrowlift2:

standardizing the meshes is the exact opposite of the goal of making many separate meshes. as such, it would be a waste of time and effort.

im guessing the lab is designed to give artists a great starting point for creating characters. i think this is wise, as different needs have different workflows. being all inclusive is to be partially exclusive.

it would be better to make one “Generic” mesh which would have a much greater range of morphs at the cost of efficiency. i think that would be much more useful to a wider audience than trying to standardize the meshes.

Background mode is running Blender without the GUI; just execute blender with the “-b” option on the command line.

Eg. “blender.exe -b --python myscript.py”

It executes the Python script without displaying the GUI, which is helpful when rendering with Cycles.
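For anyone who wants to start such a run from another Python program, here is a minimal sketch. It assumes a `blender` executable is on the PATH; the helper names `background_command` and `run_background` and the file names are placeholders of mine, not part of Blender or the lab.

```python
import subprocess


def background_command(script, blend_file=None, blender="blender"):
    """Build the argument list for a GUI-less Blender run.

    "-b" starts Blender in background mode and "--python" runs the script.
    Blender processes arguments in order, so an optional .blend file is
    placed before the script that should operate on it.
    """
    cmd = [blender, "-b"]
    if blend_file is not None:
        cmd.append(blend_file)
    cmd += ["--python", script]
    return cmd


def run_background(script, blend_file=None, blender="blender"):
    """Launch Blender and raise CalledProcessError if it exits with an error."""
    return subprocess.run(background_command(script, blend_file, blender), check=True)


# Same command as the example above, as an argument list:
print(background_command("myscript.py", blender="blender.exe"))
```

Calling `run_background("myscript.py")` would actually start Blender, so it only works on a machine where Blender is installed.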

Manuel: This might have been asked before. If so, just refer me to the answer. I notice that you do not get skin colors on the figure IN THE 3D VIEWPORT unless you change Blender over to Cycles. Is this intentional? Also, I cannot seem to find the material to turn off the censor parts when Blender Render is active. Was this intentional? I guess my real question is: how do I get a textured view of the figure WITHOUT using Cycles?

the lab does not support blender internal, only cycles. a bit of know how and you can make some nice blender materials. but you need to actually know blender.

how people have not grasped how to remove the censor yet is beyond me. just set the censor material to the skin material.

Used the addon to create a base model, handpainted the textures and added other assets and sculpted and edited the model to get this:

Saved time :- )

Thank you, but I was waiting for a DEFINITIVE answer from Manuel. That is why I specifically addressed my question to him.

Very nice.

Hi, thanks for this great work.

Maybe a BUG report: I notice that the exported mesh (with modifiers) has some self-intersection at the toes. Can you confirm and fix it for me? I really need this. Thank you.

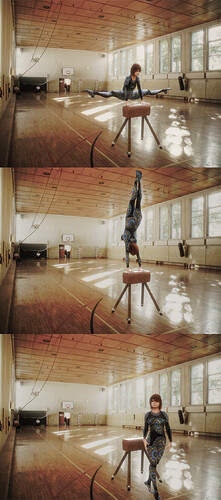

I am using the LAB to create characters to clothe in Marvelous Designer. It works very well and I am able to create any character, export it out of Blender as Collada and import it to MD. The problem is with poses. The import comes in face down in MD. The first image is the stock pose imported perfectly. The second is after posing.

In Blender, I take the initial LAB character and select one of the stock Poses (glamour07). Then I apply the Armature Modifier and the Corrective Modifier. I’ve also been deleting all the Shape Keys (I don’t know if this matters). I use the Pose menu to Apply Pose as Rest Pose. Then I export in Collada and import to MD.

She’s face down every time. Does anyone know where the rotation is happening and how I might fix this?

Thank you for this great work.

Maybe a BUG report though: it seems that the generated mesh (after modifiers) has some self-intersection between the toes. Can you confirm and fix that please?

Thank you.

Thanks Shaba1. :- )

Whoa!! This is pretty cool. Can you make a tutorial on how you set all this up? Looking at your test video, it appears that the character has some jitter in it. Do you have a way of adding smoothing to remove this? Also I would recommend making the expressions a bit more sensitive so the expressions are a bit more exaggerated. Is this add-on utilizing all of the shape-keys from the lab?

I was thinking about getting an iPhone X and trying something like: https://www.youtube.com/watch?v=hw7kPyVmUHQ&t=

But this would mean I could use any device with a web camera.

My goal is to have some sort of poor man’s motion capture studio for animation. So far I am planning on using Kinect. Kinnector is cool but still has some way to go, and I have not been 100% sold on the facial animation in Kinnector.

Thank you.

At the moment the background mode is outside the scope of the lab, and in theory it should not be required to call the fitting during an animation.

I mean that after the first fitting (in lab 1.6.1) the rigging is propagated from the body to the clothes, so the character can be animated without further fitting and it should work in background mode too.

Does it happen only for glamour07? Or for any pose?

I don’t know how the exporter works, but there are a couple of things to try:

- “Apply pose as rest pose” should never be used for a character that is not in rest pose. It will make any animation relative to this initial pose, with wrong results.

- In the Blender exporter I see that there are two ways to export transformations: Matrix or TransRotLoc. You can experiment with both of them.

Cool…!!!

Thank you! It’s still in alpha-phase, so how to use it is still changing a bit. By the end of the month the structure will mostly be fixed and the documentation will be proper as well (I need to run experiments, and another researcher in my lab is going to use my project).

The jittering is due to the use of the raw data OpenFace produces. I’m currently implementing a smoothing function, so expect to see a video soon with smooth facial expressions AND smooth head movements!

My project is aimed to be used for many purposes. That’s why it’s set up in a modular fashion. The network pattern is a Publisher-Subscriber network, so you just listen to the data stream you need. Every module receives data in some format, processes it, and outputs its own data.
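To make the pattern concrete, here is a rough in-process sketch of a publisher-subscriber setup. It is not the project’s actual code or transport; the `Bus` class, the `make_module` helper, and the topic names are all made up for illustration.

```python
from collections import defaultdict


class Bus:
    """Tiny in-process publish/subscribe hub (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback that is called for every message on `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver `message` to every subscriber of `topic`."""
        for callback in list(self._subscribers[topic]):
            callback(message)


def make_module(bus, topic_in, topic_out, process):
    """Wire a module: listen on topic_in, publish process(message) on topic_out."""
    bus.subscribe(topic_in, lambda message: bus.publish(topic_out, process(message)))


bus = Bus()
received = []

# Hypothetical module that doubles every Action Unit value it receives.
make_module(bus, "facs.raw", "facs.processed",
            lambda frame: {au: value * 2 for au, value in frame.items()})

# A consumer only listens to the stream it needs.
bus.subscribe("facs.processed", received.append)
bus.publish("facs.raw", {"AU12": 0.5})
print(received)
```

Swapping the data source then only means replacing whatever publishes on the input topic; the downstream modules do not change.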

The smoothing function does the same. It receives Facial Action Coding System (FACS) data, smooths it, and publishes the smoothed FACS data. Exaggerating the data is as simple as multiplying the FACS vector with a scalar (e.g. just * 1.2) in the smoothing function.
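As a sketch of what such a step could look like: the snippet below uses simple exponential smoothing as a stand-in, since the actual smoothing method is not described here. The function name `smooth_facs`, the parameters `alpha` and `gain`, and the dict-per-frame data format are assumptions for illustration.

```python
def smooth_facs(frames, alpha=0.5, gain=1.0):
    """Exponentially smooth a sequence of AU frames, then scale by `gain`.

    frames: list of dicts mapping AU name -> intensity (assumed format).
    alpha:  weight of the newest frame; lower values smooth more.
    gain:   scalar exaggeration factor, e.g. 1.2.
    """
    smoothed = []
    state = None
    for frame in frames:
        if state is None:
            # The first frame initialises the filter state.
            state = dict(frame)
        else:
            # Blend the new value with the previous smoothed value.
            state = {au: alpha * value + (1 - alpha) * state.get(au, value)
                     for au, value in frame.items()}
        smoothed.append({au: value * gain for au, value in state.items()})
    return smoothed


raw = [{"AU12": 0.0}, {"AU12": 1.0}, {"AU12": 1.0}]
print(smooth_facs(raw, alpha=0.5))            # jump from 0 to 1 is spread over frames
print(smooth_facs(raw, alpha=0.5, gain=1.2))  # same curve, exaggerated by 20%
```

The exaggeration is applied to the output only, so the filter state itself is not affected by the gain.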

Per Action Unit (AU, muscle group), I looked at which shape-keys were relevant. Then I manually engineered a function that transforms the value of an AU to shape-keys of the MB model. If you drag the files found on my GitHub to the MB addon folder where you find the .json files for emotions like ‘Anger’, you can use the AUs as sliders.
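Roughly, such a conversion could look like the sketch below. The mapping table, the shape-key names, and the weights are invented placeholders, not the real MB shape-keys or the hand-tuned values, and AU intensities are assumed to be normalised to the range 0 to 1.

```python
# Hypothetical AU -> shape-key weights; names and numbers are placeholders.
AU_TO_SHAPE_KEYS = {
    "AU12": {"mouth_smile_L": 1.0, "mouth_smile_R": 1.0},
    "AU26": {"jaw_open": 0.8},
}


def au_to_shape_keys(au_values, mapping=AU_TO_SHAPE_KEYS):
    """Convert AU intensities (assumed 0..1) into shape-key values (0..1)."""
    result = {}
    for au, intensity in au_values.items():
        # AUs without an entry in the mapping are ignored.
        for key, weight in mapping.get(au, {}).items():
            value = result.get(key, 0.0) + intensity * weight
            # Several AUs may drive the same key, so clamp the sum.
            result[key] = max(0.0, min(1.0, value))
    return result


print(au_to_shape_keys({"AU12": 0.5, "AU26": 1.0}))
```

Inside Blender, the resulting values would then be written to the matching shape-keys of the character mesh, for example through `key_blocks[name].value`.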

I saw the video too, and I think it’s amazing! OpenFace (so including my project in its current form) won’t give you facial expressions as detailed as you see there. However, it’s not one or the other. If you hook the iPhone up and convert its data to FACS data (or directly to MB blendshapes), you can still use every other part of my framework, including real-time streaming of facial expressions to Unity 3D (in this case the only thing you’re doing is replacing the OpenFace module). The good thing about making the iPhone compatible with my framework, FACSvatar, is also that you can use the same pipeline when using a camera or the iPhone X. Also, when something better than OpenFace comes along, you can just use that as FACS input. If you want to make the iPhone (or other FACS extractor) compatible with my framework, let me know!

My framework won’t require any special hardware, so it fulfills your poor man’s requirement. If you want better quality, however, I would advise AGAINST the Kinect. The Kinect v2’s production was stopped a few months ago, so your project would already start outdated. I would instead recommend using the Intel RealSense Depth camera D415/435, because it seems that this is becoming the next-generation Kinect. Not sure about any facial expression extraction support though.

What is the time frame for your project? I’ll graduate at the end of July, so my project will be fully mature by then, although I think I’ll keep working on it to some degree. I hope the research world for Embodied Conversational Agents (ECA) and Affective Computing picks up my project as well.

Here, I wrote an explanation, with a picture, of how to uncensor an MB model: http://manuelbastionilab.wikia.com/wiki/How_to_remove_censor%3F

Since I don’t come from an animator / modeller background, I was also struggling with this. I started using Blender because of this add-on, so don’t feel bad that you were also struggling with this. To prevent other newcomers from struggling with the same things, I hope that the linked wiki becomes a community source. So if you struggled with something else, and figured it out, please include instructions with pictures on that wikia.
