Hello everyone!

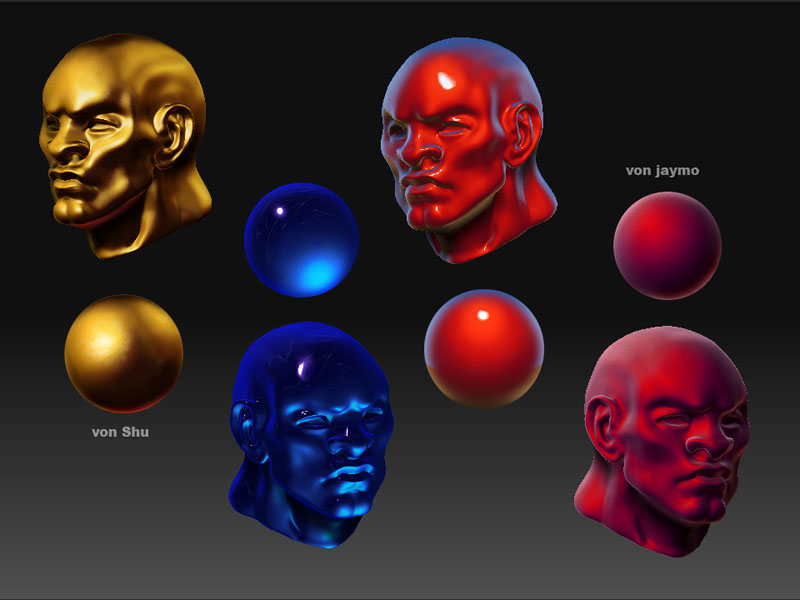

I had an idea for implementing something like ZBrush’s MatCap feature, where a model is shaded by picking an image of a painted or rendered sphere of a certain material. I hope you are roughly familiar with that feature, or at least understand what I mean.

I’m looking for a way to use this technique in Blender. I’ll soon be working on a comic, and it would be awesome to use such a feature for non-photorealistic rendering (NPR).

I think it could be implemented like this:

One could bake normals (probably in camera space, I don’t know) onto a sphere and then render an image of that sphere. This would be the reference. One could then take this image into Photoshop, make a selection from the alpha channel, and paint a sphere of the desired material. Then comes the tricky part: I don’t know how to do it in Blender, and I think some coding would be necessary. To project the sphere’s material onto the final mesh, a script would need several things:

- loading of the reference image with the normals of the sphere

- loading of the reference image with the painted material of the sphere

- access to a usable normal render pass of the target model (this should work without UVs and without baking normals)

- mapping of the sphere’s image data onto the rendered image, using the normals as a reference. I have no clue about coding or optimization, but I think it might work roughly as follows (I’m sure there are better ways, but I can’t code):

1. Build a three-dimensional array indexed by the RGB values of the normals (0 to 255 per R, G and B channel).
2. Get the RGB values of the painted sphere and insert them into the array as full color values. Unless the image is very big, I think there won’t be many pixels in the normal map of a sphere that share the exact same RGB value. When it happens, blending the values would be perfect; for a first try, I’d say “first value wins”.
3. Lots of points in the array will be missing. This is probably the hardest part, because it needs interpolation between values and extrapolation towards the borders of the field. I have absolutely no idea how to do this!
4. Convert the normal pass of the target mesh into the actual NPR material that has been painted on the sample sphere. This should be pretty straightforward: take the first pixel, check its RGB value, look up the corresponding RGB value in the array, replace it, and repeat for every other pixel in the image.
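The four steps can be sketched in plain Python. This is a minimal, hypothetical sketch, not Blender API code: images are plain lists of rows holding 8-bit RGB tuples (None outside the sphere), a dict stands in for the full 256×256×256 array, and all function names are made up for illustration. It also generates the reference normal sphere analytically instead of baking it, assuming an orthographic camera and the common (n + 1) / 2 × 255 normal encoding; Blender’s own normal pass may use a different space and encoding, so that would need checking first.

```python
import math


def encode(c):
    """Map a normal component in [-1, 1] to an 8-bit value in [0, 255]."""
    return int(round((c + 1.0) * 0.5 * 255))


def reference_normal_sphere(size):
    """Reference image: camera-space normals of a unit sphere seen orthographically.

    Assumption: the visible normal at screen position (x, y) is
    (x, y, sqrt(1 - x^2 - y^2)); pixels outside the silhouette are None.
    """
    image = []
    for j in range(size):
        y = 1.0 - (j + 0.5) / size * 2.0  # pixel centre, +Y up
        row = []
        for i in range(size):
            x = (i + 0.5) / size * 2.0 - 1.0
            r2 = x * x + y * y
            if r2 > 1.0:
                row.append(None)
            else:
                row.append((encode(x), encode(y), encode(math.sqrt(1.0 - r2))))
        image.append(row)
    return image


def build_table(normal_img, painted_img):
    """Steps 1-2: map each normal RGB on the sphere to the painted color."""
    table = {}  # sparse stand-in for the 256^3 array
    for n_row, p_row in zip(normal_img, painted_img):
        for normal, color in zip(n_row, p_row):
            if normal is not None and color is not None:
                table.setdefault(normal, color)  # "first value wins"
    return table


def lookup(table, normal):
    """Step 3: exact hit if present, otherwise the nearest stored normal.

    Brute-force nearest neighbour replaces real interpolation/extrapolation;
    it is simple but slow for large tables.
    """
    if normal in table:
        return table[normal]
    nearest = min(table, key=lambda k: sum((a - b) ** 2 for a, b in zip(k, normal)))
    return table[nearest]


def shade(normal_pass, table):
    """Step 4: replace every normal-pass pixel with its looked-up color."""
    return [[None if n is None else lookup(table, n) for n in row] for row in normal_pass]


# Usage: paint the reference sphere solid red, then shade a tiny normal pass.
sphere = reference_normal_sphere(5)
painted = [[None if n is None else (255, 0, 0) for n in row] for row in sphere]
table = build_table(sphere, painted)
print(shade([[(130, 120, 250), None]], table))  # [[(255, 0, 0), None]]
```

Nearest neighbour was chosen only because it is the simplest way to fill the missing entries; it gives blocky transitions between painted colors, and a nicer version would blend the few closest entries instead.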

It might also be cool to include alpha values in the process, so that one can paint alpha values and have them assigned the same way. This could be used to break up the silhouette of a model. But I admit that once the RGB mapping works as described, one could work around the alpha channel using another pass and a second black-and-white image.
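For the alpha idea, a shortcut common in matcap shaders (swapped in here; it is not the lookup-table approach the post proposes) may be simpler: for a camera-space normal facing the viewer, its x and y components alone pick the matching pixel on the painted sphere, so a separate black-and-white alpha sphere can be sampled at exactly the same pixel as the color sphere. This hypothetical sketch assumes both painted spheres are square images (lists of rows) in which the sphere fills the whole frame with +Y up, and that normals use the common 8-bit (n + 1) / 2 × 255 encoding. Because every visible normal lands on some sphere pixel, this largely sidesteps the missing-entries problem, although normals right at the silhouette may hit an unpainted edge pixel.

```python
def decode(c):
    """Inverse of the (n + 1) / 2 * 255 encoding: 8-bit channel -> [-1, 1]."""
    return c / 255.0 * 2.0 - 1.0


def sphere_pixel(normal_rgb, size):
    """Map an encoded camera-space normal to (row, col) on a size x size sphere image.

    Assumption: the sphere fills the image, x points right, y points up,
    and only normals facing the camera (nz >= 0) are passed in, so nz is ignored.
    """
    nx, ny = decode(normal_rgb[0]), decode(normal_rgb[1])
    col = min(size - 1, max(0, int((nx + 1.0) * 0.5 * size)))
    row = min(size - 1, max(0, int((1.0 - ny) * 0.5 * size)))
    return row, col


def shade_rgba(normal_pass, color_sphere, alpha_sphere):
    """Sample color (RGB tuple) and alpha (single value) at the same sphere pixel."""
    size = len(color_sphere)
    out = []
    for row in normal_pass:
        out_row = []
        for n in row:
            if n is None:  # background pixel in the normal pass
                out_row.append(None)
                continue
            r, c = sphere_pixel(n, size)
            out_row.append(color_sphere[r][c] + (alpha_sphere[r][c],))
        out.append(out_row)
    return out
```

Keeping alpha in its own grayscale image matches the workaround described above: the color and alpha spheres can be painted independently, and both are read with one shared normal-to-pixel mapping.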

That should be it. I have no idea whether there already is a way to do this in Blender; if so, please point me to it. If not, I think this could become a very cool render node or material node. Some creative minds will probably come up with other good uses for it.

I hope I’m not bugging you with a feature suggestion. I can imagine that quite a lot of people want their own cool custom feature ;). If I can help by faking an example, or if you haven’t understood my confusing description, let me know :).
