This popped up on YouTube a day or so back. Looks interesting.

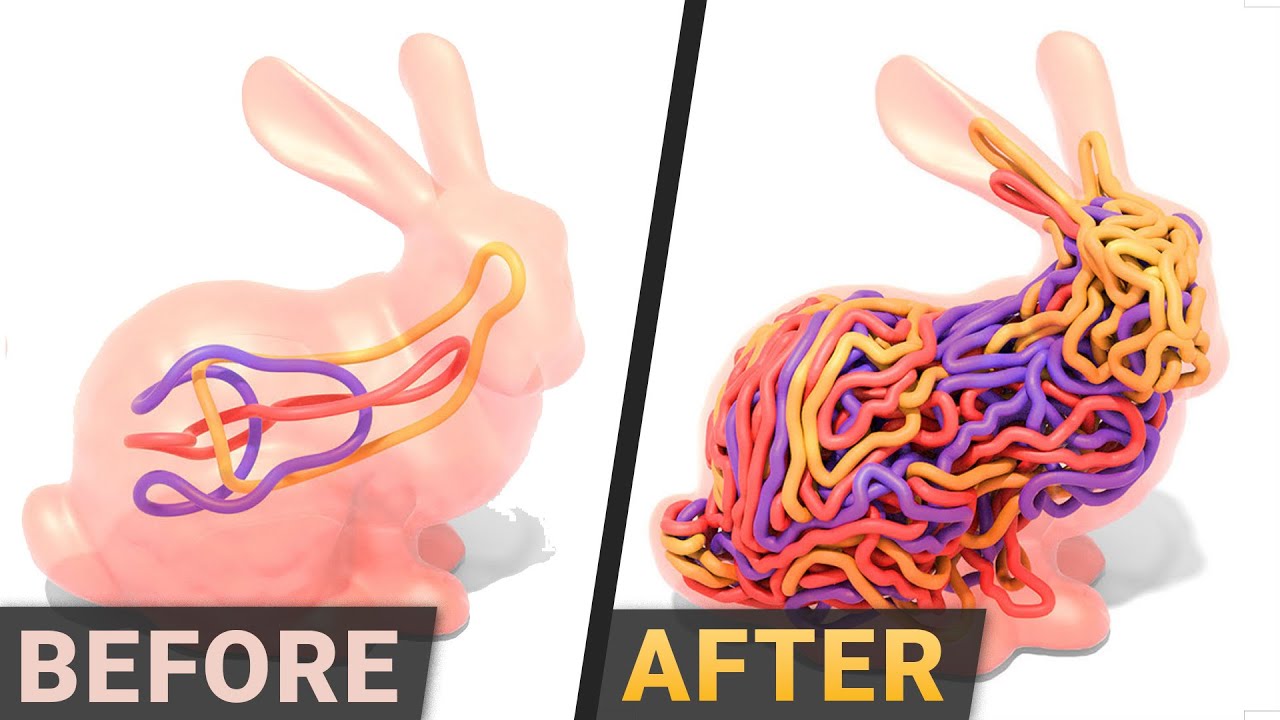

This is giving me the creeps. Makes me think of these worm parasites that grow inside of insects and get out when they’re fully developed. Nightmare fuel

Breakthrough in GPU performance technology from NVIDIA, IBM, and universities: GPUs connect directly to SSDs instead of relying on the CPU, which increases performance

Big Accelerator Memory, or BaM, is an intriguing effort to reduce the dependence of NVIDIA GPUs and comparable hardware accelerators on a standard CPU for tasks such as accessing storage, which should improve performance and capacity.

Microsoft’s DirectStorage application programming interface (API) promises to improve the efficiency of GPU-to-SSD data transfers for games in a Windows environment, but Nvidia and its partners have found a way to make GPUs seamlessly work with SSDs without a proprietary API. The method, called Big Accelerator Memory (BaM), promises to be useful for various compute tasks, but it will be particularly useful for emerging workloads that use large datasets. Essentially, as GPUs get closer to CPUs in terms of programmability, they also need direct access to large storage devices.

Modern graphics processing units aren’t just for graphics; they’re also used for various heavy-duty workloads like analytics, artificial intelligence, machine learning, and high-performance computing (HPC). To process large datasets efficiently, GPUs either need vast amounts of expensive special-purpose memory (e.g., HBM2, GDDR6, etc.) locally, or efficient access to solid-state storage. Modern compute GPUs already carry 80GB–128GB of HBM2E memory, and next-generation compute GPUs will expand local memory capacity. But dataset sizes are also increasing rapidly, so optimizing interoperability between GPUs and storage is important.

There are several key reasons why interoperability between GPUs and SSDs has to be improved. First, NVMe calls and data transfers put a lot of load on the CPU, which is inefficient from an overall performance and efficiency point of view. Second, CPU-GPU synchronization overhead and/or I/O traffic amplification significantly limits the effective storage bandwidth required by applications with huge datasets.

BaM essentially enables Nvidia GPUs to fetch data directly from system memory and storage without using the CPU, which makes GPUs more self-sufficient than they are today. Compute GPUs continue to use local memory as software-managed cache, but will move data using a PCIe interface, RDMA, and a custom Linux kernel driver that enables SSDs to read and write GPU memory directly when needed. Commands for the SSDs are queued up by the GPU threads if the required data is not available locally. Meanwhile, BaM does not use virtual memory address translation and therefore does not experience serialization events like TLB misses. (…)
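For anyone wondering what "local memory as software-managed cache" plus "commands queued up when the data is not available locally" looks like, here is a rough toy model in plain Python. It is only a sketch of the general idea, not the BaM driver: `ToySSD`, `SoftwareCache`, `BLOCK_SIZE`, and the LRU eviction policy are all made up for illustration, and everything runs on the CPU in a single thread.

```python
from collections import OrderedDict, deque

BLOCK_SIZE = 4  # elements per storage block (hypothetical, tiny on purpose)


class ToySSD:
    """Stands in for an NVMe drive: a flat list that is read one block at a time."""

    def __init__(self, data):
        self.data = data
        self.reads = 0  # how many block reads actually reached "storage"

    def read_block(self, block_id):
        self.reads += 1
        start = block_id * BLOCK_SIZE
        return self.data[start:start + BLOCK_SIZE]


class SoftwareCache:
    """Stands in for GPU local memory used as a cache managed in software."""

    def __init__(self, ssd, capacity_blocks):
        self.ssd = ssd
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block_id -> data, oldest entry first
        self.queue = deque()         # pending read commands for the SSD

    def read(self, index):
        block_id, offset = divmod(index, BLOCK_SIZE)
        if block_id not in self.blocks:
            # Miss: the requesting "thread" queues a command itself,
            # with no separate orchestrator in between.
            self.queue.append(block_id)
            self._drain_queue()
        self.blocks.move_to_end(block_id)  # mark as most recently used
        return self.blocks[block_id][offset]

    def _drain_queue(self):
        while self.queue:
            block_id = self.queue.popleft()
            if block_id in self.blocks:
                continue  # another request already brought this block in
            if len(self.blocks) >= self.capacity:
                self.blocks.popitem(last=False)  # evict least recently used
            self.blocks[block_id] = self.ssd.read_block(block_id)
```

Reading indices 0, 1 and 2 through a `SoftwareCache` touches the toy SSD only once, because all three live in the same block; only a read from a block that is not cached queues another command.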

Hah, at bloody last! It was bound to happen, and it will completely change the game.

Nvidia and its partners plan to open-source the driver to allow others to use their BaM concept.

Wait… it’s only a driver?? I thought it was some kind of hardware interface…! And it’s going to be open-sourced?? That’s mad. Does that mean it could run on current-generation hardware?

Don’t think so.

BaM instead provides software and a hardware architecture that allows Nvidia GPUs to fetch data direct from memory and storage and process it without needing a CPU core to orchestrate it.

From The Register

Ah that seems like a more complete article… cheers

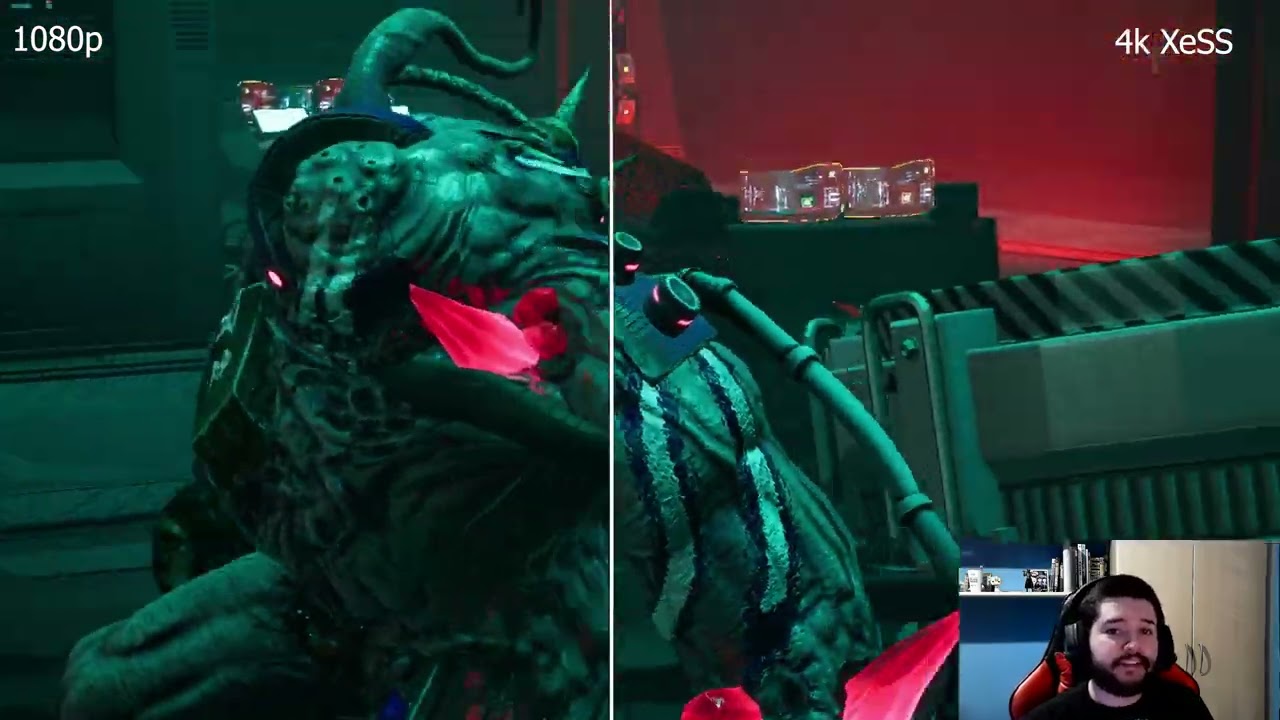

The new Intel discrete GPUs will come with ray tracing. Will Cycles X get support?

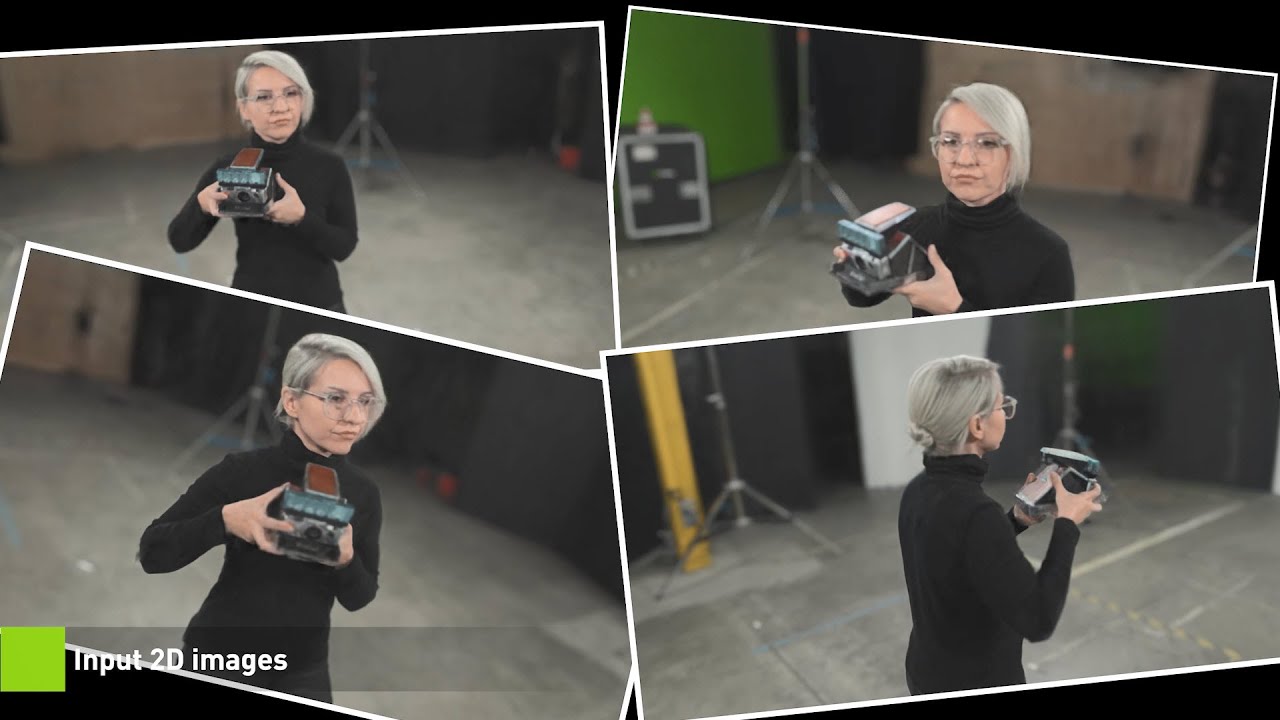

When the first instant photo was taken 75 years ago with a Polaroid camera, it was groundbreaking to rapidly capture the 3D world in a realistic 2D image. Today, AI researchers are working on the opposite: turning a collection of still images into a digital 3D scene in a matter of seconds with the implementation of neural radiance fields (NeRFs).

David Luebke, vice president for graphics research at NVIDIA, said in a statement:

If traditional 3D representations like polygonal meshes are akin to vector images, NeRFs are like bitmap images: they densely capture the way light radiates from an object or within a scene. In that sense, Instant NeRF could be as important to 3D as digital cameras and JPEG compression have been to 2D photography — vastly increasing the speed, ease and reach of 3D capture and sharing.
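To make the "way light radiates" part a bit more concrete: a radiance field gives a density and a colour at each point in space, and a pixel is produced by blending the samples along a camera ray from front to back. Below is a minimal Python sketch of that blending step only. The function name `composite_ray` and the hand-written sample values are assumptions for illustration; in a real NeRF the densities and colours come from a trained network, and none of this is NVIDIA's Instant NeRF code.

```python
import math


def composite_ray(densities, colors, step):
    """Blend samples along one camera ray, front to back.

    densities: density (sigma) at each sample point along the ray
    colors:    (r, g, b) tuple at each sample point
    step:      distance between neighbouring samples
    """
    transmittance = 1.0  # fraction of light not yet blocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha         # contribution to the pixel
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha           # light left for later samples
    return pixel, transmittance
```

Empty space (density 0) contributes nothing, while a very dense sample blocks everything behind it, so the pixel takes the colour of the first solid surface the ray hits.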

The applications for Instant NeRF technology are many, from quickly scanning real environments or people so that game creators can use the digital scans in their projects, to training self-driving cars or robots to understand the shape and size of real objects.

I wonder if this technology can be used to speed up simulations.

We can put 3D into images and video. What about placing 2D objects correctly in 3D scenes?

Really cool tech. Could it be implemented in Cycles? https://www.youtube.com/watch?v=yl1jkmF7Xug

This is pure witchcraft, we need this in Blender for character animation! https://vimeo.com/707052757?login=true#=

Instant Monsters? For real???

I was just sent this by a friend.

Looks a lot of fun.

It’s like Teddy 3D, but more advanced, and I’ve wanted it in Blender since forever. Hopefully this time it makes its way to Blender!