To be honest, so far I am pretty underwhelmed by this new AI toy.

I love how AI can be helpful for artists in practical ways these days. The two examples that immediately come to mind are AI denoising and upscaling.

Currently, though, this AI text-to-image tech produces mostly visual garbage. Sometimes it’s pretty interesting garbage, but I still see very little value so far. It’s very difficult to create art with intention with this right now. Maybe it’ll evolve into something better and more useful in time. There is probably some value for concept artists at the ideation stage, to get the creative juices flowing if you’re in a rut.

For now, though, I think we’re doomed to get flooded with this kind of “art”, until people get sick of it or the tech evolves.

AMD has released the source code for FSR 2.0.

As with FSR 1.0, it is available under an open-source MIT licence, and includes the full C++ and HLSL sources.

AMD has also posted detailed API documentation online, and updated the FSR 2.0 product page with more information and comparison images.

AMD has unveiled FidelityFX Super Resolution 2.0 (FSR 2.0), the latest version of its open-source image upscaling technology, widely used in games and now also supported in AMD’s workstation GPU drivers.

The update, which is being previewed at GDC 2022 this week, and which is due to become available in “Q2 2022”, introduces support for temporal as well as spatial upscaling to improve the visual quality of output.

FSR render upscaling improves the frame rate of games – and now CAD and DCC apps

Launched last year, FSR is an image upscaling system, enabling software to render the screen at lower resolution, then upscale the result to the actual resolution of the user’s monitor.
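To make the "render low, then upscale" idea concrete, here is a minimal sketch of the simplest possible spatial upscaler, nearest-neighbour sampling. This is not FSR's algorithm (FSR uses far more sophisticated filtering, and FSR 2.0 adds temporal data); the function name `upscale_nearest` and the tiny 2×2 "frame" are made up purely for illustration.

```python
def upscale_nearest(image, out_width, out_height):
    """Upscale a 2D grid of pixel values by nearest-neighbour sampling.

    Illustration only: NOT the FSR algorithm, just the basic idea of
    filling a larger frame from a smaller rendered one.
    """
    in_height = len(image)
    in_width = len(image[0])
    result = []
    for y in range(out_height):
        # Map each output row back to the source row it falls inside.
        src_y = y * in_height // out_height
        row = [image[src_y][x * in_width // out_width] for x in range(out_width)]
        result.append(row)
    return result


# Hypothetical 2x2 "low-resolution render" upscaled to 4x4.
low_res = [[1, 2], [3, 4]]
high_res = upscale_nearest(low_res, 4, 4)
for row in high_res:
    print(row)
```

Each source pixel simply becomes a 2×2 block here, which is why naive upscaling looks blocky and why smarter spatial and temporal methods exist.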

Licensing and release dates

FSR 2.0 is compatible with Windows 10, and supports DirectX 12 and Vulkan. The source code is available under an MIT licence.

Can anyone elaborate on why this FSR 2.0 open-source release is a big deal? If it is, of course.

I understand it in a nutshell. In gaming it’s pretty clear so far what value it brings, but how will this improve 3D artists’ lives? Will it come to Cycles or other render engines like Intel Open Image Denoise did? Will we be able to upscale our renders seamlessly and spend less time on rendering?

What possibilities does this open up for us in both the short and the long term?

P.S. I’ll tag you here @bsavery. I believe you might have a good understanding of this.

An upscaling tool. It would be nice to have it for the Cycles viewport and also for Eevee.

You can render at 1080p, and this makes it possible to upscale that to a bigger resolution, maybe 4K for example. If you are short on time in your project, that might be important. But it would also be great for the viewport.
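A quick back-of-the-envelope check of how much work that could save, assuming "1080" means 1920×1080 and "4K" means UHD 3840×2160 (both are my assumptions, as is the idea that render time scales roughly with pixel count at a fixed sample count):

```python
def pixel_count(width, height):
    """Number of pixels in a frame of the given size."""
    return width * height


# Assumed resolutions: 1080p render, UHD "4K" target.
render_pixels = pixel_count(1920, 1080)
target_pixels = pixel_count(3840, 2160)

# How many times more pixels the target frame has than the rendered one.
ratio = target_pixels / render_pixels
print(render_pixels, target_pixels, ratio)
```

So the renderer would only have to produce a quarter of the final pixels, with the upscaler filling in the rest. The real-world saving depends on the scene and on the cost of the upscaling pass itself.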

This. Probably most useful for viewport speed.

What was that video even about? As if photogrammetry hadn’t existed before (without machine learning)?

So it’s supposedly faster, or what? Even if it is, I could probably just have thrown more computational resources at the problem to make it faster, too. But that would have cost a hell of a lot of money.

Fortunately, Nvidia’s graphics cards don’t cost a hell of a lot of money, right?

.- Wait a moment…

greetings, Kologe

That is not wise.

They don’t cost that much. You can buy a 3060 laptop for about 1000 euros, and it has a lot of other uses for us 3D artists, for example Cycles, or 2D video editing, encoding and FX in several video editors.

The more jobs a GPU can do, the cheaper it effectively is. So we should welcome more and more jobs a GPU can do, whether it is from Nvidia, ATI, Intel or …

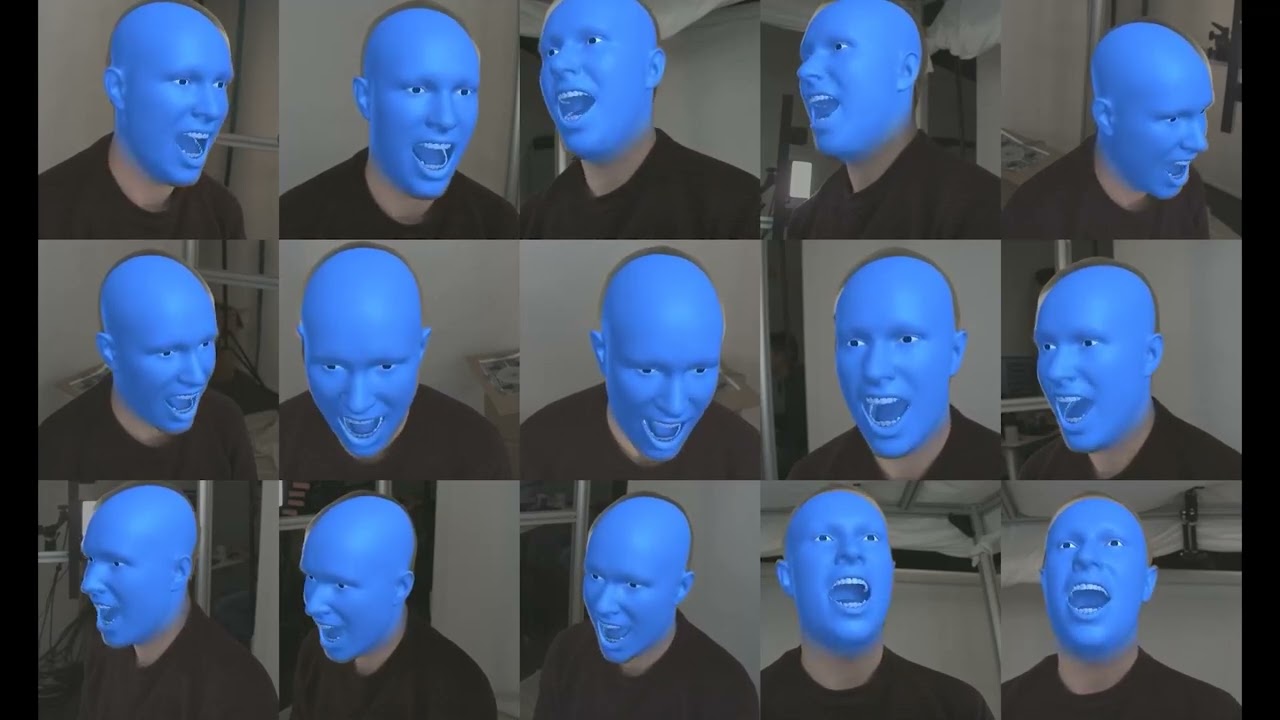

This video (part #1 of an ongoing tutorial series) introduces Mitsuba 3, a new differentiable renderer developed by the Realistic Graphics Lab (https://rgl.epfl.ch) at EPFL in Switzerland. The tutorial series provides a gentle introduction and showcases the work of different team members.

I think this is probably one of the coolest things I’ve ever seen.

I don’t think it’s that grim. These tools will never replace humans for creativity, because it’s impossible for a machine to be ‘creative’ - unless, of course, it goes full ‘Ghost in the Shell’.

People still use 2,500-year-old sculpting and painting techniques, even though they could use computers.

These tools will be used for more technical or laborious tasks, but not creative ones. Take mocap, for example. It’s been around for years, but even though it’s 100% realistic movement, it looks terrible next to a skilled human animator’s work. Ironically, it’s lifeless.