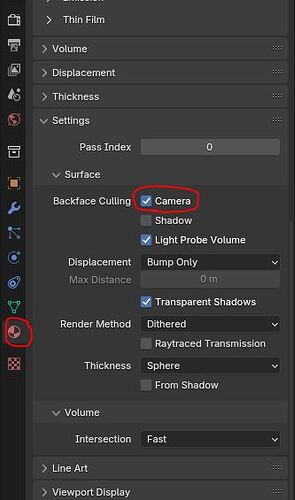

If you are talking specifically about the new global illumination, it’s all about understanding what its settings do.

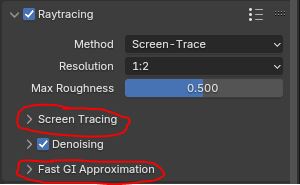

The new GI is actually made up of two different methods: screen tracing and fast GI.

Screen tracing is the higher-quality, more expensive method. It is also noisy, which is what the denoiser is there for. Fast GI is a faster, usually less noisy method that looks a bit less realistic.

Which of the two methods is used is controlled by the “max roughness” slider. Any material that’s less rough than that value uses screen tracing, and any material that’s rougher uses fast GI. That’s because screen tracing really benefits reflections but tends to be noisier on rough surfaces, while fast GI works best on rough surfaces, so it can take care of those.
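To make the routing rule concrete, here is a simplified sketch of it as a hard threshold. This is not Blender’s code: the function name `gi_method_for` is hypothetical, the real renderer may blend between the methods near the threshold, and sending a roughness exactly equal to the slider value to fast GI is an arbitrary choice of this model.

```python
def gi_method_for(roughness: float, max_roughness: float) -> str:
    """Return which GI method handles a surface of the given roughness.

    Hypothetical, simplified model of the "max roughness" slider:
    surfaces smoother than the threshold are screen traced, the rest
    fall back to fast GI.
    """
    if not 0.0 <= roughness <= 1.0:
        raise ValueError("roughness must be in [0, 1]")
    if not 0.0 <= max_roughness <= 1.0:
        raise ValueError("max_roughness must be in [0, 1]")
    # Strict comparison: a surface exactly at the threshold uses fast GI (assumption).
    return "screen tracing" if roughness < max_roughness else "fast GI"


# Example materials, with the slider at an example value of 0.5
for name, r in [("polished floor", 0.05), ("brushed metal", 0.4), ("plaster wall", 0.9)]:
    print(f"{name}: {gi_method_for(r, max_roughness=0.5)}")
```

With the slider at 0.5, the polished floor and brushed metal are screen traced, while the plaster wall falls back to fast GI.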

If you set max roughness to 1, only screen tracing is used, so you get the high-quality method everywhere, but the image becomes noisy and needs many samples to clean up, almost like a mini Cycles.

If you set max roughness to 0, only fast GI is used. The image then renders very fast and with little noise, but you lose a bit of detail, especially in reflections.
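One way to picture these two extremes is to sweep the slider over a scene and count how many surfaces land on each method. The sketch below uses a hypothetical hard-threshold model (not Blender’s code); the function name `screen_traced_share` and the roughness values are made up for illustration, and a surface at exactly the threshold is counted as fast GI, which is an arbitrary choice.

```python
def screen_traced_share(roughnesses: list[float], max_roughness: float) -> float:
    """Fraction of surfaces that would be screen traced (hypothetical model)."""
    if not roughnesses:
        return 0.0
    # Surfaces smoother than the threshold go to screen tracing.
    traced = sum(1 for r in roughnesses if r < max_roughness)
    return traced / len(roughnesses)


# Hypothetical roughness values for the materials in a scene
scene = [0.0, 0.1, 0.3, 0.5, 0.7, 0.95]

for slider in (0.0, 0.5, 1.0):
    share = screen_traced_share(scene, slider)
    print(f"max roughness {slider:.1f}: {share:.0%} screen traced, {1 - share:.0%} fast GI")
```

Raising the slider moves more of the scene onto the slower, noisier, higher-quality path; lowering it moves more onto fast GI.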

Fast GI itself offers two methods: Global Illumination and Ambient Occlusion. Choosing Ambient Occlusion turns the global illumination off, giving a faster render similar to old Eevee. If you do this, you need another way to get bounce light: either use a “volume” light probe, or fake it manually with lights.
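The consequence of switching fast GI to Ambient Occlusion can be expressed as a simple checklist: something still has to supply bounce light on the rough surfaces that fast GI handles. The sketch below is purely illustrative; `FastGISetup`, `bounce_light_sources` and the method strings are hypothetical names, not Blender’s Python API.

```python
from dataclasses import dataclass


@dataclass
class FastGISetup:
    """Hypothetical description of a scene's fast GI configuration."""
    method: str  # "global_illumination" or "ambient_occlusion"
    has_volume_probe: bool = False
    has_fill_lights: bool = False


def bounce_light_sources(setup: FastGISetup) -> list[str]:
    """List what provides bounce light on rough surfaces (simplified model)."""
    if setup.method not in ("global_illumination", "ambient_occlusion"):
        raise ValueError(f"unknown fast GI method: {setup.method!r}")
    sources = []
    if setup.method == "global_illumination":
        sources.append("fast GI")
    if setup.has_volume_probe:
        sources.append("volume light probe")
    if setup.has_fill_lights:
        sources.append("manual fill lights")
    return sources


ao_only = FastGISetup(method="ambient_occlusion")
if not bounce_light_sources(ao_only):
    print("Warning: no bounce light on rough surfaces; add a volume probe or fill lights")
```

An Ambient Occlusion setup with neither a volume probe nor fill lights returns an empty list, which is the case the warning catches.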

The exact settings you need depend on what’s in your scene. An exterior scene in bright daylight reacts very differently from a dark scene with lots of small emissive objects (the GI really doesn’t like those; they produce a lot of noise).

Honestly, the default settings are pretty well optimized for most scenes. Most changes you could make are about increasing quality (turning off denoising, increasing precision, using 1:1 resolution), which costs render time.

The main thing to know is that you can set fast GI to Ambient Occlusion for a faster render that’s more like old Eevee, especially in scenes with lots of emissive materials that would otherwise cause noise.