BD3D

July 29, 2019, 5:01pm

187

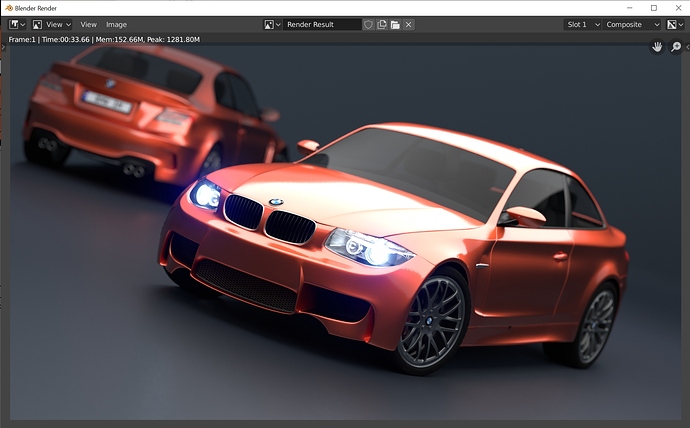

Viewport performance for 200 samples [2x 2080 Ti]: RTX ON: 37s, RTX OFF: 55s

@2200s [2x 2080 Ti]:

16x16 tiles: RTX ON: 14m65s56, RTX OFF: 20m55s59

32x32 tiles: RTX ON: 9m34s34, RTX OFF: 17m07s77

64x64 tiles: RTX ON: 9m09s96, RTX OFF: 16m24s26

256x256 tiles: RTX ON: 8m43s40, RTX OFF: 14m16s22
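For anyone who wants to compare these numbers as ratios, here is a rough sketch that converts the posted timings to seconds and divides RTX OFF by RTX ON. It assumes the trailing two digits are hundredths of a second (so `9m34s34` is read as 9 min 34.34 s), which the post does not actually state. The function names `parse_time` and `speedups` are made up for this example, and the values are copied exactly as posted, including the odd `14m65s56`.

```python
import re

def parse_time(text):
    """Convert a string such as '9m34s34' to seconds.

    Assumption: the digits after 's' are a decimal fraction of a second.
    """
    match = re.fullmatch(r"(\d+)m(\d+)s(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognized time format: {text!r}")
    minutes, seconds, fraction = match.groups()
    return int(minutes) * 60 + int(seconds) + float("0." + fraction)

def speedups(timings):
    """Return {tile size: RTX OFF time / RTX ON time} for each entry."""
    return {
        tiles: parse_time(rtx_off) / parse_time(rtx_on)
        for tiles, (rtx_on, rtx_off) in timings.items()
    }

# (RTX ON, RTX OFF) per tile size, copied verbatim from the post above.
POSTED_TIMINGS = {
    "16x16": ("14m65s56", "20m55s59"),
    "32x32": ("9m34s34", "17m07s77"),
    "64x64": ("9m09s96", "16m24s26"),
    "256x256": ("8m43s40", "14m16s22"),
}

if __name__ == "__main__":
    for tiles, ratio in speedups(POSTED_TIMINGS).items():
        print(f"{tiles:>8} tiles: RTX ON is {ratio:.2f}x faster")
```

A ratio above 1 means the RTX ON render finished sooner. These are single runs on one scene, so treat the ratios as indicative only.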

I got a crash on the first try.

6 Likes

shrisha

July 29, 2019, 5:41pm

189

@BD3D Did you install 435 driver and OptiX 7?

BD3D

July 29, 2019, 5:43pm

190

https://blender.community/c/graphicall/Cfbbbc/

I used this build, and it will not work without the driver anyway.

1 Like

shrisha

July 29, 2019, 5:43pm

191

Today's Studio drivers do not make any difference. Still no compatible GPU found for path tracing.

Right now you still need to install this beta driver to make Blender recognize Optix.

Did a first test, but before the rendering starts I get the message “OptiX implementation does not support shader raytracing yet.”

Are you using the AO or Bevel node by any chance?

Yep, the Bevel node. That’s not supported yet?

Just a guess: try removing that node.

I know they had to jump through some hoops to get that working. It's possible that the OptiX BVH acceleration doesn't work with the Cycles-specific shader code.

1 Like

Thanks for the pointer. Going to check it right away. BRB.

Yup, the Bevel shader turned out to be the error-causing culprit. OptiX renders noticeably faster than CUDA, nice!
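If you hit the same "does not support shader raytracing yet" message, a quick way to find the offending materials is to scan their node trees for Bevel and Ambient Occlusion nodes. The sketch below is only a starting point: `find_raytrace_nodes` is a made-up helper name, it only looks at each material's top-level node tree (it does not descend into node groups), and it assumes these two node types are the only ones affected, which is a guess based on the posts above.

```python
# Node identifiers for the two shader nodes that trace rays inside the shader.
RAYTRACE_NODE_IDS = {"ShaderNodeBevel", "ShaderNodeAmbientOcclusion"}

def find_raytrace_nodes(materials):
    """Return (material name, node name) pairs for Bevel/AO nodes.

    `materials` is any iterable of objects shaped like Blender materials,
    i.e. with `.name` and an optional `.node_tree.nodes` collection.
    """
    hits = []
    for mat in materials:
        tree = getattr(mat, "node_tree", None)
        if tree is None:
            continue  # material does not use nodes
        for node in tree.nodes:
            if node.bl_idname in RAYTRACE_NODE_IDS:
                hits.append((mat.name, node.name))
    return hits

if __name__ == "__main__":
    try:
        import bpy  # only available inside Blender
    except ImportError:
        print("Run this from Blender's text editor or Python console.")
    else:
        for mat_name, node_name in find_raytrace_nodes(bpy.data.materials):
            print(f"{mat_name}: {node_name}")
```

Removing or muting the listed nodes and rendering again should tell you whether they were the cause.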

Looking at @BD3D ’s render tests I assume that larger bucket sizes can be reintroduced for faster GPU rendering, as was once the case?

2 Likes

BD3D

July 29, 2019, 6:11pm

200

Indeed, no more small tiles, I guess? So disorienting.

1 Like

I’m curious where the tipping point of rendering speed increase is. Will 512 x 512 pixels buckets be even faster if your GPU has enough RAM?

Is it RTX cards only, or is my old GTX 960 also supported?

This is what NVIDIA answered on their forum:

"All CUDA capable devices starting with sm_10 (G80) are supported, but the most features are supported on the newest generation of cards (currently Kepler).

We recommend using Quadro or Tesla based parts as these have the greatest amount of memory and are designed for a more rigorous use. However, GeForce based products should also work. If you go the GeForce direction, I would recommend the GeForce Titan card."

LazyDodo

July 29, 2019, 6:49pm

204

Metin_Seven:

This is what NVIDIA answered on their forum:

"All CUDA capable devices starting with sm_10 (G80) are supported, but the most features are supported on the newest generation of cards (currently Kepler).

If the "current generation" is Kepler, I'm going to guess that the post you are referencing is at least 6-7 years old.

The OptiX support in Blender is currently for RTX cards only.

Oops. @Cyclesreality . I’ve been a Mac guy during the past years, and have just recently returned to Windows + NVIDIA.

Testing on an interior scene I’m working on yields very promising results.

RTX 2070 only:

I wasn’t expecting double the speed. I’m sure it will vary greatly depending on the scene, but for now, it’s a lot better than I was expecting.

3 Likes

Sorry about that,
