Except… neither are path tracers. They do use raytracing but not the pathtracing algorithm. (Actually I think you can use V-Ray in full pathtracing mode, but it’s not the default).

Excuse me, what?

Nope. Pretty much all of the modern renderers allow you to trace secondary rays using brute force path tracing. They just don’t default to that because it’s inefficient. That is why I very explicitly wrote raytracers/pathtracers, not just pathtracers.

Of course, if you compare them to Cycles, then it makes sense to switch them to full path tracing mode instead of caching secondary GI rays; otherwise it would not be a fair comparison. But these days, the terms path tracing and ray tracing are more or less interchangeable, since the vast majority of renderers let you optionally trace rays with one or more bounces without any caching.

I’m not an expert in either renderer, but IIRC V-Ray is only technically path tracing if you turn on the progressive image sampler option. That might be the default in the newest versions, but in the recent past it was not.

Redshift is not a path tracer. Both of these are ray tracers though, fyi.

But again I could be wrong as I’m not an expert in the inner workings of either. Feel free to show evidence otherwise. https://www.cgvizstudio.com/biased-vs-unbiased-rendering-engine/

That might be true from a general perception standpoint, but from an algorithm standpoint the terms path tracer and ray tracer certainly are not interchangeable. Please do not use them interchangeably.

I have to agree with bsavery, your definition of a pathtracer is not what my understanding of it is. As far as quality of the renders go I will take a pathtracer over a raytracer any day. I can spot a Vray render a mile off, and always wished the artist had used a pathtracer instead (any pathtracer) as it would have looked so much better.

If you really want to test it, I’m willing to give it a try. We could find or create a test scene and then render it in several engines. I can render it in Octane, and I’m sure there are people here who would be willing to render it in Cycles. You or someone else could render it in Vray and/or Redshift and then we could compare.

Alright, let me first debunk this:

Next, about Redshift or Vray not being path tracers. First of all path tracing != progressive rendering. Simple proof being that Cycles can render in bucket mode too and rasterizers can render progressive as well.

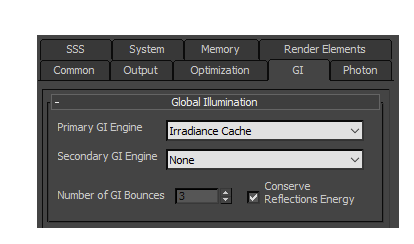

Both Redshift:

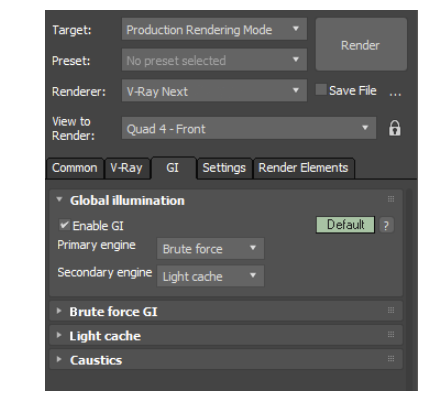

As well as Vray:

…let you select both a primary and a secondary GI solver. The primary one defines what happens with the primary bounce, and the secondary one defines what happens with all the other bounces. In both, you are able to set both solvers to Brute Force. Also in both, you are able to set samples per pixel to just 1 to disable any ray branching. Conversely, in Cycles, you are able to use the Branched Path Tracer, which, as the name suggests, is still considered a path tracer.

Now, if we set Vray/Redshift to full brute force by setting both primary and secondary GI solvers accordingly, and we match the ray intensity clamping value and trace depth values to the ones we’ve set up in Cycles, which is again possible in both, then what difference in the rendering mechanics do we get? All 3 renderers then end up tracing rays and, on intersections, tracing further rays to form continuous paths until the trace depth limit is reached. That is, by nature, path tracing: just tracing multiple consecutive rays. It may not be unbiased path tracing, but Cycles is not an unbiased path tracer either.
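As a rough illustration of why clamping sample intensity makes a renderer biased, here is a minimal Python sketch. The sample values and the names `clamp_sample` and `pixel_estimate` are made up for this example; they are not taken from Cycles, V-Ray, or Redshift.

```python
def clamp_sample(value: float, max_intensity: float) -> float:
    """Limit one sample's contribution (hypothetical stand-in for a clamp setting)."""
    return min(value, max_intensity)


def pixel_estimate(samples, max_intensity=None):
    """Average the samples for one pixel, optionally clamping each one first."""
    if max_intensity is not None:
        samples = [clamp_sample(s, max_intensity) for s in samples]
    return sum(samples) / len(samples)


# Made-up sample values: three dim paths and one very bright "firefly" path.
samples = [0.2, 0.3, 0.1, 50.0]
unclamped = pixel_estimate(samples)
clamped = pixel_estimate(samples, max_intensity=10.0)
```

The clamped average comes out lower because part of the bright path's energy is discarded. That is the sense in which clamping trades correctness for less noise, whichever renderer applies it.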

Hi. I think that people out there have already done that other times:

I think, though, that this is an old test that predates the improvements Cycles has received very recently.

I’ve done more of these tests than you can imagine. They all ended up almost identical. For example, take a look here: Cycles Performance. The first two pictures are rendered in Cycles and Corona respectively. You can see remarkable visual parity with an identical setup, except for slightly different handling of shading normals to compensate for the terminator line issue.

If you can spot a Vray render a mile off, can you pick out which of these are rendered in Vray? (Without being dishonest and reverse-searching them):

Oh, I thought you were referring to “Bias a little here, bias a little there, bias the shading a little, your dream of making realistic images endures the digital equivalent of ‘death by a thousand cuts’.” I mean, Vray, Corona and Redshift have lots of bias, and they are faster and can output the same or better quality than Blender :)))

The issue here, whatever you call it, has to do with adding random ray samples up to some sample count to clean up noise. Even if you don’t see it happening, I assure you this is what is happening behind the scenes with each tile in Cycles when in bucket mode. It just doesn’t display until the end of the tile.
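A minimal sketch of that point, using a made-up `sample_radiance` function in place of real path tracing: both loops below average the same random samples per pixel, and only the order in which the work is done differs. All names here are hypothetical and do not reflect how Cycles is implemented.

```python
import random


def sample_radiance(pixel: int, sample_index: int) -> float:
    """Hypothetical stand-in for tracing one random path through a pixel."""
    # Seeding per (pixel, sample) makes each sample reproducible in any order.
    rng = random.Random(pixel * 100_003 + sample_index)
    return rng.random()


def render_bucket(num_pixels: int, spp: int) -> list:
    """Finish every sample of a pixel before moving on to the next pixel."""
    image = []
    for pixel in range(num_pixels):
        total = 0.0
        for s in range(spp):
            total += sample_radiance(pixel, s)
        image.append(total / spp)
    return image


def render_progressive(num_pixels: int, spp: int) -> list:
    """Add one sample to every pixel per pass, refining the whole image."""
    sums = [0.0] * num_pixels
    for s in range(spp):
        for pixel in range(num_pixels):
            sums[pixel] += sample_radiance(pixel, s)
    return [total / spp for total in sums]
```

In this toy setup the two functions return the same image, which suggests that bucket versus progressive is a scheduling and display choice rather than a difference in what gets sampled.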

The one thing that isn’t clear to me is if Redshift or V-Ray when in “brute-force” mode are actually path tracing or brute force ray tracing. There is a difference. Either way I stand by my original statement that by default neither is a path-tracer (your words). They might have path tracing solvers for secondary modes, but neither by default.

I would agree with the statement “unbiased” is overused and incorrect (the v-ray post is very good).

But even if you are using a cache for secondary bounces, you still have to trace a path to that cache entry. The cache is not directly visible; it is sampled by secondary rays, which can and will still have multiple bounces. A renderer would be a simple raytracer only if you had all the secondary GI directly baked into some sort of lightmap, like Unreal Engine Lightmass + RTX raytracing…

Well, then what would you say happens behind the scenes when Vray or Redshift render a bucket in full brute force mode? Why would you assume it’s any different? Adding samples to clean up noise is the definition of progressive rendering, not of path tracing.

Let’s look at it this way. Imagine you write a simple path tracer that shoots only one ray per pixel, bounces it, let’s say, 25 times, and then stops. It does not progressively add any more random samples over time, so the noise would be extreme, but it would still trace paths. Would it not be a path tracer?

Or an even better example: take any progressive renderer you deem a path tracer and set the SPP amount to just 1, so that after the initial trace pass no progressive refinement happens, but keep the trace depth values high. Would the renderer stop being a path tracer at that moment?
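The thought experiment can be sketched in a few lines of Python. This is a toy model under loud assumptions: the "scene" is reduced to a fixed chance of hitting a light at each bounce, and `trace_path`, `render_pixel`, and all constants are invented for illustration, not taken from any renderer.

```python
import random


def trace_path(rng: random.Random, max_bounces: int = 25) -> float:
    """Follow a single path through a made-up scene and return its radiance."""
    albedo = 0.7      # assumed fraction of light kept at each diffuse bounce
    light_prob = 0.1  # assumed chance that a bounce lands on the light
    emission = 10.0   # assumed brightness of the light
    throughput = 1.0
    for _ in range(max_bounces):
        if rng.random() < light_prob:
            return throughput * emission  # the path reached the light
        throughput *= albedo              # bounce off a wall and keep going
    return 0.0                            # depth limit reached without light


def render_pixel(spp: int, seed: int = 0) -> float:
    """Average `spp` independently traced paths for one pixel."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(spp):
        total += trace_path(rng)
    return total / spp


one_sample = render_pixel(spp=1)        # a single, very noisy estimate
many_samples = render_pixel(spp=20000)  # same tracing code, averaged
```

With `spp=1` the tracing code is unchanged; only the averaging loop runs once, so the pixel value is a single noisy estimate instead of a converged one.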

Alright, if there is a difference, then can you describe it? Let’s imagine a brute force ray tracer, which has all the trace depths (diffuse, specular, transmission, etc…) set to the same value of 25 bounces, and has any ray clamping (max ray/sample intensity) disabled.

Now let’s imagine a path tracer, which has all the trace depths (diffuse, specular, transmission, etc…) set to the same value of 25 bounces, and has any ray clamping (max ray/sample intensity) disabled.

Both are set to progressive refine (no bucket rendering). What would be the fundamental differences in the tracing mechanism?

Or do you really define whether a renderer is a path tracer just by its defaults? That would be kind of silly.

Technically, at 1 spp, it would be a naive recursive ray tracer. The integration of multiple samples is central to path tracing. But I can’t find an official definition, so I’m just going off of Wikipedia.

But if I were to mediate this debate (which is exactly what nobody is asking for):

@rawalanche is being pedantic (but is generally correct)

@Grimm & @bsavery are being obstinate

Honestly, I see basically the same level of “crappiness” in all the examples.

This is wrong; they are not interchangeable at all. Just look at the latest UE4 release, which introduced realtime raytracing (for example, in reflections) but also added a full “standalone” pathtracer, for instance to better compare realtime output against ground-truth offline rendering.

No, actually this is wrong. The only difference of the PT mode in UE4 is that it has a high number of bounces, no ray clamping and no denoising. UE4 RTGI is path tracing with a limited number of bounces, strong ray clamping and denoising.

The generally accepted difference is the way random ray samples are chosen for recursive hits. Aka bias according to some.

I’m not really sure where this discussion is going but the original quote was:

This digression, and my disagreement, is about whether that is correct. And I stand by my statement that it is not correct. Cycles is built from the ground up (and completely) as a path tracer. Redshift and V-Ray may have secondary path-tracing lighting solvers or modes (even if they are not the default mode), but you cannot state that the renderer architectures are fundamentally equivalent and the lighting algorithms are the same.

And that is why I did not state that. There are differences in architectures of Cycles, Vray, Redshift and others respectively. In core architecture, in the ways they handle explicit sampling of light sources, etc… But in their brute force setup, all 3 trace paths. There is just no other way to categorize a renderer which does multiple bounces and does not do caching.

Out of curiosity, would you consider Arnold a path tracer?

There’s no authority defining those terms, so there’s no official definition. If you go by the terminology used by Kajiya, who published the paper that introduced path tracing, then path tracing is a form of ray tracing:

“We essentially perform a conventional ray tracing algorithm, but instead of branching a tree of rays at each surface, we follow only one of the branches to give a path in the tree. […] this new fast form of ray tracing----called path tracing […]”
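The distinction in that quote can be put in numbers. Here is a minimal sketch, assuming every surface hit spawns the same fixed number of secondary rays (real ray tracers vary this per material, and both function names are invented for this example):

```python
def rays_in_branching_tree(branch_factor: int, depth: int) -> int:
    """Rays traced for one primary ray when every hit spawns `branch_factor` rays."""
    # 1 primary ray, then branch_factor rays, then branch_factor**2, and so on.
    return sum(branch_factor ** level for level in range(depth + 1))


def rays_in_single_path(depth: int) -> int:
    """Rays traced when only one branch is followed at every hit."""
    # 1 primary ray plus one continuation ray per bounce.
    return depth + 1


tree_rays = rays_in_branching_tree(branch_factor=2, depth=25)
path_rays = rays_in_single_path(depth=25)
```

With a branch factor of 1 the tree degenerates into a single path, so `rays_in_branching_tree(1, d)` equals `rays_in_single_path(d)` for any depth `d`. The cost of the branching version grows exponentially with depth, while the path version grows linearly.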

Two years later, the technique that we now call irradiance caching was introduced by Ward. There, path tracing is simply referred to as “standard ray tracing techniques”.

But, that was over 30 years ago. Language is evolving.