Hi,

I am creating a new thread so as not to pollute the Cycles Development Updates thread, but this one is a spin-off from the discussion here:

I’ve done some more tests, mainly to showcase how much performance can be gained from being able to use cached GI for secondary bounces.

In my previous post, I compared Corona and Cycles in a pure path tracing scenario (no caching), and they achieved relatively similar performance. Both got to a reasonable result in about 5 minutes at 1280x720 resolution. However, I think it’s hard to appreciate just how much time has to be spent rendering with pure path tracing to get a result with a noise level low enough to hand the image over to a client.

So, I have rendered the same comparison again, but this time the goal was to achieve a noise level acceptable enough to consider the image at least close to final quality:

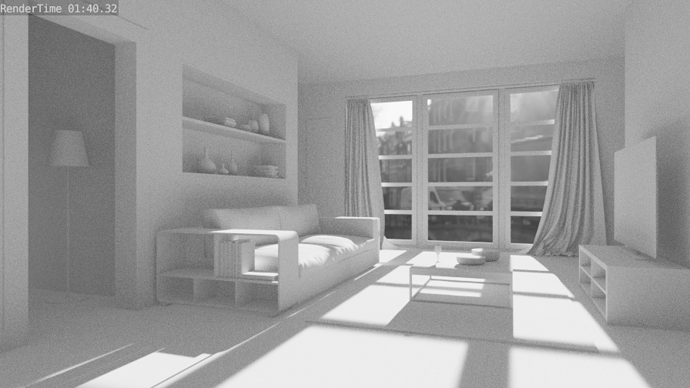

Corona:

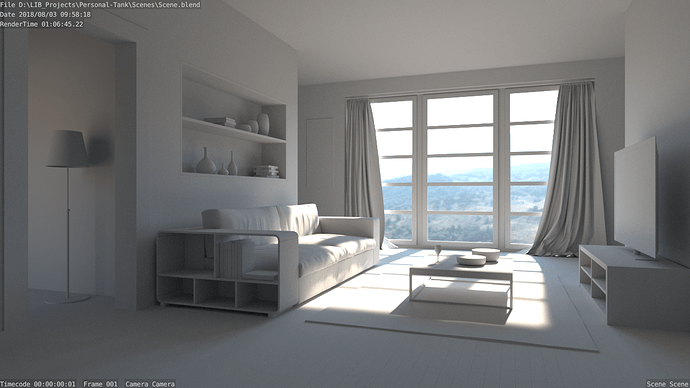

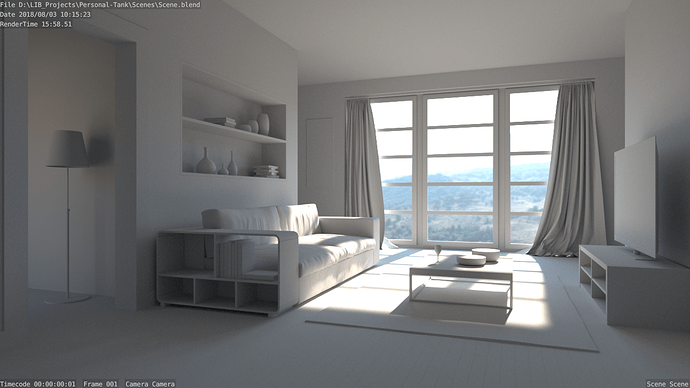

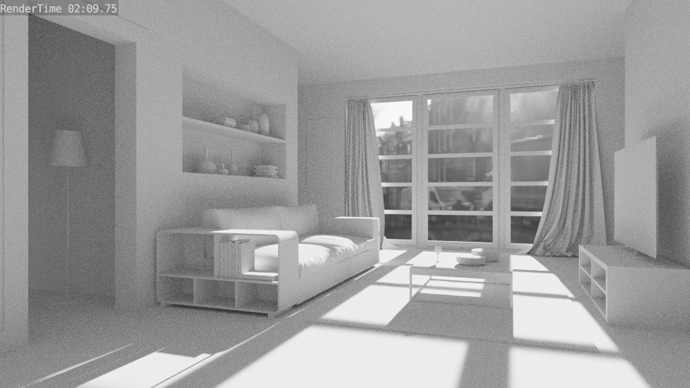

Cycles:

Corona took 1h 15m to achieve a reasonable noise level.

Cycles took 1h 5m to achieve a slightly noisier level. Overall, the performance is again comparable.

Both renderers ran on CPU only, with the same pure path tracing settings, same max ray intensity, same ray depth, etc… Cycles seems to have slightly more aggressive Russian roulette, as it terminates more rays in deep areas, resulting in a very slightly darker result at the same ray depth.

These days, the standard delivery resolution for an archviz render is around 3508x2480 (A4 format). Extrapolating render times by pixel count from 1280x720 (921 600 pixels) to 3508x2480 (8 699 840 pixels), it would take 9.44 times longer to get reasonable quality at that resolution, which is about 11h 15m. 11 hours and 15 minutes per image on an i7 5930k is nowhere near acceptable, unfortunately.
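For anyone who wants to check the extrapolation, here is a minimal sketch. It assumes render time grows linearly with pixel count at a fixed noise level, which is only an approximation, and the function names are made up for illustration. Feeding in the two measured times (1h 5m and 1h 15m) gives a range of roughly 10 to 12 hours, which brackets the figure of about 11 hours quoted above.

```python
def pixel_count(width, height):
    return width * height

def extrapolate_minutes(minutes, src_res, dst_res):
    """Scale a render time by the ratio of pixel counts.

    Assumption: time to reach a given noise level is proportional
    to the number of pixels rendered.
    """
    return minutes * pixel_count(*dst_res) / pixel_count(*src_res)

def as_h_m(minutes):
    """Format a duration in minutes as 'Xh YYm' (rounded to the minute)."""
    total = round(minutes)
    return f"{total // 60}h {total % 60:02d}m"

PREVIEW = (1280, 720)    # 921 600 pixels
FINAL = (3508, 2480)     # 8 699 840 pixels

scale = pixel_count(*FINAL) / pixel_count(*PREVIEW)
corona_final = extrapolate_minutes(75, PREVIEW, FINAL)  # Corona: 1h 15m at 720p
cycles_final = extrapolate_minutes(65, PREVIEW, FINAL)  # Cycles: 1h 5m at 720p

print(f"scale factor: {scale:.2f}")
print("Corona at 3508x2480:", as_h_m(corona_final))
print("Cycles at 3508x2480:", as_h_m(cycles_final))
```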

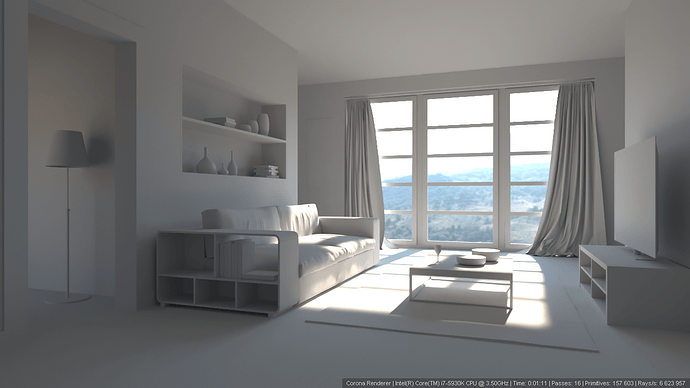

Now let’s take a look at another set of renders. This is Corona with caching for secondary GI (UHD cache):

We have achieved slightly cleaner quality than the PT+PT result in 3 minutes. That is a 25x speedup in this particular case.

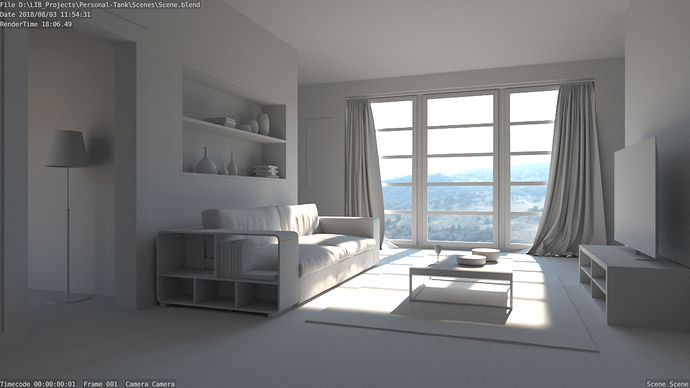

Cycles, on the other hand, has the benefit of being able to add the GPU to the work. I used the latest master with both the i7 5930k and my high-end GTX1080Ti to get this:

Same result in 16 minutes, so that’s a speedup of about 4x compared to pure PT.

Now, what’s often confusing is comparing CPUs and GPUs of different prices, generations, and performance tiers. People often make the mistake of claiming how much faster the GPU is when they are comparing a shiny new GPU they just bought to a rusty 5 year old CPU they have. So let’s do a little conversion from my i7 5930k to a Threadripper 1950x, for the following reasons:
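The two speedup figures are just ratios of the measured render times. A quick sketch, with a helper name invented for illustration:

```python
def speedup(baseline_minutes, new_minutes):
    """How many times faster the new render is than the baseline."""
    return baseline_minutes / new_minutes

# Corona: 1h 15m pure PT vs 3 min with UHD cache for secondary GI
corona_uhd_cache = speedup(75, 3)

# Cycles: 1h 5m on CPU only vs 16 min on CPU + GTX1080Ti
cycles_cpu_plus_gpu = speedup(65, 16)

print(f"Corona with cached secondary GI: {corona_uhd_cache:.1f}x")
print(f"Cycles with CPU + GPU: {cycles_cpu_plus_gpu:.1f}x")
```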

1, 1950x and GTX1080Ti release dates are very close

2, 1950x and GTX1080Ti are equivalent generations of the product

3, 1950x and GTX1080Ti retailed at roughly the same price

4, 1950x and GTX1080Ti were representative of the state of the art CPU and state of the art GPU at their release

5, They achieve nearly the same performance in Cycles:

6, 1950x has almost precisely 3x the multithreaded performance of my i7 5930k (3180 vs 1071) in Cinebench, which makes the conversion super easy.

SO:

To get the approximate rendertimes on a Threadripper 1950x, all I have to do is divide my i7 rendertime by 3. This means that on the 1950x, pure PT rendertimes in both Corona and Cycles would be somewhere around 23.5 minutes for the 1280x720 preview, and around 3h 40m for the final resolution image. 3h 40m is still somewhat slow, but it starts to get close to acceptable. Again, keep in mind you would have to get almost the latest, very expensive CPU.

For cached secondary GI, the 1950x rendertime would be 1 minute for the 1280x720 preview and 9.44 minutes for the final 3.5k resolution. Yes, that’s correct! With a good CPU and good secondary cached GI, you can get a final quality 3508x2480 render of this average interior scene in less than 10 minutes!
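Here is a rough sketch of that conversion, assuming render time is inversely proportional to the Cinebench multithreaded score (a simplification, since real renderers do not scale exactly like Cinebench). The helper name is hypothetical. It uses the exact score ratio (about 2.97) rather than the rounded 3, so the results come out marginally higher than the rounded figures above.

```python
CINEBENCH_5930K = 1071   # i7 5930k multithreaded score quoted above
CINEBENCH_1950X = 3180   # Threadripper 1950x multithreaded score quoted above

def convert_cpu_minutes(minutes, score_from, score_to):
    """Estimate a render time on another CPU.

    Assumption: render time is inversely proportional to the
    multithreaded benchmark score.
    """
    return minutes * score_from / score_to

ratio = CINEBENCH_1950X / CINEBENCH_5930K

# Pure PT preview: the measured 65 and 75 minutes on the 5930k
pt_preview = [convert_cpu_minutes(m, CINEBENCH_5930K, CINEBENCH_1950X)
              for m in (65, 75)]

# Cached secondary GI preview: the measured 3 minutes on the 5930k
cached_preview = convert_cpu_minutes(3, CINEBENCH_5930K, CINEBENCH_1950X)

# Scale the cached preview up to 3508x2480 by pixel count
final_scale = (3508 * 2480) / (1280 * 720)
cached_final = cached_preview * final_scale

print(f"score ratio: {ratio:.2f}x")
print(f"pure PT preview on 1950x: {pt_preview[0]:.1f} to {pt_preview[1]:.1f} min")
print(f"cached GI preview on 1950x: {cached_preview:.2f} min")
print(f"cached GI final on 1950x: {cached_final:.1f} min")
```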

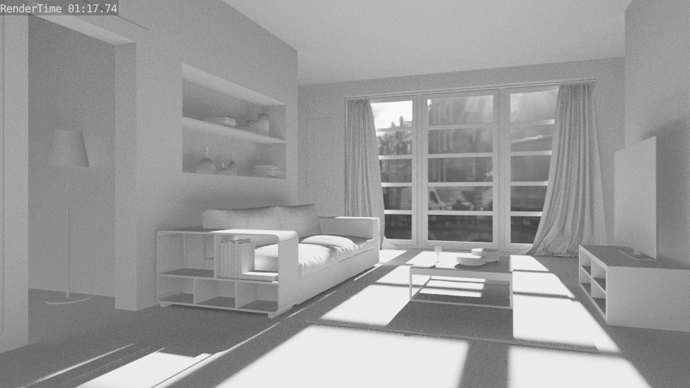

Now, let’s add one more render to the mix. Cycles with the GTX1080Ti only, without the i7 5930k:

It took 18m 6s for pure PT, while I estimated 23.5 minutes for the Threadripper 1950x. This is an interior scenario, and if you look above at the GTX1080Ti vs 1950x graph, you can see that the scene where the GPU won over the CPU by the largest margin was the interior scene, classroom, so this estimate again sounds about right. It also shows that the performance of CPU and GPU is not simply additive.

Now, with all these estimates established, let’s get to the conclusion. Let’s say you happened to get a new PC and spent roughly the same money on a top end CPU and a top end GPU of the latest generation. You would get a Threadripper 1950x and a GTX1080Ti, a great baseline for CPU vs GPU comparison for all the reasons listed above.

IF Cycles had secondary cached GI comparable to Corona’s, you could render this scene at preview resolution in 1 minute, at a noise level low enough to show it to a client.

Without cached secondary GI, but with a GPU of the same power as your CPU, you could get the same quality in 18 minutes. By adding your CPU to the work as well, you could perhaps cut it down to 12.

Bottom line is that to match the performance of cached secondary GI, you would have to add 12 more GTX1080Tis to your computer. And even then, you would still be limited by the GTX1080Ti’s 11GB of VRAM vs the average 64GB of CPU RAM.

Tangent Animation has stated they could not use GPUs to render their movies, as the scenes just would not fit in VRAM. They also said that rendering performance was very important to them to get the job done, hence they have their own Cycles developer.
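As a rough sanity check on the "dozen GPUs" order of magnitude, here is a sketch that assumes perfect linear scaling across cards. That is an optimistic assumption, given that CPU and GPU performance already turned out not to be simply additive. The helper name is invented for illustration, and the exact count depends on whether you take the GPU-only time or the estimated CPU + GPU time as the unit.

```python
import math

def units_needed(single_unit_minutes, target_minutes):
    """Identical render units required to hit the target time.

    Assumption: perfect linear scaling, i.e. N units render N times faster.
    """
    return math.ceil(single_unit_minutes / target_minutes)

TARGET = 1  # minutes: the cached secondary GI estimate on a 1950x

# One GTX1080Ti alone takes about 18 minutes for the preview
gpu_only_cards = units_needed(18, TARGET)

# The 1950x + GTX1080Ti machine, estimated at about 12 minutes, as one unit
cpu_gpu_machines = units_needed(12, TARGET)

print("GTX1080Ti cards needed (GPU only):", gpu_only_cards)
print("CPU + GPU machines needed:", cpu_gpu_machines)
```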

My conclusion is that the best thing which can be done to Cycles, in terms of getting more performance, is definitely caching for secondary GI.

Now, I know that GI caching on GPU is extremely difficult to do, but I would be more than happy to have it on CPU only. It’s not like GPU/CPU feature parity is a rule carved in stone, since, for example, we already have OSL support specific to CPU only. I would be very thankful for CPU only secondary caching.

I do not expect this to happen anytime soon, but I just wanted to show a practical example of how much performance can be gained from this. It is a software level optimization which can quite literally add performance comparable to adding a dozen top end GPUs to your system, and in turn save people and studios a LOT of money.