I’m trying to figure out how to do “reverse perspective” warping in Blender for IRL projection mapping.

I don’t really have the right vocabulary, so apologies up front for the awkward descriptions. I suspect this is a solved problem, if only I knew what to call it ;-).

In non-technical terms, I want to make artwork that is only fully visible from one vantage point. For example: take a series of rectangular sheets and place them at various angles to an observer at the front of the room. Point a projector at them from an angle perpendicular to most of the boards and project an image. From the front the image looks broken up, but it joins up when you look from where the projector is. It will, however, be stretched vertically on the wide side (a perspective effect?). If you pre-stretch the projected image the “opposite” way, it will appear square (top and bottom edges parallel) on the physical sheets. Then take photographs, scale them, print them, and stick them to the sheets, and you don’t need the projector anymore :-).
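For a single flat sheet, the “pre-stretch” described above is a planar homography (a projective transform), and four corner correspondences are enough to pin it down. Below is a minimal pure-Python sketch, not Blender API: `solve_linear`, `homography` and `warp_point` are hypothetical helper names, and the corner coordinates would have to come from measuring where a board’s corners land in the projector image. It only covers one plane at a time, so a multi-facet prism would need one transform per facet.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def homography(src, dst):
    """3x3 matrix H (with h33 fixed to 1) mapping four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), rearranged to be linear in h
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def warp_point(hm, x, y):
    """Map one point through H, including the perspective divide."""
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)
```

To pre-warp, one would compute H from projector-image coordinates to artwork coordinates, then for each projector pixel sample the artwork at `warp_point(H, px, py)`. Sampling in that direction (output pixel to source) avoids holes in the warped image.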

I think this is typically called projection mapping, and I’ve used VPT8 and ofxPiMapper for some simple, single-projector examples.

I recently discovered the really cool Export Paper Model add-on, and found out that it not only handles the geometry but can also render textures onto the output.

So I’d like to set up several vertical prisms in Blender, each with a number of facets, and project several images onto them from different viewpoints. I’ve figured out how to use the UV Project modifier, and I’m confident I can stack several of them with multiple UV maps and textures, then combine everything in a shader so that all the projections are visible and overlap/interfere correctly.
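Assuming each projected layer is a monotone image on a white background, a multiply blend is one simple way to combine them: white leaves the other layers unchanged, while overlapping ink darkens. Chaining Mix nodes set to Multiply (factor 1) in the shader should give the same per-pixel result; the hypothetical `multiply_layers` function below just shows that arithmetic in plain Python.

```python
def multiply_layers(samples):
    """Multiply-blend RGB samples with channels in 0..1.

    Each entry is one projector's sample at the same surface point, or None
    when that projector does not cover the point (treated as white).
    """
    out = [1.0, 1.0, 1.0]  # start from white paper
    for s in samples:
        if s is None:
            continue  # outside this projector's frustum: no effect
        out = [o * c for o, c in zip(out, s)]
    return tuple(out)
```

For example, a pale red layer `(1.0, 0.5, 0.5)` over a pale cyan layer `(0.5, 1.0, 1.0)` multiplies to a mid grey where they overlap, which is the kind of interference the stacked projections would show.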

But to make the result more intelligible, I want to pre-warp the projected images so that, from their projector’s vantage point, they appear horizontally and vertically square. I also want to experiment with rendering each image in a different colour monotone, with different halftoning patterns and different stroke styles. I think the changes in the shape of the elements making up each image will help each one stand out as the viewer walks around.
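Ordered (Bayer) dithering is one easy halftoning pattern to try per image; swapping the threshold matrix for a different one (for example a line or dot screen) changes the shape of the marks. This is a minimal pure-Python sketch on a 2D list of grey values in 0..1; `BAYER4` and `ordered_dither` are illustrative names, not part of Blender.

```python
# Classic 4x4 Bayer index matrix; each cell gets a distinct threshold.
BAYER4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def ordered_dither(gray):
    """Threshold grey values (0..1) against the tiled Bayer matrix.

    Returns a 2D list of 1 (white/paper) and 0 (ink).
    """
    out = []
    for y, row in enumerate(gray):
        out.append([
            # (k + 0.5) / 16 spreads the 16 thresholds evenly over 0..1
            1 if v > (BAYER4[y % 4][x % 4] + 0.5) / 16 else 0
            for x, v in enumerate(row)
        ])
    return out
```

A flat 50% grey comes out as 8 of every 16 pixels set, in the regular cross-hatched pattern characteristic of Bayer dithering.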

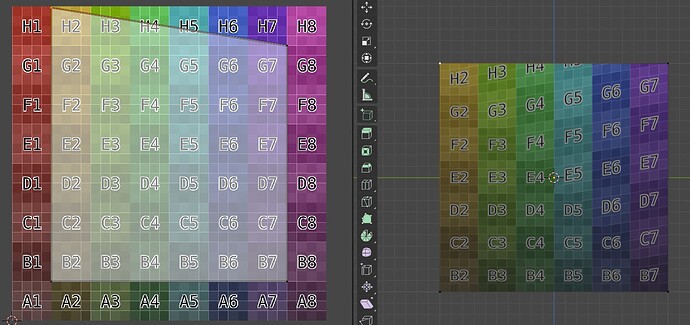

I thought I’d come up with an approach: copy all the verts visible from the projector/camera to a new object, add a vert behind the camera, add edges from each copied vert to this new vert, and then intersect these edges with a plane in front of the camera. The shape projected onto that plane, used as a UV map, plus several copy/bake steps, produces an image that works great on a plane in front of the camera (it is scaled down, but square to the horizontal and vertical from the camera’s perspective)… but it comes out “wrinkly” when used with the UV Project modifier on the real shape. I’ve tried subdividing, but it didn’t help. (Note: I’ve just realized I subdivided at the end of the process; subdividing earlier might have changed things.)

It’s almost usable, but not quite.
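The vert-behind-the-camera construction is a central (pinhole) projection, and the same per-vertex result can be computed directly with similar triangles, which may be easier to experiment with than building and intersecting edges. A minimal pure-Python sketch; the function names and the `d`, `half_w`, `half_h` parameters are hypothetical. Inside Blender, `bpy_extras.object_utils.world_to_camera_view(scene, obj, coord)` returns comparable normalized frame coordinates for a camera object.

```python
def sub(a, b):
    """Component-wise a - b for 3D tuples."""
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    """Dot product of two 3D tuples."""
    return sum(x * y for x, y in zip(a, b))


def project_to_plane(vert, eye, forward, right, up, d):
    """Intersect the line eye->vert with the plane d units in front of the eye.

    forward/right/up must be orthonormal camera axes. Returns (x, y) on the
    plane in camera-aligned units, or None if vert is not in front of the eye.
    """
    v = sub(vert, eye)
    depth = dot(v, forward)
    if depth <= 0:
        return None
    t = d / depth  # similar triangles: shrink eye->vert until it reaches the plane
    return (t * dot(v, right), t * dot(v, up))


def to_uv(xy, half_w, half_h):
    """Map plane coords in [-half_w, half_w] x [-half_h, half_h] to UV in [0, 1]."""
    x, y = xy
    return ((x / half_w + 1) / 2, (y / half_h + 1) / 2)
```

With the eye at the origin looking along +Y and the plane 1 unit away, a vertex at `(2, 4, 1)` lands at `(0.5, 0.25)` on the plane: it is 4 units deep, so its offsets are scaled by 1/4.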

I’ll share my step-by-step process in a comment to this (as this is already pretty long!) if that’s helpful.

Thanks in advance for any suggestions!