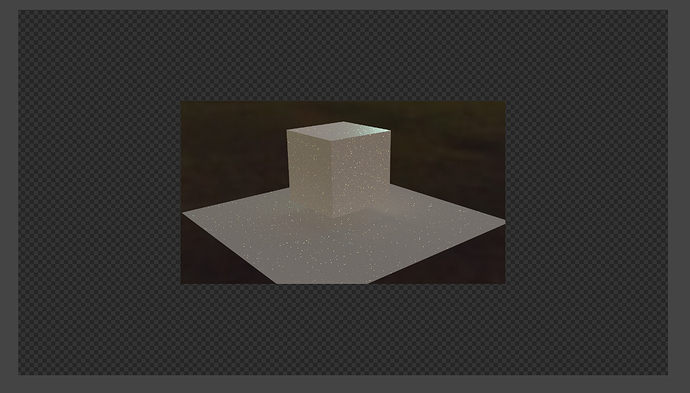

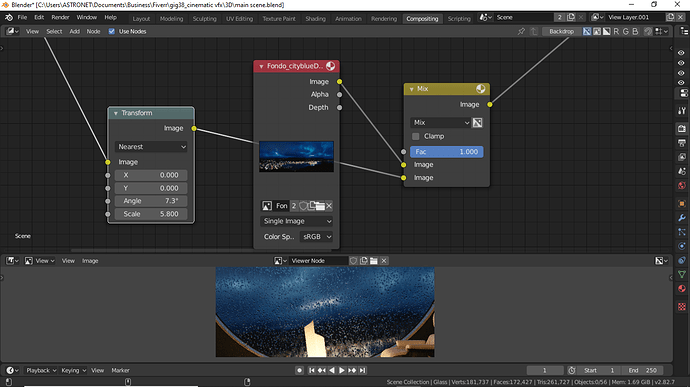

Recently I’ve found that, for my project, it is better to render at twice the resolution of the final image and then downscale to the target resolution. However, I’ve yet to automate this within Blender’s compositor, and have instead had to resort to other programs (GIMP, Affinity, Photoshop, etc.) after the fact. Setting the render resolution to 200% seems to lock the canvas at twice the height and width parameters, and using the ‘Transform’ and ‘Scale’ nodes seems to leave a large margin of empty alpha around the render…

Would it be possible to render an image at, say, 3840x2160, and then use Blender’s compositor to automatically downscale that image to 1920x1080? Maybe the solution isn’t in the compositor per se, but somewhere else in the menus (or in an extension, for all I know). Either way, the goal is to scale the render to half resolution without resorting to outside software.
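To make the goal concrete, here is a minimal sketch in plain Python of the operation I’m after. It does not use Blender’s API at all; the function names `render_size` and `downscale_half` are hypothetical, the image is assumed to be a grayscale list of rows, and a simple 2x2 box average stands in for whatever filter a real tool would apply.

```python
def render_size(width, height, percentage):
    """Pixel size for a base resolution and a percentage scale.

    Assumes plain integer scaling, which is exact for 200%.
    """
    return (width * percentage // 100, height * percentage // 100)


def downscale_half(pixels):
    """Halve a grayscale image (a list of rows) by averaging 2x2 blocks.

    A trailing odd row or column is dropped in this sketch.
    """
    out = []
    for y in range(0, len(pixels) - 1, 2):
        row = []
        for x in range(0, len(pixels[y]) - 1, 2):
            block = (pixels[y][x] + pixels[y][x + 1]
                     + pixels[y + 1][x] + pixels[y + 1][x + 1])
            row.append(block / 4)
        out.append(row)
    return out


# Rendering a 1920x1080 setup at 200% gives the oversized canvas.
big = render_size(1920, 1080, 200)

# Each output pixel is the mean of one 2x2 block of the larger image.
small = downscale_half([[0, 2, 4, 6],
                        [2, 4, 6, 8]])
```

In other words, the final file should have exactly the target dimensions, with every pixel derived from four rendered pixels and no empty border around it.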

This is my first CG question thread ever, and I may be explaining my predicament poorly. Regardless, patience and/or recommendations are greatly appreciated, and if there’s anything I should elaborate on, do let me know!