I couldn’t find any tutorial on the subject, so I decided to make my own. Any suggestions are welcome.

Sorry for the lengthy title, but honestly there is no better way to describe it.

And sorry for the failed previous attempt to start this topic.

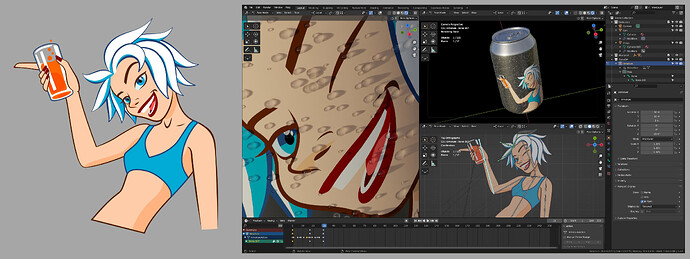

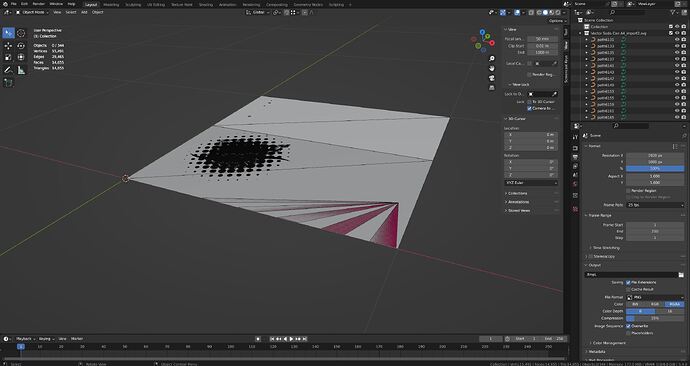

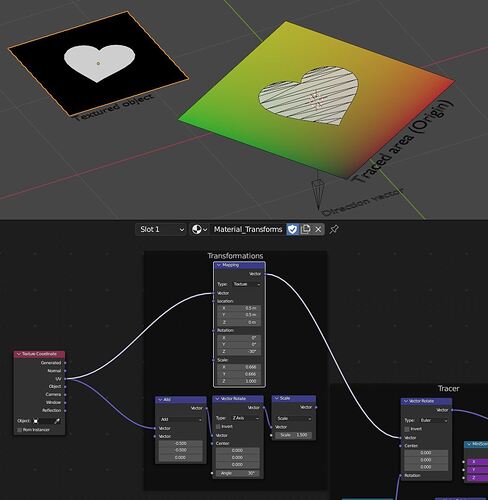

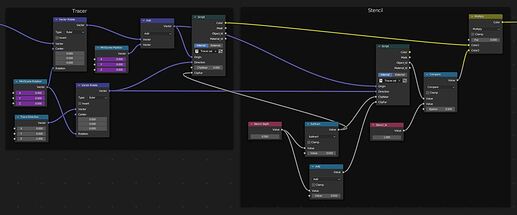

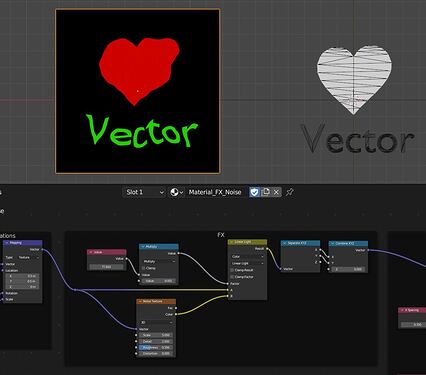

Left: Inkscape illustration. Right: the SVG file imported, animated and applied as a texture in Blender.

The subject is rather versatile and the possible uses and options are many, so I will stick to the basics here and expand in future posts.

Also, I think this method is software agnostic (even if Blender is my favorite choice), so if you have the opportunity to test it in other environments, just let me know.

00 PURPOSE

This method’s main usage is the creation, modification and animation of textures from within Blender.

If we want to use line art as textures on our 3D models, we won’t have to convert our designs to raster images. We just import and use them.

This method also puts an end to the endless back-and-forth of exporting or rendering over and over again whenever our designs are revised or the animated texture changes.

Another advantage of this method is that the textures are computed on the fly and are resolution independent, much like what other applications call continuous rasterization.

I hope it is useful for your projects. Let’s get into it.

01 THE BASICS

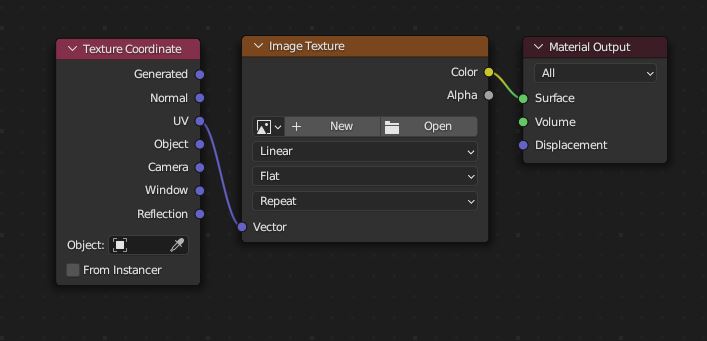

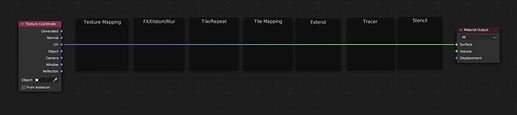

The standard way the render engine determines which texture is on an object's surface is by linking (mapping) the pixel coordinates of an Image Texture to the Texture Coordinates of the surface. Then, for every Texture Coordinate, a color is looked up in the image file.

The most basic texture setup.

The Traced Texture method replaces the bitmap image coordinates with 3D world coordinates, mapping those to the Texture Coordinates instead.

Since both spaces are defined with floating-point numbers, the first consequence is that the generated texture stays sharp regardless of zoom level or resolution.

Also, since the texture is generated from within Blender, we can create, modify and animate our designs on-site, and the Traced Texture is updated continuously without the need for pre-rendering.

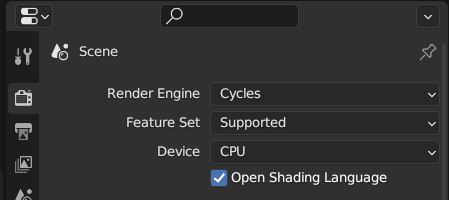

We can find out what occupies a 3D location with the trace function of OSL (Open Shading Language). So yes, we have to script, and no, we don’t need expensive hardware.

The function trace(Origin, Direction) (no optional arguments for these basics) sends a ray into the scene from the Origin point in the direction of the Direction vector.

If the ray hits a mesh, the function returns 1.

If the ray misses, the function returns 0.

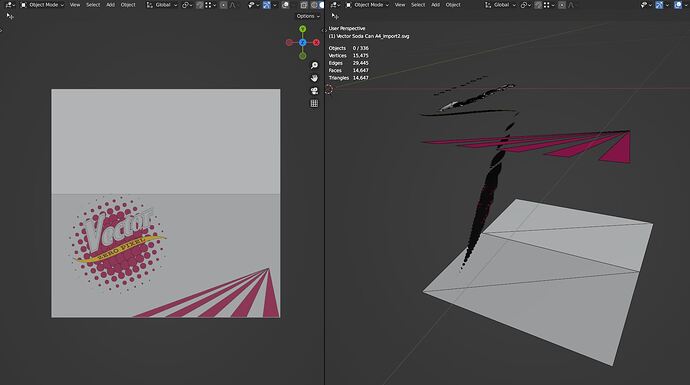

If we use this function in place of the Image Texture node above, feeding Origin with the Texture Coordinates (as if they were the XY plane the rays depart from) and setting Direction to a vector pointing downwards, (0,0,-1), we can trace the contents of the volume below the unit square (0 to 1 in X and Y) of the XY plane.
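Written out for this downward setup, and ignoring trace's optional arguments, the mask value at a texture coordinate (u, v) is simply a top-down orthographic projection of whatever geometry lies below that point:

$$
\text{Mask}(u, v) =
\begin{cases}
1 & \text{if } \exists\, t > 0 : (u,\, v,\, -t) \text{ lies on a mesh surface} \\
0 & \text{otherwise}
\end{cases}
\qquad \text{since } (u, v, 0) + t\,(0, 0, -1) = (u, v, -t).
$$

Because u and v are continuous floats rather than pixel indices, there is no texel grid to pixelate, which is where the resolution independence comes from.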

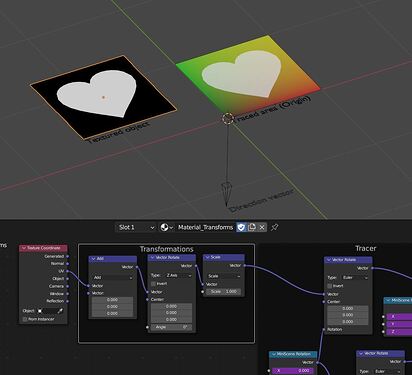

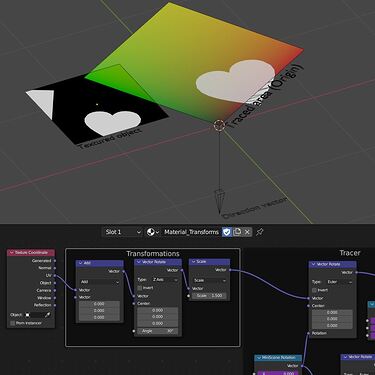

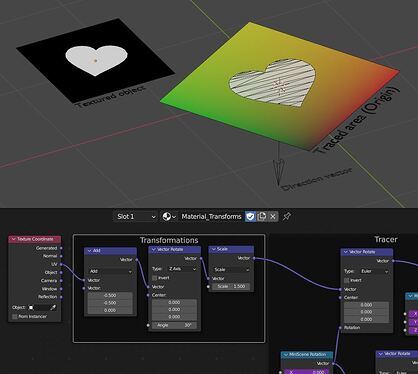

The basic procedure is as follows:

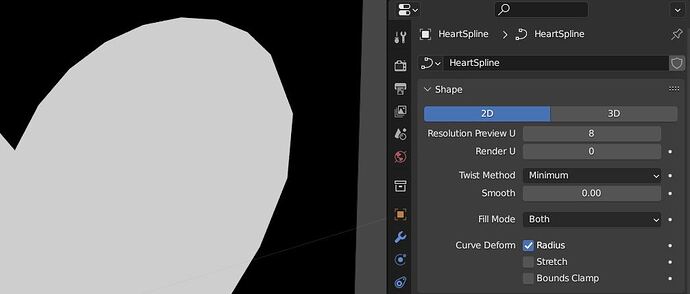

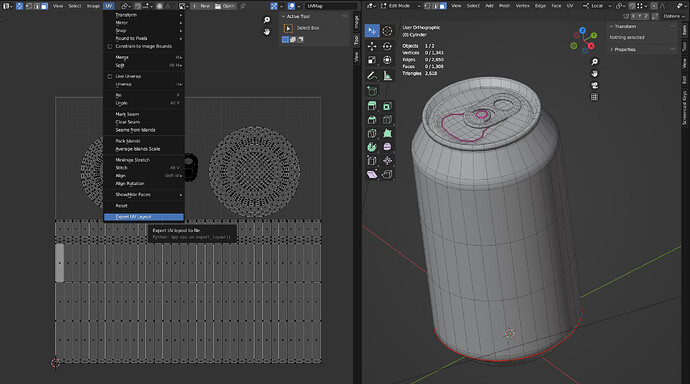

Place the objects that make up the texture within the unit XY area. Keep them below Z=0 to avoid possible clipping errors. Any mesh object will be traced, so make sure your splines are filled or they won’t be seen. The mesh can also come from a particle system, a metaball, a fluid…

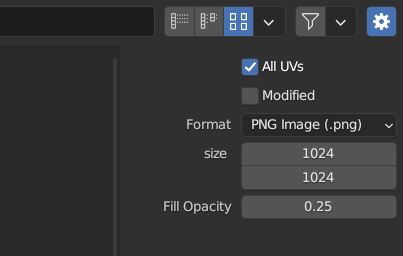

Off to the side goes our textured object. It needs to be properly UV unwrapped, or use Object coordinates, to avoid distortion.

To use OSL we have to set the render settings accordingly: Cycles, Device set to CPU, and Open Shading Language checked. Reduce your carbon footprint until OSL for GPU arrives.

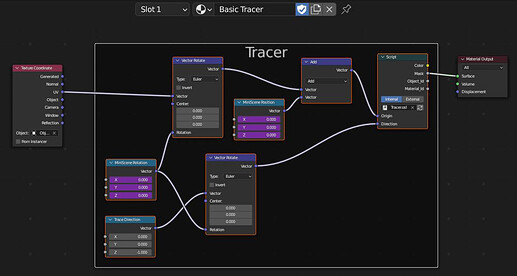

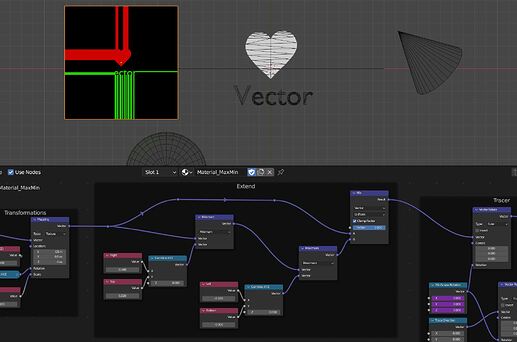

Open the Text Editor, create a new text, name it e.g. “Tracer.osl”, and write:

```
shader Tracer(
    vector Origin = 0,
    vector Direction = 0,
    output float Mask = 0
)
{
    // 1 if the ray from Origin along Direction hits a mesh, 0 if it misses
    Mask = trace(Origin, Direction);
}
```

The shader is pretty self-explanatory.

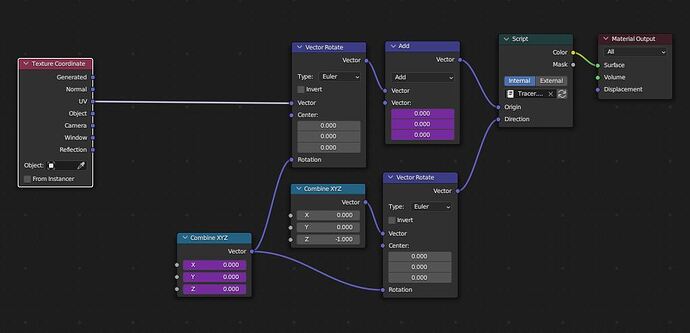

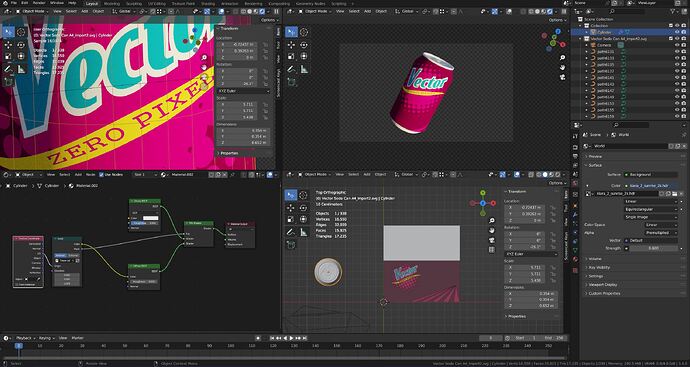

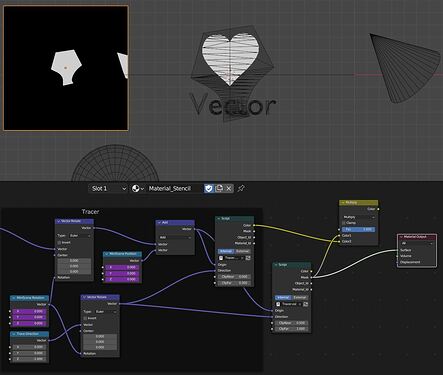

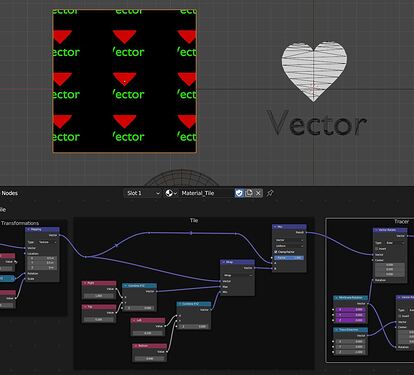

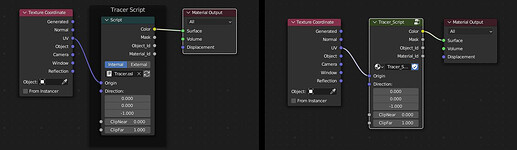

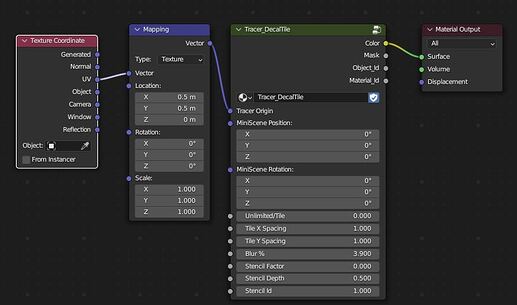

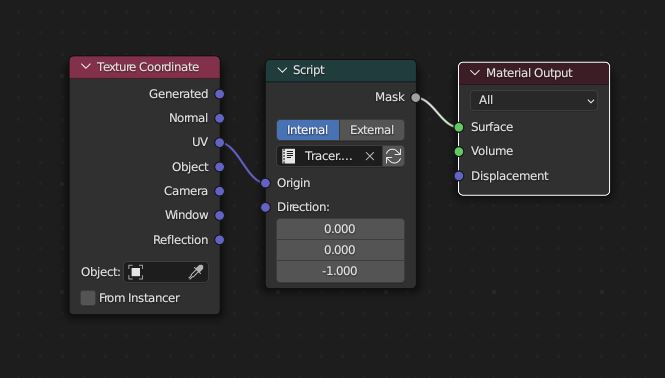

In the Shader Editor, add a Script node, set it to Internal and load the shader you just wrote, plug the UV output of a Texture Coordinate node into Origin, and set Direction to (0,0,-1).

We have replaced the Image Texture with a Traced Texture. Switch the viewport to Rendered shading and watch the results.

If we zoom in, we see that the texture maintains its sharpness. The polygonal contour of curved edges may be noticeable, but increasing the Resolution of the curve fixes the issue.
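If typing (0,0,-1) into the Direction socket every time gets tedious, the downward direction can be made the parameter's default instead. A minimal sketch, assuming you always trace straight down; the shader name TracerDown is hypothetical, and Origin is declared as a point here because OSL's trace takes a point as its first argument (the vector version above works as well):

```osl
// Hypothetical variant of Tracer with the downward ray built in.
// Origin: plug the UV (or Object) output of a Texture Coordinate node here.
shader TracerDown(
    point Origin = point(0, 0, 0),
    vector Direction = vector(0, 0, -1),  // straight down, toward Z < 0
    output float Mask = 0
)
{
    // trace() returns 1 if the ray hits any mesh, 0 if it misses
    Mask = trace(Origin, Direction);
}
```

Load it in a Script node exactly like the first one; only the UV plug is needed.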

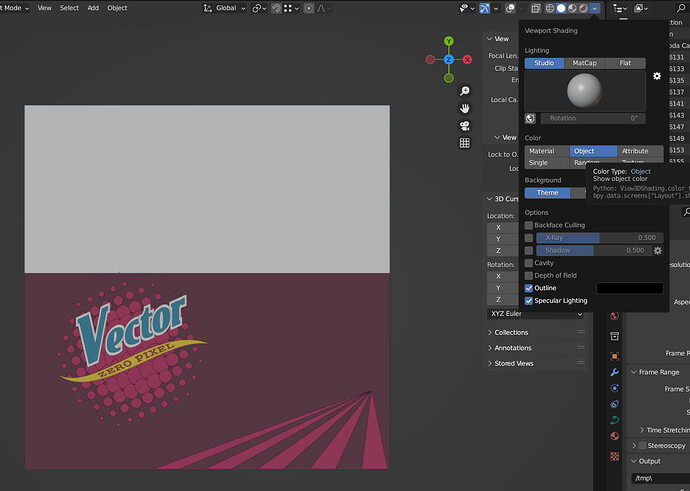

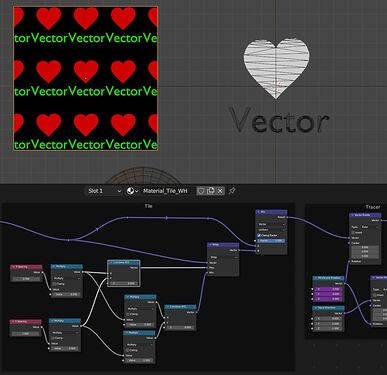

There is more: the trace function not only reports whether there was a hit, it also collects data from the hit mesh, which we can retrieve with the getmessage function:

`getmessage("trace", "object:color", Color)`

reads the object:color data of the object hit by the last trace call and assigns the value to the Color variable. This is not the color of the object’s material; it is the Viewport Display > Color in the Object Properties panel.

Our extended shader:

```
shader Tracer(
    vector Origin = 0,
    vector Direction = 0,
    output color Color = 0,
    output float Mask = 0
)
{
    Mask = trace(Origin, Direction);
    // Viewport Display > Color of the object hit by the trace call above
    getmessage("trace", "object:color", Color);
}
```
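getmessage itself returns 1 when it found the requested data and 0 when it did not, so the color read can also be guarded explicitly. A sketch under that assumption, with a hypothetical Background input used wherever the ray missed (mixing with Mask in the node tree achieves the same result); the shader name TracerColor is also hypothetical:

```osl
// Hypothetical variant: only take the hit object's color when there was a hit.
shader TracerColor(
    point Origin = point(0, 0, 0),
    vector Direction = vector(0, 0, -1),
    color Background = color(0, 0, 0),  // hypothetical: color for missed rays
    output color Color = 0,
    output float Mask = 0
)
{
    Color = Background;
    Mask = trace(Origin, Direction);
    if (Mask > 0) {
        color hit_color = 0;
        // getmessage returns nonzero only if the attribute was retrieved
        if (getmessage("trace", "object:color", hit_color))
            Color = hit_color;
    }
}
```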

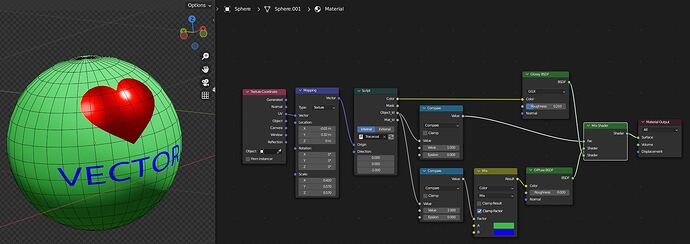

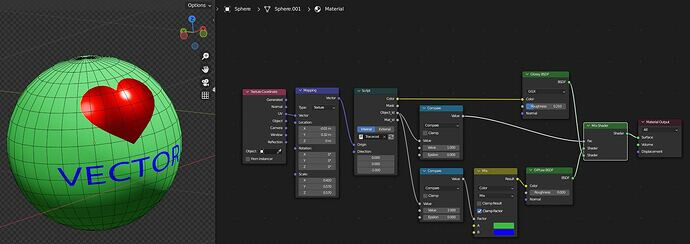

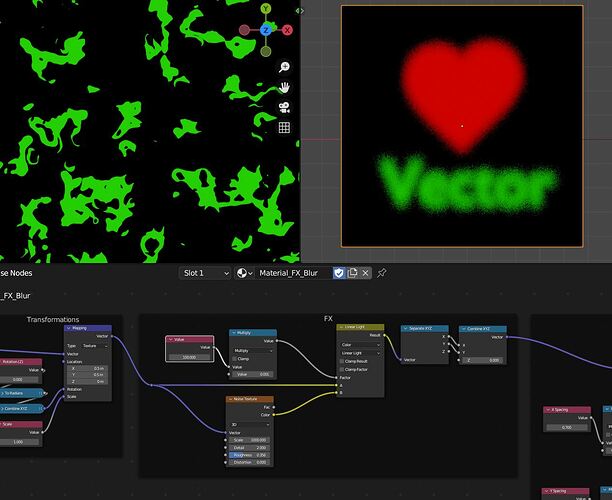

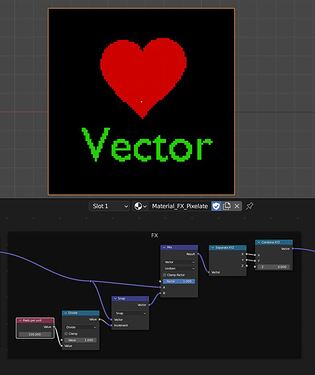

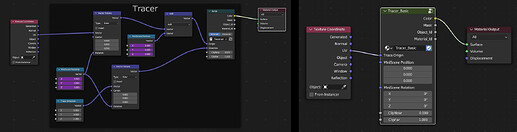

The shader in use:

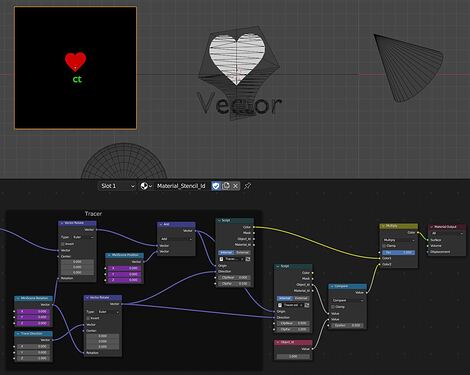

Want more? Play with:

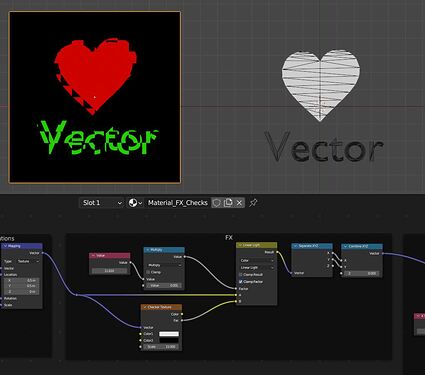

`getmessage("trace", "object:index", Object_Id)`

Writes the per-object value of Object Properties > Relations > Pass Index into the Object_Id (float) variable.

`getmessage("trace", "material:index", Mat_Id)`

Writes the per-material value of Material Properties > Settings > Pass Index into the Mat_Id (float) variable.
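Both queries can live in one shader next to the mask. A minimal sketch, reading the two pass indices the same way the color was read; the shader name TracerIndex is hypothetical:

```osl
// Hypothetical shader exposing the pass indices of whatever the ray hit.
shader TracerIndex(
    point Origin = point(0, 0, 0),
    vector Direction = vector(0, 0, -1),
    output float Mask = 0,
    output float Object_Id = 0,  // Object Properties > Relations > Pass Index
    output float Mat_Id = 0      // Material Properties > Settings > Pass Index
)
{
    Mask = trace(Origin, Direction);
    getmessage("trace", "object:index", Object_Id);
    getmessage("trace", "material:index", Mat_Id);
}
```

On a miss both indices keep their default of 0, so a Pass Index of 0 cannot be told apart from empty space; using indices of 1 and up avoids that ambiguity.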

With Converter > Math > Compare we can separate the results into different masks.

A crazy, useless setup with Object Index: the heart’s Pass Index is 1 and the text’s is 2.

The Compare nodes split the Object_Id output, allowing the heart to have a glossy surface while keeping its Object Color, and the text to act as the factor mask in the blue-green color mix.
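The Compare node outputs 1 when |A - B| is less than or equal to Epsilon and 0 otherwise, so the same split can also be done inside OSL. A sketch mirroring this setup; the shader and output names are hypothetical, and the indices 1 and 2 are the ones used for the heart and the text here:

```osl
// Hypothetical shader splitting Object_Id into per-object masks,
// mirroring Math > Compare (1 when |A - B| <= Epsilon, else 0).
shader IdMasks(
    float Object_Id = 0,   // plug the Object_Id output of the tracer here
    float Epsilon = 0.5,   // tolerance; 0.5 separates consecutive integers
    output float Heart_Mask = 0,
    output float Text_Mask = 0
)
{
    Heart_Mask = (abs(Object_Id - 1.0) <= Epsilon) ? 1.0 : 0.0;
    Text_Mask  = (abs(Object_Id - 2.0) <= Epsilon) ? 1.0 : 0.0;
}
```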

The basics end here.

Next chapter: Scene management and troubleshooting.