From 2017:

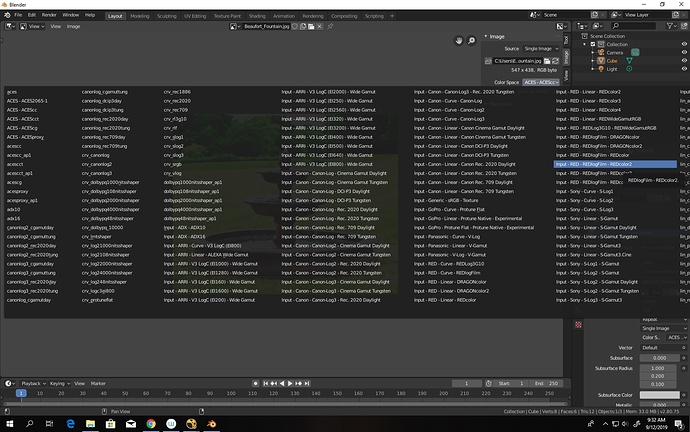

If you rebuild your ACES configuration via Python, you can help this out a little bit by putting each actual display type into its own separate display entry. The default ACES configuration chose the meatheaded way that existing software did things (wrong / poorly) as opposed to how OCIO was designed. Also, software needs to support the OCIO idea of “Families” to make it more manageable.
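As a rough sketch of what that regrouping could look like, here is plain Python (no OCIO bindings) that takes a flat list of views and emits one display per device. The view names, colourspace names, the single `"ACES"` view label, and both helper functions are illustrative assumptions, not what the ACES generation scripts actually do.

```python
# Hypothetical flat layout: every output hangs off a single display as a view.
# Names are illustrative; check them against your own config.
flat_views = [
    ("sRGB", "Output - sRGB"),
    ("Rec.709", "Output - Rec.709"),
    ("P3-D60", "Output - P3-D60"),
]


def split_into_displays(views, view_name="ACES"):
    """Give each device its own display, holding a single named view."""
    displays = {}
    for device, colorspace in views:
        displays.setdefault(device, []).append(
            {"name": view_name, "colorspace": colorspace}
        )
    return displays


def to_yaml(displays):
    """Render the displays mapping in the style of an OCIO config's displays block."""
    lines = ["displays:"]
    for display, views in displays.items():
        lines.append(f"  {display}:")
        for v in views:
            lines.append(
                f"    - !<View> {{name: {v['name']}, colorspace: {v['colorspace']}}}"
            )
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_yaml(split_into_displays(flat_views)))
```

The point is only the shape: the display list in the UI then corresponds to actual devices, and the views under each one are the choices that make sense for that device.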

That’s due to broken logic that was coded in, despite warnings from some raving idiot. If you search the tracker, you will see a never-ending list of entries where I suggest that the UI needs to be managed, and based on the audience selected transforms. There’s a long bit of reasoning there, but I won’t bore anyone here with it unless it is of interest.

See above. Le sigh.

Tied to above. ACES has a poor selection of roles, and it was discussed recently. The main issue is that the UI needs to be managed.

OCIO has some API functionality to try and match colour transforms to filenames. I wrote a patch as an example years ago, but no one cares. There are plenty of folks that truly believe that Blender’s colour handling doesn’t have crippling problems. So here we are.
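To give a feel for the filename matching idea, here is a minimal sketch in plain Python rather than the OCIO API itself. The function name, the matching rule (the name that ends furthest right in the string wins, with the longer name winning a tie), and the colourspace names are all assumptions for illustration.

```python
def guess_colorspace(filename, colorspace_names):
    """Return the colourspace name that ends furthest right in filename.

    If two names end at the same position, the longer one wins.
    Returns None when no name appears in the filename at all.
    """
    lowered = filename.lower()
    best = None  # (end_index, length, name)
    for name in colorspace_names:
        pos = lowered.rfind(name.lower())
        if pos < 0:
            continue
        candidate = (pos + len(name), len(name), name)
        if best is None or candidate[:2] > best[:2]:
            best = candidate
    return best[2] if best else None


if __name__ == "__main__":
    names = ["lin_srgb", "srgb", "acescg"]  # hypothetical colourspace names
    for path in ["shot010_acescg_v002.exr", "plate_lin_srgb.exr", "notes.txt"]:
        print(path, "->", guess_colorspace(path, names))
```

A loader could use a guess like this as the default input transform and still let the artist override it.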

The issue is that it is up to you folks, the culture, to understand what you are doing enough to make sure the software and developers pay attention and listen. We aren’t quite there yet, but we sure are a million times closer in recent years thanks to you folks doing the heavy lifting of learning and helping others.

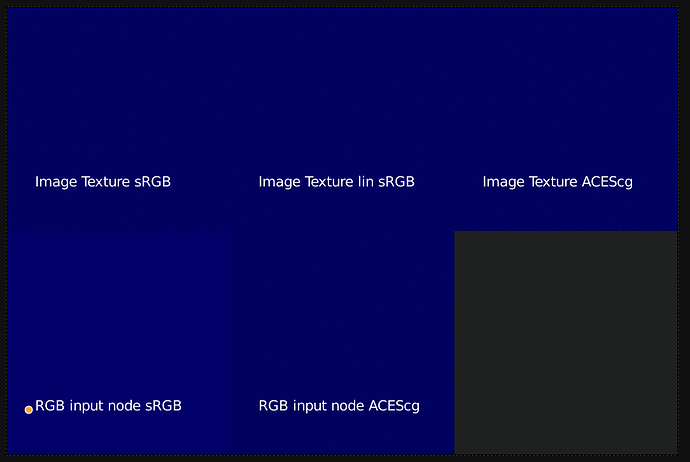

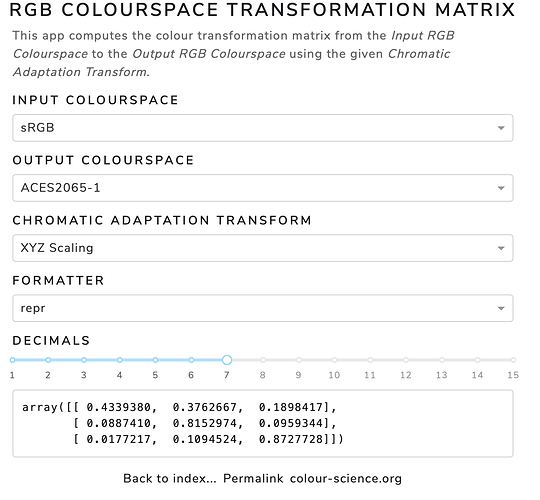

The TL;DR is that Blender is still hard-wired with completely incompatible pixel math from hack software like Photoshop. That is, the Mix node uses strictly hack display-referred math. For scene-referred emissions, the formulas are complete garbage and have no place in Blender by default. They simply do not work. You can look at the blend math in the Adobe PDF specification on or around page 320 if memory serves. Also, the colour transforms that are hard-coded include:

- Sky texture

- Wavelength

- Blackbody

Those need to be fixed, and @lukasstockner97 did some work towards that. Not sure if they have been fixed yet. I’d doubt it, and even if they were, they need testing.
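To make the Mix node complaint concrete, here is a small sketch using the Screen formula as it is commonly given for display-referred blending. The input values are made up for illustration, and this is not a claim about Blender's exact implementation.

```python
def screen(a, b):
    """Display-referred Screen blend. Only meaningful when a and b lie in [0, 1]."""
    return 1.0 - (1.0 - a) * (1.0 - b)


if __name__ == "__main__":
    # Display-referred inputs: the result stays inside [0, 1] as intended.
    print(screen(0.5, 0.5))  # 0.75

    # Scene-referred emissions above 1.0: both (1 - x) terms go negative,
    # their product is positive, and the "brightening" blend returns a
    # negative value.
    print(screen(4.0, 2.0))  # -2.0
```

The formula quietly assumes that 1.0 is the maximum possible value. Scene-referred emissions have no such ceiling, so the math falls apart.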

So by default, plenty of nodes are fundamentally broken. But it is even more nuanced than this, in that the context of what everyone is trying to do dictates the appropriate math.

Some examples:

- If you are operating on a rendered scene, with scene emissions, the values range from some extremely low value to some potentially infinitely high value.

- If you are operating on albedos, the colour ratios represent a percentage of reflection from 0.0% to 100.0%, plus physically implausible values that absorb or return more energy than input.

- If you are working on data, the data isn’t colour information at all. An alpha channel is a good example here, where it represents a percentage of occlusion. But how should it appear visually, despite being data? 50% alpha will not have a 50% perceptual appearance. The same goes for visualizations of other forms of data, where the visualization isn’t baked into the data itself!
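As a rough illustration of the 50% point above, assume a data value of 0.5 is simply displayed as a relative luminance of 0.5, and use CIE 1976 L* as a crude stand-in for perceived lightness. Both of those are assumptions for the sake of the example.

```python
def cie_lightness(y):
    """CIE 1976 L* (0 to 100) from relative luminance y (0 to 1)."""
    delta = 6.0 / 29.0
    if y > delta ** 3:
        f = y ** (1.0 / 3.0)
    else:
        # Linear segment near black.
        f = y / (3.0 * delta ** 2) + 4.0 / 29.0
    return 116.0 * f - 16.0


if __name__ == "__main__":
    # A relative luminance of 0.5 lands at roughly L* 76, not 50.
    print(round(cie_lightness(0.5), 2))

    # A relative luminance near 0.184 is what lands at roughly L* 50.
    print(round(cie_lightness(0.184), 2))
```

Under this model, a value that is halfway numerically is nowhere near halfway perceptually, which is why the way data gets visualized has to be a deliberate choice.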

It’s a deep rabbit hole. Fixing the software isn’t complex. It requires pressure and willpower and most importantly, an informed and educated culture to properly demand and rigorously test it.

…

…