Hello!

Thanks for giving some examples; that helps!

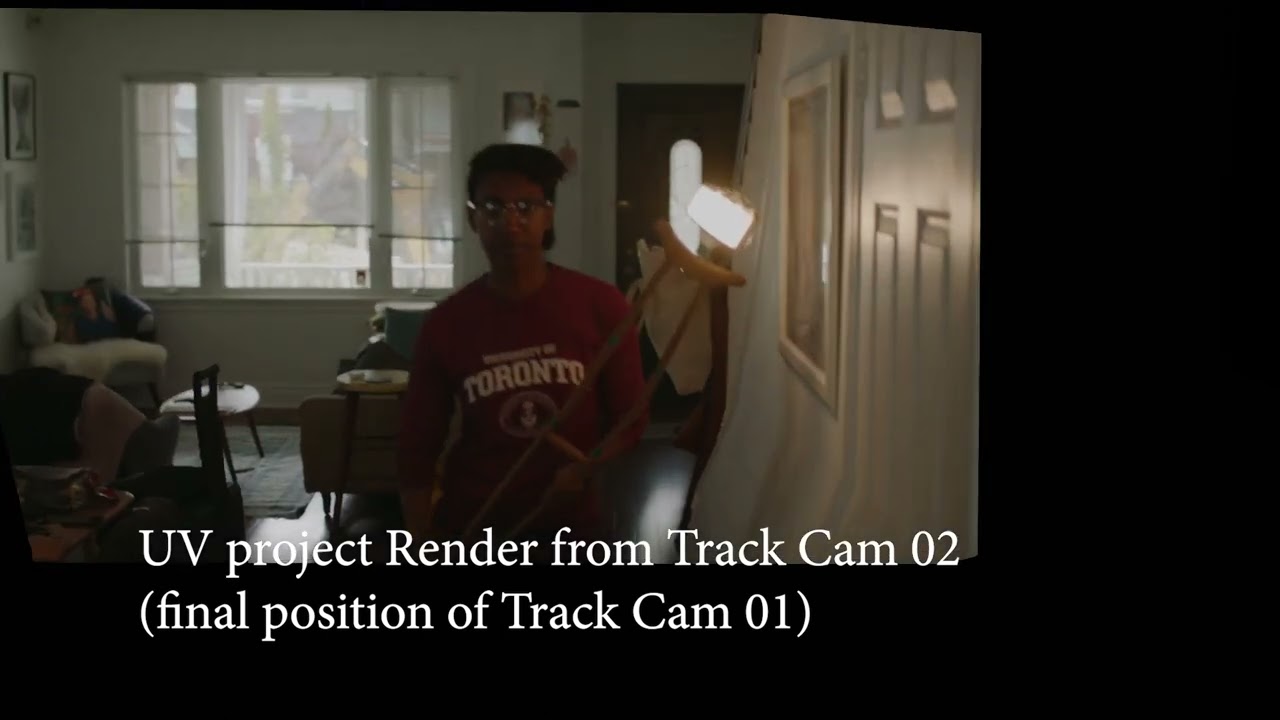

Still, I’m not 100% sure what exactly you have in mind. Is it something like this that you want to replicate?

If not, please show an example in Nuke or another tool.

If Mocha lets you remove, say, the light easily, you should definitely use it, as that’s probably going to be the simplest option. You don’t want to use more complicated techniques only to spend more time cleaning the footage, do you?

I think Mocha and NukeX have plugins that can automatically remove parts of the image: you select an element and the tool searches the surrounding frames to fill it in. That would be the first thing I’d try if I had to remove said light, and it might give you a simpler base to clean up.

In the end, the shot you are trying to clean up looks quite challenging. In the snow example you’ll see that it’s much simpler: the lighting stays consistent and they basically track a single plane. In your case there would be several planes to clean, with the ‘stairs’ part involving a lot of parallax, plus reflections, changing lights, motion blur and so on.

If I had to clean up something like that, I’d first ask for the phone number of the person responsible for the shot so I could tell them right away what I think. After that, it’s probably going to take a lot of time and energy with no guarantee of complete success.

Anyway, if the technique you’re trying to recreate is the same as in the tutorial I posted, I really encourage you to first use software suited to the job. Blender and AE are going to slow you down a lot, since you’ll be bouncing back and forth between the two, and they are limited because they don’t have the right tools for this (even though it’s always possible to work around that).

I’d look into Fusion, which you can probably use for free within Resolve. I’m not sure it can do this, but it’s probably a better option.

If you insist on using Blender, what you are missing is an animated bake: https://www.youtube.com/watch?v=uRNssN00CVw

Basically, the method goes like this:

1/ Once you have tracked the shot, create a 3D model of the set. It needs to be very precise to be effective; otherwise everything will slide and you’ll have a harder time cleaning up.

That’s why the cleanup tutorial uses a plane aligned with the ground. If you have several “walls” to clean up, you need faces perfectly aligned with each of them in your 3D track.

2/ UV unwrap the model. Again, cleaner UVs will help the cleanup process a lot.

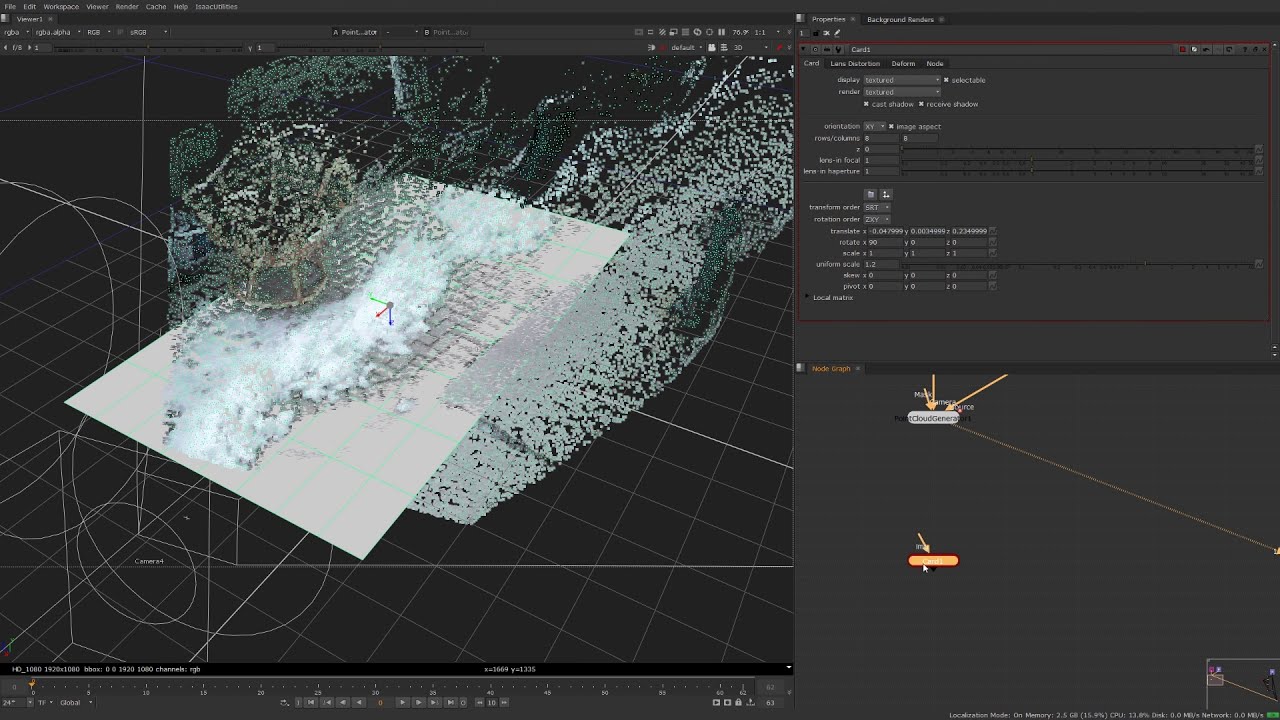

3/ Then bake the whole shot from the tracked camera. As a result you’ll get an animated texture mapped onto the UVs, which are static, so the surfaces stay in place within the texture while the footage changes.

That’s what they get here:

4/ Then clean that up in compositing software.

5/ Back in Blender, take the cleaned animated bake, map it as a texture onto your UVs and render the shot through the tracked camera, which should give you an animated clean plate.

6/ Back in AE, use the clean plate to remove the object you want.
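To make steps 3 to 6 a bit more concrete, here is a toy sketch in Python. It is my own illustration, not what the tutorial or Blender does internally, and it only covers the simplest case: one flat surface. It assumes that, for each frame, the tracked camera plus the plane boil down to a 3x3 homography `H` mapping texel coordinates to pixel coordinates (a real set with stairs would need one per face). Images are plain greyscale nested lists with nearest-neighbour sampling, and all function names (`bake_frame`, `bake_shot`, `reproject`, `composite`) are hypothetical.

```python
import math
from typing import List, Optional

# Toy greyscale images as nested lists; None marks "no data" (not covered).
Image = List[List[Optional[float]]]
Mat3 = List[List[float]]


def apply_h(H: Mat3, x: float, y: float):
    """Map (x, y) through the 3x3 homography H and dehomogenise."""
    px = H[0][0] * x + H[0][1] * y + H[0][2]
    py = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return px / w, py / w


def inv3(m: Mat3) -> Mat3:
    """Invert a 3x3 matrix with the adjugate formula."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("homography is not invertible")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def sample(img: Image, x: float, y: float) -> Optional[float]:
    """Nearest-neighbour lookup; returns None outside the image."""
    r, c = math.floor(y + 0.5), math.floor(x + 0.5)
    if 0 <= r < len(img) and 0 <= c < len(img[0]):
        return img[r][c]
    return None


def bake_frame(frame: Image, H: Mat3, tex_size: int) -> Image:
    """Step 3: for every texel, fetch the pixel it lands on in this frame."""
    return [[sample(frame, *apply_h(H, i, j)) for i in range(tex_size)]
            for j in range(tex_size)]


def bake_shot(frames: List[Image], hs: List[Mat3], tex_size: int) -> List[Image]:
    """Animated bake: one texture per frame, all in the same static UV space."""
    return [bake_frame(f, H, tex_size) for f, H in zip(frames, hs)]


def reproject(tex: Image, H: Mat3, width: int, height: int) -> Image:
    """Step 5: for every pixel, fetch the (cleaned) texel it came from."""
    H_inv = inv3(H)
    return [[sample(tex, *apply_h(H_inv, x, y)) for x in range(width)]
            for y in range(height)]


def composite(frame: Image, plate: Image, matte: Image) -> Image:
    """Step 6: blend the clean plate over the frame where the matte is set."""
    out = []
    for r, row in enumerate(frame):
        new_row = []
        for c, orig in enumerate(row):
            clean, m = plate[r][c], matte[r][c]
            if clean is None or not m:
                new_row.append(orig)  # no plate coverage or outside the matte
            else:
                new_row.append(clean * m + orig * (1 - m))
        out.append(new_row)
    return out
```

The point of going through texture space is that, if the geometry from step 1 is accurate, the thing you want to remove sits on the same texels in every baked frame, so step 4 becomes a mostly static paint job. If the model is off, the homography (or in Blender, the projection) is off too, and that is exactly the “sliding” mentioned above.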

But you’ll probably quickly see that it’s better to shoot smart and minimize this kind of cleanup at the shooting stage. The most important thing is the effect you’re going to put in the shot; that is where you want to spend most of your time!

Have fun!