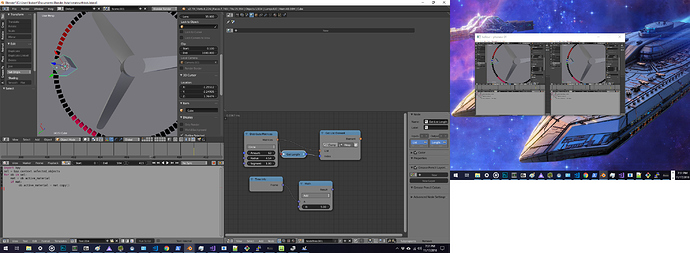

Update: I got the basics working! See the last code block.

I’m inspired by this guy to make a camera mover for VR for Blender:

I would like to move a camera via Python in real time, without playing the timeline, updating it at least every time the screen refreshes. Is there:

A) a way to make an app.handler.on_window_redraw(), or

B) a way to kick off a Python script asynchronously so the Blender UI doesn’t have to wait for the script to complete?

Here is my test code, which freezes the GUI. You have to press CTRL+C on Mac/Linux or close the GUI on Windows (or just wait 5 minutes):

    import bpy
    import math
    import time

    # Look up the camera by name
    camera = bpy.data.objects['Camera']

    # Busy loop: it blocks Blender's main thread, so the UI freezes until it ends
    i = 0
    while i < 100000:
        x = time.time()
        print("going on and on...")
        camera.location = (math.sin(10 * x), camera.location[1], camera.location[2])
        i += 1

I want a stream, similar to WebSockets, to change the location of the object.

I could do a client-server setup, but this also freezes Blender.

I need a stream of information - location and rotation - to be handled by Blender.

Do I need to go into the C++ source and modify it / submit a patch for this?

I was thinking about using Python’s Popen, but it only passes command-line parameters, and I’d be stuck with two open Blender instances trying to communicate with each other, which is another problem I don’t know whether Python can solve.
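One way a stream like this could be received without blocking the UI is to do the blocking socket read on a background thread and hand the results to the main thread through a queue. The sketch below uses only the standard library. The UDP address, the JSON packet layout (`location` and `rotation` as three numbers each) and all function names are assumptions made up for illustration, not an existing protocol.

```python
import json
import queue
import socket
import threading

HOST, PORT = "127.0.0.1", 9000  # hypothetical address of the pose sender

poses = queue.Queue()  # thread-safe hand-off between listener and main thread


def parse_pose(packet):
    """Decode one JSON packet into (location, rotation) tuples of three floats."""
    data = json.loads(packet.decode("utf-8"))
    location = tuple(float(v) for v in data["location"])
    rotation = tuple(float(v) for v in data["rotation"])
    return location, rotation


def listen(sock, out_queue, stop_event):
    """Background thread: block on the socket so the UI thread never has to."""
    sock.settimeout(0.5)  # wake up regularly to check stop_event
    while not stop_event.is_set():
        try:
            packet, _addr = sock.recvfrom(1024)
        except socket.timeout:
            continue
        try:
            out_queue.put(parse_pose(packet))
        except (ValueError, KeyError, TypeError):
            pass  # ignore malformed packets


def latest_pose(in_queue):
    """Main thread: drain the queue without blocking, keep only the newest pose."""
    pose = None
    while True:
        try:
            pose = in_queue.get_nowait()
        except queue.Empty:
            return pose


def start_listener():
    """Bind the socket and start the listener; set the returned event to stop it."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((HOST, PORT))
    stop_event = threading.Event()
    thread = threading.Thread(
        target=listen, args=(sock, poses, stop_event), daemon=True
    )
    thread.start()
    return stop_event
```

The background thread never touches `bpy`. Only the main thread should call `latest_pose(poses)` and, if it returns a pose, assign it to `camera.location` and `camera.rotation_euler` (assuming the rotation is sent as Euler angles in radians). Keeping only the newest pose means the camera does not lag behind if packets arrive faster than Blender polls.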

Edit1:

I found modal timer operators: https://blender.stackexchange.com/questions/15670/send-instructions-to-blender-from-external-application

With them I got the basics working. The camera updates in real time without freezing:

    import bpy
    import math
    import time


    class ModalTimerOperator(bpy.types.Operator):
        """Operator which runs itself from a timer"""
        bl_idname = "wm.modal_timer_operator"
        bl_label = "Modal Timer Operator"

        _timer = None

        def modal(self, context, event):
            # Called for every event; only act on our timer ticks
            if event.type == 'TIMER':
                camera = bpy.data.objects['Camera']
                x = time.time()
                camera.location = (math.sin(10 * x), camera.location[1], camera.location[2])

            # Let all events through so the UI stays responsive
            return {'PASS_THROUGH'}

        def execute(self, context):
            wm = context.window_manager
            # Fire a TIMER event every 0.01 s in this window
            self._timer = wm.event_timer_add(.01, window=context.window)
            wm.modal_handler_add(self)
            return {'RUNNING_MODAL'}

        def cancel(self, context):
            wm = context.window_manager
            wm.event_timer_remove(self._timer)
            print('timer removed')


    def register():
        bpy.utils.register_class(ModalTimerOperator)


    def unregister():
        bpy.utils.unregister_class(ModalTimerOperator)


    if __name__ == "__main__":
        register()

        # test call
        bpy.ops.wm.modal_timer_operator()
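As a possible alternative to the modal operator above, here is a minimal sketch using `bpy.app.timers`, assuming Blender 2.80 or later (older versions do not have this module). The names `camera_x`, `move_camera` and `INTERVAL` are made up for this example. The `bpy` import is guarded only so the motion function can be tried outside Blender.

```python
import math
import time

try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None

INTERVAL = 0.01  # seconds between updates, same value as the modal timer


def camera_x(t):
    """Same motion as the operator: x oscillates with time."""
    return math.sin(10 * t)


def move_camera():
    """Timer callback, run by Blender on the main thread."""
    camera = bpy.data.objects.get('Camera')
    if camera is None:
        return None  # returning None unregisters the timer
    camera.location.x = camera_x(time.time())
    return INTERVAL  # returning a float schedules the next call


if bpy is not None:
    bpy.app.timers.register(move_camera)
```

To stop the updates, `bpy.app.timers.unregister(move_camera)` can be called from the Python console. Unlike the modal operator, this approach receives no events, so it only suits updates that do not depend on user input.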

Now I would like to communicate through some sort of message passing. I heard ZeroMQ is what Leap Motion uses.

Perhaps I don’t have to?

This prints out data to Python; I just have to read it in my code.

It looks like I will need pip:

So once I get a SteamVR headset and install pip, this thing should read the location/rotation of the headset.

I might use a Wii Remote until I can get hold of a headset - it will give rotation data, I think.

I was thinking about serial - can I read and write serial data?
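For the serial idea, a rough sketch with the third-party pyserial package could look like the following. pyserial is not bundled with Blender, so it would have to be installed into Blender's Python first. The port name, baud rate and the comma-separated line format `x,y,z,rx,ry,rz` are all hypothetical and would have to match the real device.

```python
try:
    import serial  # pyserial; an assumption, not bundled with Blender
except ImportError:
    serial = None

PORT_NAME = "/dev/ttyUSB0"  # hypothetical device name
BAUD_RATE = 115200          # hypothetical; must match the device


def split_lines(buffer, chunk):
    """Add new bytes to the buffer and split off every complete line."""
    *lines, rest = (buffer + chunk).split(b"\n")
    return lines, rest


def parse_line(line):
    """Parse b'x,y,z,rx,ry,rz' into (location, rotation); None if malformed."""
    try:
        values = [float(v) for v in line.decode("ascii").split(",")]
    except ValueError:
        return None
    if len(values) != 6:
        return None
    return tuple(values[:3]), tuple(values[3:])


def open_port():
    """Open the port with timeout=0 so reads return immediately."""
    return serial.Serial(PORT_NAME, BAUD_RATE, timeout=0)


def poll(port, buffer):
    """Read whatever has arrived without blocking; return (poses, buffer)."""
    chunk = port.read(port.in_waiting)
    lines, buffer = split_lines(buffer, chunk)
    poses = [p for p in map(parse_line, lines) if p is not None]
    return poses, buffer
```

Because the port is opened with `timeout=0`, `poll` can be called from a timer tick without stalling the UI; an incomplete line stays in the buffer until the rest arrives. Writing back to the device would go through `port.write(...)` with a bytes object.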

I want to get the basics working first, so the internal pyopenvr will do for now.

Next iteration:

- Location / Rotation coming from somewhere