Hi,

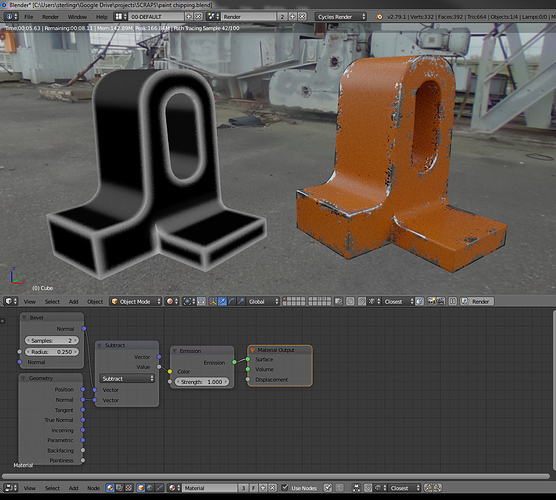

one of the things I am most missing in Blender is an ambient occlusion map. Cycles only has AO material, which has one, globally defined distance and can not be used as a map. In 3ds Max, I’ve been utilizing AO map for years to create procedural material effects, such as edge wear and dirt. Here are some examples:

neither of these 3 models have any UVs. It’s all just box mapping projection with AO maps driving the distribution of dirt and wear & tear effects.

Unfortunately, in Blender, I am unable to achieve that.

1, As I already said, there’s only an AO material in Cycles, not a map, and the AO radius of this material is set globally, not per material. Even if it somehow worked, it would still lack options such as inverting the ray-casting normals to create edge wear effects.

2, There’s a curvature map and an option to convert curvature to a vertex channel. Unfortunately, neither option is sufficient, as both rely on the model’s topology. I need to keep my polycounts reasonable and my workflow quick and flexible, so I cannot afford to modify my mesh topology specifically for materials. It would make my process much slower and my resulting models much heavier. A simple example: you can’t really use vertex colors or a curvature map to create procedural effects on a simple 6-quad cube, while you can easily do that with an AO map.

3, There has been an OSL shader that does this, but unfortunately:

A, It does not seem to work with Blender 2.79

B, It seems very slow (compared to the speed of a native implementation)

C, It doesn’t work in GPU mode

I already found a proposal for this map here: https://wiki.blender.org/index.php/User:Gregzaal/AO_node_proposal

So my question is: what would be the best way to convince some of the Blender developers to implement this? AFAIK, implementing an AO map is simple enough that it could take a single developer just 1-2 days. I would even be willing to pay for that time. But there do not seem to be any feature request channels. There is a bug tracker made specifically for bugs, not for feature requests, and the only site for feature requests is Right Click Select (a name I have a very strong aversion to), which Blender developers supposedly don’t even visit.

Thanks in advance

(BTW I would not mix up the terms AO and curvature. AO outputs data based on cast AO rays, while curvature doesn’t cast any rays but evaluates the neighboring topology.)

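To make the "inverted ray casting normals" idea more concrete, here is a rough sketch in plain Python. It is not Blender, Cycles, or 3ds Max code, and the names (`exit_distance`, `inverted_ao`, the unit cube bounds) are made up for illustration. It assumes the object is a single axis-aligned cube and uses uniform hemisphere sampling. Rays are cast from the surface point into the object, and any ray that leaves the cube within the given distance counts as a hit, so the result is high near edges and zero in the middle of a face, without relying on topology.

```python
import math
import random

# Hypothetical test object: a unit cube centered at the origin.
BOX_MIN = (-0.5, -0.5, -0.5)
BOX_MAX = (0.5, 0.5, 0.5)


def exit_distance(origin, direction):
    """Distance a ray starting inside the cube travels before leaving it."""
    t_exit = math.inf
    for o, d, lo, hi in zip(origin, direction, BOX_MIN, BOX_MAX):
        if d > 0.0:
            t_exit = min(t_exit, (hi - o) / d)
        elif d < 0.0:
            t_exit = min(t_exit, (lo - o) / d)
    return t_exit


def inverted_ao(point, normal, distance, samples=256, seed=0):
    """Fraction of inward rays that leave the cube within `distance`.

    `distance` is passed per call, which stands in for a per-material
    AO radius instead of one global setting.
    """
    rng = random.Random(seed)
    inward = tuple(-n for n in normal)  # the "inverted normal"
    # Nudge the origin slightly inside so it does not sit exactly on the face.
    origin = tuple(p + 1e-6 * i for p, i in zip(point, inward))
    hits = 0
    for _ in range(samples):
        # Uniform random direction, flipped into the inward hemisphere.
        d = [rng.gauss(0.0, 1.0) for _ in range(3)]
        length = math.sqrt(sum(c * c for c in d)) or 1.0
        d = [c / length for c in d]
        if sum(a * b for a, b in zip(d, inward)) < 0.0:
            d = [-c for c in d]
        if exit_distance(origin, d) < distance:
            hits += 1
    return hits / samples


up = (0.0, 0.0, 1.0)
print("face center:", inverted_ao((0.0, 0.0, 0.5), up, distance=0.2))
print("near edge:  ", inverted_ao((0.48, 0.0, 0.5), up, distance=0.2))
```

On a simple 6-quad cube, the point in the middle of the top face gets no hits, while the point close to the edge gets a clearly non-zero value. That value could then drive a wear mask.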