This has astounded me for years… the concept of blurring cannot possibly be alien to the developers of Blender. So why isn't there already a straightforward blur node? It makes absolutely no sense. It's not a new concept; blurring has been known in photography for hundreds of years.

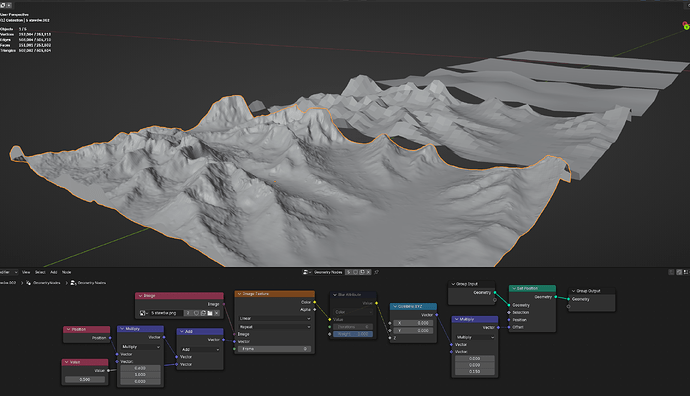

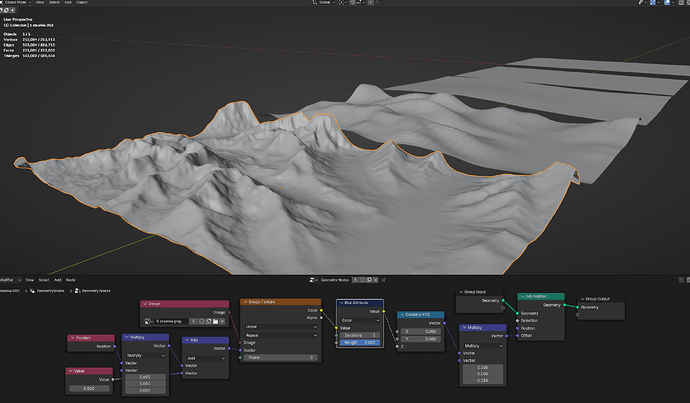

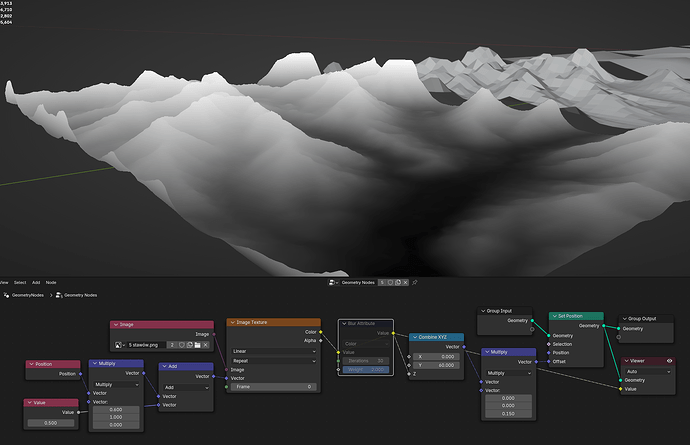

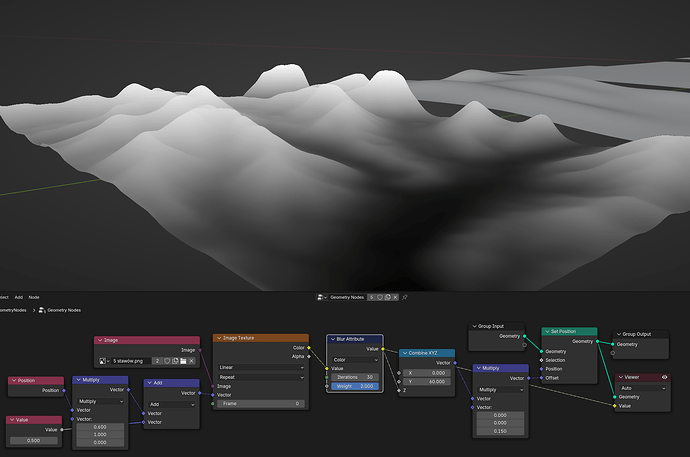

So can someone explain what is so difficult about adding a node that simply takes the color/image from a previous node and applies an honest-to-god, straight-up blur? Perhaps with a few parameters the user can tweak, like blur size/range and amount (e.g. internally mixing the blurred result with the original image/texture/color input). Every option I have seen for doing this relies on manipulating the mapping on the vector inputs, long before any images or textures come into the chain. There doesn't seem to be any way to do it further along the node chain, because it relies on the mapping, which can only be done before any images/patterns/textures are generated. After that point, there is nothing.
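To make the request concrete, here is a minimal sketch in plain Python (not Blender's node system or `bpy` API) of what the mapping-based workarounds amount to. It assumes a pattern behaves like a procedural texture: a function evaluated at one coordinate at a time. `stars`, `blur`, `mix`, `size`, `samples` and `amount` are hypothetical names for illustration only. The blur here is a simple box blur that re-evaluates the pattern at offset coordinates and averages the results; note that `blur` has to receive the pattern function itself rather than a single color value, which mirrors why the workarounds act on the vector input.

```python
import math
from typing import Callable

# Assumption: a "pattern" is modelled as a function from a 2D coordinate to a grey
# value, roughly like a procedural texture that is evaluated one point at a time.
Pattern = Callable[[float, float], float]


def stars(x: float, y: float) -> float:
    """Hypothetical stand-in for the separated bright stars: one star at the origin."""
    return 1.0 if math.hypot(x, y) < 0.05 else 0.0


def blur(pattern: Pattern, size: float, samples: int = 8) -> Pattern:
    """Box blur: average `pattern` over a grid of coordinate offsets within +/- size.

    The offsets are applied to the coordinates (the "vector input"), so this needs
    the pattern function, not just its output at a single point.
    """
    offsets = [(size * i / samples, size * j / samples)
               for i in range(-samples, samples + 1)
               for j in range(-samples, samples + 1)]

    def blurred(x: float, y: float) -> float:
        return sum(pattern(x + dx, y + dy) for dx, dy in offsets) / len(offsets)

    return blurred


def mix(original: float, blurred: float, amount: float) -> float:
    """Linear mix, like a Mix node: amount 0 gives the original, 1 the blurred value."""
    return (1.0 - amount) * original + amount * blurred


# Usage: blur the separated stars, then mix the result back with the original.
glow = blur(stars, size=0.2)
amount = 0.5
result = mix(stars(0.1, 0.0), glow(0.1, 0.0), amount)
```

Here `amount` plays the role of the "amount" parameter requested above: a point just outside the star is 0 in the original but picks up some brightness from the blurred version.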

Can someone explain to me why this simple thing wasn't added to Blender years ago? I don't see what is so difficult.

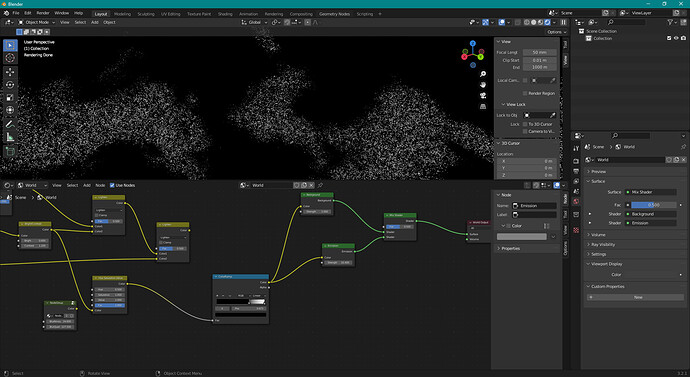

Case in point: in the image above, I have a generated sky/starfield in progress. I wanted some of the star clusters to shine brighter because the stars there are packed more densely, so I have separated those stars out.

To achieve the effect I want, I would now blur this and then mix it with the original, currently unconnected node. But there isn't any way to feed the generated image in as an input, so I am basically stuffed by the lack of what really should be a basic, functional node.