video in the link above…

It has to be Maya.

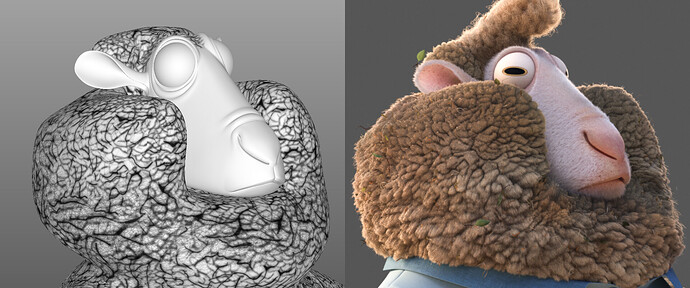

To make the animals look realistic, Disney’s trusty team of engineers introduced iGroom, a fur-controlling tool that had never been used before. The software helped shape about 2.5 million hairs on the leading bunny and about the same on the fox. A giraffe in the movie walks around with 9 million hairs, while a gerbil has about 480,000 (even the rodent in the movie beats Elsa’s 400,000 strands in Frozen).

During the research phase, the team paid close attention to the underlayer of animal fur that gives it plushness in real life. But the same detailing couldn’t be recreated on a computer. “It’s not practical for production to do it,” said senior software engineer David Aguilar as he displayed iGroom at a Zootopia presentation in Los Angeles. “We created an imaginary layer with under-coding so the animators could change the thickness and achieve the illusion of having that layer to create the density of fur.” That kind of trickery made it possible for them to create characters like Officer Clawhauser, a chubby cheetah with a massive head of spotted fur on his face.

The software gave the animators a ton of flexibility. They could play around with the fur – brush it, shape it and shade it – to create the stupendous range of animals for the movie. “The ability to iterate quickly makes all the difference,” said Michelle Robinson, character look supervisor. “You can push the fur around and find the form you want.” From the slick pouf on the shrew’s head to the puffy, dirty wool on the sheep, the grooming made it possible for them to stylize the characters with quirky features.

Before this tool, animators had to work with approximation. When creating the silhouettes or posing their creatures they had to predict the way their characters would change with the addition of fur. “We have to wait hours and hours for renders to come back to see how the characters looked,” said Kira Lehtomaki, animation supervisor. “That works for one character but not for Zootopia. Animators are obsessed with posing and silhouette, so if the render changes shape, any discrepancy can ruin the performances.”

To keep the performances intact, the engineers turned to Nitro, a real-time display software that’s been in development since Wreck-It Ralph (2012). The animators were then able to see realistic renders almost instantly to make decisions on the fly. The tool sped up the process, making it possible to keep subtle expressions on the furry faces in the movie.

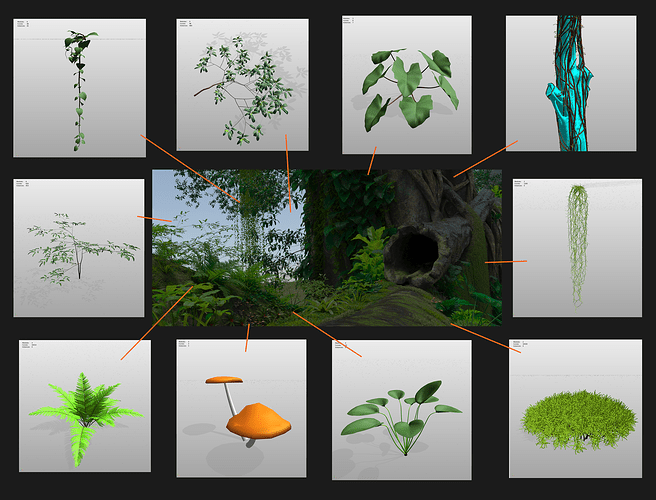

While the animals were getting ready to inhabit their virtual world, a team of environment CG specialists put together the backdrops that made their lives believable. The modern-world setting in the movie captures the essence of a city designed for animals. When a train pulls up at a crowded stop, tall mammals step off the train through high doors and tiny commuters scurry through little mouse doors. But the Zootopia zone has different districts to suit the peculiar needs of its many species. Tundratown supports polar bears, and Sahara Square is home to camels. While the rainforest isn’t marked by a specific species, the Amazonian density of the vegetation stands out.

Each environment was meticulously crafted on Bonsai, a tree-and-plant-generation tool that was first used for Frozen in 2013. Once the software learned how to make a tree, it regenerated many different variations to create a rainforest with intricately layered foliage.
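The "one tree recipe, many variations" idea can be illustrated with a small sketch. This is not Bonsai's algorithm, which the article does not describe; it is a generic seeded recursive-branching toy, and every name and parameter in it (`grow`, `make_tree`, the 25-degree spread, the shrink range) is an assumption made up for illustration.

```python
import math
import random


def grow(x, y, angle, length, depth, rng, segments):
    """Add one branch segment, then recurse into two jittered child branches."""
    if depth == 0:
        return
    x2 = x + length * math.cos(angle)
    y2 = y + length * math.sin(angle)
    segments.append(((x, y), (x2, y2)))
    for side in (-1, 1):
        # Hypothetical variation knobs: branch angle and length shrink factor.
        spread = math.radians(25) * rng.uniform(0.6, 1.4)
        shrink = rng.uniform(0.6, 0.8)
        grow(x2, y2, angle + side * spread, length * shrink,
             depth - 1, rng, segments)


def make_tree(seed, depth=6):
    """Same recipe, different seed: each seed yields a different tree shape."""
    rng = random.Random(seed)
    segments = []
    grow(0.0, 0.0, math.pi / 2, 1.0, depth, rng, segments)
    return segments


# A small "forest" of five variations grown from one recipe.
forest = [make_tree(seed) for seed in range(5)]
```

Each tree shares the same branching rule, so the forest looks consistent, while the per-seed jitter keeps any two trees from being identical.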

It takes a powerful tool to create a universe of complex creatures and detailed environments. Disney’s secret animation weapon is the Hyperion rendering system. It’s in-house software that has changed the way scenes have been simulated in the past couple of years.

What makes the image generator unique is that it traces a ray from the camera as it bounces around objects in a virtual scene before hitting a source of light. This allows the engineers to replicate the natural movement of light to create photorealistic shots. Disney first introduced the renderer with Big Hero 6 (2014). But with Zootopia, the engineers had to add a new fur paradigm to the existing software. So the renderer also followed the rays as they moved through dense animal fur.
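The idea of following a ray from the camera through several bounces until it reaches a light can be sketched in a few lines. This is a toy path tracer, not Hyperion: the scene, the sphere-only geometry, the purely diffuse bounce and all function names here are assumptions chosen for illustration, and it handles no fur at all.

```python
import math
import random

# Hypothetical toy scene: (center, radius, albedo, emission).
SPHERES = [
    ((0.0, -1000.0, 0.0), 1000.0, 0.7, 0.0),  # huge sphere acting as a floor
    ((0.0, 1.0, 0.0), 1.0, 0.5, 0.0),         # grey ball resting on the floor
    ((0.0, 6.0, 0.0), 2.0, 0.0, 5.0),         # emissive sphere acting as the light
]


def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-3:  # skip self-intersection at the ray origin
            return t
    return None


def random_unit_vector(rng):
    while True:
        v = (rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1))
        n = math.sqrt(sum(x * x for x in v))
        if n > 1e-9:
            return tuple(x / n for x in v)


def trace(origin, direction, rng, max_bounces=8):
    """Follow one camera ray as it bounces; return the light it carries back."""
    throughput = 1.0
    for _ in range(max_bounces):
        nearest = None
        for center, radius, albedo, emission in SPHERES:
            t = hit_sphere(origin, direction, center, radius)
            if t is not None and (nearest is None or t < nearest[0]):
                nearest = (t, center, radius, albedo, emission)
        if nearest is None:
            return 0.0  # ray escaped into a black sky
        t, center, radius, albedo, emission = nearest
        if emission > 0:
            return throughput * emission  # the path reached the light
        point = tuple(o + t * d for o, d in zip(origin, direction))
        normal = tuple((p - c) / radius for p, c in zip(point, center))
        # Diffuse bounce: normal plus a random unit vector, renormalised.
        new_dir = tuple(n + u for n, u in zip(normal, random_unit_vector(rng)))
        length = math.sqrt(sum(x * x for x in new_dir))
        if length < 1e-9:
            new_dir, length = normal, 1.0
        origin, direction = point, tuple(x / length for x in new_dir)
        throughput *= albedo  # each bounce absorbs some light
    return 0.0  # gave up before finding a light


def render_pixel(origin, direction, samples=200, seed=0):
    """Average many random paths for one pixel; direction must be unit length."""
    rng = random.Random(seed)
    return sum(trace(origin, direction, rng) for _ in range(samples)) / samples
```

A ray aimed straight at the light returns its full emission, while a ray that first lands on the grey ball only picks up light through random bounces, so its averaged value is dimmer and noisier. Averaging more samples per pixel reduces that noise at the cost of render time.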

“One of the problems before Hyperion was that you had no idea what the lighting in your scene was going to look like,” says Byron Howard, co-director of the movie. “Now, very early on, almost as soon as we have the layout of the scene with a camera set up, we can get an idea of what that scene is going to look like and do intensely complex calculations. It’s made making films at Disney so much easier.”

If it wasn’t for Blender, I would’ve never tried my hand at 3D modeling.
