Hi All,

I came over here hoping to get a layman's answer on setting up the camera settings in Blender to match the CMOS sensor in my video camera. I came here because this is a physics question, and I thought you guys would find it a cinch.

I emailed Canon, my camera's manufacturer, because the drop-down did not list my type of camera. I asked for the width and height of the CMOS sensor, which is a 1/4 inch sensor, and got this as a reply:

Determining pixel dimensions from sensor width & height

If you are told the actual dimensions of the sensor, determining pixel area is simple:

Area of entire sensor (in mm²) = width in mm * height in mm

Area of entire sensor (in µm²) = 1,000,000 * area in mm²

Area of one pixel = area of sensor in µm² / # pixels
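
The three formulas above can be sketched in a few lines of Python. This is only an illustration: the function name is made up, the example numbers are hypothetical rather than taken from any spec sheet, and it assumes the pixels tile the whole sensor with no gaps.

```python
def pixel_area_um2(width_mm: float, height_mm: float, n_pixels: int) -> float:
    """Area of one pixel in square micrometers.

    Assumes the pixels cover the entire sensor area evenly.
    """
    area_mm2 = width_mm * height_mm   # area of entire sensor in mm²
    area_um2 = 1_000_000 * area_mm2   # 1 mm² = 1,000,000 µm²
    return area_um2 / n_pixels        # area of one pixel in µm²


# Hypothetical example values (not from a real spec sheet).
print(pixel_area_um2(3.2, 2.4, 3_000_000))
```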

Determining pixel dimensions from 1/X size

While often it is possible to learn the width and height of a sensor from a company’s camera manual or specification sheet, sometimes all you can get quickly is the 1/X" value.

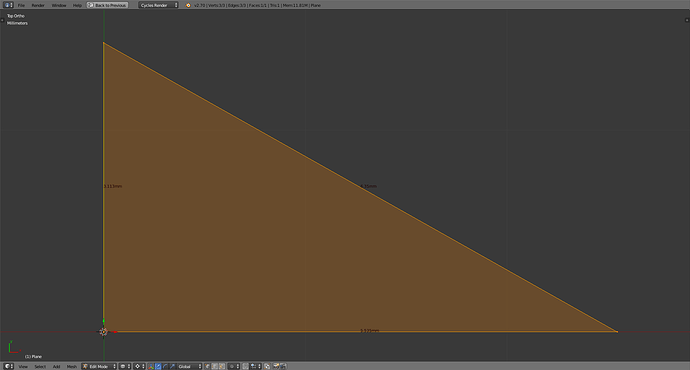

To determine 4:3 sensor width and height from 1/X", let’s solve this equation:

(1 / X") * 0.667 = sqrt( (4a)² + (3a)² )

Or simply…

0.444 / X² = 16a² + 9a²

And from this we get…

0.444 / X² = 25a²

And then this…

sqrt( 0.444 / (25X²) ) = a

And like so…

0.667 / (5X) = a

And finally…

Width = 4a = 4 * 0.667 / (5X)

Height = 3a = 3 * 0.667 / (5X)

…but we need the total area, too:

Area = width * height = 0.21333 / X²
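
As a sanity check on the derivation, here is a minimal Python sketch of the same steps. The function name and the `aspect_w`, `aspect_h` and `factor` parameters are my own additions for illustration. It uses 2/3 where the email rounds to 0.667, and the 4:3 default follows the derivation above; a sensor with a different aspect ratio would need different arguments.

```python
import math


def sensor_size_inches(x, aspect_w=4.0, aspect_h=3.0, factor=2.0 / 3.0):
    """Return (width, height, area) in inches for a 1/X" type sensor.

    The diagonal is taken as factor * (1/X) inches, following the
    rule of thumb in the derivation (0.667 there, 2/3 here).
    """
    diagonal = factor / x
    # diagonal = sqrt((aspect_w * a)^2 + (aspect_h * a)^2), so solve for a.
    a = diagonal / math.hypot(aspect_w, aspect_h)
    width = aspect_w * a
    height = aspect_h * a
    return width, height, width * height


# For a 1/4" type sensor, X = 4.
width_in, height_in, area_in2 = sensor_size_inches(4)
print(width_in, height_in, area_in2)
```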

Plus we need to convert to metric!

Width in micrometers = 25,400 * width in inches

Height in micrometers = 25,400 * height in inches

Area in µm² = 645,160,000 * area in square inches
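
The conversion factors can be checked with a short Python sketch. The constant and function names are hypothetical. A millimeter conversion is included as well, on the assumption that millimeters are the unit wanted for the sensor size fields in Blender; the example width and height are the 4:3 values for X = 4 from the formulas above.

```python
MM_PER_INCH = 25.4
UM_PER_INCH = 25_400.0
UM2_PER_IN2 = UM_PER_INCH ** 2  # 645,160,000 µm² per square inch


def inches_to_mm(value_in: float) -> float:
    """Convert a length from inches to millimeters."""
    return value_in * MM_PER_INCH


def inches_to_um(value_in: float) -> float:
    """Convert a length from inches to micrometers."""
    return value_in * UM_PER_INCH


def in2_to_um2(area_in2: float) -> float:
    """Convert an area from square inches to square micrometers."""
    return area_in2 * UM2_PER_IN2


# Width 0.5333/X and height 0.4/X inches with X = 4 (1/4" type, 4:3).
print(inches_to_mm(0.5333 / 4), inches_to_mm(0.4 / 4))
```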

So, the final equation is:

Area of the entire sensor in µm² = 137,630,000 / X²

The area occupied by one pixel is:

Pixel area = area of sensor in µm² / Y

…where Y = total pixels
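
Putting the final equation and the per-pixel step together, a rough Python sketch might look like this. The names are hypothetical and the pixel count in the example is a placeholder, not my camera's real figure. Because it uses the unrounded 2/3 factor, the coefficient comes out slightly above the rounded 137,630,000.

```python
UM2_PER_IN2 = 25_400.0 ** 2  # square micrometers per square inch

# Area coefficient in square inches for a 4:3 sensor:
# width * height = (4 * d/5) * (3 * d/5) with d = (2/3) / X.
AREA_COEFF_IN2 = 4 * 3 * (2.0 / 3.0 / 5.0) ** 2  # about 0.21333


def sensor_area_um2(x: float) -> float:
    """Area of a 4:3, 1/X" type sensor in square micrometers."""
    return AREA_COEFF_IN2 * UM2_PER_IN2 / x ** 2


def pixel_area_um2(x: float, total_pixels: int) -> float:
    """Area occupied by one pixel, with Y = total_pixels."""
    return sensor_area_um2(x) / total_pixels


# 1/4" type sensor with a placeholder pixel count of 3,000,000.
print(sensor_area_um2(4), pixel_area_um2(4, 3_000_000))
```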

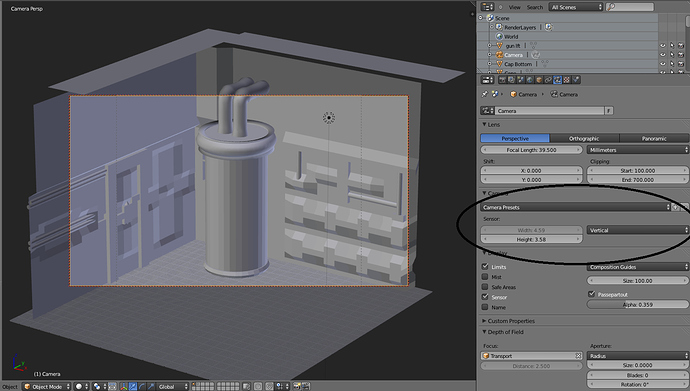

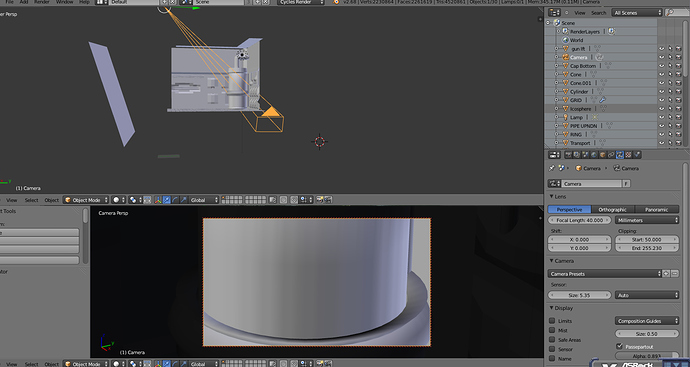

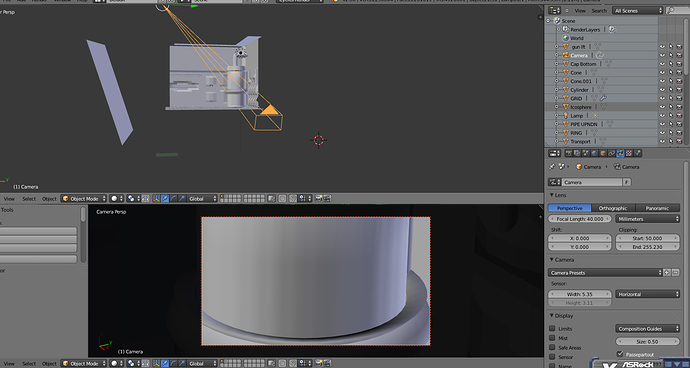

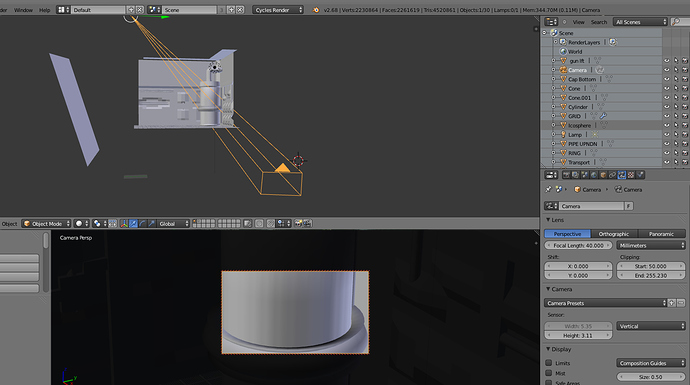

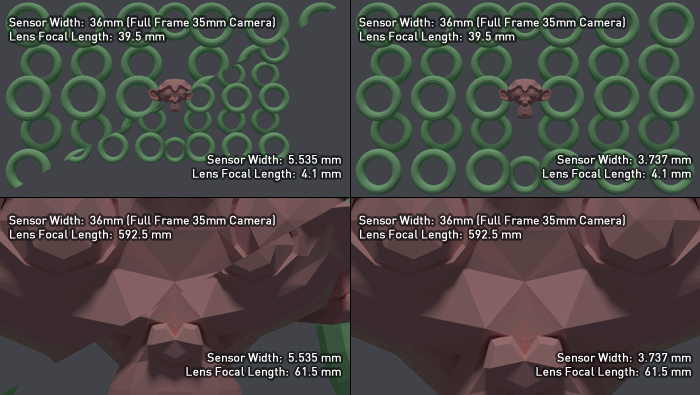

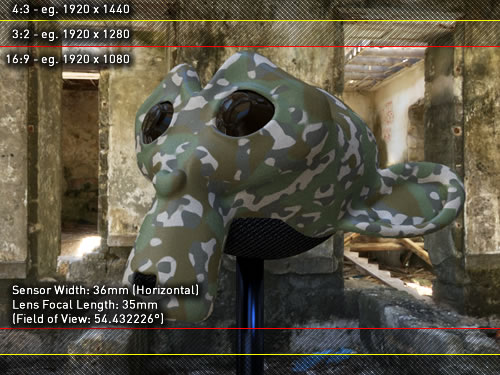

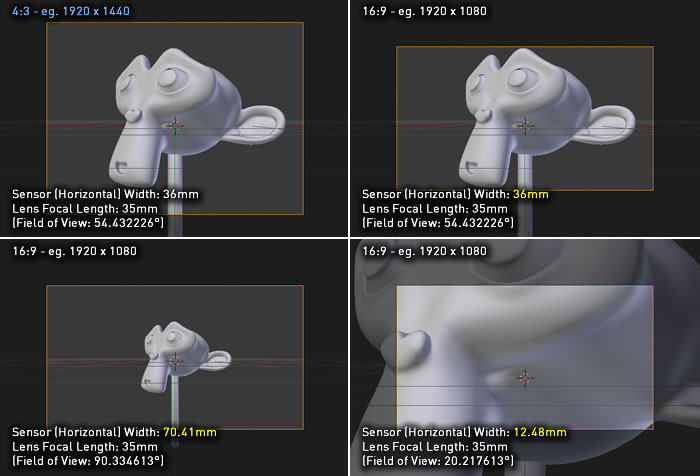

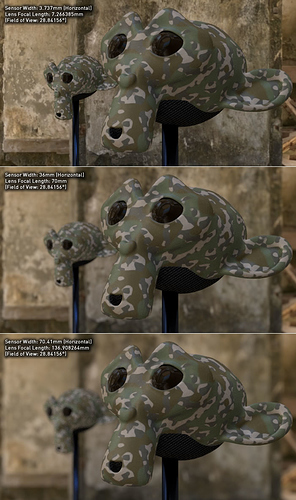

After I got my head to stop spinning and my eyes back in my head, I came over here to ask: what settings should I input into these two areas so that they match my camera? (This is part 1 of my question.)

This is the camera I am using, BTW:

http://consumer.usa.canon.com/cusa/support/consumer/camcorders/high_definition_camcorders/vixia_hf_m31#Specifications